Computerized batch processing is a method of running software programs, called jobs, in batches automatically. While users are required to submit the jobs, no further user interaction is required to process the batch. Batches may be run automatically at scheduled times or when computer resources become available.

MOSIX is a proprietary distributed operating system. Although early versions were based on older UNIX systems, since 1999 it has focused on Linux clusters and grids. In a MOSIX cluster/grid there is no need to modify applications or link them with any library, to copy files or log in to remote nodes, or even to assign processes to different nodes – it is all done automatically, as in an SMP.

HTCondor is an open-source high-throughput computing software framework for coarse-grained distributed parallelization of computationally intensive tasks. It can be used to manage workload on a dedicated cluster of computers, or to farm out work to idle desktop computers – so-called cycle scavenging. HTCondor runs on Linux, Unix, Mac OS X, FreeBSD, and Microsoft Windows operating systems. HTCondor can integrate both dedicated resources and non-dedicated desktop machines into one computing environment.
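
The idea of combining dedicated nodes with idle desktops can be illustrated with a minimal Python sketch. It is not HTCondor's matchmaking mechanism; the `Machine` class, the `available` and `assign` functions, and the 15-minute idle threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    name: str
    dedicated: bool      # True for cluster nodes, False for desktops
    idle_minutes: int    # time since the owner last used the machine


def available(machine, idle_threshold=15):
    """Dedicated nodes are always usable; desktops only once idle long enough.

    The threshold is a hypothetical policy value, not an HTCondor default.
    """
    return machine.dedicated or machine.idle_minutes >= idle_threshold


def assign(jobs, machines):
    """Pair each job with one available machine; leftover jobs stay unassigned."""
    pool = [m for m in machines if available(m)]
    return {job: m.name for job, m in zip(jobs, pool)}


machines = [
    Machine("node01", dedicated=True, idle_minutes=0),
    Machine("desk-a", dedicated=False, idle_minutes=3),   # owner is active
    Machine("desk-b", dedicated=False, idle_minutes=42),  # scavengeable
]
placement = assign(["job1", "job2", "job3"], machines)
print(placement)  # {'job1': 'node01', 'job2': 'desk-b'}
```

In this sketch `job3` remains unplaced until another machine becomes available, which mirrors the opportunistic nature of cycle scavenging.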

Grid MP is a commercial distributed computing software package developed and sold by Univa, a privately held company based primarily in Austin, Texas. It was known as the MetaProcessor prior to the release of version 4.0; however, the letters MP in Grid MP do not officially stand for anything.

A job scheduler is a computer application for controlling unattended background execution of jobs. This is commonly called batch scheduling, as execution of non-interactive jobs is often called batch processing, though traditionally job and batch are distinguished and contrasted. Other synonyms include batch system, distributed resource management system (DRMS), distributed resource manager (DRM), and, commonly today, workload automation (WLA). The data structure of jobs to run is known as the job queue.
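
A job queue can be sketched in a few lines of Python. The example below is one possible design, assuming a priority-ordered queue with first-in, first-out ordering among jobs of equal priority; the `JobQueue` class and its method names are hypothetical and do not correspond to any particular scheduler.

```python
import heapq
import itertools


class JobQueue:
    """Priority-ordered queue of unattended jobs (illustrative only)."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker: preserves submit order

    def submit(self, name, action, priority=0):
        # Lower priority numbers run first; the counter keeps ties FIFO and
        # ensures the heap never has to compare the callables themselves.
        heapq.heappush(self._heap, (priority, next(self._counter), name, action))

    def run_all(self):
        """Run every queued job without user interaction and collect results."""
        results = []
        while self._heap:
            _, _, name, action = heapq.heappop(self._heap)
            results.append((name, action()))
        return results


queue = JobQueue()
queue.submit("report", lambda: "report done", priority=5)
queue.submit("backup", lambda: "backup done", priority=1)
queue.submit("cleanup", lambda: "cleanup done", priority=5)
results = queue.run_all()
print([name for name, _ in results])  # ['backup', 'report', 'cleanup']
```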

Maui Cluster Scheduler is a job scheduler for use on clusters and supercomputers initially developed by Cluster Resources, Inc. Maui is capable of supporting multiple scheduling policies, dynamic priorities, reservations, and fairshare capabilities.
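
How dynamic priorities and fairshare can interact is shown in the following Python sketch. It does not reproduce Maui's actual priority calculation; the formula, the weights, and the names `PendingJob` and `priority` are assumptions chosen to illustrate the general idea that waiting raises a job's priority while exceeding a usage target lowers it.

```python
from dataclasses import dataclass


@dataclass
class PendingJob:
    name: str
    user: str
    wait_minutes: float


def priority(job, usage_share, target_share,
             wait_weight=1.0, fairshare_weight=100.0):
    """Hypothetical priority: queue time minus a penalty for overuse.

    usage_share and target_share map a user to a fraction of the cluster
    (recent usage and configured target, respectively).
    """
    overuse = usage_share.get(job.user, 0.0) - target_share.get(job.user, 0.0)
    return wait_weight * job.wait_minutes - fairshare_weight * overuse


usage = {"alice": 0.70, "bob": 0.10}    # recent share of cluster time
target = {"alice": 0.50, "bob": 0.50}   # configured fairshare targets
jobs = [PendingJob("a1", "alice", 30), PendingJob("b1", "bob", 10)]

# Highest priority first: bob's job overtakes alice's despite waiting less,
# because alice has used more than her target share.
ordered = sorted(jobs, key=lambda j: priority(j, usage, target), reverse=True)
print([j.name for j in ordered])  # ['b1', 'a1']
```

Because the priority depends on wait time and recent usage, it changes between scheduling passes, which is what makes it dynamic.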

The Terascale Open-source Resource and Queue Manager (TORQUE) is a distributed resource manager providing control over batch jobs and distributed compute nodes. TORQUE can integrate with the non-commercial Maui Cluster Scheduler or the commercial Moab Workload Manager to improve overall utilization, scheduling and administration on a cluster.

Distributed Resource Management Application API (DRMAA) is a high-level Open Grid Forum (OGF) API specification for submitting jobs to, and controlling them on, a distributed resource management (DRM) system, such as a cluster or grid computing infrastructure. The scope of the API covers all the high-level functionality required for applications to submit, control, and monitor jobs on execution resources in the DRM system.
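
The submit, control, and monitor operations can be illustrated with a toy in-memory model in Python. This is not a DRMAA language binding: `ToySession`, `JobState`, and all method names are hypothetical, and the `tick` method merely simulates a DRM system advancing its jobs.

```python
import itertools
from enum import Enum


class JobState(Enum):
    QUEUED = "queued"
    RUNNING = "running"
    SUSPENDED = "suspended"
    DONE = "done"
    TERMINATED = "terminated"


class ToySession:
    """In-memory stand-in for a DRM system (hypothetical, not a DRMAA binding)."""

    def __init__(self):
        self._ids = itertools.count(1)
        self._states = {}

    def submit(self, command):
        """Submit: register the job and hand back an identifier."""
        job_id = f"job-{next(self._ids)}"
        self._states[job_id] = JobState.QUEUED
        return job_id

    def status(self, job_id):
        """Monitor: report the current state of a job."""
        return self._states[job_id]

    def control(self, job_id, action):
        """Control: suspend, resume, or terminate a job."""
        state = self._states[job_id]
        if action == "suspend" and state is JobState.RUNNING:
            self._states[job_id] = JobState.SUSPENDED
        elif action == "resume" and state is JobState.SUSPENDED:
            self._states[job_id] = JobState.RUNNING
        elif action == "terminate" and state not in (JobState.DONE,
                                                     JobState.TERMINATED):
            self._states[job_id] = JobState.TERMINATED
        else:
            raise ValueError(f"cannot {action} a job that is {state.value}")

    def tick(self):
        """Simulate the DRM system advancing every job by one step."""
        step = {JobState.QUEUED: JobState.RUNNING,
                JobState.RUNNING: JobState.DONE}
        self._states = {j: step.get(s, s) for j, s in self._states.items()}


session = ToySession()
job = session.submit("simulate --steps 100")
history = [session.status(job)]
session.tick()                       # queued -> running
session.control(job, "suspend")
session.tick()                       # suspended jobs do not advance
history.append(session.status(job))
session.control(job, "resume")
session.tick()                       # running -> done
history.append(session.status(job))
print([state.value for state in history])  # ['queued', 'suspended', 'done']
```

The point of such an interface is that the application code stays the same regardless of which DRM system sits behind the session object.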

Meta-scheduling or super scheduling is a computer software technique of optimizing computational workloads by combining an organization's multiple job schedulers into a single aggregated view, allowing batch jobs to be directed to the best location for execution.
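
A minimal Python sketch of this routing decision follows. The selection rule (shortest queue among clusters with enough free cores) is only one plausible definition of "best location", and `ClusterView` and `choose_cluster` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ClusterView:
    """Summary a meta-scheduler might collect from one local scheduler."""
    name: str
    free_cores: int
    queued_jobs: int


def choose_cluster(clusters, cores_needed):
    """Pick the cluster with the shortest queue that can fit the job.

    Ties on queue length are broken in favour of more free cores.
    Returns None when no cluster currently has enough capacity.
    """
    candidates = [c for c in clusters if c.free_cores >= cores_needed]
    if not candidates:
        return None
    best = min(candidates, key=lambda c: (c.queued_jobs, -c.free_cores))
    return best.name


# Aggregated view over three independently scheduled clusters.
clusters = [
    ClusterView("east", free_cores=16, queued_jobs=40),
    ClusterView("west", free_cores=64, queued_jobs=5),
    ClusterView("lab", free_cores=8, queued_jobs=0),
]
print(choose_cluster(clusters, 32))   # west
print(choose_cluster(clusters, 4))    # lab
print(choose_cluster(clusters, 128))  # None
```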

The Open Grid Forum (OGF) is a community of users, developers, and vendors for standardization of grid computing. It was formed in 2006 in a merger of the Global Grid Forum and the Enterprise Grid Alliance. The OGF models its process on the Internet Engineering Task Force (IETF), and produces documents with many acronyms such as OGSA, OGSI, and JSDL.

In computer science, high-throughput computing (HTC) is the use of many computing resources over long periods of time to accomplish a computational task.

Dynamic Infrastructure is an information technology concept related to the design of data centers, whereby the underlying hardware and software can respond dynamically and more efficiently to changing levels of demand. In other words, data center assets such as storage and processing power can be provisioned to meet surges in users' needs. The concept has also been referred to as Infrastructure 2.0 and Next Generation Data Center.

SynfiniWay was middleware with which a virtualised IT framework could be created that provided a uniform and global view of resources within a department, a company, or a company with its suppliers. This virtualised IT framework was service-oriented, meaning that applications were run as services, which are a system-independent view of applications. Several applications could be linked in a workflow, and data exchange between the applications participating in the workflow was implicitly managed by the IT framework. SynfiniWay was platform-independent, allowing almost any distributed heterogeneous platform to be linked into its virtualised IT framework.

gLite is a middleware computer software project for grid computing used by the CERN LHC experiments and other scientific domains. It was implemented through the collaborative efforts of more than 80 people in 12 different academic and industrial research centers in Europe. gLite provides a framework for building applications that tap into distributed computing and storage resources across the Internet. The gLite services were adopted by more than 250 computing centres and used by more than 15,000 researchers in Europe and around the world.

The Grid and Cloud User Support Environment (gUSE), also known as WS-PGRADE/gUSE, is an open source science gateway framework that enables users to access grid and cloud infrastructures. gUSE is developed by the Laboratory of Parallel and Distributed Systems (LPDS) at the Institute for Computer Science and Control (SZTAKI) of the Hungarian Academy of Sciences.

Ignacio Martín Llorente is an entrepreneur, researcher and educator in the field of cloud and distributed computing. He is the director of OpenNebula, a visiting scholar at Harvard University and a full professor at Complutense University.

GridRPC, in distributed computing, is remote procedure call over a grid. This paradigm was proposed by the GridRPC working group of the Open Grid Forum (OGF), and an API has been defined so that clients can access remote servers as simply as making a function call. It is used in numerous grid middleware systems for its simplicity of implementation, and was standardized by the OGF in 2007. For interoperability between the different existing middleware, the API was followed by a document describing good use and behavior of the different GridRPC API implementations. Work was then conducted on GridRPC data management, which was standardized in 2011.
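
The notion of reaching a remote server "as simply as a function call" can be sketched in Python as follows. This is not the OGF GridRPC API: `ToyServer` and `FunctionHandle` are hypothetical, and the "remote" server runs in the same process, with a thread pool standing in for an asynchronous call.

```python
from concurrent.futures import ThreadPoolExecutor


class ToyServer:
    """Stand-in for a remote compute server (runs in-process here)."""

    def __init__(self, name, functions):
        self.name = name
        self._functions = dict(functions)

    def execute(self, func_name, *args):
        return self._functions[func_name](*args)


class FunctionHandle:
    """Binds a function name to a server so the client can just call it."""

    def __init__(self, server, func_name):
        self.server = server
        self.func_name = func_name

    def call(self, *args):
        """Blocking call: returns once the server has produced a result."""
        return self.server.execute(self.func_name, *args)

    def call_async(self, executor, *args):
        """Non-blocking call: returns a future the client can wait on later."""
        return executor.submit(self.call, *args)


def dot(xs, ys):
    return sum(x * y for x, y in zip(xs, ys))


server = ToyServer("compute-1", {"dot": dot})
handle = FunctionHandle(server, "dot")

sync_result = handle.call([1, 2, 3], [4, 5, 6])
with ThreadPoolExecutor(max_workers=2) as pool:
    future = handle.call_async(pool, [1, 0], [0, 1])
    async_result = future.result()   # wait for the outstanding call

print(sync_result, async_result)  # 32 0
```

In a real deployment the handle would hide network transport, argument marshalling, and server selection behind the same calling pattern.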

A data grid is an architecture or set of services that gives individuals or groups of users the ability to access, modify and transfer extremely large amounts of geographically distributed data for research purposes. Data grids make this possible through a host of middleware applications and services that pull together data and resources from multiple administrative domains and then present them to users upon request. The data in a data grid can be located at a single site or at multiple sites, where each site can be its own administrative domain governed by a set of security restrictions as to who may access the data. Likewise, multiple replicas of the data may be distributed throughout the grid outside their original administrative domain, and the security restrictions placed on the original data must be equally applied to the replicas. Specifically developed data grid middleware handles the integration between users and the data they request by controlling access while making the data available as efficiently as possible. The adjacent diagram depicts a high-level view of a data grid.
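
The requirement that replicas carry the same access restrictions as the original can be sketched in Python. The model below is a simplification under stated assumptions: `Dataset`, `Site`, and `fetch` are hypothetical names, access control is reduced to a set of permitted users, and the first site in the list holding a replica is treated as the most efficient source.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Dataset:
    name: str
    allowed_users: frozenset   # restriction travels with every replica


@dataclass
class Site:
    """One administrative domain holding replicas of datasets."""
    name: str
    replicas: dict = field(default_factory=dict)

    def store(self, dataset):
        self.replicas[dataset.name] = dataset


def fetch(user, dataset_name, sites):
    """Return the first site able to serve the dataset to this user.

    Sites are assumed to be listed in order of preference (e.g. proximity).
    """
    for site in sites:
        dataset = site.replicas.get(dataset_name)
        if dataset is None:
            continue
        if user not in dataset.allowed_users:
            raise PermissionError(f"{user} may not access {dataset_name}")
        return site.name
    raise LookupError(f"no replica of {dataset_name} found")


origin = Site("site-a")
mirror = Site("site-b")
run42 = Dataset("run42", frozenset({"alice"}))
origin.store(run42)
mirror.store(run42)   # the replica keeps the original's restrictions

print(fetch("alice", "run42", [mirror, origin]))  # site-b
```

A request by a user outside `allowed_users` raises `PermissionError` regardless of which site would have served the replica.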

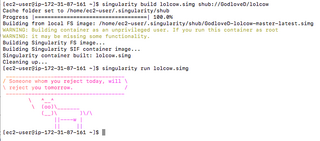

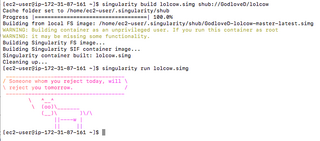

Singularity is a free and open-source computer program that performs operating-system-level virtualization, also known as containerization.