Related Research Articles

The John von Neumann Theory Prize of the Institute for Operations Research and the Management Sciences (INFORMS) is awarded annually to an individual who has made fundamental and sustained contributions to theory in operations research and the management sciences.

In statistics, Markov chain Monte Carlo (MCMC) methods comprise a class of algorithms for sampling from a probability distribution. By constructing a Markov chain that has the desired distribution as its equilibrium distribution, one can obtain a sample of the desired distribution by recording states from the chain. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Various algorithms exist for constructing chains, including the Metropolis–Hastings algorithm.
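The chain construction described above can be sketched with a random-walk Metropolis sampler, a special case of Metropolis–Hastings with a symmetric proposal. This is a minimal illustration, not a reference implementation: the function name `metropolis_hastings`, the Gaussian proposal, the step size, and the standard-normal target are all assumptions chosen for the example.

```python
import math
import random


def metropolis_hastings(log_density, x0, n_steps, step_size=1.0, seed=0):
    """Random-walk Metropolis sampler (illustrative sketch).

    log_density: log of the target density, known up to an additive constant.
    Returns the list of visited states, one per step.
    """
    rng = random.Random(seed)
    x = x0
    samples = []
    for _ in range(n_steps):
        # Symmetric Gaussian proposal centred on the current state.
        proposal = x + rng.gauss(0.0, step_size)
        log_accept = log_density(proposal) - log_density(x)
        # Accept with probability min(1, target(proposal) / target(x)).
        if log_accept >= 0 or rng.random() < math.exp(log_accept):
            x = proposal
        # Record the current state whether or not the proposal was accepted.
        samples.append(x)
    return samples


# Assumed target for the example: a standard normal distribution.
samples = metropolis_hastings(lambda x: -0.5 * x * x, x0=0.0, n_steps=20000)
sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / len(samples)
```

With more steps, `sample_mean` and `sample_var` should move closer to the target's mean and variance (0 and 1 here), which is the convergence behaviour the paragraph describes.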

Global optimization is a branch of applied mathematics and numerical analysis that attempts to find the global minima or maxima of a function or a set of functions on a given set. It is usually described as a minimization problem because the maximization of a real-valued function g(x) is equivalent to the minimization of the function f(x) := -g(x).

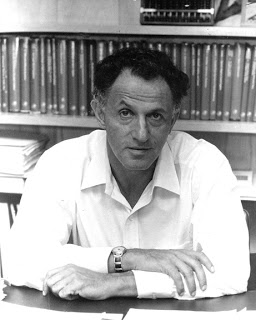

Richard Ernest Bellman was an American applied mathematician, who introduced dynamic programming in 1953, and made important contributions in other fields of mathematics, such as biomathematics. He founded the leading biomathematical journal Mathematical Biosciences.

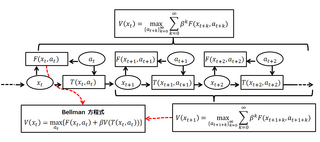

A Bellman equation, named after Richard E. Bellman, is a necessary condition for optimality associated with the mathematical optimization method known as dynamic programming. It writes the "value" of a decision problem at a certain point in time in terms of the payoff from some initial choices and the "value" of the remaining decision problem that results from those initial choices. This breaks a dynamic optimization problem into a sequence of simpler subproblems, as Bellman's "principle of optimality" prescribes. The equation applies to algebraic structures with a total ordering; for algebraic structures with a partial ordering, the generic Bellman's equation can be used.
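The decomposition into an initial choice plus the value of the remaining problem can be illustrated on a toy deterministic problem. The sketch below is hypothetical: the cost table `STEP_COST`, the goal position, and the rule that each move advances one or two positions are invented for the example, and it minimizes cost rather than maximizing payoff.

```python
from functools import lru_cache

# Hypothetical toy problem: STEP_COST[s] is the cost paid when leaving
# position s; the goal is to reach position GOAL (or beyond) at minimal cost.
STEP_COST = [1, 100, 1, 1, 100]
GOAL = len(STEP_COST)


@lru_cache(maxsize=None)
def value(state):
    """Minimal total cost from `state` to the goal (Bellman recursion)."""
    if state >= GOAL:
        return 0
    # Cost of the initial choice plus the value of the remaining problem,
    # minimized over the two available moves (advance by 1 or by 2).
    return min(STEP_COST[state] + value(state + jump) for jump in (1, 2))


best_cost = value(0)  # evaluates to 3: leave positions 0, 2 and 3
```

Memoizing `value` means each subproblem is solved once, which is the practical benefit of breaking the problem into simpler subproblems.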

Stochastic optimization (SO) methods are optimization methods that generate and use random variables. For stochastic problems, the random variables appear in the formulation of the optimization problem itself, which involves random objective functions or random constraints. Stochastic optimization methods also include methods with random iterates. Some stochastic optimization methods use random iterates to solve stochastic problems, combining both meanings of stochastic optimization. Stochastic optimization methods generalize deterministic methods for deterministic problems.
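As a minimal sketch of a method with random iterates applied to a deterministic problem, the following uses a simple local random search. The function name `random_search`, the Gaussian perturbation, and the quadratic test objective are assumptions made for illustration only.

```python
import random


def random_search(f, x0, n_steps, step_size=0.5, seed=0):
    """Local random search: keep a random candidate only if it improves f."""
    rng = random.Random(seed)
    best_x, best_f = x0, f(x0)
    for _ in range(n_steps):
        # Random iterate: perturb the best point found so far.
        candidate = best_x + rng.gauss(0.0, step_size)
        f_candidate = f(candidate)
        if f_candidate < best_f:
            best_x, best_f = candidate, f_candidate
    return best_x, best_f


# Assumed deterministic objective with its minimum at x = 3.
best_x, best_f = random_search(lambda x: (x - 3.0) ** 2, x0=0.0, n_steps=2000)
```

Here the objective is deterministic and only the iterates are random; replacing `f` with a noisy evaluation would combine both meanings of stochastic optimization described above.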

Stochastic approximation methods are a family of iterative methods typically used for root-finding problems or for optimization problems. The recursive update rules of stochastic approximation methods can be used, among other things, for solving linear systems when the collected data is corrupted by noise, or for approximating extreme values of functions which cannot be computed directly, but only estimated via noisy observations.
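A classical instance of such a recursive update rule is the Robbins–Monro iteration for finding a root from noisy observations. The sketch below is illustrative: the names `robbins_monro` and `noisy_g`, the step sizes 1/n, and the test function g(x) = x - 2 with unit-variance Gaussian noise are assumptions chosen for the example.

```python
import random


def robbins_monro(noisy_g, x0, n_steps, seed=0):
    """Robbins-Monro iteration x_{n+1} = x_n - a_n * Y_n with a_n = 1/n,
    where Y_n is a noisy observation of g at x_n."""
    rng = random.Random(seed)
    x = x0
    for n in range(1, n_steps + 1):
        step = 1.0 / n  # step sizes: sum diverges, sum of squares converges
        x -= step * noisy_g(x, rng)
    return x


def noisy_g(x, rng):
    """Hypothetical noisy observation of g(x) = x - 2, whose root is x = 2."""
    return (x - 2.0) + rng.gauss(0.0, 1.0)


root_estimate = robbins_monro(noisy_g, x0=10.0, n_steps=5000)
```

The decreasing step sizes average out the observation noise, so the estimate settles near the root even though g is never evaluated exactly.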

Pseudospectral optimal control is a joint theoretical-computational method for solving optimal control problems. It combines pseudospectral (PS) theory with optimal control theory to produce PS optimal control theory. PS optimal control theory has been used in ground and flight systems in military and industrial applications. The techniques have been extensively used to solve a wide range of problems such as those arising in UAV trajectory generation, missile guidance, control of robotic arms, vibration damping, lunar guidance, magnetic control, swing-up and stabilization of an inverted pendulum, orbit transfers, tether libration control, ascent guidance and quantum control.

The Richard E. Bellman Control Heritage Award is an annual award given by the American Automatic Control Council (AACC) for achievements in control theory, named after the applied mathematician Richard E. Bellman. The award is given for "distinguished career contributions to the theory or applications of automatic control", and it is the "highest recognition of professional achievement for U.S. control systems engineers and scientists".

Yu-Chi "Larry" Ho is a Chinese-American mathematician, control theorist, and a professor at the School of Engineering and Applied Sciences, Harvard University.

Kumpati S. Narendra is an American control theorist, who currently holds the Harold W. Cheel Professorship of Electrical Engineering at Yale University. He received the Richard E. Bellman Control Heritage Award in 2003. He is noted "for pioneering contributions to stability theory, adaptive and learning systems theory". He is also well recognized for his research on learning, including neural networks and learning automata.

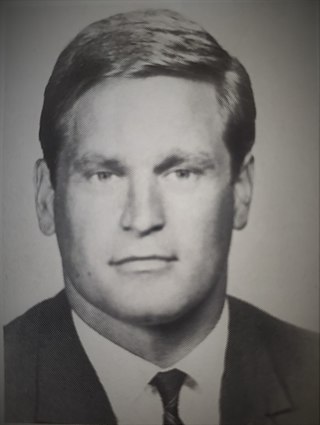

Arthur Earl Bryson Jr. is the Paul Pigott Professor of Engineering Emeritus at Stanford University and the "father of modern optimal control theory". With Henry J. Kelley, he also pioneered an early version of the backpropagation procedure, now widely used for machine learning and artificial neural networks.

Mustafa Tamer Başar is a control and game theorist who is the Swanlund Endowed Chair and Center for Advanced Study Professor of Electrical and Computer Engineering at the University of Illinois at Urbana-Champaign, USA. He is also the Director of the Center for Advanced Study.

Miroslav Krstić is a Serbian-born American control theorist and Distinguished Professor of Mechanical and Aerospace Engineering at the University of California, San Diego (UCSD). Krstić is also the director of the Center for Control Systems and Dynamics at UCSD and a Senior Associate Vice Chancellor for Research.

Moshe Zakai was a Distinguished Professor of electrical engineering at the Technion, Israel, a member of the Israel Academy of Sciences and Humanities, and a Rothschild Prize winner.

Dimitri Panteli Bertsekas is an applied mathematician, electrical engineer, and computer scientist. He is a McAfee Professor in the Department of Electrical Engineering and Computer Science of the School of Engineering at the Massachusetts Institute of Technology (MIT), Cambridge, Massachusetts, and also a Fulton Professor of Computational Decision Making at Arizona State University, Tempe.

Dragoslav D. Šiljak is Professor Emeritus of Electrical Engineering at Santa Clara University, where he held the title of Benjamin and Mae Swig University Professor. He is best known for developing the mathematical theory and methods for control of complex dynamic systems characterized by large-scale, information structure constraints and uncertainty.

Mean-field game theory is the study of strategic decision making by small interacting agents in very large populations. It lies at the intersection of game theory with stochastic analysis and control theory. The use of the term "mean field" is inspired by mean-field theory in physics, which considers the behavior of systems of large numbers of particles where individual particles have negligible impacts upon the system. In other words, each agent solves its own minimization or maximization problem, taking into account the other agents' decisions. Because the population is large, one can assume that the number of agents goes to infinity and that a representative agent exists.

In numerical methods for stochastic differential equations, the Markov chain approximation method (MCAM) is one of several numerical schemes used in stochastic control theory. Unfortunately, simply adapting deterministic schemes such as the Runge–Kutta method to stochastic models does not work.

Vivek Shripad Borkar is an Indian electrical engineer, mathematician and an Institute chair professor at the Indian Institute of Technology, Mumbai. He is known for introducing an analytical paradigm in stochastic optimal control processes and is an elected fellow of all three major Indian science academies: the Indian Academy of Sciences, the Indian National Science Academy and the National Academy of Sciences, India. He also holds elected fellowships of The World Academy of Sciences, the Institute of Electrical and Electronics Engineers, the Indian National Academy of Engineering and the American Mathematical Society. The Council of Scientific and Industrial Research, the apex agency of the Government of India for scientific research, awarded him the Shanti Swarup Bhatnagar Prize for Science and Technology, one of the highest Indian science awards, for his contributions to Engineering Sciences in 1992. He received the TWAS Prize of the World Academy of Sciences in 2009.

References

- Brochu, Eric; Cora, Vlad M.; de Freitas, Nando (12 December 2010). "A Tutorial on Bayesian Optimization of Expensive Cost Functions, with Application to Active User Modeling and Hierarchical Reinforcement Learning". arXiv:1012.2599 [cs.LG].

- Frazier, Peter I.; Wang, Jialei (13 December 2015). Bayesian Optimization for Materials Design. Information Science for Materials Discovery and Design. Springer Series in Materials Science. Vol. 225. pp. 45–75. arXiv:1506.01349. doi:10.1007/978-3-319-23871-5_3. ISBN 978-3-319-23870-8. S2CID 61416981.

- Kushner, H. J. (1 March 1964). "A New Method of Locating the Maximum Point of an Arbitrary Multipeak Curve in the Presence of Noise". Journal of Basic Engineering. 86 (1): 97–106. doi:10.1115/1.3653121. ISSN 0098-2202. Retrieved 19 July 2018.

- "IEEE Control Systems Award Recipients" (PDF). IEEE. Retrieved January 15, 2011.

- "IEEE Control Systems Award". IEEE Control Systems Society. Archived from the original on 2010-12-29. Retrieved January 15, 2011.

- "Franklin Laureate Database - Harold J. Kushner". The Franklin Institute. Archived from the original on 2011-06-29. Retrieved January 22, 2011.

- "Franklin Laureate Database - Louis E. Levy Medal Laureates". Franklin Institute. Archived from the original on 2011-06-29. Retrieved January 22, 2011.

- "Richard E. Bellman Control Heritage Award". American Automatic Control Council. Retrieved February 10, 2013.