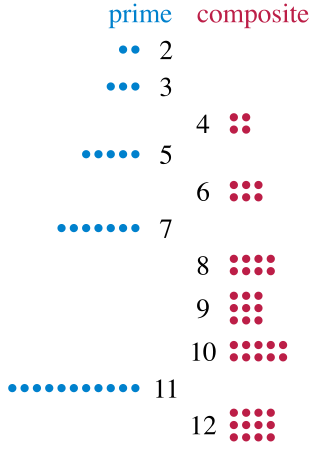

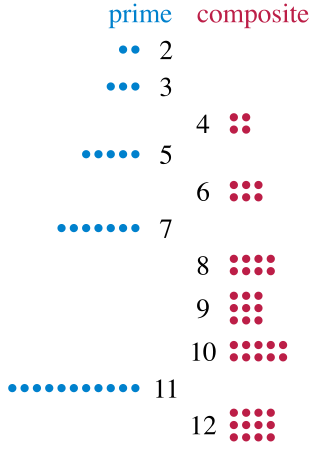

In number theory, integer factorization is the decomposition of a positive integer into a product of integers. Every positive integer greater than 1 is either the product of two or more integer factors greater than 1, in which case it is called a composite number, or it is not, in which case it is called a prime number. For example, 15 is a composite number because 15 = 3 · 5, but 7 is a prime number because it cannot be decomposed in this way. If one of the factors is composite, it can in turn be written as a product of smaller factors, for example 60 = 3 · 20 = 3 · (5 · 4). Continuing this process until every factor is prime is called prime factorization; the result is always unique up to the order of the factors by the prime factorization theorem.

A prime number is a natural number greater than 1 that is not a product of two smaller natural numbers. A natural number greater than 1 that is not prime is called a composite number. For example, 5 is prime because the only ways of writing it as a product, 1 × 5 or 5 × 1, involve 5 itself. However, 4 is composite because it is a product (2 × 2) in which both numbers are smaller than 4. Primes are central in number theory because of the fundamental theorem of arithmetic: every natural number greater than 1 is either a prime itself or can be factorized as a product of primes that is unique up to their order.

A primality test is an algorithm for determining whether an input number is prime. Among other fields of mathematics, it is used for cryptography. Unlike integer factorization, primality tests do not generally give prime factors, only stating whether the input number is prime or not. Factorization is thought to be a computationally difficult problem, whereas primality testing is comparatively easy. Some primality tests prove that a number is prime, while others like Miller–Rabin prove that a number is composite. Therefore, the latter might more accurately be called compositeness tests instead of primality tests.

The Miller–Rabin primality test or Rabin–Miller primality test is a probabilistic primality test: an algorithm which determines whether a given number is likely to be prime, similar to the Fermat primality test and the Solovay–Strassen primality test.

Leonard Adleman is an American computer scientist. He is one of the creators of the RSA encryption algorithm, for which he received the 2002 Turing Award. He is also known for the creation of the field of DNA computing.

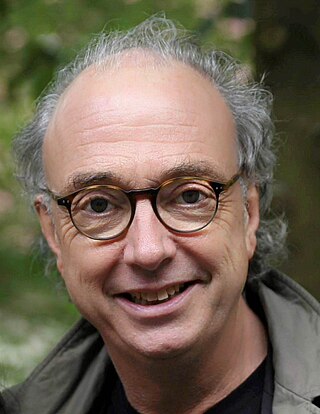

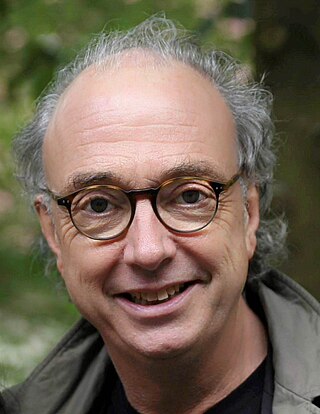

Michael Oser Rabin is an Israeli mathematician, computer scientist, and recipient of the Turing Award.

A randomized algorithm is an algorithm that employs a degree of randomness as part of its logic or procedure. The algorithm typically uses uniformly random bits as an auxiliary input to guide its behavior, in the hope of achieving good performance in the "average case" over all possible choices of random determined by the random bits; thus either the running time, or the output are random variables.

In mathematics and computer science, computational number theory, also known as algorithmic number theory, is the study of computational methods for investigating and solving problems in number theory and arithmetic geometry, including algorithms for primality testing and integer factorization, finding solutions to diophantine equations, and explicit methods in arithmetic geometry. Computational number theory has applications to cryptography, including RSA, elliptic curve cryptography and post-quantum cryptography, and is used to investigate conjectures and open problems in number theory, including the Riemann hypothesis, the Birch and Swinnerton-Dyer conjecture, the ABC conjecture, the modularity conjecture, the Sato-Tate conjecture, and explicit aspects of the Langlands program.

Trial division is the most laborious but easiest to understand of the integer factorization algorithms. The essential idea behind trial division tests to see if an integer n, the integer to be factored, can be divided by each number in turn that is less than the square root of n.

Samuel Standfield Wagstaff Jr. is an American mathematician and computer scientist, whose research interests are in the areas of cryptography, parallel computation, and analysis of algorithms, especially number theoretic algorithms. He is currently a professor of computer science and mathematics at Purdue University who coordinates the Cunningham project, a project to factor numbers of the form bn ± 1, since 1983. He has authored/coauthored over 50 research papers and four books. He has an Erdős number of 1.

In computational number theory, a variety of algorithms make it possible to generate prime numbers efficiently. These are used in various applications, for example hashing, public-key cryptography, and search of prime factors in large numbers.

In mathematics and computer science, a primality certificate or primality proof is a succinct, formal proof that a number is prime. Primality certificates allow the primality of a number to be rapidly checked without having to run an expensive or unreliable primality test. "Succinct" usually means that the proof should be at most polynomially larger than the number of digits in the number itself.

Arthur Oliver Lonsdale Atkin, who published under the name A. O. L. Atkin, was a British mathematician.

In theoretical computer science, a computational problem is a problem that may be solved by an algorithm. For example, the problem of factoring

Hendrik Willem Lenstra Jr. is a Dutch mathematician.

The following tables list the computational complexity of various algorithms for common mathematical operations.

In computational number theory, the Adleman–Pomerance–Rumely primality test is an algorithm for determining whether a number is prime. Unlike other, more efficient algorithms for this purpose, it avoids the use of random numbers, so it is a deterministic primality test. It is named after its discoverers, Leonard Adleman, Carl Pomerance, and Robert Rumely. The test involves arithmetic in cyclotomic fields.

In mathematics, elliptic curve primality testing techniques, or elliptic curve primality proving (ECPP), are among the quickest and most widely used methods in primality proving. It is an idea put forward by Shafi Goldwasser and Joe Kilian in 1986 and turned into an algorithm by A. O. L. Atkin the same year. The algorithm was altered and improved by several collaborators subsequently, and notably by Atkin and François Morain, in 1993. The concept of using elliptic curves in factorization had been developed by H. W. Lenstra in 1985, and the implications for its use in primality testing followed quickly.

Johannes Alfred Buchmann is a German computer scientist, mathematician and professor emeritus at the department of computer science of the Technische Universität Darmstadt.

Primality Testing for Beginners is an undergraduate-level mathematics book on primality tests, methods for testing whether a given number is a prime number, centered on the AKS primality test, the first method to solve this problem in polynomial time. It was written by Lasse Rempe-Gillen and Rebecca Waldecker, and originally published in German as Primzahltests für Einsteiger: Zahlentheorie, Algorithmik, Kryptographie. It was translated into English as Primality Testing for Beginners and published in 2014 by the American Mathematical Society, as volume 70 of their Student Mathematical Library book series. A second German-language edition was publisher by Springer in 2016.