Related Research Articles

A pointing device is a human interface device that allows a user to input spatial data to a computer. CAD systems and graphical user interfaces (GUI) allow the user to control and provide data to the computer using physical gestures by moving a hand-held mouse or similar device across the surface of the physical desktop and activating switches on the mouse. Movements of the pointing device are echoed on the screen by movements of the pointer and other visual changes. Common gestures are point and click and drag and drop.

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve such disciplines as, ergonomics and psychology.

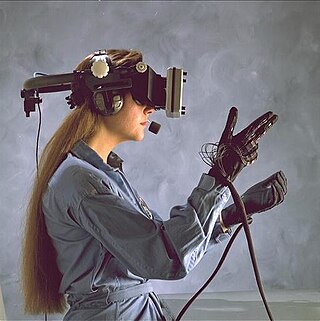

Haptic technology is technology that can create an experience of touch by applying forces, vibrations, or motions to the user. These technologies can be used to create virtual objects in a computer simulation, to control virtual objects, and to enhance remote control of machines and devices (telerobotics). Haptic devices may incorporate tactile sensors that measure forces exerted by the user on the interface. The word haptic, from the Greek: ἁπτικός (haptikos), means "tactile, pertaining to the sense of touch". Simple haptic devices are common in the form of game controllers, joysticks, and steering wheels.

In human–computer interaction, WIMP stands for "windows, icons, menus, pointer", denoting a style of interaction using these elements of the user interface. Other expansions are sometimes used, such as substituting "mouse" and "mice" for menus, or "pull-down menu" and "pointing" for pointer.

The following outline is provided as an overview of and topical guide to human–computer interaction:

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures. A subdiscipline of computer vision, it employs mathematical algorithms to interpret gestures. Gestures can originate from any bodily motion or state, but commonly originate from the face or hand. One area of the field is emotion recognition derived from facial expressions and hand gestures. Users can make simple gestures to control or interact with devices without physically touching them. Many approaches use cameras and computer vision algorithms to interpret sign language; however, the identification and recognition of posture, gait, proxemics, and human behaviors is also the subject of gesture recognition techniques. Gesture recognition is a path for computers to begin to better understand and interpret human body language, which was previously not possible through text or unenhanced graphical user interfaces (GUIs).

In computing, multi-touch is technology that enables a surface to recognize the presence of more than one point of contact with the surface at the same time. Multi-touch originated at CERN, MIT, University of Toronto, Carnegie Mellon University and Bell Labs in the 1970s. CERN started using multi-touch screens as early as 1976 for the controls of the Super Proton Synchrotron. A form of gesture recognition, capacitive multi-touch displays were popularized by Apple's iPhone in 2007. Plural-point awareness may be used to implement additional functionality, such as pinch to zoom or to activate certain subroutines attached to predefined gestures.
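As a rough illustration of how plural-point awareness can drive pinch to zoom, the sketch below derives a zoom factor from the distance between two contact points at the start and end of a gesture. The function name `pinch_scale` and the tuple-based coordinates are hypothetical choices for this example; real touch frameworks expose their own event types and typically update the factor continuously as the fingers move.

```python
import math


def pinch_scale(start_a, start_b, end_a, end_b):
    """Return the zoom factor implied by two touch points.

    Each argument is an (x, y) tuple. A result above 1.0 means the
    fingers moved apart (zoom in); below 1.0 means they moved
    together (zoom out). Hypothetical helper, not a framework API.
    """
    start_distance = math.dist(start_a, start_b)
    end_distance = math.dist(end_a, end_b)
    if start_distance == 0:
        raise ValueError("touch points must start at distinct positions")
    return end_distance / start_distance


# Two fingers start 100 px apart and end 200 px apart.
scale = pinch_scale((100, 100), (200, 100), (50, 100), (250, 100))
print(scale)  # 2.0
```

Using the ratio of distances rather than their difference keeps the zoom factor independent of how far apart the fingers were when the gesture began.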

An interaction technique, user interface technique or input technique is a combination of hardware and software elements that provides a way for computer users to accomplish a single task. For example, one can go back to the previously visited page on a Web browser by either clicking a button, pressing a key, performing a mouse gesture or uttering a speech command. It is a widely used term in human-computer interaction. In particular, the term "new interaction technique" is frequently used to introduce a novel user interface design idea.

In human–computer interaction, an organic user interface (OUI) is defined as a user interface with a non-flat display. After Engelbart and Sutherland's graphical user interface (GUI), which was based on the cathode ray tube (CRT), and Kay and Weiser's ubiquitous computing, which is based on the flat panel liquid-crystal display (LCD), OUI represents one possible third wave of display interaction paradigms, pertaining to multi-shaped and flexible displays. In an OUI, the display surface is always the focus of interaction, and may actively or passively change shape upon analog inputs. These inputs are provided through direct physical gestures, rather than through indirect point-and-click control. Note that the term "Organic" in OUI was derived from organic architecture, referring to the adoption of natural form to design a better fit with human ecology. The term also alludes to the use of organic electronics for this purpose.

Hands-on computing is a branch of human-computer interaction research which focuses on computer interfaces that respond to human touch or expression, allowing the machine and the user to interact physically. Hands-on computing can make complicated computer tasks more natural to users by attempting to respond to motions and interactions that are natural to human behavior. Thus hands-on computing is a component of user-centered design, focusing on how users physically respond to virtual environments.

In computing, 3D interaction is a form of human-machine interaction where users are able to move and perform interaction in 3D space. Both human and machine process information where the physical position of elements in the 3D space is relevant.

In computing, a natural user interface (NUI) or natural interface is a user interface that is effectively invisible, and remains invisible as the user continuously learns increasingly complex interactions. The word "natural" is used because most computer interfaces use artificial control devices whose operation has to be learned. Examples include voice assistants such as Alexa and Siri, touch and multitouch interactions on today's mobile phones and tablets, and touch interfaces invisibly integrated into textiles and furniture.

The DiamondTouch table is a multi-touch, interactive PC interface product from Circle Twelve Inc. It is a human interface device that has the capability of allowing multiple people to interact simultaneously while identifying which person is touching where. The technology was originally developed at Mitsubishi Electric Research Laboratories (MERL) in 2001 and later licensed to Circle Twelve Inc in 2008. The DiamondTouch table is used to facilitate face-to-face collaboration, brainstorming, and decision-making, and users include construction management company Parsons Brinckerhoff, the Methodist Hospital, and the US National Geospatial-Intelligence Agency (NGA).

Skinput is an input technology that uses bio-acoustic sensing to localize finger taps on the skin. When augmented with a pico-projector, the device can provide a direct manipulation, graphical user interface on the body. The technology was developed by Chris Harrison, Desney Tan and Dan Morris at Microsoft Research's Computational User Experiences Group. Skinput represents one way to decouple input from electronic devices with the aim of allowing devices to become smaller without simultaneously shrinking the surface area on which input can be performed. While other systems, like SixthSense, have attempted this with computer vision, Skinput employs acoustics, which take advantage of the human body's natural sound-conductive properties. This allows the body to be annexed as an input surface without the need for the skin to be invasively instrumented with sensors, tracking markers, or other items.

The Human Media Lab (HML) is a research laboratory in human–computer interaction at Queen's University's School of Computing in Kingston, Ontario. Its goals are to advance user interface design by creating and empirically evaluating disruptive new user interface technologies, and to educate graduate students in this process. The Human Media Lab was founded in 2000 by Prof. Roel Vertegaal and employs an average of 12 graduate students.

Chris Harrison is a British-born, American computer scientist and entrepreneur, working in the fields of human–computer interaction, machine learning and sensor-driven interactive systems. He is a professor at Carnegie Mellon University and director of the Future Interfaces Group within the Human–Computer Interaction Institute. He has previously conducted research at AT&T Labs, Microsoft Research, IBM Research and Disney Research. He is also the CTO and co-founder of Qeexo, a machine learning and interaction technology startup.

Jacob O. Wobbrock is a Professor in the University of Washington Information School and, by courtesy, in the Paul G. Allen School of Computer Science & Engineering at the University of Washington. He is Director of the ACE Lab, Associate Director and founding Co-Director Emeritus of the CREATE research center, and a founding member of the DUB Group and the MHCI+D degree program.

Patrick Baudisch is a computer science professor and the chair of the Human Computer Interaction Lab at Hasso Plattner Institute, Potsdam University. While his early research interests revolved around natural user interfaces and interactive devices, his research focus shifted to virtual reality and haptics in the late 2000s and to digital fabrication, such as 3D printing and laser cutting, in the 2010s. Prior to teaching and researching at Hasso Plattner Institute, Patrick Baudisch was a research scientist at Microsoft Research and Xerox PARC. He has been a member of CHI Academy since 2013, and an ACM distinguished scientist since 2014. He holds a PhD degree in Computer Science from the Department of Computer Science of the Technische Universität Darmstadt, Germany.

Yves Guiard is a French cognitive neuroscientist and researcher best known for his work in human laterality and stimulus-response compatibility in the field of human-computer interaction. He is the director of research at the French National Center for Scientific Research and has been a member of CHI Academy since 2016. He is also an associate editor of ACM Transactions on Computer-Human Interaction and a member of the advisory council of the International Association for the Study of Attention and Performance.

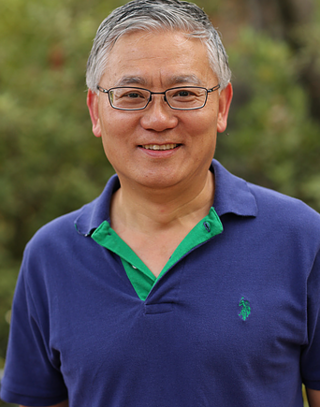

Shumin Zhai is a Chinese-born American Canadian human–computer interaction (HCI) research scientist and inventor. He is known for his research on input devices and interaction methods, swipe-gesture-based touchscreen keyboards, eye-tracking interfaces, and models of human performance in human-computer interaction. His studies have contributed to both foundational models and understandings of HCI and practical user interface designs and flagship products. He previously worked at IBM, where he invented the ShapeWriter text entry method for smartphones, which is a predecessor to the modern Swype keyboard. Dr. Zhai's publications have won the ACM UIST Lasting Impact Award and the IEEE Computer Society Best Paper Award, among others. Dr. Zhai is currently a Principal Scientist at Google, where he leads and directs research, design, and development of human-device input methods and haptics systems.

References

- Ken Hinckley's page at Microsoft Research.

- Ken Hinckley's biography at 2014 SIGCHI Awards. Retrieved on 24 March 2018.

- Ken Hinckley's page at Google Scholar.

- Hinckley, K., Haptic Issues for Virtual Manipulation. Doctoral Thesis. UMI Order Number: GAX97‐24701, University of Virginia, Charlottesville, VA, January 1997. Advisor: Randy Pausch.

- Ken Hinckley's CV at Microsoft Research.

- Hinckley, K., Pausch, R., and Proffitt, D., Attention and Visual Feedback: The Bimanual Frame of Reference. In Proc. I3D 1997 Symp. on Interactive 3D Graphics, Providence, RI, April 27‐30, 1997, pp. 121‐126.

- Hinckley, K., Pierce, J., Sinclair, M., and Horvitz, E., Sensing Techniques for Mobile Interaction. In ACM UIST 2000 Symp. on User Interface Software and Technology, San Diego, California, pp. 91‐100.

- "UIST 2000: The 13th Annual ACM Symposium on User Interface Software and Technology", UIST.

- Hinckley, K., and Song, H., Sensor Synaesthesia: Touch in Motion, and Motion in Touch. In Proc. CHI 2011 Conf. on Human Factors in Computing Systems.

- Hinckley, K., Pahud, M., Benko, H., Irani, P., Gavriliu, M., Guimbretiere, F., Chen, X. 'A.', Matulic, F., Buxton, B., and Wilson, A. D., Sensing Techniques for Tablet+Stylus Interaction. UIST 2014.

- Liszewski, Andrew. "Microsoft Made a Better Stylus That Knows How You're Holding It", Gizmodo, 6 October 2014. Retrieved on 24 March 2018.

- Brownlee, John. "Microsoft Research Invents A Stylus That Can Read Your Mind", FastCo. Design, 10 October 2014. Retrieved on 24 March 2018.

- "2016 Product Design Technical Group - Stanley Caplan User-Centered Product Design Award", Proceedings of the Human Factors and Ergonomics Society 2016 Annual Meeting. Retrieved on 24 March 2018.

- Knies, Rob. "Hinckley Paper Makes Lasting Impact", Microsoft Research Blog, 4 November 2011. Retrieved on 24 March 2018.

- "UIST 2014: 27th ACM User Interface Software and Technology Symposium", UIST.