In fluid mechanics, the Rayleigh number (Ra, after Lord Rayleigh) for a fluid is a dimensionless number associated with buoyancy-driven flow, also known as free (or natural) convection. It characterises the fluid's flow regime: a value in a certain lower range denotes laminar flow; a value in a higher range, turbulent flow. Below a certain critical value, there is no fluid motion and heat transfer is by conduction rather than convection. For most engineering purposes, the Rayleigh number is large, somewhere around 10⁶ to 10⁸.
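As a rough illustration of the magnitudes involved, the sketch below evaluates the commonly used definition Ra = g·β·ΔT·L³ / (ν·α). That definition, the function name `rayleigh_number`, and the approximate property values for air near room temperature are assumptions made for this example; none of them come from the text above.

```python
def rayleigh_number(g, beta, delta_t, length, nu, alpha):
    """Rayleigh number from the (assumed) standard definition.

    g       gravitational acceleration, m/s^2
    beta    thermal expansion coefficient, 1/K
    delta_t temperature difference driving the flow, K
    length  characteristic length, m
    nu      kinematic viscosity, m^2/s
    alpha   thermal diffusivity, m^2/s
    """
    return g * beta * delta_t * length**3 / (nu * alpha)


# Approximate, illustrative values for air around 300 K (assumptions).
ra = rayleigh_number(
    g=9.81,
    beta=1.0 / 300.0,
    delta_t=10.0,
    length=0.3,
    nu=1.5e-5,
    alpha=2.1e-5,
)
print(f"Ra = {ra:.2e}")
```

With these inputs the result falls between 10⁶ and 10⁸. Because the length enters as L³, modest changes in geometry shift Ra by orders of magnitude.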

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-squared distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use:

- With a shape parameter k and a scale parameter θ.

- With a shape parameter α = k and an inverse scale parameter β = 1/θ, called a rate parameter.
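The equivalence of the two parameterizations can be checked numerically. The following is a minimal sketch using only the standard library; the symbol names (k, θ for shape and scale, α, β for shape and rate) follow common convention and the function names are hypothetical.

```python
import math


def gamma_pdf_scale(x, k, theta):
    """Gamma density at x > 0 with shape k and scale theta."""
    return x ** (k - 1) * math.exp(-x / theta) / (math.gamma(k) * theta**k)


def gamma_pdf_rate(x, alpha, beta):
    """Gamma density at x > 0 with shape alpha and rate beta = 1 / scale."""
    return beta**alpha * x ** (alpha - 1) * math.exp(-beta * x) / math.gamma(alpha)


x, k, theta = 1.5, 2.0, 0.5
# The two forms agree when alpha = k and beta = 1 / theta.
print(gamma_pdf_scale(x, k, theta), gamma_pdf_rate(x, k, 1.0 / theta))

# Shape 1 reduces to the exponential distribution with rate 1 / theta.
print(gamma_pdf_scale(x, 1.0, theta), (1.0 / theta) * math.exp(-x / theta))
```

Each printed pair should match up to floating-point rounding, which illustrates both the equivalence of the parameterizations and the exponential special case.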

The bin packing problem is an optimization problem, in which items of different sizes must be packed into a finite number of bins or containers, each of a fixed given capacity, in a way that minimizes the number of bins used. The problem has many applications, such as filling up containers, loading trucks with weight capacity constraints, creating file backups in media, and technology mapping in FPGA semiconductor chip design.
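One widely used heuristic for this problem is first-fit decreasing: sort the items from largest to smallest and place each into the first bin with enough room, opening a new bin only when none fits. The sketch below is an illustration of that heuristic, not an exact solver; it does not always find the minimum number of bins, and the function name is hypothetical.

```python
def first_fit_decreasing(sizes, capacity):
    """Pack items into bins of the given capacity using first-fit decreasing.

    Returns a list of bins, each a list of item sizes.
    """
    bins = []   # contents of each open bin
    loads = []  # current total size in each open bin
    for size in sorted(sizes, reverse=True):
        if size > capacity:
            raise ValueError(f"item of size {size} exceeds capacity {capacity}")
        for i, load in enumerate(loads):
            if load + size <= capacity:
                bins[i].append(size)
                loads[i] += size
                break
        else:
            # No existing bin has room, so open a new one.
            bins.append([size])
            loads.append(size)
    return bins


packing = first_fit_decreasing([4, 8, 1, 4, 2, 1], capacity=10)
print(len(packing), packing)  # 2 [[8, 2], [4, 4, 1, 1]]
```

In this small instance the item sizes sum to 20, so two bins of capacity 10 is the best possible result and the heuristic reaches it.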

In physics, the S-matrix or scattering matrix relates the initial state and the final state of a physical system undergoing a scattering process. It is used in quantum mechanics, scattering theory and quantum field theory (QFT).

In mathematics and computing, the Levenberg–Marquardt algorithm (LMA), also known as the damped least-squares (DLS) method, is used to solve non-linear least squares problems. These minimization problems arise especially in least squares curve fitting. The LMA interpolates between the Gauss–Newton algorithm (GNA) and the method of gradient descent. The LMA is more robust than the GNA, which means that in many cases it finds a solution even if it starts very far from the final minimum. For well-behaved functions and reasonable starting parameters, the LMA tends to be slower than the GNA. The LMA can also be viewed as Gauss–Newton using a trust region approach.
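The interpolation between Gauss–Newton and gradient descent can be made concrete with a small sketch. The example below fits the two-parameter model y = a·exp(b·x), chosen purely for illustration, by solving the damped normal equations (JᵀJ + λ·diag(JᵀJ))·δ = Jᵀr at each step. The model, the function name, and the rule of multiplying or dividing λ by 10 are assumptions for this example; practical implementations differ in their damping strategy.

```python
import math


def fit_exponential(xs, ys, a, b, lam=1e-3, iterations=50):
    """Fit y = a * exp(b * x) with a basic Levenberg-Marquardt loop."""

    def cost(a_, b_):
        return sum((y - a_ * math.exp(b_ * x)) ** 2 for x, y in zip(xs, ys))

    current = cost(a, b)
    for _ in range(iterations):
        # Accumulate J^T J (s11, s12, s22) and J^T r (g1, g2).
        s11 = s12 = s22 = g1 = g2 = 0.0
        for x, y in zip(xs, ys):
            e = math.exp(b * x)
            r = y - a * e
            j1, j2 = e, a * x * e  # partial derivatives of the model w.r.t. a, b
            s11 += j1 * j1
            s12 += j1 * j2
            s22 += j2 * j2
            g1 += j1 * r
            g2 += j2 * r
        # Damp the diagonal; large lam gives short, gradient-like steps.
        d11, d22 = s11 * (1.0 + lam), s22 * (1.0 + lam)
        det = d11 * d22 - s12 * s12
        if det == 0.0:
            lam *= 10.0
            continue
        da = (g1 * d22 - s12 * g2) / det
        db = (d11 * g2 - s12 * g1) / det
        trial = cost(a + da, b + db)
        if trial < current:
            # Step accepted: move and trust the Gauss-Newton model more.
            a, b, current = a + da, b + db, trial
            lam /= 10.0
        else:
            # Step rejected: increase damping and try again.
            lam *= 10.0
    return a, b


xs = [0.5 * i for i in range(7)]
ys = [2.0 * math.exp(0.5 * x) for x in xs]  # noise-free synthetic data
a_fit, b_fit = fit_exponential(xs, ys, a=1.0, b=0.0)
print(a_fit, b_fit)
```

On this noise-free data the fit should recover values close to a = 2 and b = 0.5. The first few undamped-looking steps overshoot and are rejected, which is the situation where the damping makes the method more robust than plain Gauss–Newton.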

A vibration in a string is a wave. Resonance causes a vibrating string to produce a sound with constant frequency, i.e. constant pitch. If the length or tension of the string is correctly adjusted, the sound produced is a musical tone. Vibrating strings are the basis of string instruments such as guitars, cellos, and pianos.
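The dependence of pitch on length and tension can be illustrated with the standard ideal-string formula for the fundamental frequency, f = √(T/μ) / (2L), where T is the tension, μ the mass per unit length, and L the vibrating length. The formula and the function name below are assumptions introduced for this sketch; the text above does not state them.

```python
import math


def fundamental_frequency(length_m, tension_n, linear_density_kg_per_m):
    """Fundamental frequency in Hz of an ideal string fixed at both ends."""
    wave_speed = math.sqrt(tension_n / linear_density_kg_per_m)
    return wave_speed / (2.0 * length_m)


base = fundamental_frequency(1.0, 100.0, 0.01)
print(base)                                     # 50.0
print(fundamental_frequency(0.5, 100.0, 0.01))  # halving the length doubles the pitch
print(fundamental_frequency(1.0, 400.0, 0.01))  # quadrupling the tension doubles it
```

Halving the length raises the tone by an octave, whereas the tension must be quadrupled for the same effect, since frequency scales with the square root of tension.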

The equilibrium constant of a chemical reaction is the value of its reaction quotient at chemical equilibrium, a state approached by a dynamic chemical system after sufficient time has elapsed at which its composition has no measurable tendency towards further change. For a given set of reaction conditions, the equilibrium constant is independent of the initial analytical concentrations of the reactant and product species in the mixture. Thus, given the initial composition of a system, known equilibrium constant values can be used to determine the composition of the system at equilibrium. However, reaction parameters like temperature, solvent, and ionic strength may all influence the value of the equilibrium constant.
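As a minimal worked case, consider a hypothetical one-step reaction A ⇌ B in an ideal solution with K = [B]/[A]. Conservation of material gives [A] + [B] = C, so [A] = C/(1 + K) at equilibrium. The reaction, the concentration-based K, and the function name are assumptions for this sketch.

```python
def equilibrium_composition(k_eq, a0, b0):
    """Equilibrium concentrations for A <=> B with K = [B]/[A].

    a0 and b0 are the initial concentrations; only their sum matters.
    """
    total = a0 + b0
    a_eq = total / (1.0 + k_eq)
    b_eq = total - a_eq
    return a_eq, b_eq


# Two different starting mixtures with the same total concentration.
print(equilibrium_composition(4.0, a0=1.0, b0=0.0))
print(equilibrium_composition(4.0, a0=0.3, b0=0.7))
```

Both calls return approximately (0.2, 0.8): the final ratio [B]/[A] equals K regardless of how the material was initially divided between A and B.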

In quantum field theory, the Lehmann–Symanzik–Zimmermann (LSZ) reduction formula is a method to calculate S-matrix elements from the time-ordered correlation functions of a quantum field theory. It is a step of the path that starts from the Lagrangian of some quantum field theory and leads to prediction of measurable quantities. It is named after the three German physicists Harry Lehmann, Kurt Symanzik and Wolfhart Zimmermann.

The Clausius–Clapeyron relation, in chemical thermodynamics, specifies the temperature dependence of pressure, most importantly vapor pressure, at a discontinuous phase transition between two phases of matter of a single constituent. It is named after Rudolf Clausius and Benoît Paul Émile Clapeyron. Its relevance to meteorology and climatology is the increase of the water-holding capacity of the atmosphere by about 7% for every 1 °C (1.8 °F) rise in temperature.
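The figure of about 7% per degree can be checked with the usual approximate form of the relation for water vapor, d(ln eₛ)/dT = L / (Rᵥ·T²), which treats the vapor as an ideal gas and neglects the volume of the liquid. That approximation, the constant latent heat L ≈ 2.5 × 10⁶ J/kg, and the gas constant Rᵥ ≈ 461.5 J/(kg·K) are assumptions made for this sketch.

```python
def fractional_increase_per_kelvin(temperature_k,
                                   latent_heat=2.5e6,
                                   gas_constant_vapor=461.5):
    """Approximate relative change in saturation vapor pressure per kelvin."""
    return latent_heat / (gas_constant_vapor * temperature_k**2)


for t in (273.15, 288.15):
    rate = fractional_increase_per_kelvin(t)
    print(f"T = {t} K: about {100 * rate:.1f}% per K")
```

At typical near-surface temperatures the result lies between roughly 6% and 7.5% per kelvin, consistent with the quoted value.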

In electromagnetism, the electromagnetic tensor or electromagnetic field tensor is a mathematical object that describes the electromagnetic field in spacetime. The field tensor was first used after the four-dimensional tensor formulation of special relativity was introduced by Hermann Minkowski. The tensor allows related physical laws to be written very concisely, and allows for the quantization of the electromagnetic field by Lagrangian formulation described below.

In general relativity, the Gibbons–Hawking–York boundary term is a term that needs to be added to the Einstein–Hilbert action when the underlying spacetime manifold has a boundary.

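The Duffing equation, named after Georg Duffing (1861–1944), is a non-linear second-order differential equation used to model certain damped and driven oscillators. The equation is given by

$$\ddot{x} + \delta \dot{x} + \alpha x + \beta x^{3} = \gamma \cos(\omega t),$$

where x(t) is the displacement at time t, δ controls the damping, α the linear stiffness, β the non-linearity of the restoring force, γ the amplitude of the periodic driving force, and ω its angular frequency.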
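A numerical solution can be sketched with a fixed-step fourth-order Runge–Kutta integrator, assuming the commonly quoted form ẍ + δẋ + αx + βx³ = γ cos(ωt). The choice of integrator, the function name, and the parameter names are assumptions for this illustration.

```python
import math


def simulate_duffing(x0, v0, t_end, n_steps, delta, alpha, beta, gamma, omega):
    """Integrate the Duffing oscillator from t = 0 to t_end with classical RK4.

    Returns the final displacement and velocity (x, v).
    """

    def accel(t, x, v):
        return gamma * math.cos(omega * t) - delta * v - alpha * x - beta * x**3

    h = t_end / n_steps
    t, x, v = 0.0, x0, v0
    for _ in range(n_steps):
        k1x, k1v = v, accel(t, x, v)
        k2x = v + 0.5 * h * k1v
        k2v = accel(t + 0.5 * h, x + 0.5 * h * k1x, v + 0.5 * h * k1v)
        k3x = v + 0.5 * h * k2v
        k3v = accel(t + 0.5 * h, x + 0.5 * h * k2x, v + 0.5 * h * k2v)
        k4x = v + h * k3v
        k4v = accel(t + h, x + h * k3x, v + h * k3v)
        x += h * (k1x + 2 * k2x + 2 * k3x + k4x) / 6.0
        v += h * (k1v + 2 * k2v + 2 * k3v + k4v) / 6.0
        t += h
    return x, v


# Sanity check: with beta = delta = gamma = 0 and alpha = 1 the system is a
# simple harmonic oscillator, so one period returns to the starting state.
print(simulate_duffing(1.0, 0.0, 2 * math.pi, 10_000, 0.0, 1.0, 0.0, 0.0, 1.0))

# A damped, driven, non-linear case (illustrative parameter values).
print(simulate_duffing(1.0, 0.0, 50.0, 50_000, 0.3, -1.0, 1.0, 0.5, 1.2))
```

The first call serves as a check on the integrator; the second uses a negative α with positive β, the double-well configuration often chosen to show non-linear behaviour.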

Local-density approximations (LDA) are a class of approximations to the exchange–correlation (XC) energy functional in density functional theory (DFT) that depend solely upon the value of the electronic density at each point in space. Many approaches can yield local approximations to the XC energy. However, overwhelmingly successful local approximations are those that have been derived from the homogeneous electron gas (HEG) model. In this regard, LDA is generally synonymous with functionals based on the HEG approximation, which are then applied to realistic systems.

The covariant formulation of classical electromagnetism refers to ways of writing the laws of classical electromagnetism in a form that is manifestly invariant under Lorentz transformations, in the formalism of special relativity using rectilinear inertial coordinate systems. These expressions both make it simple to prove that the laws of classical electromagnetism take the same form in any inertial coordinate system, and also provide a way to translate the fields and forces from one frame to another. However, this is not as general as Maxwell's equations in curved spacetime or non-rectilinear coordinate systems.

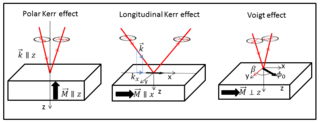

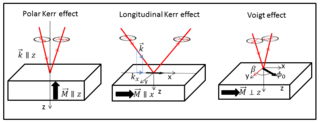

The Voigt effect is a magneto-optical phenomenon which rotates and elliptizes linearly polarised light sent into an optically active medium. Unlike many other magneto-optical effects, such as the Kerr or Faraday effect, which are linearly proportional to the magnetization, the Voigt effect is proportional to the square of the magnetization and can be seen experimentally at normal incidence. There are several denominations for this effect in the literature: the Cotton–Mouton effect, the Voigt effect, and magnetic-linear birefringence. This last denomination is closer in the physical sense, where the Voigt effect is a magnetic birefringence of the material with different indices of refraction parallel (n∥) and perpendicular (n⊥) to the magnetization vector or to the applied magnetic field.

A ratio distribution is a probability distribution constructed as the distribution of the ratio of random variables having two other known distributions. Given two random variables X and Y, the distribution of the random variable Z that is formed as the ratio Z = X/Y is a ratio distribution.
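A standard example is the ratio of two independent standard normal variables, which follows a standard Cauchy distribution; for that distribution exactly half of the probability mass lies in the interval (−1, 1). The sketch below checks this by simulation. The choice of example, the sample size, and the function name are assumptions for this illustration.

```python
import random


def sample_ratio(n, seed=0):
    """Draw n samples of Z = X / Y with X, Y independent standard normals."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) / rng.gauss(0.0, 1.0) for _ in range(n)]


samples = sample_ratio(100_000)
inside = sum(1 for z in samples if abs(z) < 1.0) / len(samples)
print(f"fraction with |Z| < 1: {inside:.3f}")
```

The printed fraction should be close to 0.5. The sample mean, by contrast, does not settle down as n grows, because the Cauchy distribution has no finite mean.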

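In mathematics, Montgomery's pair correlation conjecture is a conjecture made by Hugh Montgomery (1973) that the pair correlation between pairs of zeros of the Riemann zeta function (normalized to have unit average spacing) is

$$1 - \left(\frac{\sin(\pi u)}{\pi u}\right)^{2} + \delta(u).$$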

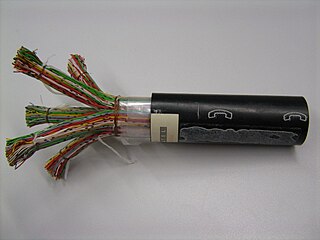

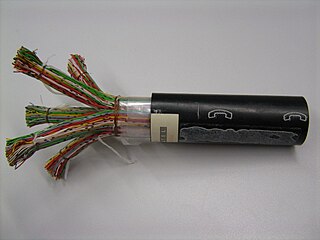

The primary line constants are parameters that describe the characteristics of conductive transmission lines, such as pairs of copper wires, in terms of the physical electrical properties of the line. The primary line constants are only relevant to transmission lines and are to be contrasted with the secondary line constants, which can be derived from them, and are more generally applicable. The secondary line constants can be used, for instance, to compare the characteristics of a waveguide to a copper line, whereas the primary constants have no meaning for a waveguide.
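The derivation of the secondary constants from the primary ones can be sketched with the standard telegrapher's-equation results: the characteristic impedance Z₀ = √((R + jωL)/(G + jωC)) and the propagation constant γ = √((R + jωL)(G + jωC)), where R, L, G and C are the per-unit-length resistance, inductance, conductance and capacitance. These formulas and the function name are assumptions for this example, as the text above does not spell them out.

```python
import cmath
import math


def secondary_constants(r, l, g, c, frequency_hz):
    """Return (Z0, gamma) for a line with per-metre primary constants r, l, g, c."""
    omega = 2.0 * math.pi * frequency_hz
    series = complex(r, omega * l)  # series impedance per metre, R + jwL
    shunt = complex(g, omega * c)   # shunt admittance per metre, G + jwC
    z0 = cmath.sqrt(series / shunt)
    gamma = cmath.sqrt(series * shunt)
    return z0, gamma


# Lossless line with L = 250 nH/m and C = 100 pF/m (illustrative values).
z0, gamma = secondary_constants(0.0, 250e-9, 0.0, 100e-12, 1e6)
print(z0)          # approximately 50 ohms, purely real
print(gamma.real)  # attenuation constant, 0 for a lossless line
print(gamma.imag)  # phase constant in rad/m
```

In the lossless case Z₀ reduces to √(L/C), which is independent of frequency, and the propagation constant is purely imaginary.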

The table of chords, created by the Greek astronomer, geometer, and geographer Ptolemy in Egypt during the 2nd century AD, is a trigonometric table in Book I, chapter 11 of Ptolemy's Almagest, a treatise on mathematical astronomy. It is essentially equivalent to a table of values of the sine function. It was the earliest trigonometric table extensive enough for many practical purposes, including those of astronomy. Since the 8th and 9th centuries, the sine and other trigonometric functions have been used in Islamic mathematics and astronomy, reforming the production of sine tables. Khwarizmi and Habash al-Hasib later produced a set of trigonometric tables.
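The equivalence with the sine function can be made explicit. For a circle of diameter 120, the chord subtending an arc of θ degrees has length crd(θ) = 120·sin(θ/2). The sketch below computes chords this way and expresses them in sexagesimal (base-60) parts, the notation used in the Almagest. The function names are hypothetical, and rounding to the nearest second is an assumption made for this example.

```python
import math


def chord(arc_degrees, diameter=120.0):
    """Length of the chord subtending the given arc, in units of the diameter."""
    return diameter * math.sin(math.radians(arc_degrees) / 2.0)


def to_sexagesimal(value):
    """Split a length into (units, sixtieths, sixtieths of sixtieths)."""
    total_seconds = round(value * 3600)
    units, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return units, minutes, seconds


for arc in (60, 90, 180):
    print(arc, to_sexagesimal(chord(arc)))
# 60 (60, 0, 0)
# 90 (84, 51, 10)
# 180 (120, 0, 0)
```

Reading the relation in reverse, sin(θ) = crd(2θ)/120, so a table of chords at half-degree steps of arc carries the same information as a sine table at quarter-degree steps of angle.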

In computability theory and computational complexity theory, especially the study of approximation algorithms, an approximation-preserving reduction is an algorithm for transforming one optimization problem into another problem, such that the distance of solutions from optimal is preserved to some degree. Approximation-preserving reductions are a subset of more general reductions in complexity theory; the difference is that approximation-preserving reductions usually make statements on approximation problems or optimization problems, as opposed to decision problems.