Perception is the organization, identification, and interpretation of sensory information in order to represent and understand the presented information or environment. All perception involves signals that go through the nervous system, which in turn result from physical or chemical stimulation of the sensory system. Vision involves light striking the retina of the eye; smell is mediated by odor molecules; and hearing involves pressure waves.

The N400 is a component of time-locked EEG signals known as event-related potentials (ERP). It is a negative-going deflection that peaks around 400 milliseconds post-stimulus onset, although it can extend from 250-500 ms, and is typically maximal over centro-parietal electrode sites. The N400 is part of the normal brain response to words and other meaningful stimuli, including visual and auditory words, sign language signs, pictures, faces, environmental sounds, and smells.

The lexical decision task (LDT) is a procedure used in many psychology and psycholinguistics experiments. The basic procedure involves measuring how quickly people classify stimuli as words or nonwords.

In cognitive psychology, the word superiority effect (WSE) refers to the phenomenon that people have better recognition of letters presented within words as compared to isolated letters and to letters presented within nonword strings. Studies have also found a WSE when letter identification within words is compared to letter identification within pseudowords and pseudohomophones.

Tip of the tongue is the phenomenon of failing to retrieve a word or term from memory, combined with partial recall and the feeling that retrieval is imminent. The phenomenon's name comes from the saying, "It's on the tip of my tongue." The tip of the tongue phenomenon reveals that lexical access occurs in stages.

In psychology and cognitive neuroscience, pattern recognition describes a cognitive process that matches information from a stimulus with information retrieved from memory.

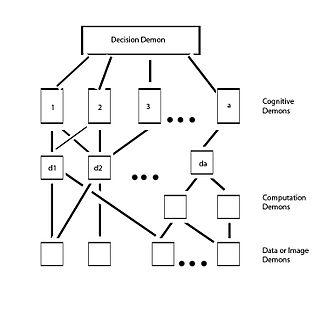

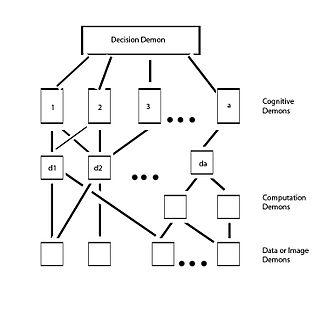

Pandemonium architecture is a theory in cognitive science that describes how visual images are processed by the brain. It has applications in artificial intelligence and pattern recognition. The theory was developed by the artificial intelligence pioneer Oliver Selfridge in 1959. It describes the process of object recognition as a hierarchical system of detection and association by a metaphorical set of "demons" sending signals to each other. The model is regarded as an influential foundation for later accounts of visual perception in cognitive science.
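
The hierarchy of "demons" can be illustrated with a minimal Python sketch. The layer names (feature, cognitive, and decision demons) follow the usual description of Selfridge's model; the letter inventory, the feature names, and the scoring rule are hypothetical and chosen only for illustration.

```python
# Hypothetical feature inventory: the strokes assumed to make up each letter.
LETTER_FEATURES = {
    "A": {"left_diagonal", "right_diagonal", "horizontal_bar"},
    "H": {"left_vertical", "right_vertical", "horizontal_bar"},
    "L": {"left_vertical", "horizontal_base"},
    "V": {"left_diagonal", "right_diagonal"},
}


def feature_demons(image_features):
    """Feature level: each demon reports whether its feature is in the input."""
    known = set().union(*LETTER_FEATURES.values())
    return {feature: feature in image_features for feature in known}


def cognitive_demons(feature_reports):
    """Letter level: each demon 'shouts' louder the better its features match.

    Assumed scoring rule: matched features count for a letter, features
    that are present but not expected count against it.
    """
    present = {f for f, seen in feature_reports.items() if seen}
    shouts = {}
    for letter, expected in LETTER_FEATURES.items():
        matched = len(expected & present)
        unexpected = len(present - expected)
        shouts[letter] = (matched - unexpected) / len(expected)
    return shouts


def decision_demon(shouts):
    """Top level: pick the letter whose demon shouts loudest."""
    return max(shouts, key=shouts.get)


def recognize(image_features):
    """Pass the input up the hierarchy and return the winning letter."""
    return decision_demon(cognitive_demons(feature_demons(image_features)))
```

Under these assumptions, `recognize({"left_diagonal", "right_diagonal", "horizontal_bar"})` returns `"A"`, while dropping the horizontal bar makes the `"V"` demon shout loudest instead.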

TRACE is a connectionist model of speech perception, proposed by James McClelland and Jeffrey Elman in 1986. It is based on a structure called "the Trace," a dynamic processing structure made up of a network of units, which performs as the system's working memory as well as the perceptual processing mechanism. TRACE was made into a working computer program for running perceptual simulations. These simulations are predictions about how a human mind/brain processes speech sounds and words as they are heard in real time.

The cohort model in psycholinguistics and neurolinguistics is a model of lexical retrieval first proposed by William Marslen-Wilson in the late 1970s. It attempts to describe how visual or auditory input is mapped onto a word in a hearer's lexicon. According to the model, when a person hears speech segments in real time, each speech segment "activates" every word in the lexicon that begins with that segment, and as more segments are added, more words are ruled out, until only one word is left that still matches the input.
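
The narrowing process described by the cohort model can be sketched in a few lines of Python. This is a toy illustration, not an implementation of the published model: letters stand in for speech segments, and the lexicon and function names are hypothetical.

```python
def cohort_trace(segments, lexicon):
    """Return (input so far, surviving candidates) after each segment."""
    cohort = sorted(lexicon)
    heard = ""
    history = []
    for segment in segments:
        heard += segment
        # Rule out every candidate that no longer matches the input so far.
        cohort = [word for word in cohort if word.startswith(heard)]
        history.append((heard, list(cohort)))
    return history


def uniqueness_point(segments, lexicon):
    """Return the input at which exactly one candidate remains, or None."""
    for heard, cohort in cohort_trace(segments, lexicon):
        if len(cohort) == 1:
            return heard
    return None


# Hypothetical toy lexicon; letters are used in place of speech segments.
TOY_LEXICON = ["cap", "capital", "captain", "captive", "cat", "dog"]

for heard, cohort in cohort_trace("captain", TOY_LEXICON):
    print(f"{heard:<8} {cohort}")
```

With this toy lexicon, the candidate set shrinks to `captain` and `captive` after "capt", and only `captain` remains after "capta", so `uniqueness_point("captain", TOY_LEXICON)` returns `"capta"`.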

Indirect memory tests assess the retention of information without direct reference to the source of information. Participants are given tasks designed to elicit knowledge that was acquired incidentally or unconsciously; such knowledge is evident when performance favors previously presented items over new items. Performance on indirect tests may reflect contributions of implicit memory, the effects of priming, or a preference to respond to previously experienced stimuli over novel stimuli. Types of indirect memory tests include the implicit association test, the lexical decision task, the word stem completion task, artificial grammar learning, word fragment completion, and the serial reaction time task.

Priming is the idea that exposure to one stimulus may influence a response to a subsequent stimulus, without conscious guidance or intention. The priming effect refers to the positive or negative effect of a rapidly presented stimulus on the processing of a second stimulus that appears shortly after. Generally speaking, a priming effect depends on the existence of some positive or negative relationship between the prime and the target stimulus. For example, the word nurse might be recognized more quickly following the word doctor than following the word bread. Priming can be perceptual, associative, repetitive, positive, negative, affective, semantic, or conceptual. Priming effects involve word recognition, semantic processing, attention, unconscious processing, and many other issues, and are related to differences in various writing systems. Research has yet to firmly establish the duration of priming effects, although their onset can be almost instantaneous.

There is evidence suggesting that different processes are involved in remembering something versus knowing whether it is familiar. It appears that "remembering" and "knowing" represent relatively different characteristics of memory as well as reflect different ways of using memory.

Feature detection is a process by which the nervous system sorts or filters complex natural stimuli in order to extract behaviorally relevant cues that have a high probability of being associated with important objects or organisms in their environment, as opposed to irrelevant background or noise.

Bilingual interactive activation plus (BIA+) is a model for understanding the process of bilingual language comprehension and consists of two interactive subsystems: the word identification subsystem and the task/decision subsystem. It is the successor to the Bilingual Interactive Activation (BIA) model, which was updated in 2002 to include phonological and semantic lexical representations, revise the role of language nodes, and specify the purely bottom-up nature of bilingual language processing.

Word recognition, according to the Literacy Information and Communication System (LINCS), is "the ability of a reader to recognize written words correctly and virtually effortlessly". It is sometimes referred to as "isolated word recognition" because it involves a reader's ability to recognize words individually from a list without needing similar words for contextual help. LINCS continues to say that "rapid and effortless word recognition is the main component of fluent reading" and explains that these skills can be improved by "practic[ing] with flashcards, lists, and word grids".

The mental lexicon is defined as a mental dictionary that contains information about the words a language user knows, such as their meanings, pronunciations, and syntactic characteristics. The term is used in linguistics and psycholinguistics to refer to individual speakers' lexical, or word, representations. However, there is some disagreement as to the utility of the mental lexicon as a scientific construct.

Attenuation theory is a model of selective attention proposed by Anne Treisman, and can be seen as a revision of Donald Broadbent's filter model. Treisman proposed attenuation theory to explain how unattended stimuli were sometimes processed more thoroughly than Broadbent's filter model could account for. Attenuation theory thus added a layer of sophistication to Broadbent's original idea of how selective attention might operate: instead of a filter that barred unattended inputs from ever entering awareness, it proposed a process of attenuation. The attenuation of unattended stimuli would make it difficult, but not impossible, to extract meaningful content from irrelevant inputs, so long as the stimuli still possessed sufficient "strength" after attenuation to make it through a hierarchical analysis process.
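
The contrast between attenuation and an all-or-none filter can be made concrete with a small Python sketch. All numeric values and names below are hypothetical, and the use of per-word recognition thresholds is an assumption adopted here as one common way of describing the theory.

```python
ATTENUATION_GAIN = 0.3   # hypothetical: fraction of strength left after attenuation
DEFAULT_THRESHOLD = 0.6  # hypothetical recognition threshold for ordinary words
# Hypothetical low-threshold items (for example, personally significant words).
THRESHOLDS = {"own name": 0.2, "fire": 0.25}


def effective_strength(strength, attended, gain=ATTENUATION_GAIN):
    """Attended input passes unchanged; unattended input is weakened, not blocked."""
    return strength if attended else strength * gain


def is_recognized(word, strength, attended, gain=ATTENUATION_GAIN):
    """A word is recognized if its remaining strength reaches its threshold."""
    threshold = THRESHOLDS.get(word, DEFAULT_THRESHOLD)
    return effective_strength(strength, attended, gain) >= threshold


# An ordinary unattended word is lost, but a low-threshold word gets through.
print(is_recognized("table", 0.8, attended=False))     # False
print(is_recognized("own name", 0.8, attended=False))  # True
# Setting gain to 0.0 mimics an all-or-none filter: nothing unattended survives.
print(is_recognized("own name", 0.8, attended=False, gain=0.0))  # False
```

Setting `gain=0.0` stands in for a strict filter in this sketch, which is why the low-threshold word is recognized under attenuation but not under the filter.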

The dual-route theory of reading aloud was first described in the early 1970s. This theory suggests that two separate mental mechanisms, or cognitive routes, are involved in reading aloud, with output of both mechanisms contributing to the pronunciation of a written stimulus.
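
A minimal Python sketch of the two routes is given below. It assumes one route that looks up stored whole-word pronunciations and another that applies letter-to-sound rules; the tiny lexicon, the rule table, the informal pronunciation codes, and the policy of letting the lookup win when a word is known are all hypothetical simplifications.

```python
# Hypothetical stored pronunciations, written in an informal made-up notation.
LEXICON = {"mint": "mInt", "pint": "paInt"}

# Hypothetical one-letter-to-one-sound rules for the rule-based route.
LETTER_SOUND_RULES = {"m": "m", "p": "p", "n": "n", "t": "t", "i": "I"}


def lexical_route(letter_string):
    """Look up a stored whole-word pronunciation; None if the word is unknown."""
    return LEXICON.get(letter_string)


def rule_route(letter_string):
    """Assemble a pronunciation letter by letter ('?' marks a missing rule)."""
    return "".join(LETTER_SOUND_RULES.get(ch, "?") for ch in letter_string)


def read_aloud(letter_string):
    """Run both routes and combine them (assumed policy: lookup wins if present)."""
    looked_up = lexical_route(letter_string)
    assembled = rule_route(letter_string)
    return {
        "lexical": looked_up,
        "rule_based": assembled,
        "spoken": looked_up if looked_up is not None else assembled,
        "conflict": looked_up is not None and looked_up != assembled,
    }


for item in ["mint", "pint", "nint"]:
    print(item, read_aloud(item))
```

In this toy setup the regular word "mint" gets the same output from both routes, the irregular word "pint" produces a conflict between them, and the nonword "nint" can only be pronounced by the rule-based route.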

Bilingual lexical access is an area of psycholinguistics that studies the activation or retrieval process of the mental lexicon for bilingual people.

In psychology, the transposed letter effect is a test of how a word is processed when two letters within the word are switched.
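
As an illustration of the manipulation, the following Python sketch generates transposed-letter versions of a word by switching two adjacent letters. The function name is hypothetical, and restricting the swaps to adjacent interior letters (keeping the first and last letters in place) is an assumption made here for simplicity.

```python
def transposed_letter_variants(word):
    """Return strings made by swapping each pair of adjacent interior letters."""
    variants = []
    # Start at index 1 and stop before the final letter so both ends stay fixed.
    for i in range(1, len(word) - 2):
        if word[i] != word[i + 1]:  # swapping identical letters changes nothing
            chars = list(word)
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            variants.append("".join(chars))
    return variants


print(transposed_letter_variants("judge"))  # ['jduge', 'jugde']
```

Strings such as "jugde" can then be used as stimuli alongside the original word. Words of three letters or fewer yield no variants under this assumption.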