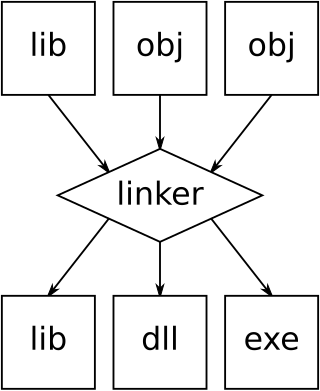

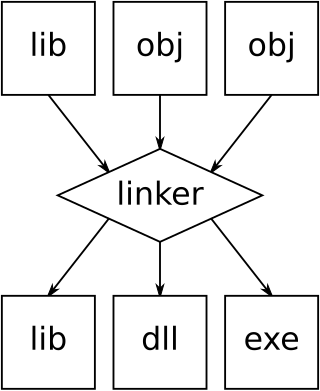

In computing, a linker or link editor is a computer system program that takes one or more object files and combines them into a single executable file, library file, or another "object" file.

In software engineering, multitier architecture is a client–server architecture in which presentation, application processing and data management functions are physically separated. The most widespread use of multitier architecture is the three-tier architecture.

In computer science, a library is a collection of read-only resources that is leveraged during software development to implement a computer program.

Db2 is a family of data management products, including database servers, developed by IBM. It initially supported the relational model, but was extended to support object–relational features and non-relational structures like JSON and XML. The brand name was originally styled as DB2 until 2017, when it changed to its present form.

In computer science, inter-process communication (IPC), also spelled interprocess communication, are the mechanisms provided by an operating system for processes to manage shared data. Typically, applications can use IPC, categorized as clients and servers, where the client requests data and the server responds to client requests. Many applications are both clients and servers, as commonly seen in distributed computing.

In computer systems a loader is the part of an operating system that is responsible for loading programs and libraries. It is one of the essential stages in the process of starting a program, as it places programs into memory and prepares them for execution. Loading a program involves either memory-mapping or copying the contents of the executable file containing the program instructions into memory, and then carrying out other required preparatory tasks to prepare the executable for running. Once loading is complete, the operating system starts the program by passing control to the loaded program code.

In software engineering, service-oriented architecture (SOA) is an architectural style that focuses on discrete services instead of a monolithic design. SOA is a good choice for system integration. By consequence, it is also applied in the field of software design where services are provided to the other components by application components, through a communication protocol over a network. A service is a discrete unit of functionality that can be accessed remotely and acted upon and updated independently, such as retrieving a credit card statement online. SOA is also intended to be independent of vendors, products and technologies.

In computer science, a static library or statically linked library is a set of routines, external functions and variables which are resolved in a caller at compile-time and copied into a target application by a compiler, linker, or binder, producing an object file and a stand-alone executable. This executable and the process of compiling it are both known as a static build of the program. Historically, libraries could only be static. Static libraries are either merged with other static libraries and object files during building/linking to form a single executable or loaded at run-time into the address space of their corresponding executable at a static memory offset determined at compile-time/link-time.

A hybrid kernel is an operating system kernel architecture that attempts to combine aspects and benefits of microkernel and monolithic kernel architectures used in operating systems.

An architectural pattern is a general, reusable resolution to a commonly occurring problem in software architecture within a given context. The architectural patterns address various issues in software engineering, such as computer hardware performance limitations, high availability and minimization of a business risk. Some architectural patterns have been implemented within software frameworks. There are two main categories of architectural patterns: monolithic and distributed.

Legacy modernization, also known as software modernization or platform modernization, refers to the conversion, rewriting or porting of a legacy system to modern computer programming languages, architectures, software libraries, protocols or hardware platforms. Legacy transformation aims to retain and extend the value of the legacy investment through migration to new platforms to benefit from the advantage of the new technologies.

Domain-driven design (DDD) is a major software design approach, focusing on modeling software to match a domain according to input from that domain's experts. DDD is against the idea of having a single unified model; instead it divides a large system into bounded contexts, each of which have their own model.

The kernel is a computer program at the core of a computer's operating system and generally has complete control over everything in the system. The kernel is also responsible for preventing and mitigating conflicts between different processes. It is the portion of the operating system code that is always resident in memory and facilitates interactions between hardware and software components. A full kernel controls all hardware resources via device drivers, arbitrates conflicts between processes concerning such resources, and optimizes the utilization of common resources e.g. CPU & cache usage, file systems, and network sockets. On most systems, the kernel is one of the first programs loaded on startup. It handles the rest of startup as well as memory, peripherals, and input/output (I/O) requests from software, translating them into data-processing instructions for the central processing unit.

An application programming interface (API) is a way for two or more computer programs or components to communicate with each other. It is a type of software interface, offering a service to other pieces of software. A document or standard that describes how to build or use such a connection or interface is called an API specification. A computer system that meets this standard is said to implement or expose an API. The term API may refer either to the specification or to the implementation. Whereas a system's user interface dictates how its end-users interact with the system in question, its API dictates how to write code that takes advantage of that system's capabilities.

In software engineering, service virtualization or service virtualisation is a method to emulate the behavior of specific components in heterogeneous component-based applications such as API-driven applications, cloud-based applications and service-oriented architectures. It is used to provide software development and QA/testing teams access to dependent system components that are needed to exercise an application under test (AUT), but are unavailable or difficult-to-access for development and testing purposes. With the behavior of the dependent components "virtualized", testing and development can proceed without accessing the actual live components. Service virtualization is recognized by vendors, industry analysts, and industry publications as being different than mocking. See here for a Comparison of API simulation tools.

In software engineering, a microservice architecture is an architectural pattern that arranges an application as a collection of loosely coupled, fine-grained services, communicating through lightweight protocols. One of its goals is that teams can develop and deploy their services independently of others. This is achieved by the reduction of several dependencies in the code base, allowing developers to evolve their services with limited restrictions from users, and for additional complexity to be hidden from users. As a consequence, organizations are able to develop software with fast growth and size, as well as use off-the-shelf services more easily. Communication requirements are reduced. These benefits come at a cost to maintaining the decoupling. So, microservice architecture can be a good choice only if the application is too complex to manage as a monolith. Interfaces need to be designed carefully and treated as a public API. One technique that is used is having multiple interfaces on the same service, or multiple versions of the same service, so as to not disrupt existing users of the code.

Serverless computing is a cloud computing execution model in which the cloud provider allocates machine resources on demand, taking care of the servers on behalf of their customers. "Serverless" is a misnomer in the sense that servers are still used by cloud service providers to execute code for developers. However, developers of serverless applications are not concerned with capacity planning, configuration, management, maintenance, fault tolerance, or scaling of containers, virtual machines, or physical servers. When an app is not in use, there are no computing resources allocated to the app. Pricing is based on the actual amount of resources consumed by an application. It can be a form of utility computing.

A Micro-app is a super-specialized application designed to perform one task or use case with the only objective of doing it well. They follow the single responsibility principle, which states that "a class should have one and only one reason to change." Micro applications help developers create less complex applications while reducing costs by breaking down monolithic systems into groups of independent services acting as one system.

Cloud native computing is an approach in software development that utilizes cloud computing to "build and run scalable applications in modern, dynamic environments such as public, private, and hybrid clouds". These technologies, such as containers, microservices, serverless functions, cloud native processors and immutable infrastructure, deployed via declarative code are common elements of this architectural style. Cloud native technologies focus on minimizing users' operational burden.

In programming, the strangler fig pattern or strangler pattern is an architectural pattern that involves wrapping old code, with the intent of redirecting it to newer code or to log uses of the old code. Created by Martin Fowler, its name derives from the strangler fig plant, which tends to grow on trees and eventually kill them.