Related Research Articles

In computer science, an array is a data structure consisting of a collection of elements, each of the same memory size and each identified by at least one array index or key. An array is stored such that the position of each element can be computed from its index tuple by a mathematical formula. The simplest type of data structure is a linear array, also called a one-dimensional array.
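As a rough illustration of computing an element's position from its index tuple, the sketch below assumes a row-major (C-style) layout, which is only one of several possible conventions. The function names `row_major_offset` and `element_address`, and the base address used in the example, are hypothetical.

```python
def row_major_offset(index, shape):
    """Return the flat element offset of `index` in a row-major array of `shape`."""
    if len(index) != len(shape):
        raise ValueError("index and shape must have the same number of dimensions")
    offset = 0
    for i, n in zip(index, shape):
        if not 0 <= i < n:
            raise IndexError(f"index {i} out of range for dimension of size {n}")
        # Horner-style accumulation: each step scales by the size of the current dimension.
        offset = offset * n + i
    return offset


def element_address(base, index, shape, element_size):
    """Byte address of an element, given equal-sized elements stored contiguously."""
    return base + row_major_offset(index, shape) * element_size


# Element (1, 2) of a 3x4 array of 8-byte values stored at a made-up base address.
offset = row_major_offset((1, 2), (3, 4))
address = element_address(1000, (1, 2), (3, 4), 8)
```

Because every element has the same size, the address is a simple linear function of the offset, which is what makes constant-time indexing possible.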

In statistics, a central tendency is a central or typical value for a probability distribution.
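The arithmetic mean, the median, and the mode are three common measures of central tendency. A minimal sketch using Python's standard `statistics` module is shown below; the sample values are invented for illustration.

```python
import statistics

# Hypothetical sample, chosen only to make the three measures differ.
sample = [2, 3, 3, 5, 7, 10]

mean_value = statistics.mean(sample)      # sum of values divided by their count
median_value = statistics.median(sample)  # middle value (average of the two middle values here)
mode_value = statistics.mode(sample)      # most frequently occurring value
```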

In computing, a data warehouse, also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is a core component of business intelligence. Data warehouses are central repositories of data integrated from disparate sources. They store current and historical data organized so as to make it easy to create reports, query and get insights from the data. Unlike databases, they are intended to be used by analysts and managers to help make organizational decisions.

In computing, online analytical processing, or OLAP, is an approach to quickly answer multi-dimensional analytical (MDA) queries. The term OLAP was created as a slight modification of the traditional database term online transaction processing (OLTP). OLAP is part of the broader category of business intelligence, which also encompasses relational databases, report writing and data mining. Typical applications of OLAP include business reporting for sales, marketing, management reporting, business process management (BPM), budgeting and forecasting, financial reporting and similar areas, with new applications emerging, such as agriculture.

A table is an arrangement of information or data, typically in rows and columns, or possibly in a more complex structure. Tables are widely used in communication, research, and data analysis. Tables appear in print media, handwritten notes, computer software, architectural ornamentation, traffic signs, and many other places. The precise conventions and terminology for describing tables vary depending on the context. Further, tables differ significantly in variety, structure, flexibility, notation, representation and use. Information or data conveyed in table form is said to be in tabular format. In books and technical articles, tables are typically presented apart from the main text in numbered and captioned floating blocks.

In mathematics, a time series is a series of data points indexed in time order. Most commonly, a time series is a sequence taken at successive equally spaced points in time. Thus it is a sequence of discrete-time data. Examples of time series are heights of ocean tides, counts of sunspots, and the daily closing value of the Dow Jones Industrial Average.

An OLAP cube is a multi-dimensional array of data. Online analytical processing (OLAP) is a computer-based technique of analyzing data to look for insights. The term cube here refers to a multi-dimensional dataset, which is also sometimes called a hypercube if the number of dimensions is greater than three.
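One way to picture a small cube is as a mapping from coordinate tuples to a measure. The sketch below is only an illustration under that assumption, not how any particular OLAP engine stores data; the dimension names, the sales figures, and the helpers `roll_up` and `slice_cube` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical sales facts: (product, region, quarter) -> units sold.
DIMENSIONS = ("product", "region", "quarter")
cube = {
    ("widget", "north", "Q1"): 10,
    ("widget", "south", "Q1"): 4,
    ("widget", "north", "Q2"): 7,
    ("gadget", "north", "Q1"): 3,
    ("gadget", "south", "Q2"): 8,
}


def roll_up(cube, keep):
    """Sum the measure over every dimension not listed in `keep`."""
    positions = [DIMENSIONS.index(name) for name in keep]
    totals = defaultdict(int)
    for coords, value in cube.items():
        totals[tuple(coords[p] for p in positions)] += value
    return dict(totals)


def slice_cube(cube, dimension, member):
    """Fix one dimension to a single member and drop it from the coordinates."""
    pos = DIMENSIONS.index(dimension)
    return {
        coords[:pos] + coords[pos + 1:]: value
        for coords, value in cube.items()
        if coords[pos] == member
    }


units_by_product = roll_up(cube, ("product",))
first_quarter = slice_cube(cube, "quarter", "Q1")
```

Rolling up reduces the number of dimensions by aggregation, while slicing reduces it by selection; both return a smaller cube of the same kind.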

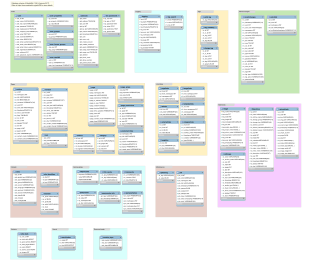

In computing, a snowflake schema or snowflake model is a logical arrangement of tables in a multidimensional database such that the entity relationship diagram resembles a snowflake shape. The snowflake schema is represented by centralized fact tables which are connected to multiple dimensions. "Snowflaking" is a method of normalizing the dimension tables in a star schema. When it is completely normalized along all the dimension tables, the resultant structure resembles a snowflake with the fact table in the middle. The principle behind snowflaking is normalization of the dimension tables by removing low cardinality attributes and forming separate tables.
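A minimal sketch of the idea, using Python's built-in `sqlite3` module: a fact table references a product dimension, and the low-cardinality category attribute is moved out of that dimension into its own table. All table names, column names, and rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Sub-dimension split out of the product dimension (the "snowflaking" step).
    CREATE TABLE category (category_id INTEGER PRIMARY KEY, name TEXT);
    -- Dimension table, normalized so that it refers to category by key.
    CREATE TABLE product (
        product_id INTEGER PRIMARY KEY,
        name TEXT,
        category_id INTEGER REFERENCES category(category_id)
    );
    -- Central fact table referencing the dimension.
    CREATE TABLE sales (
        sale_id INTEGER PRIMARY KEY,
        product_id INTEGER REFERENCES product(product_id),
        units INTEGER
    );
    INSERT INTO category VALUES (1, 'tools'), (2, 'toys');
    INSERT INTO product VALUES (1, 'hammer', 1), (2, 'wrench', 1), (3, 'kite', 2);
    INSERT INTO sales VALUES (1, 1, 5), (2, 2, 3), (3, 3, 4), (4, 1, 2);
""")

# Reaching the category attribute takes two joins: fact -> dimension -> sub-dimension.
units_by_category = conn.execute("""
    SELECT c.name, SUM(s.units)
    FROM sales AS s
    JOIN product AS p ON p.product_id = s.product_id
    JOIN category AS c ON c.category_id = p.category_id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()
```

In a star schema the category name would sit directly in the product table, so the same query would need only one join; the extra join is the price paid for the normalization.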

Essbase is a multidimensional database management system (MDBMS) that provides a platform upon which to build analytic applications. Essbase began as a product from Arbor Software, which merged with Hyperion Software in 1998. Oracle Corporation acquired Hyperion Solutions Corporation in 2007. Until late 2005 IBM also marketed an OEM version of Essbase as DB2 OLAP Server.

In computer programming contexts, a data cube is a multi-dimensional ("n-D") array of values. Typically, the term data cube is applied in contexts where these arrays are massively larger than the hosting computer's main memory; examples include multi-terabyte/petabyte data warehouses and time series of image data.

A dimension is a structure that categorizes facts and measures in order to enable users to answer business questions. Commonly used dimensions are people, products, place and time.

A database model is a type of data model that determines the logical structure of a database. It fundamentally determines in which manner data can be stored, organized and manipulated. The most popular example of a database model is the relational model, which uses a table-based format.

In econometrics, multidimensional panel data are data on a phenomenon observed over three or more dimensions. This contrasts with panel data, which are observed over two dimensions. An example is a data set containing forecasts of one or more macroeconomic variables produced by multiple individuals, in multiple series, at multiple time periods, and for multiple horizons.
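The forecast example can be sketched as a mapping keyed by three dimensions. The forecaster labels, years, horizons, and values below are invented for illustration, and the `consensus` helper is a hypothetical name for averaging across the individual dimension.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical inflation forecasts (percent), indexed by three dimensions:
# (forecaster, target_year, horizon_in_quarters).
forecasts = {
    ("A", 2001, 4): 2.0,
    ("B", 2001, 4): 3.0,
    ("A", 2001, 1): 2.5,
    ("B", 2001, 1): 2.5,
    ("A", 2002, 4): 1.0,
    ("B", 2002, 4): 2.0,
}


def consensus(forecasts):
    """Average across forecasters for each (target_year, horizon) pair."""
    grouped = defaultdict(list)
    for (_, target, horizon), value in forecasts.items():
        grouped[(target, horizon)].append(value)
    return {key: mean(values) for key, values in grouped.items()}


consensus_by_target = consensus(forecasts)
```

Ordinary two-dimensional panel data would need only an (individual, period) key; the horizon is the third dimension here.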

The methodology of econometrics is the study of the range of differing approaches to undertaking econometric analysis.

The dimensional fact model (DFM) is an ad hoc graphical formalism specifically devised to support the conceptual modeling phase of a data warehouse project. DFM can be used by both analysts and non-technical users. A short period of work is sufficient to produce a clear and exhaustive representation of multidimensional concepts. It can be used from the earliest steps of the data warehouse life cycle to rapidly devise a conceptual model to share with customers.

The following is provided as an overview of and topical guide to databases:

An array database management system, or array DBMS, provides database services specifically for arrays, that is, homogeneous collections of data items sitting on a regular grid of one, two, or more dimensions. Arrays are often used to represent sensor, simulation, image, or statistics data. Such arrays tend to be big data, with single objects frequently reaching terabyte and soon petabyte sizes; for example, earth and space observation archives typically grow by terabytes a day. Array databases aim to offer flexible, scalable storage and retrieval for this category of information.

Multiple factor analysis (MFA) is a factorial method devoted to the study of tables in which a group of individuals is described by a set of variables (quantitative and / or qualitative) structured in groups. It is a multivariate method from the field of ordination used to simplify multidimensional data structures. MFA treats all involved tables in the same way (symmetrical analysis). It may be seen as an extension of:

In signal processing, multidimensional signal processing covers all signal processing done using multidimensional signals and systems. While multidimensional signal processing is a subset of signal processing, it is unique in that it deals specifically with data that can only be adequately described using more than one dimension. In m-D digital signal processing, useful data is sampled in more than one dimension. Examples of this are image processing and multi-sensor radar detection. Both of these examples use multiple sensors to sample signals and form images based on the manipulation of these multiple signals. Processing in multiple dimensions (m-D) requires more complex algorithms than the 1-D case to handle calculations such as the fast Fourier transform, because there are more degrees of freedom. In some cases, m-D signals and systems can be reduced to single-dimension signal processing methods, if the systems under consideration are separable.
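The discrete Fourier transform is one case where separability applies: a 2-D transform can be computed by running 1-D transforms along the rows and then along the columns. The sketch below uses a naive O(n²) DFT rather than a fast Fourier transform, purely to keep the example short; the function names and the test grid are hypothetical.

```python
import cmath


def dft_1d(values):
    """Naive 1-D discrete Fourier transform (not an FFT)."""
    n = len(values)
    return [
        sum(values[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def dft_2d_separable(grid):
    """2-D DFT computed as 1-D DFTs along rows, then along columns."""
    rows_done = [dft_1d(row) for row in grid]
    cols_done = [dft_1d(list(col)) for col in zip(*rows_done)]
    return [list(row) for row in zip(*cols_done)]  # transpose back to [u][v]


def dft_2d_direct(grid):
    """2-D DFT from the double-sum definition, for comparison."""
    m, n = len(grid), len(grid[0])
    return [
        [
            sum(
                grid[r][c] * cmath.exp(-2j * cmath.pi * (u * r / m + v * c / n))
                for r in range(m)
                for c in range(n)
            )
            for v in range(n)
        ]
        for u in range(m)
    ]


grid = [[1, 2, 3], [4, 5, 6]]
separable = dft_2d_separable(grid)
direct = dft_2d_direct(grid)
```

The two results agree up to floating-point rounding, but the separable version performs only 1-D transforms, which is why 1-D algorithms can be reused for separable m-D problems.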

The functional database model is used to support analytics applications such as financial planning and performance management. The functional database model, or the functional model for short, is different from but complementary to the relational model. The functional model is also distinct from other similarly named concepts, including the DAPLEX functional database model and functional language databases.
