In telecommunications, RS-232 or Recommended Standard 232 is a standard originally introduced in 1960 for the serial transmission of data. It formally defines the signals connecting data terminal equipment (DTE), such as a computer terminal, and data circuit-terminating equipment (DCE), such as a modem. The standard defines the electrical characteristics and timing of signals, the meaning of signals, and the physical size and pinout of connectors. The current version of the standard is TIA-232-F Interface Between Data Terminal Equipment and Data Circuit-Terminating Equipment Employing Serial Binary Data Interchange, issued in 1997. The RS-232 standard was once commonly used in computer serial ports and is still widely used in industrial communication devices.
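
As a rough illustration of signal levels and timing, the sketch below encodes one character in the asynchronous 8N1 framing (one start bit, eight data bits sent least-significant bit first, one stop bit) that is commonly carried over RS-232 links. That framing is a UART convention rather than something the electrical standard itself defines. RS-232 represents a logic 1 (mark) as a negative voltage and a logic 0 (space) as a positive voltage; the ±12 V drive levels and the function names here are assumptions chosen for illustration.

```python
from __future__ import annotations

# Assumed driver levels for illustration; real RS-232 drivers vary.
MARK_VOLTS = -12.0   # logic 1 (mark, also the idle state) is a negative voltage
SPACE_VOLTS = 12.0   # logic 0 (space) is a positive voltage


def frame_8n1(byte: int) -> list[int]:
    """Return the line bits for one character: start bit, 8 data bits LSB first, stop bit."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must fit in 8 bits")
    data_bits = [(byte >> i) & 1 for i in range(8)]  # least-significant bit first
    return [0] + data_bits + [1]  # start bit is a space (0), stop bit is a mark (1)


def bits_to_volts(bits: list[int]) -> list[float]:
    """Map logic levels to the assumed RS-232 line voltages."""
    return [MARK_VOLTS if bit else SPACE_VOLTS for bit in bits]


def bit_time_us(baud: int) -> float:
    """Duration of one bit on the line, in microseconds."""
    return 1_000_000 / baud


if __name__ == "__main__":
    bits = frame_8n1(ord("A"))           # 'A' is 0x41
    print(bits)                          # [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]
    print(bits_to_volts(bits))
    print(round(bit_time_us(9600), 2))   # 104.17
```

At 9600 baud each bit lasts about 104 µs, so one 10-bit frame occupies roughly 1.04 ms of line time.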

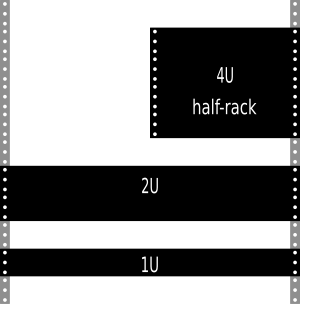

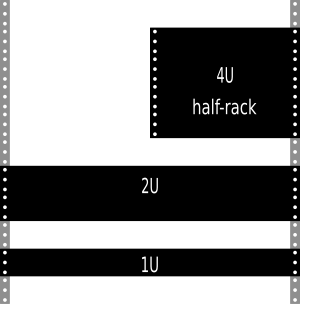

A 19-inch rack is a standardized frame or enclosure for mounting multiple electronic equipment modules. Each module has a front panel that is 19 inches (482.6 mm) wide. The 19-inch dimension includes the edges or ears that protrude from each side of the equipment, allowing the module to be fastened to the rack frame with screws or bolts. Common uses include computer servers, telecommunications equipment and networking hardware, audiovisual production gear, music production equipment, and scientific equipment.

In computing, a server is a piece of computer hardware or software that provides functionality for other programs or devices, called "clients". This architecture is called the client–server model. Servers can provide various functionalities, often called "services", such as sharing data or resources among multiple clients or performing computations for a client. A single server can serve multiple clients, and a single client can use multiple servers. A client process may run on the same device or may connect over a network to a server on a different device. Typical servers are database servers, file servers, mail servers, print servers, web servers, game servers, and application servers.
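
The client–server model can be sketched with Python's standard `socketserver` and `socket` modules. In this minimal example the server offers a single toy "service" (upper-casing one line of text) and handles each client on its own thread, while each client connects over TCP to request it. The service, the loopback address, and the function names are illustrative choices, not part of any standard.

```python
from __future__ import annotations

import socket
import socketserver
import threading


class UpperCaseHandler(socketserver.StreamRequestHandler):
    """Handles one client connection: reads a line and replies with it upper-cased."""

    def handle(self) -> None:
        line = self.rfile.readline()
        self.wfile.write(line.upper())


def start_server() -> socketserver.ThreadingTCPServer:
    """Start a threaded server on an ephemeral loopback port (one thread per client)."""
    server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), UpperCaseHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def request(address: tuple[str, int], text: str) -> str:
    """Act as a client: connect, send one line, and return the server's reply."""
    with socket.create_connection(address) as conn:
        conn.sendall(text.encode("utf-8") + b"\n")
        with conn.makefile("rb") as reader:
            return reader.readline().rstrip(b"\n").decode("utf-8")


if __name__ == "__main__":
    server = start_server()
    try:
        # Several clients can use the same server.
        for word in ("hello", "client", "server"):
            print(request(server.server_address, word))
    finally:
        server.shutdown()
        server.server_close()
```

Here the client and server run in the same process over the loopback interface; the same `request` function would work unchanged against a server on a different device, given its address.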

A data center or data centre is a building, a dedicated space within a building, or a group of buildings used to house computer systems and associated components, such as telecommunications and storage systems.

Computer cooling is required to remove the waste heat produced by computer components, to keep components within permissible operating temperature limits. Components that are susceptible to temporary malfunction or permanent failure if overheated include integrated circuits such as central processing units (CPUs), chipsets, graphics cards, and hard disk drives.

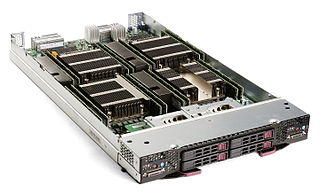

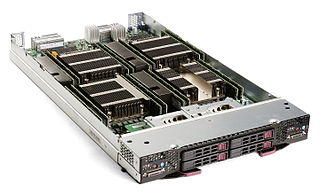

A blade server is a stripped-down server computer with a modular design optimized to minimize the use of physical space and energy. Many components are removed from blade servers to save space, minimize power consumption, and address other considerations, while each blade still has all the functional components needed to be considered a computer. Unlike a rack-mount server, a blade server fits inside a blade enclosure, which can hold multiple blade servers and provides services such as power, cooling, networking, various interconnects, and management. Together, blades and the blade enclosure form a blade system, which may itself be rack-mounted. Different blade providers follow different principles regarding what to include in the blade itself and in the blade system as a whole.

TURBOchannel is an open computer bus developed by DEC during the late 1980s and early 1990s. Although it is open for any vendor to implement in their own systems, it was mostly used in Digital's own systems, such as the MIPS-based DECstation and DECsystem systems, the VAXstation 4000, and the Alpha-based DEC 3000 AXP. Digital abandoned TURBOchannel in favor of the EISA and PCI buses in late 1994, with the introduction of its AlphaStation and AlphaServer systems.

A rack unit is a unit of measure defined as 1¾ inches (44.45 mm). It is most frequently used as a measurement of the overall height of 19-inch and 23-inch rack frames, as well as the height of equipment that mounts in these frames, whereby the height of the frame or equipment is expressed as multiples of rack units. For example, a typical full-size rack cage is 42U high, while equipment is typically 1U, 2U, 3U, or 4U high.
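
Because rack heights are integer multiples of 1U, planning what fits in a rack is simple arithmetic. The sketch below converts rack units to millimetres and counts the units left after mounting some equipment; the equipment mix and function names are hypothetical, and it ignores real-world constraints such as cable management panels or weight limits.

```python
from __future__ import annotations

INCH_MM = 25.4
RACK_UNIT_MM = 1.75 * INCH_MM  # 1U = 1.75 in = 44.45 mm


def height_mm(units: int) -> float:
    """Height of a given number of rack units, in millimetres."""
    return units * RACK_UNIT_MM


def free_units(rack_units: int, equipment_units: list[int]) -> int:
    """Rack units left after mounting the listed equipment (negative means it does not fit)."""
    return rack_units - sum(equipment_units)


if __name__ == "__main__":
    rack = 42
    equipment = [1, 1, 2, 2, 4]  # hypothetical mix of 1U, 2U and 4U devices
    print(f"{rack}U rack: {height_mm(rack):.1f} mm of mounting height")  # 1866.9 mm
    print(free_units(rack, equipment), "U free")                         # 32 U free
```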

All electronic devices and circuitry generate excess heat and thus require thermal management to improve reliability and prevent premature failure. The amount of heat output is equal to the power input, if there are no other energy interactions. There are several techniques for cooling, including various styles of heat sinks, thermoelectric coolers, forced air systems and fans, heat pipes, and others. In cases of extremely low environmental temperatures, it may actually be necessary to heat the electronic components to achieve satisfactory operation.
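
Since the heat a component dissipates equals the power it draws (absent other energy interactions), a common first estimate for a heat-sink design treats heat flow like current through thermal resistances in series: T_j = T_ambient + P × (θ_jc + θ_cs + θ_sa), where θ_jc, θ_cs and θ_sa are the junction-to-case, case-to-sink and sink-to-ambient resistances in °C/W. The sketch below applies this steady-state model; the 65 W load, the resistance values, and the 100 °C limit are hypothetical numbers, not data for any particular part.

```python
from __future__ import annotations


def junction_temp_c(power_w: float, ambient_c: float, resistances_c_per_w: list[float]) -> float:
    """Steady-state junction temperature for heat flowing through series thermal resistances."""
    return ambient_c + power_w * sum(resistances_c_per_w)


def max_sink_resistance(power_w: float, ambient_c: float, limit_c: float,
                        theta_jc: float, theta_cs: float) -> float:
    """Largest sink-to-ambient resistance (°C/W) that keeps the junction at or below limit_c."""
    return (limit_c - ambient_c) / power_w - theta_jc - theta_cs


if __name__ == "__main__":
    power = 65.0                                  # W dissipated (= electrical power drawn)
    theta_jc, theta_cs, theta_sa = 0.3, 0.1, 0.5  # hypothetical °C/W values
    tj = junction_temp_c(power, 35.0, [theta_jc, theta_cs, theta_sa])
    print(f"junction: {tj:.1f} °C")               # junction: 93.5 °C
    limit = max_sink_resistance(power, 35.0, 100.0, theta_jc, theta_cs)
    print(f"heat sink must be at most {limit:.2f} °C/W")
```

A lower sink-to-ambient resistance, obtained for example with a larger heat sink or more airflow, lowers the junction temperature in direct proportion to the dissipated power.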

A power distribution unit (PDU) is a device fitted with multiple outputs designed to distribute electric power, especially to racks of computers and networking equipment located within a data center. Because power protection and management are ongoing challenges for data centers, many operators rely on PDU monitoring to improve efficiency and uptime and to plan for growth. For data center applications, the power requirement is typically much larger than that of home or office power strips, with power inputs as large as 22 kVA or even greater. Most large data centers use PDUs with 3-phase power input and 1-phase power output. There are two main categories of PDUs: basic PDUs and intelligent (networked) PDUs, or iPDUs. Basic PDUs simply distribute power from the input to a number of outlets. Intelligent PDUs normally have an intelligence module that enables remote management of power metering information, outlet on/off control, and/or alarms. Some advanced PDUs also allow users to manage external sensors for conditions such as temperature, humidity, and airflow.
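
To see what a 22 kVA three-phase input means in terms of current, the standard relation for a balanced three-phase load, S = √3 × V_LL × I, can be applied. The sketch below assumes a balanced load and a 400 V line-to-line supply (about 230 V line-to-neutral for the single-phase outputs); supplies with lower line voltages, such as 208 V, draw proportionally higher currents for the same power. The function names are illustrative.

```python
import math


def line_current_a(apparent_power_va: float, line_to_line_v: float) -> float:
    """Line current of a balanced three-phase load: I = S / (sqrt(3) * V_LL)."""
    return apparent_power_va / (math.sqrt(3) * line_to_line_v)


def line_to_neutral_v(line_to_line_v: float) -> float:
    """Phase voltage seen by a single-phase outlet wired line-to-neutral."""
    return line_to_line_v / math.sqrt(3)


if __name__ == "__main__":
    s_va, v_ll = 22_000.0, 400.0
    print(f"{line_current_a(s_va, v_ll):.1f} A per line")             # 31.8 A per line
    print(f"{line_to_neutral_v(v_ll):.0f} V per single-phase outlet")  # 231 V per single-phase outlet
    # With a balanced load, each phase carries one third of the total power.
    print(f"{s_va / 3:.0f} VA per phase")                              # 7333 VA per phase
```

Dividing the per-phase power by the line-to-neutral voltage gives the same line current, which is a quick consistency check on the numbers.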

A server room is a room, usually air-conditioned, devoted to the continuous operation of computer servers. An entire building or station devoted to this purpose is a data center.

A riser card is a printed circuit board that allows additional expansion cards to be connected to a computer motherboard.

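Power usage effectiveness (PUE) is a ratio that describes how efficiently a computer data center uses energy; specifically, how much energy is used by the computing equipment in contrast to cooling and other overhead that supports the equipment. It is calculated as the total energy used by the facility divided by the energy delivered to the computing equipment, so an ideal data center would have a PUE of 1.0.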
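
Because PUE is total facility energy divided by IT equipment energy, it can be computed from two meter readings over the same period. The sketch below also reports the non-IT overhead; the readings and function names are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy (ideal = 1.0)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility total cannot be less than the IT load it includes")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Energy spent on cooling, power distribution losses, lighting and other non-IT overhead."""
    return total_facility_kwh - it_equipment_kwh


if __name__ == "__main__":
    total, it = 1_500.0, 1_000.0     # hypothetical readings for the same period
    print(pue(total, it))            # 1.5
    print(overhead_kwh(total, it))   # 500.0
```

A PUE of 1.5 means that for every kilowatt-hour reaching the computing equipment, another half kilowatt-hour goes to supporting infrastructure.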

The Open Compute Project (OCP) is an organization that shares designs of data center products and best practices among companies, including Arm, Meta, IBM, Wiwynn, Intel, Nokia, Google, Microsoft, Seagate Technology, Dell, Rackspace, Hewlett Packard Enterprise, NVIDIA, Cisco, Goldman Sachs, Fidelity, Lenovo and Alibaba Group.

The HP Performance Optimized Datacenter (POD) is a range of three modular data centers manufactured by HP.

Energy Logic is a vendor-neutral approach to achieving energy efficiency in data centers. Developed and initially released in 2007, the Energy Logic efficiency model suggests ten holistic actions – encompassing IT equipment as well as traditional data center infrastructure – guided by the principles dictated by the "Cascade Effect."

Modular crate electronics are a general type of electronics and support infrastructure commonly used for trigger electronics and data acquisition in particle detectors. These types of electronics are common in such detectors because all the electronic pathways are made by discrete physical cables connecting logic blocks on the front panels of modules. This allows circuits to be designed, built, tested, and deployed very quickly as an experiment is being put together. When the experiment is done, the modules can all be removed and reused.

Immersion cooling, also known as "direct liquid cooling", is a technique used for computer cooling, battery cooling, and motor cooling in which electrical and electronic components, including complete servers and storage devices, are mostly or fully submerged in a thermally conductive but electrically insulating liquid coolant. Heat is removed from a system by putting the coolant in direct contact with hot components, and circulating the heated liquid through heat exchangers. This practice is highly effective because liquid coolants can absorb more heat from the system, and are more easily circulated through the system, than air.
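
The advantage of liquid over air shows up in the volume of coolant needed to carry a given heat load at a given temperature rise: V̇ = P / (ρ · c_p · ΔT), where ρ is density and c_p is specific heat. The sketch below compares air with a generic dielectric oil. The oil properties (ρ ≈ 850 kg/m³, c_p ≈ 1900 J/(kg·K)) are illustrative assumptions rather than data for any specific coolant, and the 10 kW load with a 10 K rise is hypothetical.

```python
def volumetric_flow_m3_s(heat_w: float, density_kg_m3: float,
                         specific_heat_j_kg_k: float, delta_t_k: float) -> float:
    """Coolant volume flow needed to absorb heat_w with a temperature rise of delta_t_k."""
    return heat_w / (density_kg_m3 * specific_heat_j_kg_k * delta_t_k)


# (density in kg/m^3, specific heat in J/(kg*K))
AIR = (1.2, 1005.0)               # approximate values for air near room temperature
DIELECTRIC_OIL = (850.0, 1900.0)  # assumed, illustrative values for a dielectric liquid

if __name__ == "__main__":
    heat, rise = 10_000.0, 10.0
    air = volumetric_flow_m3_s(heat, *AIR, rise)
    oil = volumetric_flow_m3_s(heat, *DIELECTRIC_OIL, rise)
    print(f"air: {air:.3f} m^3/s, oil: {oil * 1000:.2f} L/s, ratio: {air / oil:.0f}x")
```

Under these assumptions the liquid needs more than a thousand times less volume flow than air to remove the same heat, which is why it can be circulated so much more easily.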

A wireless data center is a type of data center that uses wireless communication technology instead of cables to store, process, and retrieve data for enterprises. Wireless data centers were developed as a solution to growing cabling complexity and hotspots. The approach was introduced by Shin et al., who replaced all cables with 60 GHz wireless connections in the Cayley data center design.