A 19-inch rack is a standardized frame or enclosure for mounting multiple electronic equipment modules. Each module has a front panel that is 19 inches (482.6 mm) wide. The 19-inch dimension includes the edges or ears that protrude from each side of the equipment, allowing the module to be fastened to the rack frame with screws or bolts. Common uses include computer servers, telecommunications equipment and networking hardware, audiovisual production gear, music production equipment, and scientific equipment.

A colocation center, or "carrier hotel", is a type of data centre where equipment, space, and bandwidth are available for rental to retail customers. Colocation facilities provide space, power, cooling, and physical security for the server, storage, and networking equipment of other firms, and also connect them to a variety of telecommunications and network service providers with a minimum of cost and complexity.

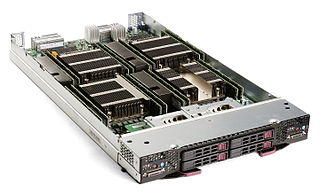

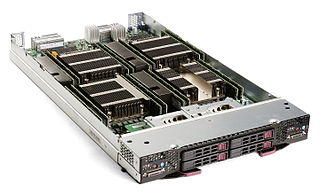

A server farm or server cluster is a collection of computer servers, usually maintained by an organization to supply server functionality far beyond the capability of a single machine. Server farms often consist of thousands of computers, which require a large amount of power to run and to keep cool. Even at its optimum performance level, a server farm incurs enormous financial and environmental costs. Server farms often include backup servers that can take over the functions of primary servers that fail. They are typically co-located with the network switches and/or routers that enable communication between different parts of the cluster and the cluster's users. Server "farmers" typically mount computers, routers, power supplies and related electronics on 19-inch racks in a server room or data center.

A data center or data centre is a building, a dedicated space within a building, or a group of buildings used to house computer systems and associated components, such as telecommunications and storage systems.

A central heating system provides warmth to a number of spaces within a building from one main source of heat. It is a component of heating, ventilation, and air conditioning systems, which can both cool and warm interior spaces.

Failover is switching to a redundant or standby computer server, system, hardware component or network upon the failure or abnormal termination of the previously active application, server, system, hardware component, or network in a computer network. Failover and switchover are essentially the same operation, except that failover is automatic and usually operates without warning, while switchover requires human intervention.
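The distinction between failover and switchover can be illustrated with a minimal Python sketch. The `Server` class, its `healthy` flag, and the `FailoverPool` name are hypothetical and introduced only for illustration; a production system would typically rely on heartbeats, health checks, or a cluster manager instead of a simple exception.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical stand-in for a real server; `healthy` simulates its state."""
    name: str
    healthy: bool = True

    def handle(self, request: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


class FailoverPool:
    """Routes requests to the active server and keeps a standby in reserve."""

    def __init__(self, primary: Server, standby: Server) -> None:
        self.active = primary
        self.standby = standby

    def switchover(self) -> None:
        """Manual role swap: an operator calls this deliberately."""
        self.active, self.standby = self.standby, self.active

    def handle(self, request: str) -> str:
        """Automatic failover: if the active server fails, swap roles and retry once."""
        try:
            return self.active.handle(request)
        except ConnectionError:
            self.switchover()  # same swap, but triggered without human intervention
            return self.active.handle(request)


pool = FailoverPool(Server("primary"), Server("standby"))
print(pool.handle("req-1"))
pool.active.healthy = False  # simulate an unexpected failure of the active server
print(pool.handle("req-2"))
```

In this sketch the role swap is the same operation in both cases; only the trigger differs, which mirrors the definition above.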

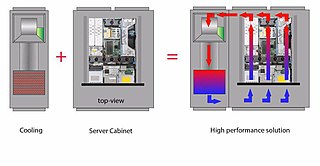

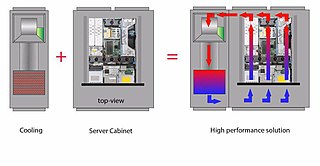

Computer cooling is required to remove the waste heat produced by computer components and to keep them within permissible operating temperature limits. Components that are susceptible to temporary malfunction or permanent failure if overheated include integrated circuits such as central processing units (CPUs) and chipsets, as well as graphics cards, hard disk drives, and solid-state drives.

A blade server is a stripped-down server computer with a modular design optimized to minimize the use of physical space and energy. Blade servers have many components removed to save space and minimize power consumption, among other considerations, while retaining all the functional components needed to be considered a computer. Unlike a rack-mount server, a blade server fits inside a blade enclosure, which can hold multiple blade servers and provides services such as power, cooling, networking, various interconnects and management. Together, blades and the blade enclosure form a blade system, which may itself be rack-mounted. Different blade providers have differing principles regarding what to include in the blade itself and in the blade system as a whole.

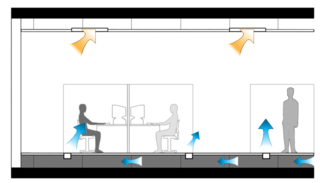

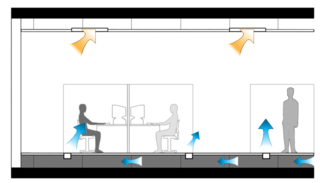

A raised floor provides an elevated structural floor above a solid substrate to create a hidden void for the passage of mechanical and electrical services. Raised floors are widely used in modern office buildings, and in specialized areas such as command centers, information technology data centers and computer rooms, where there is a requirement to route mechanical services and cables, wiring, and electrical supply. Such flooring can be installed at heights ranging from 2 inches (51 mm) to more than 4 feet (1.2 m) to suit the services accommodated beneath. Additional structural support and lighting are often provided when a floor is raised enough for a person to crawl or even walk beneath.

A technical room or equipment room is a room where technical equipment has been installed, for example for controlling a building's climate, electricity, water and wastewater. The equipment can include electric panels, central heating, heat network, machinery for ventilation systems, air conditioning, various types of pumps and boilers, as well as telecommunications equipment. It can serve one or more housing units or buildings.

All electronic devices and circuitry generate excess heat and thus require thermal management to improve reliability and prevent premature failure. The amount of heat output is equal to the power input, if there are no other energy interactions. There are several techniques for cooling including various styles of heat sinks, thermoelectric coolers, forced air systems and fans, heat pipes, and others. In cases of extreme low environmental temperatures, it may actually be necessary to heat the electronic components to achieve satisfactory operation.
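Because heat output equals power input when there are no other energy interactions, the cooling load of a group of devices can be estimated by summing their electrical power draws. The sketch below is illustrative only: the wattage figures are hypothetical, and the conversion factors used are the standard approximations 1 W ≈ 3.412 BTU/h and 1 ton of refrigeration = 12,000 BTU/h.

```python
BTU_PER_HOUR_PER_WATT = 3.412  # 1 W is approximately 3.412 BTU/h
BTU_PER_HOUR_PER_TON = 12_000  # 1 ton of refrigeration = 12,000 BTU/h


def heat_load_watts(power_draws_w):
    """Heat output equals power input when no energy leaves by other means."""
    return sum(power_draws_w)


def watts_to_btu_per_hour(watts):
    """Convert a heat load in watts to BTU per hour."""
    return watts * BTU_PER_HOUR_PER_WATT


def watts_to_tons(watts):
    """Convert a heat load in watts to tons of refrigeration."""
    return watts_to_btu_per_hour(watts) / BTU_PER_HOUR_PER_TON


# Hypothetical power draws (in watts) for the devices in one rack.
rack = [450, 450, 300, 150, 150]
load_w = heat_load_watts(rack)
print(f"{load_w} W = {watts_to_btu_per_hour(load_w):.0f} BTU/h "
      f"= {watts_to_tons(load_w):.2f} tons of cooling")
```

Nameplate power ratings usually overstate real consumption, so an estimate built this way tends to be an upper bound on the actual heat load.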

A fan coil unit (FCU), also known as a Vertical Fan Coil Unit (VFC), is a device consisting of a heat exchanger (coil) and a fan. FCUs are commonly used in HVAC systems of residential, commercial, and industrial buildings that use ducted split air conditioning or central plant cooling. FCUs are typically connected to ductwork and a thermostat to regulate the temperature of one or more spaces and to assist the main air handling unit for each space if used with chillers. The thermostat controls the fan speed and/or the flow of water or refrigerant to the heat exchanger using a control valve.
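The thermostat behaviour described above can be sketched as a simple proportional rule for cooling mode. This is an assumption made for illustration, not how any particular product works: the function name `fcu_cooling_command`, the 2 °C proportional band, and the three fan steps are all hypothetical.

```python
def fcu_cooling_command(room_temp_c, setpoint_c, band_c=2.0):
    """Return (fan_speed, valve_opening) for a cooling-mode fan coil unit.

    Hypothetical proportional logic: the valve opening rises linearly from
    0.0 to 1.0 as the room temperature climbs from the setpoint to
    setpoint + band_c, and the fan step is chosen from the same demand value.
    """
    error = room_temp_c - setpoint_c
    demand = min(max(error / band_c, 0.0), 1.0)  # clamp to the range [0, 1]
    if demand == 0.0:
        fan = "off"
    elif demand < 0.5:
        fan = "low"
    else:
        fan = "high"
    return fan, demand


for temp in (22.0, 24.5, 25.0, 30.0):
    print(temp, fcu_cooling_command(temp, setpoint_c=24.0))
```

Here one demand signal drives both the fan and the control valve; a unit that modulates only one of them would simply ignore the other value.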

Liebert Corporation was a global manufacturer of power, precision cooling and infrastructure management systems for mainframe computers, server racks, and critical process systems, headquartered in Westerville, Ohio. Founded in 1965, the company employed more than 1,800 people across 12 manufacturing plants worldwide. Since 2016, Liebert has been a subsidiary of Vertiv.

HVAC is a major subdiscipline of mechanical engineering. The goal of HVAC design is to balance indoor environmental comfort with other factors such as installation cost, ease of maintenance, and energy efficiency. The discipline of HVAC includes a large number of specialized terms and acronyms, many of which are summarized in this glossary.

Underfloor air distribution (UFAD) is an air distribution strategy for providing ventilation and space conditioning in buildings as part of the design of an HVAC system. UFAD systems use an underfloor supply plenum, located between the structural concrete slab and a raised floor system, to deliver conditioned air to supply outlets located at or near floor level within the occupied space. Air returns from the room at ceiling level or at the maximum allowable height above the occupied zone.

The HP Performance Optimized Datacenter (POD) is a range of three modular data centers manufactured by HP.

A register is a grille with moving parts that can be opened and closed to direct the air flow, and which is part of a building's heating, ventilation, and air conditioning (HVAC) system. The placement and size of registers are critical to HVAC efficiency. Register dampers are also important, and can serve a safety function.

Immersion cooling is an IT cooling practice in which complete servers are immersed in a dielectric (electrically non-conductive) fluid that has significantly higher thermal conductivity than air. Heat is removed from a system by putting the coolant in direct contact with hot components and circulating the heated liquid through heat exchangers. This practice is highly effective because liquid coolants can absorb more heat from the system, and are more easily circulated through it, than air. The benefits of immersion cooling include sustainability, performance, reliability and cost.
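The statement that liquid coolants can absorb more heat than air can be made concrete with the steady-state relation P = ρ · V · c_p · ΔT, where V is the volumetric flow rate. This relation uses heat capacity, which is related to but distinct from the thermal conductivity mentioned above. In the sketch below the air properties are standard approximations near room temperature, while the liquid properties are assumed, illustrative values and do not describe any specific dielectric fluid.

```python
def volumetric_flow_m3_per_s(heat_w, density_kg_m3, cp_j_per_kg_k, delta_t_k):
    """Flow needed to carry heat_w away with a coolant temperature rise of delta_t_k.

    Rearranged from P = density * flow * cp * delta_T.
    """
    return heat_w / (density_kg_m3 * cp_j_per_kg_k * delta_t_k)


HEAT_W = 10_000   # hypothetical 10 kW heat load
DELTA_T_K = 10.0  # hypothetical allowed coolant temperature rise

# Air near room temperature: roughly 1.2 kg/m^3 and 1005 J/(kg*K).
air = volumetric_flow_m3_per_s(HEAT_W, 1.2, 1005.0, DELTA_T_K)

# Assumed, illustrative liquid properties; real dielectric fluids vary widely.
liquid = volumetric_flow_m3_per_s(HEAT_W, 800.0, 2000.0, DELTA_T_K)

print(f"air:    {air:.3f} m^3/s")
print(f"liquid: {liquid * 1000:.3f} L/s")
print(f"air needs about {air / liquid:.0f} times the volumetric flow")
```

Under these assumptions the liquid carries the same heat with a far smaller volume of coolant, which is the effect the paragraph above describes.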

Close-coupled cooling is a latest-generation cooling approach used particularly in data centers. Its goal is to bring heat transfer as close as possible to its source: the equipment rack. Moving the air conditioner closer to the equipment rack ensures more precise delivery of inlet air and more immediate capture of exhaust air.

A green data center, or sustainable data center, is a service facility that utilizes energy-efficient technologies. Such facilities do not contain obsolete systems and take advantage of newer, more efficient technologies.