Computer data storage or digital data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers.

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs.

Direct memory access (DMA) is a feature of computer systems that allows certain hardware subsystems to access main system memory independently of the central processing unit (CPU).

In computer operating systems, memory paging is a memory management scheme by which a computer stores and retrieves data from secondary storage for use in main memory. In this scheme, the operating system retrieves data from secondary storage in same-size blocks called pages. Paging is an important part of virtual memory implementations in modern operating systems, using secondary storage to let programs exceed the size of available physical memory.

A page table is a data structure used by a virtual memory system in a computer to store mappings between virtual addresses and physical addresses. Virtual addresses are used by the program executed by the accessing process, while physical addresses are used by the hardware, or more specifically, by the random-access memory (RAM) subsystem. The page table is a key component of virtual address translation that is necessary to access data in memory. The page table is set up by the computer's operating system, and may be read and written during the virtual address translation process by the memory management unit or by low-level system software or firmware.

The Write Anywhere File Layout (WAFL) is a proprietary file system that supports large, high-performance RAID arrays, quick restarts without lengthy consistency checks in the event of a crash or power failure, and growing the filesystems size quickly. It was designed by NetApp for use in its storage appliances like NetApp FAS, AFF, Cloud Volumes ONTAP and ONTAP Select.

A diskless node is a workstation or personal computer without disk drives, which employs network booting to load its operating system from a server.

In computer science, a data buffer is a region of memory used to store data temporarily while it is being moved from one place to another. Typically, the data is stored in a buffer as it is retrieved from an input device or just before it is sent to an output device ; however, a buffer may be used when data is moved between processes within a computer, comparable to buffers in telecommunication. Buffers can be implemented in a fixed memory location in hardware or by using a virtual data buffer in software that points at a location in the physical memory.

In computer storage, fragmentation is a phenomenon in which storage space, such as computer memory or a hard drive, is used inefficiently, reducing capacity or performance and often both. The exact consequences of fragmentation depend on the specific system of storage allocation in use and the particular form of fragmentation. In many cases, fragmentation leads to storage space being "wasted", and programs will tend to run inefficiently due to the shortage of memory.

sync is a standard system call in the Unix operating system, which commits all data from the kernel filesystem buffers to non-volatile storage, i.e., data which has been scheduled for writing via low-level I/O system calls. Higher-level I/O layers such as stdio may maintain separate buffers of their own.

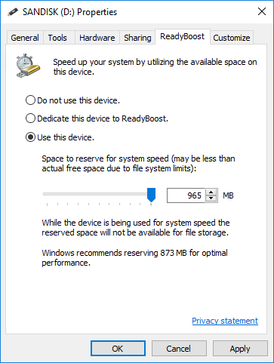

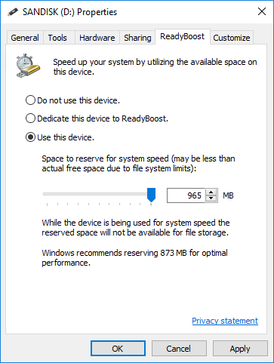

ReadyBoost is a disk caching software component developed by Microsoft for Windows Vista and included in later versions of Windows. ReadyBoost enables NAND memory mass storage CompactFlash, SD card, and USB flash drive devices to be used as a cache between the hard drive and random access memory in an effort to increase computing performance. ReadyBoost relies on the SuperFetch and also adjusts its cache based on user activity. ReadyDrive for hybrid drives is implemented in a manner similar to ReadyBoost.

A memory-mapped file is a segment of virtual memory that has been assigned a direct byte-for-byte correlation with some portion of a file or file-like resource. This resource is typically a file that is physically present on disk, but can also be a device, shared memory object, or other resource that an operating system can reference through a file descriptor. Once present, this correlation between the file and the memory space permits applications to treat the mapped portion as if it were primary memory.

In computer storage, a disk buffer is the embedded memory in a hard disk drive (HDD) or solid-state drive (SSD) acting as a buffer between the rest of the computer and the physical hard disk platter or flash memory that is used for storage. Modern hard disk drives come with 8 to 256 MiB of such memory, and solid-state drives come with up to 4 GB of cache memory.

A forensic disk controller or hardware write-block device is a specialized type of computer hard disk controller made for the purpose of gaining read-only access to computer hard drives without the risk of damaging the drive's contents. The device is named forensic because its most common application is for use in investigations where a computer hard drive may contain evidence. Such a controller historically has been made in the form of a dongle that fits between a computer and an IDE or SCSI hard drive, but with the advent of USB and SATA, forensic disk controllers supporting these newer technologies have become widespread. Steve Bress and Mark Menz invented hard drive write blocking.

SpartaDOS X is a disk operating system for the Atari 8-bit computers that closely resembles MS-DOS. It was developed and sold by ICD in 1987-1993, and many years later picked up by the third-party community SpartaDOS X Upgrade Project, which still maintains the software.

libtorrent is an open-source implementation of the BitTorrent protocol. It is written in and has its main library interface in C++. Its most notable features are support for Mainline DHT, IPv6, HTTP seeds and μTorrent's peer exchange. libtorrent uses Boost, specifically Boost.Asio to gain its platform independence. It is known to build on Windows and most Unix-like operating systems.

In computer science, memory virtualization decouples volatile random access memory (RAM) resources from individual systems in the data center, and then aggregates those resources into a virtualized memory pool available to any computer in the cluster. The memory pool is accessed by the operating system or applications running on top of the operating system. The distributed memory pool can then be utilized as a high-speed cache, a messaging layer, or a large, shared memory resource for a CPU or a GPU application.

bcache is a cache mechanism in the Linux kernel's block layer, which is used for accessing secondary storage devices. It allows one or more fast storage devices, such as flash-based solid-state drives (SSDs), to act as a cache for one or more slower storage devices, such as hard disk drives (HDDs); this effectively creates hybrid volumes and provides performance improvements.

dm-cache is a component of the Linux kernel's device mapper, which is a framework for mapping block devices onto higher-level virtual block devices. It allows one or more fast storage devices, such as flash-based solid-state drives (SSDs), to act as a cache for one or more slower storage devices such as hard disk drives (HDDs); this effectively creates hybrid volumes and provides secondary storage performance improvements.

Virtual memory compression is a memory management technique that utilizes data compression to reduce the size or number of paging requests to and from the auxiliary storage. In a virtual memory compression system, pages to be paged out of virtual memory are compressed and stored in physical memory, which is usually random-access memory (RAM), or sent as compressed to auxiliary storage such as a hard disk drive (HDD) or solid-state drive (SSD). In both cases the virtual memory range, whose contents has been compressed, is marked inaccessible so that attempts to access compressed pages can trigger page faults and reversal of the process. The footprint of the data being paged is reduced by the compression process; in the first instance, the freed RAM is returned to the available physical memory pool, while the compressed portion is kept in RAM. In the second instance, the compressed data is sent to auxiliary storage but the resulting I/O operation is smaller and therefore takes less time.