The Hahn–Banach theorem is a central tool in functional analysis. It allows the extension of bounded linear functionals defined on a subspace of some vector space to the whole space, and it also shows that there are "enough" continuous linear functionals defined on every normed vector space to make the study of the dual space "interesting". Another version of the Hahn–Banach theorem is known as the Hahn–Banach separation theorem or the hyperplane separation theorem, and has numerous uses in convex geometry.

In mathematics, the infimum of a subset $S$ of a partially ordered set $P$ is the greatest element in $P$ that is less than or equal to each element of $S$, if such an element exists. Consequently, the term greatest lower bound is also commonly used.

In mathematics, a real-valued function is called convex if the line segment between any two points on the graph of the function does not lie below the graph between the two points. Equivalently, a function is convex if its epigraph is a convex set. A twice-differentiable function of a single variable is convex if and only if its second derivative is nonnegative on its entire domain. Well-known examples of convex functions of a single variable include the quadratic function $x^2$ and the exponential function $e^x$. In simple terms, a convex function refers to a function whose graph is shaped like a cup $\cup$, while a concave function's graph is shaped like a cap $\cap$.

In mathematics, Jensen's inequality, named after the Danish mathematician Johan Jensen, relates the value of a convex function of an integral to the integral of the convex function. It was proved by Jensen in 1906, building on an earlier proof of the same inequality for twice-differentiable functions by Otto Hölder in 1889. Given its generality, the inequality appears in many forms depending on the context, some of which are presented below. In its simplest form, the inequality states that a convex function applied to a mean is less than or equal to the mean of the function's values; as a simple corollary, the opposite holds for concave functions.
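The simplest form of the inequality can be checked numerically on a finite sample. The sketch below is only an illustration: the helper name `jensen_gap` and the sample values are assumptions chosen for this example, not part of any standard library.

```python
import math


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def jensen_gap(phi, samples):
    """Return mean(phi(x)) - phi(mean(x)).

    Jensen's inequality says this is >= 0 when phi is convex
    and <= 0 when phi is concave.
    """
    return mean([phi(x) for x in samples]) - phi(mean(samples))


# exp is convex on the real line, so the gap should be nonnegative.
gap_exp = jensen_gap(math.exp, [-1.0, 0.0, 0.5, 2.0])

# log is concave on the positive reals, so the gap should be nonpositive.
gap_log = jensen_gap(math.log, [0.5, 1.0, 4.0])

print(gap_exp >= 0, gap_log <= 0)
```

A check like this does not prove the inequality; it only confirms the direction of the gap for the chosen samples.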

In mathematics, the arguments of the maxima are the points, or elements, of the domain of some function at which the function values are maximized. In contrast to global maxima, which refer to the largest outputs of a function, arg max refers to the inputs, or arguments, at which the function outputs are as large as possible.
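The distinction between the maximum and the arg max is easy to see on a finite domain. The following is a minimal sketch; the function `arg_max` and the example function `f` are hypothetical names introduced here for illustration.

```python
def arg_max(f, domain):
    """Return the set of all points in a finite domain where f is largest."""
    values = {x: f(x) for x in domain}
    best = max(values.values())
    return {x for x, v in values.items() if v == best}


def f(x):
    # Reaches its largest value, 0, at both x = -2 and x = 2.
    return -((x * x - 4) ** 2)


domain = range(-3, 4)
maximum = max(f(x) for x in domain)   # the largest output
maximizers = arg_max(f, domain)       # the inputs that attain it

print(maximum, sorted(maximizers))
```

Returning a set reflects the fact that the arg max need not be a single point.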

In mathematics, the Legendre transformation, named after Adrien-Marie Legendre, is an involutive transformation on real-valued convex functions of one real variable. In physical problems, it is used to convert functions of one quantity into functions of the conjugate quantity. In this way, it is commonly used in classical mechanics to derive the Hamiltonian formalism out of the Lagrangian formalism and in thermodynamics to derive the thermodynamic potentials, as well as in the solution of differential equations of several variables.

In functional analysis and related areas of mathematics, locally convex topological vector spaces (LCTVS) or locally convex spaces are examples of topological vector spaces (TVS) that generalize normed spaces. They can be defined as topological vector spaces whose topology is generated by translations of balanced, absorbent, convex sets. Alternatively they can be defined as a vector space with a family of seminorms, and a topology can be defined in terms of that family. Although in general such spaces are not necessarily normable, the existence of a convex local base for the zero vector is strong enough for the Hahn–Banach theorem to hold, yielding a sufficiently rich theory of continuous linear functionals.

In mathematics and mathematical optimization, the convex conjugate of a function is a generalization of the Legendre transformation that also applies to non-convex functions. It is also known as the Legendre–Fenchel transformation, Fenchel transformation, or Fenchel conjugate. In particular, it allows for a far-reaching generalization of Lagrangian duality.
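For a function $f$ of one real variable, the conjugate is usually written $f^*(y) = \sup_x \,(xy - f(x))$. The sketch below approximates that supremum by a maximum over a finite grid, which is an assumption made purely for illustration; the names `conjugate_on_grid` and `half_square` are hypothetical. It uses $f(x) = x^2/2$, whose conjugate is known to be $f^*(y) = y^2/2$.

```python
def conjugate_on_grid(f, xs, y):
    """Approximate f*(y) = sup_x (x*y - f(x)) by a maximum over the grid xs."""
    return max(x * y - f(x) for x in xs)


def half_square(x):
    return 0.5 * x * x


# Grid on [-5, 5] with step 0.01; the true maximizer x = y must lie inside it.
xs = [i / 100.0 for i in range(-500, 501)]

conj_at_1_5 = conjugate_on_grid(half_square, xs, 1.5)
conj_at_minus_2 = conjugate_on_grid(half_square, xs, -2.0)

print(conj_at_1_5, conj_at_minus_2)
```

The grid approximation is only reliable when the maximizing $x$ falls within the grid range; for values of $y$ outside $[-5, 5]$ this sketch would underestimate the conjugate.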

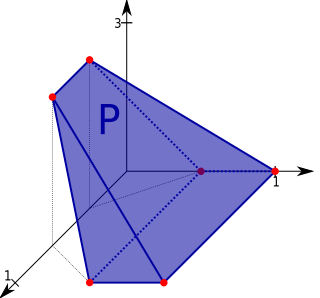

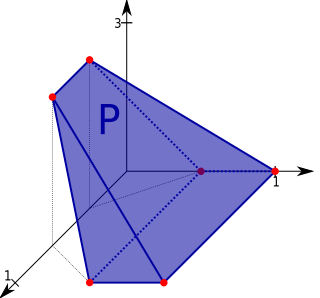

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets. Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

In functional analysis and related areas of mathematics, a sequence space is a vector space whose elements are infinite sequences of real or complex numbers. Equivalently, it is a function space whose elements are functions from the natural numbers to the field K of real or complex numbers. The set of all such functions is naturally identified with the set of all possible infinite sequences with elements in K, and can be turned into a vector space under the operations of pointwise addition of functions and pointwise scalar multiplication. All sequence spaces are linear subspaces of this space. Sequence spaces are typically equipped with a norm, or at least the structure of a topological vector space.

In mathematical optimization, the Karush–Kuhn–Tucker (KKT) conditions, also known as the Kuhn–Tucker conditions, are first derivative tests for a solution in nonlinear programming to be optimal, provided that some regularity conditions are satisfied.
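As an illustration, consider the problem of minimizing $f(x)$ subject to $g_i(x) \le 0$ for $i = 1, \dots, m$ and $h_j(x) = 0$ for $j = 1, \dots, \ell$. The notation here (the multipliers $\mu_i$ and $\lambda_j$, and the candidate point $x^*$) is assumed for this sketch. With differentiable functions, the conditions are commonly stated as follows:

$$
\begin{aligned}
&\nabla f(x^*) + \sum_{i=1}^{m} \mu_i \nabla g_i(x^*) + \sum_{j=1}^{\ell} \lambda_j \nabla h_j(x^*) = 0 && \text{(stationarity)} \\
&g_i(x^*) \le 0, \quad h_j(x^*) = 0 && \text{(primal feasibility)} \\
&\mu_i \ge 0 && \text{(dual feasibility)} \\
&\mu_i \, g_i(x^*) = 0 && \text{(complementary slackness)}
\end{aligned}
$$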

In mathematics, Fenchel's duality theorem is a result in the theory of convex functions named after Werner Fenchel.

Convex analysis is the branch of mathematics devoted to the study of properties of convex functions and convex sets, often with applications in convex minimization, a subdomain of optimization theory.

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem. The objective value of any feasible solution to the primal (minimization) problem is at least as large as the objective value of any feasible solution to the dual (maximization) problem. Therefore, the optimal value of the primal is an upper bound on the optimal value of the dual, and the optimal value of the dual is a lower bound on the optimal value of the primal. This fact is called weak duality.

In mathematics, the concepts of essential infimum and essential supremum are related to the notions of infimum and supremum, but adapted to measure theory and functional analysis, where one often deals with statements that are not valid for all elements in a set, but rather almost everywhere, i.e., except on a set of measure zero.

In mathematics, a quasiconvex function is a real-valued function defined on an interval or on a convex subset of a real vector space such that the inverse image of any set of the form $(-\infty, a)$ is a convex set. For a function of a single variable, along any stretch of the curve the highest point is one of the endpoints. The negative of a quasiconvex function is said to be quasiconcave.

In mathematics, the Wasserstein distance or Kantorovich–Rubinstein metric is a distance function defined between probability distributions on a given metric space $M$. It is named after Leonid Vaseršteĭn.
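In one special case the distance has a simple closed form: for two empirical distributions on the real line with the same number of equally weighted samples, the 1-Wasserstein distance is the mean absolute difference between the sorted samples. The sketch below covers only that case; the function name `wasserstein_1d` is hypothetical and the restriction to equal sample sizes is an assumption made to keep the example short.

```python
def wasserstein_1d(xs, ys):
    """1-Wasserstein distance between two equally weighted samples on the real line.

    Assumes both samples have the same size, so the optimal coupling simply
    matches the k-th smallest point of xs with the k-th smallest point of ys.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch only handles samples of equal size")
    paired = zip(sorted(xs), sorted(ys))
    return sum(abs(a - b) for a, b in paired) / len(xs)


shifted = wasserstein_1d([0.0, 1.0, 3.0], [8.0, 5.0, 6.0])   # second sample is the first shifted by 5
identical = wasserstein_1d([2.0, 7.0], [7.0, 2.0])           # same distribution, different order

print(shifted, identical)
```

Shifting every sample by a constant moves the distribution by exactly that amount, which is why the first call yields the size of the shift.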

In functional analysis, the dual norm is a measure of size for a continuous linear function defined on a normed vector space.
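For a continuous linear functional $f$ on a normed vector space $X$ with norm $\|\cdot\|$, the dual norm is commonly written as below. The symbols $f$, $X$, $a$ and $\|\cdot\|_*$ are notation assumed for this sketch.

$$
\|f\|_* = \sup \{\, |f(x)| : x \in X,\ \|x\| \le 1 \,\}
$$

As a concrete finite-dimensional instance, take $X = \mathbb{R}^n$ with the $\ell^1$ norm and $f(x) = \sum_{k} a_k x_k$. Then $|f(x)| \le \max_k |a_k| \cdot \|x\|_1$, with equality attained at a suitable signed standard basis vector, so

$$
\|f\|_* = \max_{1 \le k \le n} |a_k| = \|a\|_\infty .
$$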

In optimization problems in applied mathematics, the duality gap is the difference between the primal and dual solutions. If $d^*$ is the optimal dual value and $p^*$ is the optimal primal value, then the duality gap is equal to $p^* - d^*$. For minimization problems, this value is always greater than or equal to 0. The duality gap is zero if and only if strong duality holds. Otherwise the gap is strictly positive and weak duality holds.
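A small worked case may make the gap concrete. The toy problem below (minimize $x^2$ subject to $x \ge 1$) and all names in the code are assumptions chosen for illustration. Its Lagrangian is $x^2 + \lambda(1 - x)$ with $\lambda \ge 0$, and minimizing over $x$ gives the dual function $\lambda - \lambda^2/4$. The sketch evaluates both problems on finite grids rather than solving them exactly.

```python
def primal_objective(x):
    return x * x


def dual_function(lam):
    # inf over x of x^2 + lam * (1 - x), attained at x = lam / 2.
    return lam - lam * lam / 4.0


feasible_xs = [1.0 + 0.1 * k for k in range(31)]   # primal feasible points, x >= 1
multipliers = [0.1 * k for k in range(51)]         # dual feasible points, lam >= 0

# Weak duality: every dual value is a lower bound on every primal value.
weak_duality_holds = all(
    dual_function(lam) <= primal_objective(x)
    for lam in multipliers
    for x in feasible_xs
)

best_primal = min(primal_objective(x) for x in feasible_xs)   # attained at x = 1
best_dual = max(dual_function(lam) for lam in multipliers)    # attained near lam = 2
duality_gap = best_primal - best_dual

print(weak_duality_holds, best_primal, best_dual, duality_gap)
```

This problem is convex with a strictly feasible point, so the gap is zero up to floating-point error, consistent with strong duality holding.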

In mathematical analysis, epi-convergence is a type of convergence for real-valued and extended real-valued functions.