Related Research Articles

In computing, a computer file is a resource for recording data on a computer storage device, primarily identified by its filename. Just as words can be written on paper, so can data be written to a computer file. Files can be shared with and transferred between computers and mobile devices via removable media, networks, or the Internet.

JPEG is a commonly used method of lossy compression for digital images, particularly for those images produced by digital photography. The degree of compression can be adjusted, allowing a selectable tradeoff between storage size and image quality. JPEG typically achieves 10:1 compression with little perceptible loss in image quality. Since its introduction in 1992, JPEG has been the most widely used image compression standard in the world, and the most widely used digital image format, with several billion JPEG images produced every day as of 2015.

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. The different versions of the photo of the cat on this page show how higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques.

A compression artifact is a noticeable distortion of media caused by the application of lossy compression. Lossy data compression involves discarding some of the media's data so that it becomes small enough to be stored within the desired disk space or transmitted (streamed) within the available bandwidth. If the compressor cannot store enough data in the compressed version, the result is a loss of quality, or introduction of artifacts. The compression algorithm may not be intelligent enough to discriminate between distortions of little subjective importance and those objectionable to the user.

In computer programming, a magic number is any of the following:

File verification is the process of using an algorithm for verifying the integrity of a computer file, usually by checksum. This can be done by comparing two files bit-by-bit, but requires two copies of the same file, and may miss systematic corruptions which might occur to both files. A more popular approach is to generate a hash of the copied file and comparing that to the hash of the original file.

Computer forensics is a branch of digital forensic science pertaining to evidence found in computers and digital storage media. The goal of computer forensics is to examine digital media in a forensically sound manner with the aim of identifying, preserving, recovering, analyzing and presenting facts and opinions about the digital information.

Utility software is a program specifically designed to help manage and tune system or application software. It is used to support the computer infrastructure - in contrast to application software, which is aimed at directly performing tasks that benefit ordinary users. However, utilities often form part of the application systems. For example, a batch job may run user-written code to update a database and may then include a step that runs a utility to back up the database, or a job may run a utility to compress a disk before copying files..

A binary file is a computer file that is not a text file. The term "binary file" is often used as a term meaning "non-text file". Many binary file formats contain parts that can be interpreted as text; for example, some computer document files containing formatted text, such as older Microsoft Word document files, contain the text of the document but also contain formatting information in binary form.

A container format or metafile is a file format that allows multiple data streams to be embedded into a single file, usually along with metadata for identifying and further detailing those streams. Notable examples of container formats include archive files and formats used for multimedia playback. Among the earliest cross-platform container formats were Distinguished Encoding Rules and the 1985 Interchange File Format.

The Gutmann method is an algorithm for securely erasing the contents of computer hard disk drives, such as files. Devised by Peter Gutmann and Colin Plumb and presented in the paper Secure Deletion of Data from Magnetic and Solid-State Memory in July 1996, it involved writing a series of 35 patterns over the region to be erased.

Undeletion is a feature for restoring computer files which have been removed from a file system by file deletion. Deleted data can be recovered on many file systems, but not all file systems provide an undeletion feature. Recovering data without an undeletion facility is usually called data recovery, rather than undeletion. Undeletion can both help prevent users from accidentally losing data, or can pose a computer security risk, since users may not be aware that deleted files remain accessible.

In computing, data recovery is a process of retrieving deleted, inaccessible, lost, corrupted, damaged, or formatted data from secondary storage, removable media or files, when the data stored in them cannot be accessed in a usual way. The data is most often salvaged from storage media such as internal or external hard disk drives (HDDs), solid-state drives (SSDs), USB flash drives, magnetic tapes, CDs, DVDs, RAID subsystems, and other electronic devices. Recovery may be required due to physical damage to the storage devices or logical damage to the file system that prevents it from being mounted by the host operating system (OS).

NILFS or NILFS2 is a log-structured file system implementation for the Linux kernel. It was developed by Nippon Telegraph and Telephone Corporation (NTT) CyberSpace Laboratories and a community from all over the world. NILFS was released under the terms of the GNU General Public License (GPL).

TestDisk is a free and open-source data recovery utility that helps users recover lost partitions or repair corrupted filesystems. TestDisk can collect detailed information about a corrupted drive, which can then be sent to a technician for further analysis. TestDisk supports DOS, Microsoft Windows, Linux, FreeBSD, NetBSD, OpenBSD, SunOS, and MacOS. TestDisk handles non-partitioned and partitioned media. In particular, it recognizes the GUID Partition Table (GPT), Apple partition map, PC/Intel BIOS partition tables, Sun Solaris slice and Xbox fixed partitioning scheme. TestDisk uses a command line user interface. TestDisk can recover deleted files with 97% accuracy.

PhotoRec is a free and open-source utility software for data recovery with text-based user interface using data carving techniques, designed to recover lost files from various digital camera memory, hard disk and CD-ROM. It can recover the files with more than 480 file extensions . It is also possible to add custom file signature to detect less known files.

File carving is the process of reassembling computer files from fragments in the absence of filesystem metadata.

ZPAQ is an open source command line archiver for Windows and Linux. It uses a journaling or append-only format which can be rolled back to an earlier state to retrieve older versions of files and directories. It supports fast incremental update by adding only files whose last-modified date has changed since the previous update. It compresses using deduplication and several algorithms depending on the data type and the selected compression level. To preserve forward and backward compatibility between versions as the compression algorithm is improved, it stores the decompression algorithm in the archive. The ZPAQ source code includes a public domain API, libzpaq, which provides compression and decompression services to C++ applications. The format is believed to be unencumbered by patents.

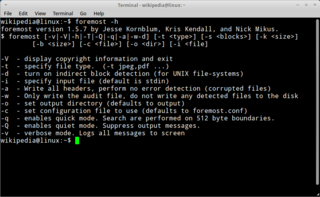

Foremost is a forensic data recovery program for Linux. Foremost is used to recover files using their headers, footers, and data structures through a process known as file carving. Although written for law enforcement use, the program and its source code are freely available and can be used as a general data recovery tool.

Optimistic decompression is a digital forensics technique in which each byte of an input buffer is examined for the possibility of compressed data. If data is found that might be compressed, a decompression algorithm is invoked to perform a trial decompression. If the decompressor does not produce an error, the decompressed data is processed. The decompressor is thus called optimistically---that is, with the hope that it might be successful.

References

- 1 2 Simson Garfinkel, Carving Contiguous and Fragmented Files with Fast Object Validation, in Proceedings of the 2007 digital forensics research workshop, DFRWS, Pittsburgh, PA, August 2007