Conditions for positivity

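A continuous-time linear system $\dot{x} = Ax$ is positive if and only if A is a Metzler matrix, that is, a matrix whose off-diagonal entries are all nonnegative. [1]

A discrete-time linear system $x(k+1) = Ax(k)$ is positive if and only if A is a nonnegative matrix, that is, a matrix whose entries are all nonnegative. [1]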
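Both conditions can be checked entry by entry. The following is a minimal sketch in plain Python, assuming the matrix A is given as a list of rows; the function names `is_metzler` and `is_nonnegative` and the example matrices are illustrative and not taken from any library or from the cited references.

```python
def is_metzler(A):
    """Return True if every off-diagonal entry of the square matrix A is >= 0."""
    n = len(A)
    return all(A[i][j] >= 0 for i in range(n) for j in range(n) if i != j)


def is_nonnegative(A):
    """Return True if every entry of A is >= 0."""
    return all(entry >= 0 for row in A for entry in row)


# Hypothetical example matrices, chosen only for illustration.
A_continuous = [[-2.0, 1.0],
                [0.5, -3.0]]   # negative diagonal entries are allowed for Metzler
A_discrete = [[0.5, 0.2],
              [0.1, 0.6]]

print(is_metzler(A_continuous))      # continuous-time positivity test
print(is_nonnegative(A_discrete))    # discrete-time positivity test
print(is_nonnegative(A_continuous))  # the Metzler example is not nonnegative
```

Note that every nonnegative matrix is also Metzler, but not the other way around, which is why the two tests are kept separate.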

Positive systems [1] [2] constitute a class of systems with the important property that their state variables are never negative, given a nonnegative initial state. These systems appear frequently in practical applications, [3] [4] because their state variables represent physical quantities that cannot be negative (levels, heights, concentrations, etc.).
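The defining property can be observed numerically. The sketch below assumes an unforced discrete-time system x(k+1) = A x(k) and uses hypothetical 2 x 2 matrices; the helper names `step` and `simulate` are illustrative only. With a nonnegative A the trajectory stays nonnegative, while a single negative entry in A is enough to break the property.

```python
def step(A, x):
    """One step of x(k+1) = A x(k), with A given as a list of rows."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def simulate(A, x0, steps):
    """Return the trajectory [x(0), x(1), ..., x(steps)]."""
    trajectory = [list(x0)]
    for _ in range(steps):
        trajectory.append(step(A, trajectory[-1]))
    return trajectory


def is_nonnegative_trajectory(trajectory):
    """True if no state variable is negative at any time step."""
    return all(value >= 0 for state in trajectory for value in state)


# Hypothetical matrices: A_pos is nonnegative, A_bad has one negative entry.
A_pos = [[0.5, 0.2],
         [0.1, 0.6]]
A_bad = [[0.5, -0.2],
         [0.1, 0.6]]
x0 = [0.0, 1.0]  # nonnegative initial state

print(is_nonnegative_trajectory(simulate(A_pos, x0, steps=20)))
print(is_nonnegative_trajectory(simulate(A_bad, x0, steps=20)))
```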

The fact that a system is positive has important implications for control system design. [5] For instance, an asymptotically stable positive linear time-invariant system always admits a diagonal quadratic Lyapunov function, which makes these systems more numerically tractable in the context of Lyapunov analysis. [6]
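One standard way to obtain such a diagonal Lyapunov function for a continuous-time system is sketched below. It assumes A is Metzler and Hurwitz, solves A v = -1 and Aᵀ w = -1 (both solutions are then entrywise positive), and takes D = diag(w_i / v_i), which makes Aᵀ D + D A negative definite. This is one possible construction, not necessarily the one used in the cited reference; the helper names and the example matrix are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def diagonal_lyapunov(A):
    """Return d > 0 such that D = diag(d) makes A^T D + D A negative definite.

    Assumes A is Metzler and Hurwitz; raises ValueError otherwise.
    """
    n = len(A)
    A_T = [list(col) for col in zip(*A)]
    v = solve_linear(A, [-1.0] * n)    # A v = -1, so A v < 0 entrywise
    w = solve_linear(A_T, [-1.0] * n)  # A^T w = -1, so A^T w < 0 entrywise
    if min(v) <= 0 or min(w) <= 0:
        raise ValueError("expected a Hurwitz Metzler matrix")
    return [wi / vi for wi, vi in zip(w, v)]


def lyapunov_lhs(A, d):
    """Return the matrix A^T D + D A for D = diag(d)."""
    n = len(A)
    return [[A[j][i] * d[j] + d[i] * A[i][j] for j in range(n)] for i in range(n)]


# Hypothetical stable Metzler matrix.
A = [[-3.0, 1.0],
     [2.0, -2.0]]
d = diagonal_lyapunov(A)
M = lyapunov_lhs(A, d)

# For a symmetric 2 x 2 matrix: negative definite iff M[0][0] < 0 and det(M) > 0.
is_negative_definite = M[0][0] < 0 and M[0][0] * M[1][1] - M[0][1] * M[1][0] > 0
print(d, is_negative_definite)
```

The resulting Lyapunov function is V(x) = Σ d_i x_i², so only n numbers need to be found instead of a full symmetric matrix.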

It is also important to take positivity into account in state observer design, because standard observers (for example, Luenberger observers) may produce physically meaningless negative estimates. [7]
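The effect can be reproduced with a small simulation. The sketch below assumes a discrete-time positive system x(k+1) = A x(k) with output y = C x and a Luenberger-type observer x̂(k+1) = A x̂(k) + L (y(k) - C x̂(k)). The matrices and the gain L are hypothetical values chosen so that A - L C is stable but has negative entries; they do not come from the cited reference.

```python
def matvec(A, x):
    """Matrix-vector product for A given as a list of rows."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


A = [[0.5, 0.2],
     [0.1, 0.6]]   # nonnegative, so the true system is positive
C = [1.0, 0.0]     # only the first state is measured
L = [0.9, 0.8]     # hypothetical observer gain (A - L C has negative entries)

x = [0.0, 1.0]      # true state, nonnegative
x_hat = [1.0, 0.0]  # initial estimate, also nonnegative

true_states = [x]
estimates = [x_hat]
for _ in range(10):
    innovation = dot(C, x) - dot(C, x_hat)   # y(k) - C x_hat(k)
    prediction = matvec(A, x_hat)
    x_hat = [p + gain * innovation for p, gain in zip(prediction, L)]
    x = matvec(A, x)
    true_states.append(x)
    estimates.append(x_hat)

true_state_nonnegative = all(v >= 0 for s in true_states for v in s)
has_negative_estimate = any(v < 0 for e in estimates for v in e)
print(true_state_nonnegative, has_negative_estimate)
```

Here the true state never leaves the nonnegative orthant, yet the first observer update already yields a negative estimate, which is the situation positive observer designs aim to rule out.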

Control theory is a field of mathematics that deals with the control of dynamical systems in engineered processes and machines. The objective is to develop a model or algorithm governing the application of system inputs to drive the system to a desired state, while minimizing any delay, overshoot, or steady-state error and ensuring a level of control stability, often with the aim of achieving a degree of optimality.

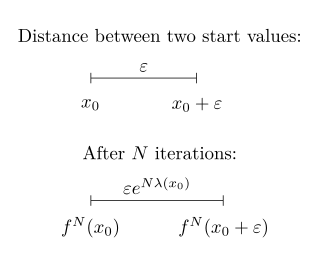

In mathematics, the Lyapunov exponent or Lyapunov characteristic exponent of a dynamical system is a quantity that characterizes the rate of separation of infinitesimally close trajectories. Quantitatively, two trajectories in phase space with initial separation vector $\delta\mathbf{Z}_0$ diverge at a rate given by $|\delta\mathbf{Z}(t)| \approx e^{\lambda t}\,|\delta\mathbf{Z}_0|$, where $\lambda$ is the Lyapunov exponent.

In the theory of ordinary differential equations (ODEs), Lyapunov functions, named after Aleksandr Lyapunov, are scalar functions that may be used to prove the stability of an equilibrium of an ODE. Lyapunov functions are important to stability theory of dynamical systems and control theory. A similar concept appears in the theory of general state space Markov chains, usually under the name Foster–Lyapunov functions.

Various types of stability may be discussed for the solutions of differential equations or difference equations describing dynamical systems. The most important type is that concerning the stability of solutions near a point of equilibrium. This may be discussed by the theory of Aleksandr Lyapunov. In simple terms, if the solutions that start out near an equilibrium point $x_e$ stay near $x_e$ forever, then $x_e$ is Lyapunov stable. More strongly, if $x_e$ is Lyapunov stable and all solutions that start out near $x_e$ converge to $x_e$, then $x_e$ is asymptotically stable. The notion of exponential stability guarantees a minimal rate of decay, i.e., an estimate of how quickly the solutions converge. The idea of Lyapunov stability can be extended to infinite-dimensional manifolds, where it is known as structural stability, which concerns the behavior of different but "nearby" solutions to differential equations. Input-to-state stability (ISS) applies Lyapunov notions to systems with inputs.

Observability is a measure of how well internal states of a system can be inferred from knowledge of its external outputs.

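In control theory, the discrete Lyapunov equation is of the form $A X A^{H} - X + Q = 0$, where $Q$ is a Hermitian matrix and $A^{H}$ is the conjugate transpose of $A$.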
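For real matrices, the discrete Lyapunov equation is commonly written as A X Aᵀ - X + Q = 0. A minimal sketch of one way to solve it is the fixed-point iteration X ← A X Aᵀ + Q, which converges when all eigenvalues of A lie strictly inside the unit circle. The function name `discrete_lyapunov_fixed_point` and the example matrices are hypothetical; production code would normally call a library solver instead.

```python
def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def transpose(X):
    return [list(col) for col in zip(*X)]


def discrete_lyapunov_fixed_point(A, Q, tol=1e-12, max_iter=10_000):
    """Iterate X <- A X A^T + Q until successive iterates differ by less than tol.

    Assumes the spectral radius of A is below 1; otherwise the iteration diverges.
    """
    A_T = transpose(A)
    X = [row[:] for row in Q]
    for _ in range(max_iter):
        AXAt = matmul(matmul(A, X), A_T)
        X_next = [[a + q for a, q in zip(row_a, row_q)]
                  for row_a, row_q in zip(AXAt, Q)]
        change = max(abs(a - b) for r1, r2 in zip(X_next, X) for a, b in zip(r1, r2))
        X = X_next
        if change < tol:
            return X
    raise RuntimeError("iteration did not converge; is A Schur stable?")


# Hypothetical example: A has eigenvalues 0.7 and 0.4.
A = [[0.5, 0.2],
     [0.1, 0.6]]
Q = [[1.0, 0.0],
     [0.0, 1.0]]
X = discrete_lyapunov_fixed_point(A, Q)

AXAt = matmul(matmul(A, X), transpose(A))
residual = max(abs(AXAt[i][j] - X[i][j] + Q[i][j]) for i in range(2) for j in range(2))
print(residual)  # should be close to zero
```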

In control theory, a state observer or state estimator is a system that provides an estimate of the internal state of a given real system, from measurements of the input and output of the real system. It is typically computer-implemented, and provides the basis of many practical applications.

In the theory of dynamical systems and control theory, a linear time-invariant system is marginally stable if it is neither asymptotically stable nor unstable. Roughly speaking, a system is stable if it always returns to and stays near a particular state, and is unstable if it goes farther and farther away from any state, without being bounded. A marginal system, sometimes referred to as having neutral stability, is between these two types: when displaced, it does not return to near a common steady state, nor does it go away from where it started without limit.

In mathematics, a Hurwitz matrix, or Routh–Hurwitz matrix (in engineering, a stability matrix), is a structured real square matrix constructed from the coefficients of a real polynomial.
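As an illustration of this construction, the sketch below builds the matrix under the common convention that, for the polynomial a₀zⁿ + a₁zⁿ⁻¹ + … + aₙ, the entry in row i and column j (1-based) is the coefficient with index 2j - i, taken as zero when that index is outside 0..n. The function name `hurwitz_matrix` and the example polynomial are hypothetical.

```python
def hurwitz_matrix(coeffs):
    """Build the n x n Hurwitz matrix of a_0 z^n + a_1 z^(n-1) + ... + a_n.

    coeffs = [a_0, a_1, ..., a_n]. Entry (i, j), 1-based, is a_(2j - i),
    with coefficients outside the range 0..n treated as zero.
    """
    n = len(coeffs) - 1

    def a(k):
        return coeffs[k] if 0 <= k <= n else 0

    return [[a(2 * j - i) for j in range(1, n + 1)] for i in range(1, n + 1)]


# Example polynomial: z^3 + 6 z^2 + 11 z + 6 = (z + 1)(z + 2)(z + 3).
H = hurwitz_matrix([1, 6, 11, 6])
for row in H:
    print(row)
```

The odd-indexed coefficients fill the first row, the even-indexed ones the second, and each following pair of rows repeats the pattern shifted one column to the right.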

Nonlinear control theory is the area of control theory which deals with systems that are nonlinear, time-variant, or both. Control theory is an interdisciplinary branch of engineering and mathematics that is concerned with the behavior of dynamical systems with inputs, and how to modify the output by changes in the input using feedback, feedforward, or signal filtering. The system to be controlled is called the "plant". One way to make the output of a system follow a desired reference signal is to compare the output of the plant to the desired output, and provide feedback to the plant to modify the output to bring it closer to the desired output.

Non-negative matrix factorization, also called non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra where a matrix V is factorized into (usually) two matrices W and H, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as processing of audio spectrograms or muscular activity, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically.

Semidefinite programming (SDP) is a subfield of convex optimization concerned with the optimization of a linear objective function over the intersection of the cone of positive semidefinite matrices with an affine space, i.e., a spectrahedron.

In mathematics, stability theory addresses the stability of solutions of differential equations and of trajectories of dynamical systems under small perturbations of initial conditions. The heat equation, for example, is a stable partial differential equation because small perturbations of initial data lead to small variations in temperature at a later time as a result of the maximum principle. In partial differential equations one may measure the distances between functions using Lp norms or the sup norm, while in differential geometry one may measure the distance between spaces using the Gromov–Hausdorff distance.

In control theory, a control-Lyapunov function (CLF) is an extension of the idea of Lyapunov function to systems with control inputs. The ordinary Lyapunov function is used to test whether a dynamical system is (Lyapunov) stable or asymptotically stable. Lyapunov stability means that if the system starts in a state in some domain D, then the state will remain in D for all time. For asymptotic stability, the state is also required to converge to $x = 0$. A control-Lyapunov function is used to test whether a system is asymptotically stabilizable, that is, whether for any state x there exists a control u such that the system can be brought to the zero state asymptotically by applying the control u.

In mathematics, a Metzler matrix is a matrix in which all the off-diagonal components are nonnegative: $x_{ij} \geq 0$ for all $i \neq j$.

In mathematics, especially linear algebra, an M-matrix is a Z-matrix with eigenvalues whose real parts are nonnegative. The set of non-singular M-matrices is a subset of the class of P-matrices, and also of the class of inverse-positive matrices. The name M-matrix was seemingly originally chosen by Alexander Ostrowski in reference to Hermann Minkowski, who proved that if a Z-matrix has all of its row sums positive, then the determinant of that matrix is positive.

In numerical linear algebra, the alternating-direction implicit (ADI) method is an iterative method used to solve Sylvester matrix equations. It is a popular method for solving the large matrix equations that arise in systems theory and control, and can be formulated to construct solutions in a memory-efficient, factored form. It is also used to numerically solve parabolic and elliptic partial differential equations, and is a classic method used for modeling heat conduction and solving the diffusion equation in two or more dimensions. It is an example of an operator splitting method.

The Lyapunov–Malkin theorem is a mathematical theorem detailing stability of nonlinear systems.

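A matrix difference equation is a difference equation in which the value of a vector of variables at one point in time is related to its own value at one or more previous points in time, using matrices. The order of the equation is the maximum time gap between any two indicated values of the variable vector. For example, $x_t = A x_{t-1} + B x_{t-2}$ is a second-order matrix difference equation, in which $x$ is an $n \times 1$ vector of variables and $A$ and $B$ are $n \times n$ matrices.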

In control theory, the cross Gramian is a Gramian matrix used to determine how controllable and observable a linear system is.