Related Research Articles

Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

Global illumination (GI), or indirect illumination, is a group of algorithms used in 3D computer graphics that are meant to add more realistic lighting to 3D scenes. Such algorithms take into account not only the light that comes directly from a light source, but also subsequent cases in which light rays from the same source are reflected by other surfaces in the scene, whether reflective or not.

The painter's algorithm is an algorithm for visible surface determination in 3D computer graphics that works on a polygon-by-polygon basis rather than the pixel-by-pixel, row-by-row, or area-by-area basis of other hidden-surface removal algorithms. The painter's algorithm creates images by sorting the polygons within the image by their depth and drawing each polygon in order from the farthest to the closest.
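The far-to-near ordering described above can be sketched in a few lines. This is only an illustration under simplifying assumptions: each polygon is reduced to a hypothetical `(name, depth)` pair with a single representative depth, and the function name `painters_order` is invented for this example. Real implementations must also deal with polygons that overlap cyclically or intersect.

```python
from typing import List, Tuple

# Assumption: a polygon is modeled as (name, depth), where a larger depth
# means the polygon is farther from the viewer.
Polygon = Tuple[str, float]

def painters_order(polygons: List[Polygon]) -> List[str]:
    """Return polygon names in draw order: farthest first, closest last."""
    ordered = sorted(polygons, key=lambda p: p[1], reverse=True)
    return [name for name, _ in ordered]

scene = [("tree", 5.0), ("mountain", 50.0), ("house", 12.0)]
draw_order = painters_order(scene)
```

Drawing the polygons in `draw_order` lets nearer polygons paint over farther ones, which is what produces the correct occlusion in the simple case.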

In computer science, binary space partitioning (BSP) is a method for space partitioning which recursively subdivides a Euclidean space into two convex sets by using hyperplanes as partitions. This process of subdividing gives rise to a representation of objects within the space in the form of a tree data structure known as a BSP tree.
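As a rough sketch of the recursive subdivision described above, the following builds a small tree over 2D points. It makes a simplifying assumption: the splitting hyperplanes are axis-aligned lines placed at the median point, which is a special case of BSP (general BSP trees allow arbitrarily oriented hyperplanes). The names `BSPNode`, `build_bsp`, and `locate` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]

@dataclass
class BSPNode:
    points: List[Point]                 # filled only for leaf nodes
    axis: int = 0                       # coordinate tested by the splitting line
    offset: float = 0.0                 # the line is {p : p[axis] == offset}
    front: Optional["BSPNode"] = None   # half-space with p[axis] >= offset
    back: Optional["BSPNode"] = None    # half-space with p[axis] < offset

def build_bsp(points: List[Point], depth: int = 0, leaf_size: int = 1) -> BSPNode:
    """Recursively split the point set into two half-spaces."""
    if len(points) <= leaf_size:
        return BSPNode(points=list(points))
    axis = depth % 2                    # alternate between x and y
    ordered = sorted(points, key=lambda p: p[axis])
    offset = ordered[len(ordered) // 2][axis]
    back = [p for p in ordered if p[axis] < offset]
    front = [p for p in ordered if p[axis] >= offset]
    if not back or not front:           # this line cannot separate the points
        return BSPNode(points=list(points))
    return BSPNode(points=[], axis=axis, offset=offset,
                   front=build_bsp(front, depth + 1, leaf_size),
                   back=build_bsp(back, depth + 1, leaf_size))

def locate(node: BSPNode, p: Point) -> BSPNode:
    """Walk from the root to the leaf whose region contains p."""
    while node.front is not None:
        node = node.front if p[node.axis] >= node.offset else node.back
    return node

sample_points = [(0.0, 0.0), (4.0, 1.0), (2.0, 3.0), (5.0, 5.0)]
tree = build_bsp(sample_points)
```

Each internal node stores one partition and each leaf stores the points that fall in its convex region, so `locate` finds the region containing a query point by testing one half-space per level.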

A depth buffer, also known as a z-buffer, is a type of data buffer used in computer graphics to represent depth information of objects in 3D space from a particular perspective. Depth buffers are an aid to rendering a scene to ensure that the correct polygons properly occlude other polygons. Z-buffering was first described in 1974 by Wolfgang Straßer in his PhD thesis on fast algorithms for rendering occluded objects. A similar solution to determining overlapping polygons is the painter's algorithm, which is capable of handling non-opaque scene elements, though it is less efficient and can produce incorrect results.
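A minimal sketch of the per-pixel depth test may help make this concrete. It assumes that fragments arrive as hypothetical `(x, y, depth, color)` tuples, that a smaller depth means closer to the viewer, and that colors are plain strings; the function name `rasterize` is invented for the example.

```python
import math
from typing import List, Tuple

# Assumption: a fragment is (x, y, depth, color), smaller depth = closer.
Fragment = Tuple[int, int, float, str]

def rasterize(fragments: List[Fragment], width: int, height: int) -> List[List[str]]:
    """Keep, for every pixel, the color of the closest fragment seen so far."""
    depth = [[math.inf] * width for _ in range(height)]
    color = [["background"] * width for _ in range(height)]
    for x, y, z, c in fragments:
        if z < depth[y][x]:      # depth test: only closer fragments win
            depth[y][x] = z
            color[y][x] = c
    return color

fragments = [(0, 0, 5.0, "red"), (0, 0, 2.0, "blue"),
             (1, 0, 3.0, "green"), (0, 0, 9.0, "grey")]
image = rasterize(fragments, width=2, height=1)
```

Unlike the painter's algorithm, the result here does not depend on the order in which fragments are submitted, because the comparison is made per pixel.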

Shadow volume is a technique used in 3D computer graphics to add shadows to a rendered scene. Shadow volumes were first proposed by Frank Crow in 1977 as the geometry describing the 3D shape of the region occluded from a light source. A shadow volume divides the virtual world in two: areas that are in shadow and areas that are not.

In 3D computer graphics, hidden-surface determination is the process of identifying what surfaces and parts of surfaces can be seen from a particular viewing angle. A hidden-surface determination algorithm is a solution to the visibility problem, which was one of the first major problems in the field of 3D computer graphics. The process of hidden-surface determination is sometimes called hiding, and such an algorithm is sometimes called a hider. When referring to line rendering it is known as hidden-line removal. Hidden-surface determination is necessary to render a scene correctly, so that one may not view features hidden behind the model itself, allowing only the naturally viewable portion of the graphic to be visible.

In 3D computer graphics, solid objects are usually modeled by polyhedra. A face of a polyhedron is a planar polygon bounded by straight line segments, called edges. Curved surfaces are usually approximated by a polygon mesh. Computer programs for line drawings of opaque objects must be able to decide which edges or which parts of the edges are hidden by an object itself or by other objects, so that those edges can be clipped during rendering. This problem is known as hidden-line removal.

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set, typically a 3D scalar field.

In computer graphics, level of detail (LOD) refers to the complexity of a 3D model representation. LOD can be decreased as the model moves away from the viewer or according to other metrics such as object importance, viewpoint-relative speed or position. LOD techniques increase the efficiency of rendering by decreasing the workload on graphics pipeline stages, usually vertex transformations. The reduced visual quality of the model is often unnoticed because of the small effect on object appearance when distant or moving fast.
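One common way to apply this idea is to pick a model variant from distance thresholds. The sketch below assumes a hypothetical `select_lod` helper and illustrative threshold values; index 0 stands for the most detailed model, and each threshold the viewer distance reaches moves one step toward a coarser model.

```python
from typing import Sequence

def select_lod(distance: float, thresholds: Sequence[float]) -> int:
    """Return the LOD index for a given viewer distance.

    0 is the most detailed model; the index grows by one for every
    threshold that the distance has reached or passed.
    """
    level = 0
    for limit in thresholds:
        if distance >= limit:
            level += 1
    return level

# Assumed thresholds, in scene units, chosen only for illustration.
lod_thresholds = [10.0, 50.0, 200.0]
near_level = select_lod(5.0, lod_thresholds)
far_level = select_lod(1000.0, lod_thresholds)
```

Other metrics mentioned above, such as object importance or viewpoint-relative speed, could be folded in by scaling `distance` before the lookup.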

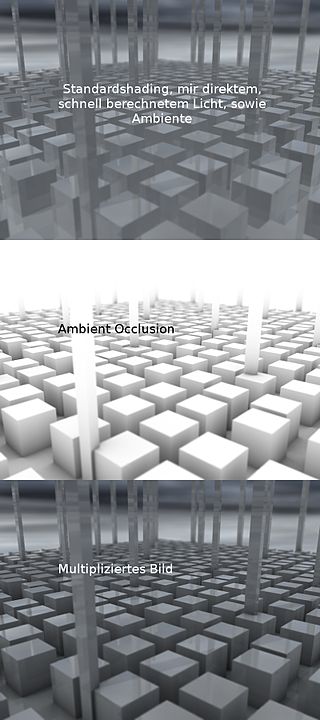

In 3D computer graphics, modeling, and animation, ambient occlusion is a shading and rendering technique used to calculate how exposed each point in a scene is to ambient lighting. For example, the interior of a tube is typically more occluded than the exposed outer surfaces, and becomes darker the deeper inside the tube one goes.

Clipping, in the context of computer graphics, is a method to selectively enable or disable rendering operations within a defined region of interest. Mathematically, clipping can be described using the terminology of constructive geometry. A rendering algorithm only draws pixels in the intersection between the clip region and the scene model. Lines and surfaces outside the view volume are removed.

In computer-generated imagery and real-time 3D computer graphics, portal rendering is an algorithm for visibility determination. For example, consider a 3D computer game environment, which may contain many polygons, only a few of which may be visible on screen at a given time. By determining which polygons are currently not visible, and not rendering those objects, significant performance improvements can be achieved.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

Umbra is a graphics software technology company founded in 2007 in Helsinki, Finland. Umbra specializes in occlusion culling and visibility solution technology, and provides middleware for video games running on Windows, Linux, iOS, PlayStation 4, Xbox One, PlayStation 3, Xbox 360, Wii U, handheld consoles, and other platforms. In 2021, Amazon acquired Umbra.

Order-independent transparency (OIT) is a class of techniques in rasterisational computer graphics for rendering transparency in a 3D scene, which do not require rendering geometry in sorted order for alpha compositing.

Computer graphics is a sub-field of computer science which studies methods for digitally synthesizing and manipulating visual content. Although the term often refers to the study of three-dimensional computer graphics, it also encompasses two-dimensional graphics and image processing.

This is a glossary of terms relating to computer graphics.

Bedrich Benes is a computer scientist and a researcher in computer graphics.

The GigaMesh Software Framework is free and open-source software for the display, editing and visualization of 3D data, typically acquired with structured light or structure from motion.

References

- S. Nirenstein, E. Blake, and J. Gain. Exact from-region visibility culling. In Proceedings of the 13th workshop on Rendering, pages 191–202. Eurographics Association, June 2002.

- Cohen-Or, D.; Chrysanthou, Y. L.; Silva, C. T.; Durand, F. (2003). "A survey of visibility for walkthrough applications". IEEE Transactions on Visualization and Computer Graphics. 9 (3): 412–431. CiteSeerX 10.1.1.148.4589. doi:10.1109/TVCG.2003.1207447.

- 3D Visibility: Analytical study and Applications, Frédo Durand, PhD thesis, Université Joseph Fourier, Grenoble, France, July 1999. This thesis is strongly related to exact visibility computations.

- Shaun Nirenstein and Edwin Blake, Hardware Accelerated Visibility Preprocessing using Adaptive Sampling, Rendering Techniques 2004: Proceedings of the 15th Eurographics Symposium on Rendering, 207–216, Norrköping, Sweden, June 2004.

- Wonka, P.; Wimmer, M.; Zhou, K.; Maierhofer, S.; Hesina, G.; Reshetov, A. (July 2006). Guided visibility sampling. Proceedings of ACM SIGGRAPH 2006. Vol. 25. pp. 494–502. doi:10.1145/1179352.1141914. ISBN 978-1595933645. S2CID 9218485.

- Gotsman, C.; Sudarsky, O.; Fayman, J. A. (October 1999). "Optimized occlusion culling using five-dimensional subdivision" (PDF). Computers & Graphics. 23 (5): 645–654. doi:10.1016/S0097-8493(99)00088-6.

- Seth Teller, Visibility Computations in Densely Occluded Polyhedral Environments (Part 2 of 3) (PhD dissertation, Berkeley, 1992)

- Jiri Bittner. Hierarchical Techniques for Visibility Computations, PhD Dissertation. Department of Computer Science and Engineering. Czech Technical University in Prague. Submitted October 2002, defended March 2003.

- Denis Haumont, Otso Mäkinen & Shaun Nirenstein (June 2005). A Low Dimensional Framework for Exact Polygon-to-Polygon Occlusion Queries. Rendering Techniques 2005: Proceedings of the 16th Eurographics Symposium on Rendering, Konstanz, Germany. pp. 211–222. CiteSeerX 10.1.1.66.6371. doi:10.2312/EGWR/EGSR05/211-222.

- Jiri Bittner; Peter Wonka & Michael Wimmer (2005). "Fast Exact From-Region Visibility in Urban Scenes" (PDF). In Proceedings of Eurographics Symposium on Rendering: 223–230. doi:10.2312/EGWR/EGSR05/223-230. S2CID 9126258.

- D. Haumont, O. Debeir & F. Sillion (September 2003). "Volumetric Cell-and-Portal Generation". Graphics Forum. 22 (3): 303–312. CiteSeerX 10.1.1.163.6834. doi:10.1111/1467-8659.00677. S2CID 14281909.

- Oliver Mattausch; Jiri Bittner; Michael Wimmer (2006). "Adaptive Visibility-Driven View Cell Construction". Proceedings of Eurographics Symposium on Rendering: 195–205. CiteSeerX 10.1.1.67.6705. doi:10.2312/EGWR/EGSR06/195-205. S2CID 17919019.

- Michiel van de Panne & A. James Stewart (June 1999). "Effective Compression Techniques for Precomputed Visibility". Eurographics Workshop on Rendering: 305–316. CiteSeerX 10.1.1.116.8940.