In mathematics, the determinant is a scalar value that is a function of the entries of a square matrix. The determinant of a matrix A is commonly denoted det(A), det A, or |A|. Its value characterizes some properties of the matrix and the linear map represented by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the linear map represented by the matrix is an isomorphism. The determinant of a product of matrices is the product of their determinants (which follows directly from the above properties).

In mathematics, Gaussian elimination, also known as row reduction, is an algorithm for solving systems of linear equations. It consists of a sequence of operations performed on the corresponding matrix of coefficients. This method can also be used to compute the rank of a matrix, the determinant of a square matrix, and the inverse of an invertible matrix. The method is named after Carl Friedrich Gauss (1777–1855). To perform row reduction on a matrix, one uses a sequence of elementary row operations to modify the matrix until the lower left-hand corner of the matrix is filled with zeros, as much as possible. There are three types of elementary row operations:

In mathematics, particularly in linear algebra, matrix multiplication is a binary operation that produces a matrix from two matrices. For matrix multiplication, the number of columns in the first matrix must be equal to the number of rows in the second matrix. The resulting matrix, known as the matrix product, has the number of rows of the first and the number of columns of the second matrix. The product of matrices A and B is denoted as AB.

In linear algebra, the Cholesky decomposition or Cholesky factorization is a decomposition of a Hermitian, positive-definite matrix into the product of a lower triangular matrix and its conjugate transpose, which is useful for efficient numerical solutions, e.g., Monte Carlo simulations. It was discovered by André-Louis Cholesky for real matrices, and posthumously published in 1924. When it is applicable, the Cholesky decomposition is roughly twice as efficient as the LU decomposition for solving systems of linear equations.

In linear algebra, the permanent of a square matrix is a function of the matrix similar to the determinant. The permanent, as well as the determinant, is a polynomial in the entries of the matrix. Both are special cases of a more general function of a matrix called the immanant.

In linear algebra, an n-by-n square matrix A is called invertible, if there exists an n-by-n square matrix B such that

In linear algebra, a minor of a matrix A is the determinant of some smaller square matrix, cut down from A by removing one or more of its rows and columns. Minors obtained by removing just one row and one column from square matrices are required for calculating matrix cofactors, which in turn are useful for computing both the determinant and inverse of square matrices. The requirement that the square matrix be smaller than the original matrix is often omitted in the definition.

In linear algebra, the Strassen algorithm, named after Volker Strassen, is an algorithm for matrix multiplication. It is faster than the standard matrix multiplication algorithm for large matrices, with a better asymptotic complexity, although the naive algorithm is often better for smaller matrices. The Strassen algorithm is slower than the fastest known algorithms for extremely large matrices, but such galactic algorithms are not useful in practice, as they are much slower for matrices of practical size. For small matrices even faster algorithms exist.

The Needleman–Wunsch algorithm is an algorithm used in bioinformatics to align protein or nucleotide sequences. It was one of the first applications of dynamic programming to compare biological sequences. The algorithm was developed by Saul B. Needleman and Christian D. Wunsch and published in 1970. The algorithm essentially divides a large problem into a series of smaller problems, and it uses the solutions to the smaller problems to find an optimal solution to the larger problem. It is also sometimes referred to as the optimal matching algorithm and the global alignment technique. The Needleman–Wunsch algorithm is still widely used for optimal global alignment, particularly when the quality of the global alignment is of the utmost importance. The algorithm assigns a score to every possible alignment, and the purpose of the algorithm is to find all possible alignments having the highest score.

In mathematics, the Smith normal form is a normal form that can be defined for any matrix with entries in a principal ideal domain (PID). The Smith normal form of a matrix is diagonal, and can be obtained from the original matrix by multiplying on the left and right by invertible square matrices. In particular, the integers are a PID, so one can always calculate the Smith normal form of an integer matrix. The Smith normal form is very useful for working with finitely generated modules over a PID, and in particular for deducing the structure of a quotient of a free module. It is named after the Irish mathematician Henry John Stephen Smith.

The Gauss–Newton algorithm is used to solve non-linear least squares problems, which is equivalent to minimizing a sum of squared function values. It is an extension of Newton's method for finding a minimum of a non-linear function. Since a sum of squares must be nonnegative, the algorithm can be viewed as using Newton's method to iteratively approximate zeroes of the components of the sum, and thus minimizing the sum. In this sense, the algorithm is also an effective method for solving overdetermined systems of equations. It has the advantage that second derivatives, which can be challenging to compute, are not required.

A logical matrix, binary matrix, relation matrix, Boolean matrix, or (0, 1)-matrix is a matrix with entries from the Boolean domain B = {0, 1}. Such a matrix can be used to represent a binary relation between a pair of finite sets. It is an important tool in combinatorial mathematics and theoretical computer science.

In numerical linear algebra, the Jacobi eigenvalue algorithm is an iterative method for the calculation of the eigenvalues and eigenvectors of a real symmetric matrix. It is named after Carl Gustav Jacob Jacobi, who first proposed the method in 1846, but only became widely used in the 1950s with the advent of computers.

Fleiss' kappa is a statistical measure for assessing the reliability of agreement between a fixed number of raters when assigning categorical ratings to a number of items or classifying items. This contrasts with other kappas such as Cohen's kappa, which only work when assessing the agreement between not more than two raters or the intra-rater reliability. The measure calculates the degree of agreement in classification over that which would be expected by chance.

In algebra, the greatest common divisor of two polynomials is a polynomial, of the highest possible degree, that is a factor of both the two original polynomials. This concept is analogous to the greatest common divisor of two integers.

In computer science, Cannon's algorithm is a distributed algorithm for matrix multiplication for two-dimensional meshes first described in 1969 by Lynn Elliot Cannon.

In numerical linear algebra, the alternating-direction implicit (ADI) method is an iterative method used to solve Sylvester matrix equations. It is a popular method for solving the large matrix equations that arise in systems theory and control, and can be formulated to construct solutions in a memory-efficient, factored form. It is also used to numerically solve parabolic and elliptic partial differential equations, and is a classic method used for modeling heat conduction and solving the diffusion equation in two or more dimensions. It is an example of an operator splitting method.

Because matrix multiplication is such a central operation in many numerical algorithms, much work has been invested in making matrix multiplication algorithms efficient. Applications of matrix multiplication in computational problems are found in many fields including scientific computing and pattern recognition and in seemingly unrelated problems such as counting the paths through a graph. Many different algorithms have been designed for multiplying matrices on different types of hardware, including parallel and distributed systems, where the computational work is spread over multiple processors.

The iterative proportional fitting procedure is the operation of finding the fitted matrix which is the closest to an initial matrix but with the row and column totals of a target matrix . The fitted matrix being of the form , where and are diagonal matrices such that has the margins of . Some algorithms can be chosen to perform biproportion. We have also the entropy maximization, information loss minimization or RAS which consists of factoring the matrix rows to match the specified row totals, then factoring its columns to match the specified column totals; each step usually disturbs the previous step’s match, so these steps are repeated in cycles, re-adjusting the rows and columns in turn, until all specified marginal totals are satisfactorily approximated. However, all algorithms give the same solution. In three- or more-dimensional cases, adjustment steps are applied for the marginals of each dimension in turn, the steps likewise repeated in cycles.

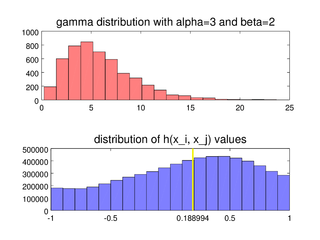

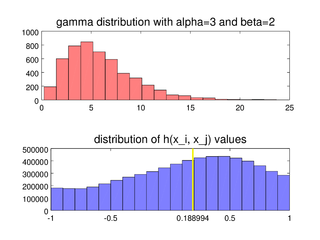

In statistics, the medcouple is a robust statistic that measures the skewness of a univariate distribution. It is defined as a scaled median difference between the left and right half of a distribution. Its robustness makes it suitable for identifying outliers in adjusted boxplots. Ordinary box plots do not fare well with skew distributions, since they label the longer unsymmetrical tails as outliers. Using the medcouple, the whiskers of a boxplot can be adjusted for skew distributions and thus have a more accurate identification of outliers for non-symmetrical distributions.