A minimum spanning tree (MST) or minimum weight spanning tree is a subset of the edges of a connected, edge-weighted undirected graph that connects all the vertices together, without any cycles and with the minimum possible total edge weight. That is, it is a spanning tree whose sum of edge weights is as small as possible. More generally, any edge-weighted undirected graph has a minimum spanning forest, which is a union of the minimum spanning trees for its connected components.

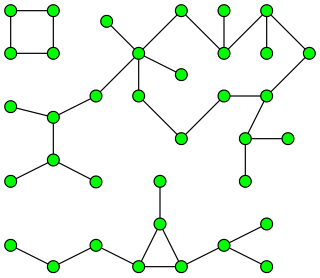

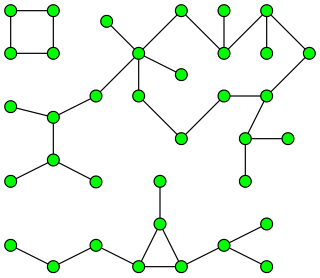

In graph theory, a tree is an undirected graph in which any two vertices are connected by exactly one path, or equivalently a connected acyclic undirected graph. A forest is an undirected graph in which any two vertices are connected by at most one path, or equivalently an acyclic undirected graph, or equivalently a disjoint union of trees.

Breadth-first search (BFS) is an algorithm for searching a tree data structure for a node that satisfies a given property. It starts at the tree root and explores all nodes at the present depth prior to moving on to the nodes at the next depth level. Extra memory, usually a queue, is needed to keep track of the child nodes that were encountered but not yet explored.

Depth-first search (DFS) is an algorithm for traversing or searching tree or graph data structures. The algorithm starts at the root node and explores as far as possible along each branch before backtracking. Extra memory, usually a stack, is needed to keep track of the nodes discovered so far along a specified branch which helps in backtracking of the graph.

In graph theory, a component of an undirected graph is a connected subgraph that is not part of any larger connected subgraph. The components of any graph partition its vertices into disjoint sets, and are the induced subgraphs of those sets. A graph that is itself connected has exactly one component, consisting of the whole graph. Components are sometimes called connected components.

In computational complexity theory, Savitch's theorem, proved by Walter Savitch in 1970, gives a relationship between deterministic and non-deterministic space complexity. It states that for any function ,

A Euclidean minimum spanning tree of a finite set of points in the Euclidean plane or higher-dimensional Euclidean space connects the points by a system of line segments with the points as endpoints, minimizing the total length of the segments. In it, any two points can reach each other along a path through the line segments. It can be found as the minimum spanning tree of a complete graph with the points as vertices and the Euclidean distances between points as edge weights.

In mathematics, loop-erased random walk is a model for a random simple path with important applications in combinatorics, physics and quantum field theory. It is intimately connected to the uniform spanning tree, a model for a random tree. See also random walk for more general treatment of this topic.

A tournament is a directed graph (digraph) obtained by assigning a direction for each edge in an undirected complete graph. That is, it is an orientation of a complete graph, or equivalently a directed graph in which every pair of distinct vertices is connected by a directed edge with any one of the two possible orientations.

In network theory, a giant component is a connected component of a given random graph that contains a significant fraction of the entire graph's vertices.

In computer science, the Hopcroft–Karp algorithm is an algorithm that takes a bipartite graph as input and produces a maximum-cardinality matching as output — a set of as many edges as possible with the property that no two edges share an endpoint. It runs in time in the worst case, where is set of edges in the graph, is set of vertices of the graph, and it is assumed that . In the case of dense graphs the time bound becomes , and for sparse random graphs it runs in time with high probability.

In computer science, a range tree is an ordered tree data structure to hold a list of points. It allows all points within a given range to be reported efficiently, and is typically used in two or higher dimensions. Range trees were introduced by Jon Louis Bentley in 1979. Similar data structures were discovered independently by Lueker, Lee and Wong, and Willard. The range tree is an alternative to the k-d tree. Compared to k-d trees, range trees offer faster query times of but worse storage of , where n is the number of points stored in the tree, d is the dimension of each point and k is the number of points reported by a given query.

In graph theory and theoretical computer science, the longest path problem is the problem of finding a simple path of maximum length in a given graph. A path is called simple if it does not have any repeated vertices; the length of a path may either be measured by its number of edges, or by the sum of the weights of its edges. In contrast to the shortest path problem, which can be solved in polynomial time in graphs without negative-weight cycles, the longest path problem is NP-hard and the decision version of the problem, which asks whether a path exists of at least some given length, is NP-complete. This means that the decision problem cannot be solved in polynomial time for arbitrary graphs unless P = NP. Stronger hardness results are also known showing that it is difficult to approximate. However, it has a linear time solution for directed acyclic graphs, which has important applications in finding the critical path in scheduling problems.

In graph theory, the planar separator theorem is a form of isoperimetric inequality for planar graphs, that states that any planar graph can be split into smaller pieces by removing a small number of vertices. Specifically, the removal of vertices from an n-vertex graph can partition the graph into disjoint subgraphs each of which has at most vertices.

In computer science and graph theory, the term color-coding refers to an algorithmic technique which is useful in the discovery of network motifs. For example, it can be used to detect a simple path of length k in a given graph. The traditional color-coding algorithm is probabilistic, but it can be derandomized without much overhead in the running time.

A top tree is a data structure based on a binary tree for unrooted dynamic trees that is used mainly for various path-related operations. It allows simple divide-and-conquer algorithms. It has since been augmented to maintain dynamically various properties of a tree such as diameter, center and median.

The expected linear time MST algorithm is a randomized algorithm for computing the minimum spanning forest of a weighted graph with no isolated vertices. It was developed by David Karger, Philip Klein, and Robert Tarjan. The algorithm relies on techniques from Borůvka's algorithm along with an algorithm for verifying a minimum spanning tree in linear time. It combines the design paradigms of divide and conquer algorithms, greedy algorithms, and randomized algorithms to achieve expected linear performance.

In computational complexity theory, a planted clique or hidden clique in an undirected graph is a clique formed from another graph by selecting a subset of vertices and adding edges between each pair of vertices in the subset. The planted clique problem is the algorithmic problem of distinguishing random graphs from graphs that have a planted clique. This is a variation of the clique problem; it may be solved in quasi-polynomial time but is conjectured not to be solvable in polynomial time for intermediate values of the clique size. The conjecture that no polynomial time solution exists is called the planted clique conjecture; it has been used as a computational hardness assumption.

Maximal entropy random walk (MERW) is a popular type of biased random walk on a graph, in which transition probabilities are chosen accordingly to the principle of maximum entropy, which says that the probability distribution which best represents the current state of knowledge is the one with largest entropy. While standard random walk chooses for every vertex uniform probability distribution among its outgoing edges, locally maximizing entropy rate, MERW maximizes it globally by assuming uniform probability distribution among all paths in a given graph.

Sidorenko's conjecture is a conjecture in the field of graph theory, posed by Alexander Sidorenko in 1986. Roughly speaking, the conjecture states that for any bipartite graph and graph on vertices with average degree , there are at least labeled copies of in , up to a small error term. Formally, it provides an intuitive inequality about graph homomorphism densities in graphons. The conjectured inequality can be interpreted as a statement that the density of copies of in a graph is asymptotically minimized by a random graph, as one would expect a fraction of possible subgraphs to be a copy of if each edge exists with probability .