In technology, response time is the time a system or functional unit takes to react to a given input.

In computing, the responsiveness of a service (how long a system takes to respond to a request for service) is measured through the response time. That service can be anything from a memory fetch, to a disk I/O, to a complex database query, or loading a full web page. Ignoring transmission time for a moment, the response time is the sum of the service time and the wait time. The service time is the time it takes to do the work requested. For a given request, the service time varies little as the workload increases: to do X amount of work it always takes X amount of time. The wait time is how long the request had to wait in a queue before being serviced. It varies from zero, when no waiting is required, to a large multiple of the service time, when many requests are already in the queue and have to be serviced first.

With basic queueing theory math [1] you can calculate how the average wait time increases as the device providing the service goes from 0% to 100% busy. As the device becomes busier, the average wait time increases in a non-linear fashion. The closer the device gets to 100% busy, the more steeply the response time rises. All of that increase comes from wait time, which is the result of the requests already in the queue that have to run first.
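The non-linear growth described above can be illustrated with a minimal sketch. It assumes the simplest textbook model, a single-server M/M/1 queue, which the text does not name. In that model the average wait time is S·ρ/(1 − ρ), where S is the service time and ρ is the utilization. The function names are hypothetical and chosen for illustration only.

```python
def mm1_wait_time(service_time: float, utilization: float) -> float:
    """Average time spent waiting in queue, assuming an M/M/1 model.

    service_time: average time to do the work itself (any time unit).
    utilization:  fraction of time the device is busy, 0 <= rho < 1.
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in the range [0, 1)")
    return service_time * utilization / (1.0 - utilization)


def mm1_response_time(service_time: float, utilization: float) -> float:
    """Response time = service time + wait time (transmission time ignored)."""
    return service_time + mm1_wait_time(service_time, utilization)


if __name__ == "__main__":
    service_ms = 10.0  # assumed service time, for illustration only
    for rho in (0.0, 0.5, 0.8, 0.9, 0.95, 0.99):
        wait = mm1_wait_time(service_ms, rho)
        total = mm1_response_time(service_ms, rho)
        print(f"busy {rho:4.0%}  wait {wait:8.1f} ms  response {total:8.1f} ms")
```

Under this assumption, a device that is 50% busy doubles the response time relative to an idle device, while the service time itself stays constant. Real devices may follow other queueing models.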

Transmission time is added to the response time when a request and the resulting response have to travel over a network, and it can be very significant. [2] Transmission time can include propagation delays due to distance (the speed of light is finite), delays due to transmission errors, and data communication bandwidth limits (especially at the last mile) that slow the transmission of the request or the reply.
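As a rough sketch of how two of these components combine, the following estimates the transmission time of one request and its reply from the propagation delay and the bandwidth limit. It deliberately ignores transmission errors and any queueing inside the network. The signal speed of 2 × 10^8 m/s (about two thirds of the speed of light, a commonly quoted figure for optical fibre) and all function names are assumptions made for illustration.

```python
# Assumed signal speed in the medium (roughly 2/3 of c, typical for fibre).
SIGNAL_SPEED_M_PER_S = 2.0e8


def propagation_delay(distance_m: float,
                      signal_speed: float = SIGNAL_SPEED_M_PER_S) -> float:
    """One-way delay in seconds caused purely by distance."""
    return distance_m / signal_speed


def serialization_delay(size_bytes: float, bandwidth_bps: float) -> float:
    """Seconds needed to push size_bytes onto a link of bandwidth_bps bits/s."""
    return size_bytes * 8 / bandwidth_bps


def transmission_time(request_bytes: float, response_bytes: float,
                      distance_m: float, bandwidth_bps: float) -> float:
    """Round-trip transmission time: two propagation legs plus both transfers."""
    return (2 * propagation_delay(distance_m)
            + serialization_delay(request_bytes, bandwidth_bps)
            + serialization_delay(response_bytes, bandwidth_bps))


if __name__ == "__main__":
    # Hypothetical case: 1 kB request, 500 kB reply, 3000 km, 10 Mbit/s link.
    t = transmission_time(1_000, 500_000, 3_000_000, 10_000_000)
    print(f"estimated transmission time: {t * 1000:.1f} ms")
```

This amount would be added to the service time and the wait time to estimate the response time seen by the requester.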

Developers can reduce the response time of a system (for end users or not) using program optimization techniques.

In real-time systems, the response time of a task or thread is defined as the time elapsed between its dispatch (the time when the task is ready to execute) and the time when it finishes its job (one dispatch). Response time is different from the worst-case execution time (WCET), which is the maximum time the task would take if it were to execute without interference. It is also different from the deadline, which is the length of time during which the task's output would be valid in the context of the specific system. It is related to the time to first byte (TTFB), which is the time between the dispatch and the time when the response starts.

In display technology, response time is the amount of time a pixel takes to change. It is measured in milliseconds (ms). Lower numbers mean faster transitions and therefore fewer visible image artifacts. Display monitors with long response times create display motion blur around moving objects, making them unsuitable for rapidly moving images. Response times are usually measured for grey-to-grey transitions, based on a VESA industry standard, from the 10% to the 90% points in the pixel response curve. [3] [4]

In fast-paced competitive games such as Counter-Strike, the response time of a display is crucial for optimal performance. Displays with a lower response time are more responsive to player input and produce fewer visual artifacts when displaying a rapidly changing image, which makes a low response time important for competitive gaming. Most modern monitors marketed for gaming have a response time of 1 ms, although it is not uncommon to see response times below 1 ms in high-end monitors, and above 1 ms in less expensive monitors or monitors with a higher resolution. [5]

In computing, a context switch is the process of storing the state of a process or thread, so that it can be restored and resume execution at a later point, and then restoring a different, previously saved, state. This allows multiple processes to share a single central processing unit (CPU), and is an essential feature of a multiprogramming or multitasking operating system. In a traditional CPU, each process (a program in execution) uses the various CPU registers to store data and hold the current state of the running process. In a multitasking operating system, however, the operating system switches between processes or threads to allow multiple processes to execute seemingly simultaneously. For every switch, the operating system must save the state of the currently running process and then load the state of the next process to run on the CPU. This sequence of operations, which stores the state of the running process and loads the state of the next one, is called a context switch.

A computer monitor is an output device that displays information in pictorial or textual form. A discrete monitor comprises a visual display, support electronics, power supply, housing, electrical connectors, and external user controls.

A liquid-crystal display (LCD) is a flat-panel display or other electronically modulated optical device that uses the light-modulating properties of liquid crystals combined with polarizers. Liquid crystals do not emit light directly but instead use a backlight or reflector to produce images in color or monochrome.

Latency, from a general point of view, is a time delay between the cause and the effect of some physical change in the system being observed. Lag, as it is known in gaming circles, refers to the latency between the input to a simulation and the visual or auditory response, often occurring because of network delay in online games.

A real-time operating system (RTOS) is an operating system (OS) for real-time computing applications that processes data and events that have critically defined time constraints. An RTOS is distinct from a time-sharing operating system, such as Unix, which manages the sharing of system resources with a scheduler, data buffers, or fixed task prioritization in a multitasking or multiprogramming environment. Processing time requirements need to be fully understood and bounded rather than just kept to a minimum. All processing must occur within the defined constraints. Real-time operating systems are event-driven and preemptive, meaning the OS can monitor the relative priority of competing tasks and make changes to the task priority. Event-driven systems switch between tasks based on their priorities, while time-sharing systems switch tasks based on clock interrupts.

Network throughput refers to the rate of message delivery over a communication channel, such as Ethernet or packet radio, in a communication network. The data that these messages contain may be delivered over physical or logical links, or through network nodes. Throughput is usually measured in bits per second, and sometimes in data packets per second or data packets per time slot.

In telecommunication and computer engineering, the queuing delay or queueing delay is the time a job waits in a queue until it can be executed. It is a key component of network delay. In a switched network, queuing delay is the time between the completion of signaling by the call originator and the arrival of a ringing signal at the call receiver. Queuing delay may be caused by delays at the originating switch, intermediate switches, or the call receiver servicing switch. In a data network, queuing delay is the sum of the delays between the request for service and the establishment of a circuit to the called data terminal equipment (DTE). In a packet-switched network, queuing delay is the sum of the delays encountered by a packet between the time of insertion into the network and the time of delivery to the address.

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

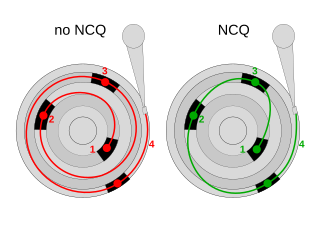

In computing, Native Command Queuing (NCQ) is an extension of the Serial ATA protocol allowing hard disk drives to internally optimize the order in which received read and write commands are executed. This can reduce the amount of unnecessary drive head movement, resulting in increased performance for workloads where multiple simultaneous read/write requests are outstanding, most often occurring in server-type applications.

In concurrent programming, a monitor is a synchronization construct that prevents threads from concurrently accessing a shared object's state and allows them to wait for the state to change. Monitors provide a mechanism for threads to temporarily give up exclusive access in order to wait for some condition to be met, before regaining exclusive access and resuming their task. A monitor consists of a mutex (lock) and at least one condition variable. A condition variable is explicitly 'signalled' when the object's state is modified, temporarily passing the mutex to another thread 'waiting' on the condition variable.
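A minimal sketch of the idea, using Python's standard `threading.Condition`, which bundles a lock with a condition variable. The `Mailbox` class is a hypothetical example, not taken from any library. Note that Python does not hand the mutex directly to the signalled thread: a woken thread must reacquire the lock itself, which is why each wait sits in a loop that rechecks the state.

```python
import threading


class Mailbox:
    """A one-slot mailbox protected by a monitor (lock + condition variable)."""

    def __init__(self):
        self._cond = threading.Condition()  # owns the underlying mutex
        self._item = None
        self._full = False

    def put(self, item):
        with self._cond:                    # acquire exclusive access
            while self._full:               # wait until the slot is empty
                self._cond.wait()           # releases the lock while waiting
            self._item = item
            self._full = True
            self._cond.notify_all()         # signal: state has changed

    def take(self):
        with self._cond:
            while not self._full:           # wait until an item is present
                self._cond.wait()
            item, self._item = self._item, None
            self._full = False
            self._cond.notify_all()
            return item


if __name__ == "__main__":
    box = Mailbox()
    received = []
    consumer = threading.Thread(
        target=lambda: received.extend(box.take() for _ in range(3)))
    consumer.start()
    for value in ("a", "b", "c"):
        box.put(value)
    consumer.join()
    print(received)
```

A single condition variable is shared by producers and consumers here for brevity. Using two (one for "not full", one for "not empty") would avoid waking threads that cannot proceed.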

The event dispatching thread (EDT) is a background thread used in Java to process events from the Abstract Window Toolkit (AWT) graphical user interface event queue. It is an example of the generic concept of event-driven programming, which is popular in many contexts other than Java, such as web browsers and web servers.

Packet loss occurs when one or more packets of data travelling across a computer network fail to reach their destination. Packet loss is either caused by errors in data transmission, typically across wireless networks, or network congestion. Packet loss is measured as a percentage of packets lost with respect to packets sent.

Tagged Command Queuing (TCQ) is a technology built into certain ATA and SCSI hard drives. It allows the operating system to send multiple read and write requests to a hard drive. ATA TCQ is not identical in function to the more efficient Native Command Queuing (NCQ) used by SATA drives. SCSI TCQ does not suffer from the same limitations as ATA TCQ.

Latency refers to a short period of delay between when an audio signal enters a system and when it emerges. Potential contributors to latency in an audio system include analog-to-digital conversion, buffering, digital signal processing, transmission time, digital-to-analog conversion and the speed of sound in the transmission medium.

In computing, computer performance is the amount of useful work accomplished by a computer system. Outside of specific contexts, computer performance is estimated in terms of accuracy, efficiency and speed of executing computer program instructions. When it comes to high computer performance, one or more of the following factors might be involved:

Display motion blur, also called HDTV blur and LCD motion blur, refers to several visual artifacts that are frequently found on modern consumer high-definition television sets and flat panel displays for computers.

Display lag is a phenomenon associated with most types of liquid-crystal displays (LCDs), such as those in smartphones and computers, and nearly all types of high-definition televisions (HDTVs). It refers to the latency, or lag, between when the signal is sent to the display and when the display starts to show that signal. This lag time has been measured as high as 68 ms, or the equivalent of about 4 frames on a 60 Hz display. Display lag is not to be confused with pixel response time, which is the amount of time it takes for a pixel to change from one brightness value to another. Currently the majority of manufacturers quote the pixel response time, but neglect to report display lag.

Web performance refers to the speed at which web pages are downloaded and displayed on the user's web browser. Web performance optimization (WPO), or website optimization, is the field of knowledge about increasing web performance.

Input lag or input latency is the amount of time that passes between sending an electrical signal and the occurrence of a corresponding action.

Time-Sensitive Networking (TSN) is a set of standards under development by the Time-Sensitive Networking task group of the IEEE 802.1 working group. The TSN task group was formed in November 2012 by renaming the existing Audio Video Bridging Task Group and continuing its work. The name changed as a result of the extension of the working area of the standardization group. The standards define mechanisms for the time-sensitive transmission of data over deterministic Ethernet networks.