Related Research Articles

Systems engineering is an interdisciplinary field of engineering and engineering management that focuses on how to design, integrate, and manage complex systems over their life cycles. At its core, systems engineering utilizes systems thinking principles to organize this body of knowledge. The individual outcome of such efforts, an engineered system, can be defined as a combination of components that work in synergy to collectively perform a useful function.

In computer science, formal methods are mathematically rigorous techniques for the specification, development, analysis, and verification of software and hardware systems. The use of formal methods for software and hardware design is motivated by the expectation that, as in other engineering disciplines, performing appropriate mathematical analysis can contribute to the reliability and robustness of a design.

In computing, stress testing can be applied to either hardware or software. It is used to determine the maximum capability of a computer system and is often used for purposes such as scaling for production use and ensuring reliability and stability. Stress tests typically involve running a large amount of resource-intensive processes until the system either crashes or nearly does

Electronic design automation (EDA), also referred to as electronic computer-aided design (ECAD), is a category of software tools for designing electronic systems such as integrated circuits and printed circuit boards. The tools work together in a design flow that chip designers use to design and analyze entire semiconductor chips. Since a modern semiconductor chip can have billions of components, EDA tools are essential for their design; this article in particular describes EDA specifically with respect to integrated circuits (ICs).

Software design is the process of conceptualizing how a software system will work before it is implemented or modified. Software design also refers to the direct result of the design process – the concepts of how the software will work which consists of both design documentation and undocumented concepts.

Computer-aided engineering (CAE) is the general usage of technology to aid in tasks related to engineering analysis. Any use of technology to solve or assist engineering issues falls under this umbrella.

In systems engineering, dependability is a measure of a system's availability, reliability, maintainability, and in some cases, other characteristics such as durability, safety and security. In real-time computing, dependability is the ability to provide services that can be trusted within a time-period. The service guarantees must hold even when the system is subject to attacks or natural failures.

In software project management, software testing, and software engineering, verification and validation is the process of checking that a software engineer system meets specifications and requirements so that it fulfills its intended purpose. It may also be referred to as software quality control. It is normally the responsibility of software testers as part of the software development lifecycle. In simple terms, software verification is: "Assuming we should build X, does our software achieve its goals without any bugs or gaps?" On the other hand, software validation is: "Was X what we should have built? Does X meet the high-level requirements?"

Design for Six Sigma (DFSS) is a collection of best-practices for the development of new products and processes. It is sometimes deployed as an engineering design process or business process management method. DFSS originated at General Electric to build on the success they had with traditional Six Sigma; but instead of process improvement, DFSS was made to target new product development. It is used in many industries, like finance, marketing, basic engineering, process industries, waste management, and electronics. It is based on the use of statistical tools like linear regression and enables empirical research similar to that performed in other fields, such as social science. While the tools and order used in Six Sigma require a process to be in place and functioning, DFSS has the objective of determining the needs of customers and the business, and driving those needs into the product solution so created. It is used for product or process design in contrast with process improvement. Measurement is the most important part of most Six Sigma or DFSS tools, but whereas in Six Sigma measurements are made from an existing process, DFSS focuses on gaining a deep insight into customer needs and using these to inform every design decision and trade-off.

System testing, a.k.a. end-to-end (E2E) testing, is testing conducted on a complete software system.

Reliability engineering is a sub-discipline of systems engineering that emphasizes the ability of equipment to function without failure. Reliability is defined as the probability that a product, system, or service will perform its intended function adequately for a specified period of time, OR will operate in a defined environment without failure. Reliability is closely related to availability, which is typically described as the ability of a component or system to function at a specified moment or interval of time.

Fault tolerance is the ability of a system to maintain proper operation despite failures or faults in one or more of its components. This capability is essential for high-availability, mission-critical, or even life-critical systems.

Mutation testing is used to design new software tests and evaluate the quality of existing software tests. Mutation testing involves modifying a program in small ways. Each mutated version is called a mutant and tests detect and reject mutants by causing the behaviour of the original version to differ from the mutant. This is called killing the mutant. Test suites are measured by the percentage of mutants that they kill. New tests can be designed to kill additional mutants. Mutants are based on well-defined mutation operators that either mimic typical programming errors or force the creation of valuable tests. The purpose is to help the tester develop effective tests or locate weaknesses in the test data used for the program or in sections of the code that are seldom or never accessed during execution. Mutation testing is a form of white-box testing.

PLECS is a software tool for system-level simulations of electrical circuits developed by Plexim. It is especially designed for power electronics but can be used for any electrical network. PLECS includes the possibility to model controls and different physical domains besides the electrical system.

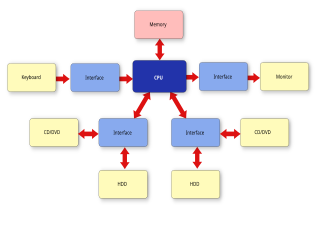

A system architecture is the conceptual model that defines the structure, behavior, and views of a system. An architecture description is a formal description and representation of a system, organized in a way that supports reasoning about the structures and behaviors of the system.

In computer science, fault injection is a testing technique for understanding how computing systems behave when stressed in unusual ways. This can be achieved using physical- or software-based means, or using a hybrid approach. Widely studied physical fault injections include the application of high voltages, extreme temperatures and electromagnetic pulses on electronic components, such as computer memory and central processing units. By exposing components to conditions beyond their intended operating limits, computing systems can be coerced into mis-executing instructions and corrupting critical data.

Verification and validation are independent procedures that are used together for checking that a product, service, or system meets requirements and specifications and that it fulfills its intended purpose. These are critical components of a quality management system such as ISO 9000. The words "verification" and "validation" are sometimes preceded with "independent", indicating that the verification and validation is to be performed by a disinterested third party. "Independent verification and validation" can be abbreviated as "IV&V".

The Oulu University Secure Programming Group (OUSPG) is a research group at the University of Oulu that studies, evaluates and develops methods of implementing and testing application and system software in order to prevent, discover and eliminate implementation level security vulnerabilities in a pro-active fashion. The focus is on implementation level security issues and software security testing.

In computer science, robustness is the ability of a computer system to cope with errors during execution and cope with erroneous input. Robustness can encompass many areas of computer science, such as robust programming, robust machine learning, and Robust Security Network. Formal techniques, such as fuzz testing, are essential to showing robustness since this type of testing involves invalid or unexpected inputs. Alternatively, fault injection can be used to test robustness. Various commercial products perform robustness testing of software analysis.

Chaos engineering is the discipline of experimenting on a system in order to build confidence in the system's capability to withstand turbulent conditions in production.

References

- ↑ "Standard Glossary of Software Engineering Terminology (ANSI)". The Institute of Electrical and Electronics Engineers Inc. 1991.

- ↑ Kropp, Koopman, Siewiorek. 1998. Automated Robustness Testing of Off-the_Shelf Software Components. Proceedings of FTCS'98. http://www.ece.cmu.edu/~koopman/ballista/ftcs98/ftcs98.pdf

- ↑ Kaksonen, Rauli. 2001. A Functional Method for Assessing Protocol Implementation Security (Licentiate thesis). Espoo. Technical Research Centre of Finland, VTT Publications 448. 128 p. + app. 15 p. ISBN 951-38-5873-1 (soft back ed.) ISBN 951-38-5874-X (on-line ed.). https://www.ee.oulu.fi/research/ouspg/PROTOS_VTT2001-functional

- ↑ Moradi, Mehrdad; Van Acker, Bert; Vanherpen, Ken; Denil, Joachim (2019). "Model-Implemented Hybrid Fault Injection for Simulink (Tool Demonstrations)". In Chamberlain, Roger; Taha, Walid; Törngren, Martin (eds.). Cyber Physical Systems. Model-Based Design. Lecture Notes in Computer Science. Vol. 11615. Cham: Springer International Publishing. pp. 71–90. doi:10.1007/978-3-030-23703-5_4. ISBN 978-3-030-23703-5. S2CID 195769468.

- ↑ "Optimizing fault injection in FMI co-simulation through sensitivity partitioning | Proceedings of the 2019 Summer Simulation Conference". dl.acm.org: 1–12. 22 July 2019. Retrieved 2020-06-15.

- ↑ Moradi, Mehrdad, Bentley James Oakes, Mustafa Saraoglu, Andrey Morozov, Klaus Janschek, and Joachim Denil. "Exploring Fault Parameter Space Using Reinforcement Learning-based Fault Injection." (2020).