Related Research Articles

Personal information management (PIM) is the study and implementation of the activities that people perform in order to acquire or create, store, organize, maintain, retrieve, and use informational items such as documents, web pages, and email messages for everyday use to complete tasks and fulfill a person's various roles; it is information management with intrapersonal scope.

Computer-supported cooperative work (CSCW) is the study of how people utilize technology collaboratively, often towards a shared goal. CSCW addresses how computer systems can support collaborative activity and coordination. More specifically, the field of CSCW seeks to analyze and draw connections between currently understood human psychological and social behaviors and available collaborative tools, or groupware. Often the goal of CSCW is to help promote and utilize technology in a collaborative way, and help create new tools to succeed in that goal. The connections it draws between behavior and tools allow CSCW research to inform future design patterns or assist in the development of entirely new tools.

In human–computer interaction, WIMP stands for "windows, icons, menus, pointer", denoting a style of interaction using these elements of the user interface. Other expansions are sometimes used, such as substituting "mouse" and "mice" for menus, or "pull-down menu" and "pointing" for pointer.

Gesture recognition is a topic in computer science and language technology with the goal of interpreting human gestures via mathematical algorithms. It is a subdiscipline of computer vision. Gestures can originate from any bodily motion or state, but commonly originate from the face or hand. Areas of focus in the field include emotion recognition from the face and hand gesture recognition, since both are forms of expression. Users can make simple gestures to control or interact with devices without physically touching them. Many approaches use cameras and computer vision algorithms to interpret sign language; however, the identification and recognition of posture, gait, proxemics, and human behaviors are also the subject of gesture recognition techniques. Gesture recognition can be seen as a way for computers to begin to understand human body language, thus building a better bridge between machines and humans than older text user interfaces or even GUIs, which still limit the majority of input to keyboard and mouse. It allows people to interact naturally without any mechanical devices.

Ben Shneiderman is an American computer scientist and a Distinguished University Professor in the University of Maryland Department of Computer Science, which is part of the University of Maryland College of Computer, Mathematical, and Natural Sciences at the University of Maryland, College Park. He was the founding director (1983–2000) of the University of Maryland Human-Computer Interaction Lab. He conducted fundamental research in the field of human–computer interaction, developing new ideas, methods, and tools such as the direct manipulation interface and his eight rules of design.

In the field of human–computer interaction, a Wizard of Oz experiment is a research experiment in which subjects interact with a computer system that subjects believe to be autonomous, but which is actually being operated or partially operated by an unseen human being.

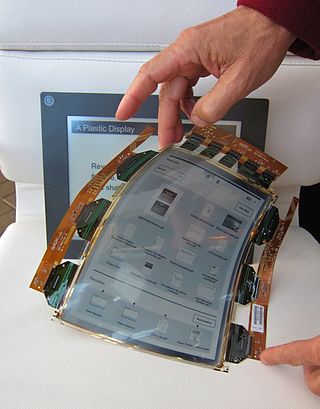

A flexible display or rollable display is an electronic visual display which is flexible in nature, as opposed to the traditional flat screen displays used in most electronic devices. In recent years, numerous consumer electronics manufacturers have shown growing interest in applying this display technology in e-readers, mobile phones and other consumer electronics. Such screens can be rolled up like a scroll without the image or text being distorted. Technologies involved in building a rollable display include electronic ink, Gyricon, Organic LCD, and OLED.

In computing, post-WIMP comprises work on user interfaces, mostly graphical user interfaces, which attempt to go beyond the paradigm of windows, icons, menus and a pointing device, i.e. WIMP interfaces.

A projection augmented model (PA model) is an element sometimes employed in virtual reality systems. It consists of a physical three-dimensional model onto which a computer image is projected to create a realistic-looking object. Importantly, the physical model has the same geometric shape as the object that the PA model depicts.

In human–computer interaction, an organic user interface (OUI) is defined as a user interface with a non-flat display. After Engelbart and Sutherland's graphical user interface (GUI), which was based on the cathode ray tube (CRT), and Kay and Weiser's ubiquitous computing, which is based on the flat panel liquid-crystal display (LCD), OUI represents one possible third wave of display interaction paradigms, pertaining to multi-shaped and flexible displays. In an OUI, the display surface is always the focus of interaction, and may actively or passively change shape upon analog inputs. These inputs are provided through direct physical gestures, rather than through indirect point-and-click control. Note that the term "Organic" in OUI was derived from organic architecture, referring to the adoption of natural form to design a better fit with human ecology. The term also alludes to the use of organic electronics for this purpose.

The DiamondTouch table is a multi-touch, interactive PC interface product from Circle Twelve Inc. It is a human interface device that has the capability of allowing multiple people to interact simultaneously while identifying which person is touching where. The technology was originally developed at Mitsubishi Electric Research Laboratories (MERL) in 2001 and later licensed to Circle Twelve Inc in 2008. The DiamondTouch table is used to facilitate face-to-face collaboration, brainstorming, and decision-making, and users include construction management company Parsons Brinckerhoff, the Methodist Hospital, and the US National Geospatial-Intelligence Agency (NGA).

Ed Huai-Hsin Chi is a Taiwanese American computer scientist and research scientist at Google, known for his early work in applying the theory of information scent to predict usability of websites.

The Human Media Lab (HML) is a research laboratory in Human-Computer Interaction at Queen's University's School of Computing in Kingston, Ontario. Its goals are to advance user interface design by creating and empirically evaluating disruptive new user interface technologies, and to educate graduate students in this process. The Human Media Lab was founded in 2000 by Prof. Roel Vertegaal and employs an average of 12 graduate students.

Alice Jane Bernheim Brush is an American computer scientist known for her research in human-computer interaction, ubiquitous computing and computer-supported cooperative work (CSCW). She is particularly known for her research studying and building technology for homes, as well as her expertise in conducting field studies of technology. She was the Co-Chair of CRA-W from 2014 to 2017.

Animal–Computer Interaction (ACI) is a field of research for the design and use of technology with, for and by animals, covering different kinds of animals, including wildlife, zoo, and domesticated animals, in different roles. It emerged from, and was heavily influenced by, the discipline of human–computer interaction (HCI). As the field expanded, it has become increasingly multi-disciplinary, incorporating techniques and research from disciplines such as artificial intelligence (AI), requirements engineering (RE), and veterinary science.

Jacob O. Wobbrock is a Professor in the University of Washington Information School and, by courtesy, in the Paul G. Allen School of Computer Science & Engineering at the University of Washington. He is Director of the ACE Lab, founding Co-Director of the CREATE center, and a founding member of the DUB Group and the MHCI+D degree program.

Wendy Elizabeth Mackay is a Canadian researcher specializing in human-computer interaction. She has served in all of the roles on the SIGCHI committee, including Chair. She is a member of the CHI Academy and a recipient of a European Research Council Advanced grant. She was a visiting professor at Stanford University between 2010 and 2012, and received the ACM SIGCHI Lifetime Service Award in 2014.

Yves Guiard is a French cognitive neuroscientist and researcher best known for his work in human laterality and stimulus-response compatibility in the field of human-computer interaction. He is the director of research at the French National Center for Scientific Research and has been a member of the CHI Academy since 2016. He is also an associate editor of ACM Transactions on Computer-Human Interaction and a member of the advisory council of the International Association for the Study of Attention and Performance.

Andrew Cockburn is currently working as a Professor in the Department of Computer Science and Software Engineering at the University of Canterbury in Christchurch, New Zealand. He is in charge of the Human Computer Interactions Lab where he conducts research focused on designing and testing user interfaces that integrate with inherent human factors.

Shumin Zhai is an American-Canadian-Chinese human–computer interaction (HCI) research scientist and inventor. He is known for his research on input devices and interaction methods, swipe-gesture-based touchscreen keyboards, eye-tracking interfaces, and models of human performance in human-computer interaction. His studies have contributed to both foundational models and understandings of HCI and practical user interface designs and flagship products. He previously worked at IBM, where he invented the ShapeWriter text entry method for smartphones, which is a predecessor to the modern Swype keyboard. Dr. Zhai's publications have won the ACM UIST Lasting Impact Award and the IEEE Computer Society Best Paper Award, among others. Dr. Zhai is currently a Principal Scientist at Google, where he leads and directs research, design, and development of human-device input methods and haptics systems.
