Related Research Articles

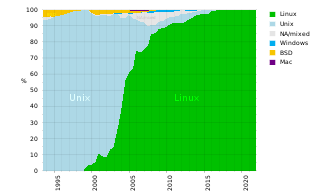

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2022, so-called exascale supercomputers have existed, which can perform over 10^18 FLOPS. For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10^11) to tens of teraFLOPS (10^13). Since November 2017, all of the world's fastest 500 supercomputers have run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.
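To make the scale of these figures concrete, the following minimal Python sketch compares an exascale machine (10^18 FLOPS) with the desktop range quoted above (10^11 to 10^13 FLOPS). The constant and function names are illustrative, and the figures are order-of-magnitude values rather than measurements of any particular system.

```python
# Order-of-magnitude figures from the paragraph above; real machines vary.
EXASCALE_FLOPS = 10**18      # one exaFLOPS
DESKTOP_LOW_FLOPS = 10**11   # hundreds of gigaFLOPS
DESKTOP_HIGH_FLOPS = 10**13  # tens of teraFLOPS


def speedup(fast_flops: int, slow_flops: int) -> float:
    """Return how many times faster the first machine is than the second."""
    return fast_flops / slow_flops


def seconds_to_days(seconds: float) -> float:
    """Convert a duration in seconds to days."""
    return seconds / 86_400


if __name__ == "__main__":
    for label, flops in (("low-end", DESKTOP_LOW_FLOPS),
                         ("high-end", DESKTOP_HIGH_FLOPS)):
        ratio = speedup(EXASCALE_FLOPS, flops)
        # Work done by the exascale machine in one second takes `ratio`
        # seconds on the desktop, assuming perfect scaling.
        days = seconds_to_days(ratio)
        print(f"{label} desktop: {ratio:.0e}x slower, "
              f"about {days:.1f} days per exascale-second")
```

Under these assumptions, one second of exascale computation corresponds to roughly a day on a high-end desktop and several months on a low-end one.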

UNICOS is a range of Unix and later Linux operating system (OS) variants developed by Cray for its supercomputers. UNICOS is the successor of the Cray Operating System (COS). It provides network clustering and source code compatibility layers for some other Unixes. UNICOS was originally introduced in 1985 with the Cray-2 system and later ported to other Cray models. The original UNICOS was based on UNIX System V Release 2, and had many Berkeley Software Distribution (BSD) features added to it.

nCUBE was a series of parallel computers from the company of the same name. Early generations of the hardware used a custom microprocessor. With its final generations of servers, nCUBE no longer designed custom microprocessors for its machines, but used server-class chips manufactured by a third party in massively parallel hardware deployments, primarily for the purposes of on-demand video.

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit central processing units (CPU) and arithmetic logic units (ALU) are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer.
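As a rough illustration of what a 64-bit width implies, the sketch below computes the value ranges of 64-bit integers and the size of a full 64-bit byte-addressed space. The names are illustrative. The address-space figure assumes that all 64 address bits are usable, which many real processors do not implement.

```python
BITS = 64

# Ranges of 64-bit integers (two's complement for the signed case).
UNSIGNED_MAX = 2**BITS - 1
SIGNED_MIN = -(2**(BITS - 1))
SIGNED_MAX = 2**(BITS - 1) - 1

# Assumption: byte addressing with all 64 address bits in use.
ADDRESSABLE_BYTES = 2**BITS


def wrap_unsigned(value: int, bits: int = BITS) -> int:
    """Reduce an arbitrary Python integer to an unsigned `bits`-wide value,
    mimicking the wraparound of a fixed-width register."""
    return value & ((1 << bits) - 1)


if __name__ == "__main__":
    print("unsigned max:", UNSIGNED_MAX)
    print("signed range:", SIGNED_MIN, "to", SIGNED_MAX)
    print("address space in EiB:", ADDRESSABLE_BYTES // 2**60)
    print("max + 1 wraps to:", wrap_unsigned(UNSIGNED_MAX + 1))
```

Python integers are arbitrary precision, so the masking in `wrap_unsigned` is what stands in for the fixed register width here.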

OSF/1 is a variant of the Unix operating system developed by the Open Software Foundation during the late 1980s and early 1990s. OSF/1 is one of the first operating systems to have used the Mach kernel developed at Carnegie Mellon University, and is probably best known as the native Unix operating system for DEC Alpha architecture systems.

In DOS memory management, extended memory refers to memory above the first megabyte (2^20 bytes) of address space in an IBM PC or compatible with an 80286 or later processor. The term is mainly used under the DOS and Windows operating systems. DOS programs, running in real mode or virtual x86 mode, cannot directly access this memory, but are able to do so through an application programming interface (API) called the Extended Memory Specification (XMS). This API is implemented by a driver (such as HIMEM.SYS) or the operating system kernel, which takes care of memory management and copying memory between conventional and extended memory, by temporarily switching the processor into protected mode. In this context, the term "extended memory" may refer to either the whole of the extended memory or only the portion available through this API.
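The one-megabyte boundary can be expressed as simple address arithmetic. The sketch below is only an illustration of that boundary, with hypothetical helper names. It does not model the XMS API or HIMEM.SYS. The 16 MiB example relies on the 80286 having a 24-bit physical address space, a detail not stated in the paragraph above.

```python
ONE_MEGABYTE = 2**20  # 1,048,576 bytes: the boundary described above


def is_extended(address: int) -> bool:
    """Return True if a physical address lies above the first megabyte."""
    return address >= ONE_MEGABYTE


def extended_bytes(total_bytes: int) -> int:
    """Return how much of `total_bytes` of address space lies above
    the first megabyte (zero if the space is one megabyte or less)."""
    return max(0, total_bytes - ONE_MEGABYTE)


if __name__ == "__main__":
    print(is_extended(0x0FFFFF))  # last byte of the first megabyte
    print(is_extended(0x100000))  # first byte above it
    # An 80286 addresses 2**24 bytes (16 MiB) of physical memory.
    print(extended_bytes(2**24) // ONE_MEGABYTE, "MiB above the boundary")
```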

ASCI Red was the first computer built under the Accelerated Strategic Computing Initiative (ASCI), the supercomputing initiative of the United States government created to help the maintenance of the United States nuclear arsenal after the 1992 moratorium on nuclear testing.

Red Storm was a supercomputer architecture designed for the US Department of Energy’s National Nuclear Security Administration Advanced Simulation and Computing Program. Cray Inc. developed it in 2004 based on the contracted architectural specifications provided by Sandia National Laboratories. The architecture was later commercially produced as the Cray XT3.

The Cray XT3, also known by codename Red Storm, is a distributed memory massively parallel MIMD supercomputer designed by Cray Inc. Cray developed it in collaboration with Sandia National Laboratories and delivered it to Sandia in 2004. The XT3 derives much of its architecture from the previous Cray T3E system, and also from the Intel ASCI Red supercomputer.

The Intel Paragon is a discontinued series of massively parallel supercomputers that was produced by Intel in the 1990s. The Paragon XP/S is a productized version of the experimental Touchstone Delta system that was built at Caltech, launched in 1992. The Paragon superseded Intel's earlier iPSC/860 system, to which it is closely related.

The Intel Personal SuperComputer was a product line of parallel computers in the 1980s and 1990s. The iPSC/1 was superseded by the Intel iPSC/2, and then the Intel iPSC/860.

The Cray CX1 is a deskside workstation designed by Cray Inc., based on the x86-64 processor architecture. It was launched on September 16, 2008, and was discontinued in early 2012. It comprises a single chassis blade server design that supports a maximum of eight modular single-width blades, giving up to 96 processor cores. Computational load can be run independently on each blade and/or combined using clustering techniques.

Locus Computing Corporation was formed in 1982 by Gerald J. Popek, Charles S. Kline and Gregory I. Thiel to commercialize the technologies developed for the LOCUS distributed operating system at UCLA. Locus was notable for commercializing single-system image software and producing the Merge package which allowed the use of DOS and Windows 3.1 software on Unix systems.

The National Center for Computational Sciences (NCCS) is a United States Department of Energy (DOE) Leadership Computing Facility that houses the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility charged with helping researchers solve challenging scientific problems of global interest with a combination of leading high-performance computing (HPC) resources and international expertise in scientific computing.

Intel oneAPI Math Kernel Library, formerly known as Intel Math Kernel Library, is a library of optimized math routines for science, engineering, and financial applications. Core math functions include BLAS, LAPACK, ScaLAPACK, sparse solvers, fast Fourier transforms, and vector math.

Portals is a low-level network API for high-performance networking on high-performance computing systems developed by Sandia National Laboratories and the University of New Mexico. Portals is currently the lowest-level network programming interface on the commercially successful XT line of supercomputers from Cray.

A lightweight kernel (LWK) operating system is one used in a large computer with many processor cores, termed a parallel computer.

Compute Node Kernel (CNK) is the node level operating system for the IBM Blue Gene series of supercomputers.

Catamount is an operating system for supercomputers.

A supercomputer operating system is an operating system intended for supercomputers. Since the end of the 20th century, supercomputer operating systems have undergone major transformations, as fundamental changes have occurred in supercomputer architecture. While early operating systems were custom tailored to each supercomputer to gain speed, the trend has been moving away from in-house operating systems and toward some form of Linux, which ran all the supercomputers on the TOP500 list as of November 2017. In 2021, the top 10 computers ran, for instance, Red Hat Enterprise Linux (RHEL), a variant of it, or another Linux distribution such as Ubuntu.
