In digital image processing, sub-pixel resolution can be obtained in images constructed from sources with information exceeding the nominal pixel resolution of said images.[ citation needed ]

In digital image processing, sub-pixel resolution can be obtained in images constructed from sources with information exceeding the nominal pixel resolution of said images.[ citation needed ]

| | This section needs expansion. You can help by adding to it. (January 2011) |

For example, if the image of a ship of length 50 metres (160 ft), viewed side-on, is 500 pixels long, the nominal resolution (pixel size) on the side of the ship facing the camera is 0.1 metres (3.9 in). Now sub-pixel resolution of well resolved features can measure ship movements which are an order of magnitude (10×) smaller. Movement is specifically mentioned here because measuring absolute positions requires an accurate lens model and known reference points within the image to achieve sub-pixel position accuracy. Small movements can however be measured (down to 1 cm) with simple calibration procedures.[ citation needed ] Specific fit functions often suffer specific bias with respect to image pixel boundaries. Users should therefore take care to avoid these "pixel locking" (or "peak locking") effects. [1]

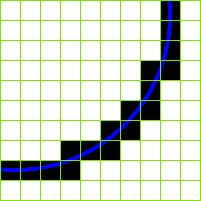

Whether features in a digital image are sharp enough to achieve sub-pixel resolution can be quantified by measuring the point spread function (PSF) of an isolated point in the image. If the image does not contain isolated points, similar methods can be applied to edges in the image. It is also important when attempting sub-pixel resolution to keep image noise to a minimum. This, in the case of a stationary scene, can be measured from a time series of images. Appropriate pixel averaging, through both time (for stationary images) and space (for uniform regions of the image) is often used to prepare the image for sub-pixel resolution measurements.[ citation needed ]