Digital image transformations

Filtering

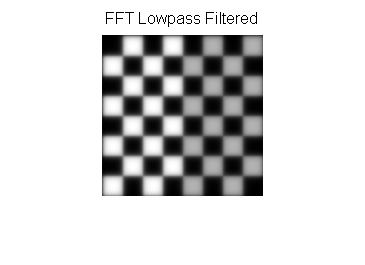

Digital filters are used to blur and sharpen digital images. Filtering can be performed by:

- convolution with specifically designed kernels (filter array) in the spatial domain [39]

- masking specific frequency regions in the frequency (Fourier) domain
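The spatial method can be sketched in a few lines of pure Python. This is a minimal illustration, not a library API: it assumes a grayscale image stored as a list of rows, treats pixels outside the image as 0, and the names `convolve2d`, `BOX_BLUR`, and `LAPLACIAN` are hypothetical. The 3×3 averaging kernel blurs (lowpass), while the zero-sum Laplacian kernel responds only to intensity changes (highpass).

```python
# Sketch: spatial-domain filtering by 2-D convolution (pure Python).
# Assumption: grayscale image as a list of rows; pixels outside the image count as 0.

BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]        # 3x3 average: lowpass (blur)
LAPLACIAN = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]    # entries sum to 0: highpass


def convolve2d(image, kernel):
    """Convolve image with kernel; the output has the same size as image."""
    rows, cols = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ch, cw = kh // 2, kw // 2                     # kernel centre
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    # kernel entry (i, j) pairs with the mirrored image offset,
                    # which makes this a convolution rather than a correlation
                    rr, cc = r + ch - i, c + cw - j
                    if 0 <= rr < rows and 0 <= cc < cols:
                        acc += kernel[i][j] * image[rr][cc]
            out[r][c] = acc
    return out


impulse = [[0] * 5 for _ in range(5)]
impulse[2][2] = 9
blurred = convolve2d(impulse, BOX_BLUR)   # the 9 is spread evenly over a 3x3 patch
edges = convolve2d(impulse, LAPLACIAN)    # nonzero only around the spike
```

Both kernels above are symmetric, so flipping makes no difference for them; the flip matters only for asymmetric kernels.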

The following examples show both methods: [40]

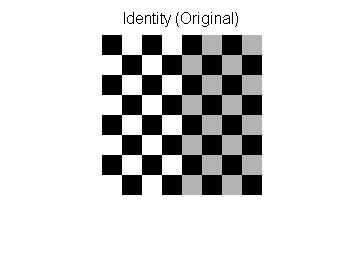

| Filter type | Kernel or mask | Example |
|---|---|---|
| Original Image |  |  |
| Spatial Lowpass |  |  |
| Spatial Highpass |  |  |
| Fourier Representation | Pseudo-code: `image = checkerboard`; `F = Fourier Transform of image`; Show Image: `log(1 + Absolute Value(F))` |  |
| Fourier Lowpass |  |  |
| Fourier Highpass |  |  |
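The Fourier-domain method can be sketched in pure Python as well. This is a minimal illustration under stated assumptions: a small grayscale image, a naive discrete Fourier transform (practical code would use an FFT library), and an ideal circular mask of hypothetical `radius` around the zero frequency. `dft2` and `fourier_filter` are illustrative names. A lowpass keeps the frequencies inside the circle and a highpass keeps those outside; `log(1 + |F|)` is the display used in the table's pseudo-code.

```python
import cmath
import math


def dft2(img, inverse=False):
    """Naive 2-D discrete Fourier transform; O((rows*cols)^2), tiny images only."""
    rows, cols = len(img), len(img[0])
    sign = 1 if inverse else -1
    out = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            total = 0j
            for x in range(rows):
                for y in range(cols):
                    phase = sign * 2 * cmath.pi * (u * x / rows + v * y / cols)
                    total += img[x][y] * cmath.exp(1j * phase)
            out[u][v] = total / (rows * cols) if inverse else total
    return out


def _is_low(u, v, rows, cols, radius):
    """True if frequency (u, v) lies within `radius` of DC (indices wrap around)."""
    fu = u if u <= rows // 2 else u - rows
    fv = v if v <= cols // 2 else v - cols
    return fu * fu + fv * fv <= radius * radius


def fourier_filter(img, radius, keep_low=True):
    """Ideal lowpass (keep_low=True) or highpass mask applied in the Fourier domain."""
    rows, cols = len(img), len(img[0])
    F = dft2(img)
    for u in range(rows):
        for v in range(cols):
            if _is_low(u, v, rows, cols, radius) != keep_low:
                F[u][v] = 0j                      # mask out this frequency
    return [[z.real for z in row] for row in dft2(F, inverse=True)]


checker = [[(x + y) % 2 for y in range(4)] for x in range(4)]
spectrum = [[math.log1p(abs(z)) for z in row] for row in dft2(checker)]  # log(1 + |F|)
low = fourier_filter(checker, radius=1)                   # about 0.5 everywhere
high = fourier_filter(checker, radius=1, keep_low=False)  # about checker - 0.5
```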

Image padding in Fourier domain filtering

Images are typically padded before being transformed to Fourier space. The highpass-filtered images below illustrate the consequences of different padding techniques:

| Zero padded | Repeated edge padded |
|---|---|
|  |  |

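Notice that the zero-padded image shows extra edges after highpass filtering compared with the repeated-edge-padded image: zero padding creates an abrupt intensity step at the image border, which the highpass filter responds to as an edge.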
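The border effect can be reproduced on a featureless image. To keep the sketch short it uses a spatial 3×3 Laplacian highpass as a stand-in for a Fourier-domain filter; the names `pad` and `highpass` are hypothetical. With repeated-edge padding every output is 0, whereas zero padding produces a spurious response along the border.

```python
# Sketch: border effect of zero padding vs repeated-edge padding.
# A spatial 3x3 Laplacian highpass stands in for Fourier filtering.

LAPLACIAN = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]


def pad(img, width, mode):
    """Pad by `width` pixels on each side; mode is "zero" or "edge"."""
    rows, cols = len(img), len(img[0])
    padded = []
    for r in range(-width, rows + width):
        row = []
        for c in range(-width, cols + width):
            inside = 0 <= r < rows and 0 <= c < cols
            if mode == "zero" and not inside:
                row.append(0)
            else:
                # "edge": clamp indices so the border pixels are repeated
                row.append(img[min(max(r, 0), rows - 1)][min(max(c, 0), cols - 1)])
        padded.append(row)
    return padded


def highpass(img, mode):
    """Laplacian-filter img after padding it by one pixel with `mode`."""
    p = pad(img, 1, mode)
    rows, cols = len(img), len(img[0])
    return [[sum(LAPLACIAN[i][j] * p[r + i][c + j] for i in range(3) for j in range(3))
             for c in range(cols)]
            for r in range(rows)]


flat = [[10] * 4 for _ in range(4)]   # a featureless image: no real edges
print(highpass(flat, "zero")[0])      # [-20, -10, -10, -20]: padding step looks like an edge
print(highpass(flat, "edge")[0])      # [0, 0, 0, 0]
```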

Filtering code examples

MATLAB example for spatial domain highpass filtering.

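```matlab
img = checkerboard(20);                 % generate checkerboard test image
% ************************** SPATIAL DOMAIN ***************************
klaplace = [0 -1 0; -1 4 -1; 0 -1 0];   % Laplacian filter kernel (entries sum to 0)
% (a centre value of 5 adds the original image back, which sharpens the
%  image instead of isolating its edges)
X = conv2(img, klaplace, 'same');       % convolve test image with the 3x3
                                        % Laplacian kernel, keeping its size
figure()
imshow(X, [])                           % show Laplacian-filtered image, auto-scaled
title('Laplacian Edge Detection')
```

Affine transformations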

Affine transformations enable basic image transformations, including scaling, rotation, translation, mirroring (reflection), and shearing, as shown in the following examples: [40]

| Transformation Name | Affine Matrix | Example |
|---|---|---|
| Identity |  |  |
| Reflection |  |  |
| Scale |  |  |
| Rotate | where θ = π/6 = 30° |  |
| Shear |  |  |

To apply the affine matrix to an image, the image is converted to a matrix in which each entry corresponds to the pixel intensity at that location. Then each pixel's location can be represented as a vector indicating the coordinates of that pixel in the image, [x, y], where x and y are the row and column of a pixel in the image matrix. This allows the coordinate to be multiplied by an affine-transformation matrix, which gives the position that the pixel value will be copied to in the output image.
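The pixel-copying step described above (forward mapping) can be sketched as follows. This is a minimal illustration that follows the text's convention of (x, y) = (row, column); the name `forward_map`, the rounding of target coordinates, and dropping pixels that land outside the grid are assumptions made for the example.

```python
def forward_map(img, matrix):
    """Copy each pixel value to the position given by matrix @ [x, y].

    (x, y) = (row, column). Target coordinates are rounded; pixels that
    land outside the output grid are dropped, and output pixels that
    receive no value stay 0.
    """
    rows, cols = len(img), len(img[0])
    (a, b), (c, d) = matrix
    out = [[0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            nx, ny = round(a * x + b * y), round(c * x + d * y)
            if 0 <= nx < rows and 0 <= ny < cols:
                out[nx][ny] = img[x][y]
    return out


img = [[1, 2],
       [3, 4]]
print(forward_map(img, [[0, 1], [1, 0]]))   # [[1, 3], [2, 4]]: rows and columns swapped
```

Because each source pixel is copied to exactly one target, enlarging transformations leave unfilled gaps; practical implementations usually loop over output pixels and apply the inverse matrix instead.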

However, translation cannot be written as a 2×2 matrix acting on [x, y], because any such matrix leaves the origin (0, 0) fixed. To allow translation, 3-dimensional homogeneous coordinates are used: a third coordinate is appended and set to a non-zero constant, usually 1, so that the new coordinate is [x, y, 1]. The coordinate vector can then be multiplied by a 3×3 matrix whose extra entries hold the translation offsets; because the third coordinate is 1, these offsets are simply added to x and y.
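A minimal sketch of translation in homogeneous coordinates, assuming the column-vector convention (matrix on the left, offsets in the third column); `translation` and `apply` are hypothetical helper names.

```python
def translation(tx, ty):
    """3x3 homogeneous translation matrix (column-vector convention)."""
    return [[1, 0, tx],
            [0, 1, ty],
            [0, 0, 1]]


def apply(matrix, point):
    """Append 1 to (x, y), multiply by the 3x3 matrix, drop the third entry."""
    v = (point[0], point[1], 1)
    x, y, w = (sum(matrix[i][k] * v[k] for k in range(3)) for i in range(3))
    return (x / w, y / w)      # w stays 1 for affine matrices


print(apply(translation(3, -2), (1, 1)))   # (4.0, -1.0)
```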

Because matrix multiplication is associative, multiple affine transformations can be combined into a single affine transformation by multiplying their matrices together (when the matrices act on column vectors, the matrix of the first transformation is the rightmost factor). The resulting single matrix, applied to a point vector [x, y, 1], gives the same result as applying all of the individual transformations to it in sequence.
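A small check of this property, assuming column vectors; the scale and shift matrices are illustrative values, and `matmul`/`apply` are hypothetical helpers. Note that reversing the factor order gives a different matrix, because matrix multiplication is associative but not commutative.

```python
def matmul(A, B):
    """Product of two 3x3 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply(M, point):
    """Apply a 3x3 affine matrix to (x, y) using homogeneous [x, y, 1]."""
    v = (point[0], point[1], 1)
    return tuple(sum(M[i][k] * v[k] for k in range(3)) for i in range(2))


scale = [[2, 0, 0], [0, 2, 0], [0, 0, 1]]   # scale by 2
shift = [[1, 0, 5], [0, 1, 1], [0, 0, 1]]   # then translate by (5, 1)

# Column vectors: the transformation applied first is the rightmost factor.
combined = matmul(shift, scale)

p = (1, 2)
print(apply(combined, p), apply(shift, apply(scale, p)))   # (7, 5) (7, 5)
```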

For example, a 2×2 matrix acting on 2-dimensional coordinates can only rotate about the origin (0, 0). With 3-dimensional homogeneous coordinates, however, one can first translate any point to (0, 0), then perform the rotation, and lastly translate the origin (0, 0) back to the original point (the inverse of the first translation). These three affine transformations can be combined into a single matrix, allowing rotation around any point in the image. [41]
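The three-step construction can be sketched as one matrix product. This is self-contained, so it repeats small hypothetical helpers (`matmul`, `apply`); the rotation sign convention (counter-clockwise in the (x, y) plane, column vectors) is an assumption, and the pivot is left unchanged by construction.

```python
import math


def matmul(A, B):
    """Product of two 3x3 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply(M, point):
    """Apply a 3x3 affine matrix to (x, y) using homogeneous [x, y, 1]."""
    v = (point[0], point[1], 1)
    return tuple(sum(M[i][k] * v[k] for k in range(3)) for i in range(2))


def rotate_about(theta, cx, cy):
    """Single matrix rotating by theta radians about (cx, cy)."""
    c, s = math.cos(theta), math.sin(theta)
    to_origin = [[1, 0, -cx], [0, 1, -cy], [0, 0, 1]]
    rotate = [[c, -s, 0], [s, c, 0], [0, 0, 1]]
    back = [[1, 0, cx], [0, 1, cy], [0, 0, 1]]
    # rightmost acts first: translate pivot to origin, rotate, translate back
    return matmul(back, matmul(rotate, to_origin))


M = rotate_about(math.pi / 2, 1, 1)
print([round(v, 9) for v in apply(M, (2, 1))])   # [1.0, 2.0]
```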

Image denoising with mathematical morphology

Mathematical morphology (MM) is a nonlinear image processing framework that analyzes shapes within images by probing local pixel neighborhoods with a small, predefined function called a structuring element. For grayscale images, MM is especially useful for denoising through dilation and erosion, primitive operators that can be combined to build more complex filters.

Suppose we have:

- A discrete grayscale image:

- A structuring element:

Here, the structuring element defines the neighborhood of relative coordinates over which local operations are computed, and its values bias the image during dilation and erosion.

- Dilation
  - Grayscale dilation is defined as:
  - For example, the dilation at position (1, 1) is calculated as:
- Erosion
  - Grayscale erosion is defined as:
  - For example, the erosion at position (1, 1) is calculated as:
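A minimal pure-Python sketch of grayscale dilation and erosion with a non-flat structuring element. It assumes the textbook convention stated in the comments (dilation takes the maximum of I(x − m, y − n) + B(m, n); erosion takes the minimum of I(x + m, y + n) − B(m, n)) and ignores offsets that fall outside the image. It does not reproduce the document's numeric example; `dilate`, `erode`, and `FLAT_3x3` are hypothetical names.

```python
# Grayscale dilation and erosion with a non-flat structuring element.
# se maps relative offsets (m, n) to additive values B(m, n).
# Convention assumed here (textbook form):
#   dilation: max over (m, n) of I(x - m, y - n) + B(m, n)
#   erosion:  min over (m, n) of I(x + m, y + n) - B(m, n)
# Offsets that fall outside the image are ignored.


def dilate(img, se):
    rows, cols = len(img), len(img[0])
    return [[max(img[x - m][y - n] + b for (m, n), b in se.items()
                 if 0 <= x - m < rows and 0 <= y - n < cols)
             for y in range(cols)]
            for x in range(rows)]


def erode(img, se):
    rows, cols = len(img), len(img[0])
    return [[min(img[x + m][y + n] - b for (m, n), b in se.items()
                 if 0 <= x + m < rows and 0 <= y + n < cols)
             for y in range(cols)]
            for x in range(rows)]


FLAT_3x3 = {(m, n): 0 for m in (-1, 0, 1) for n in (-1, 0, 1)}

spike = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]
print(dilate(spike, FLAT_3x3))   # [[9, 9, 9], [9, 9, 9], [9, 9, 9]]
print(erode(spike, FLAT_3x3))    # [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
```

With a flat element (all values 0) these reduce to a local maximum and minimum filter; non-zero values add to or subtract from the neighborhood before the maximum or minimum is taken.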

Results

After applying dilation to the image:

After applying erosion to the image:

Opening and Closing

MM operations, such as opening and closing, are composite processes that utilize both dilation and erosion to modify the structure of an image. These operations are particularly useful for tasks such as noise removal, shape smoothing, and object separation.

- Opening: This operation is performed by applying erosion to an image first, followed by dilation. The purpose of opening is to remove small objects or noise from the foreground while preserving the overall structure of larger objects. It is especially effective in situations where noise appears as isolated bright pixels or small, disconnected features.

For example, applying opening to an image with a structuring element first removes small details (through erosion) and then restores the main shapes (through dilation). Bright features smaller than the structuring element are removed, while larger objects keep roughly their original size and shape.
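A minimal sketch of opening with a flat square window, assuming out-of-image positions are simply ignored; `_rank_filter` and `opening` are hypothetical names. The isolated bright pixel disappears, while the 3×3 square, which is large enough to contain the window, is restored exactly.

```python
def _rank_filter(img, radius, pick):
    """Apply pick (min or max) over a flat (2r+1)x(2r+1) window,
    ignoring positions outside the image."""
    rows, cols = len(img), len(img[0])
    return [[pick(img[i][j]
                  for i in range(max(0, x - radius), min(rows, x + radius + 1))
                  for j in range(max(0, y - radius), min(cols, y + radius + 1)))
             for y in range(cols)]
            for x in range(rows)]


def opening(img, radius=1):
    """Erosion (min) followed by dilation (max) with the same flat window."""
    eroded = _rank_filter(img, radius, min)
    return _rank_filter(eroded, radius, max)


# A 3x3 bright square plus one isolated bright "noise" pixel at (5, 5).
img = [[0] * 7 for _ in range(7)]
for r in range(1, 4):
    for c in range(1, 4):
        img[r][c] = 10
img[5][5] = 10

opened = opening(img)
print(opened[5][5], opened[2][2])   # 0 10: noise gone, square kept
```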

- Closing: This operation applies dilation first, followed by erosion. Closing is typically used to fill small holes or gaps within objects and to connect broken parts of the foreground. It works by first expanding the boundaries of objects (through dilation) and then shrinking them back (through erosion).

For instance, applying closing to the same image would fill in small gaps within objects, such as connecting breaks in thin lines or closing small holes, while ensuring that the surrounding areas are not significantly affected.
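A matching sketch of closing, again with a flat square window and out-of-image positions ignored (the helper is repeated so the block is self-contained; the names are hypothetical). A one-pixel break in a bright line is bridged, and the dark background around the line is left unchanged.

```python
def _rank_filter(img, radius, pick):
    """Apply pick (min or max) over a flat (2r+1)x(2r+1) window,
    ignoring positions outside the image."""
    rows, cols = len(img), len(img[0])
    return [[pick(img[i][j]
                  for i in range(max(0, x - radius), min(rows, x + radius + 1))
                  for j in range(max(0, y - radius), min(cols, y + radius + 1)))
             for y in range(cols)]
            for x in range(rows)]


def closing(img, radius=1):
    """Dilation (max) followed by erosion (min) with the same flat window."""
    return _rank_filter(_rank_filter(img, radius, max), radius, min)


# A bright horizontal line with a one-pixel break at column 3.
img = [[0] * 7 for _ in range(5)]
img[2] = [10, 10, 10, 0, 10, 10, 10]

closed = closing(img)
print(closed[2])   # [10, 10, 10, 10, 10, 10, 10]: the break is bridged
```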

Both opening and closing can be visualized as ways of refining the structure of an image: opening simplifies and removes small, unnecessary details, while closing consolidates and connects objects to form more cohesive structures.

| Structuring element | Mask | Code | Example |
|---|---|---|---|
| Original Image | None | MATLAB code to read the original image: `original = imread('scene.jpg'); image = rgb2gray(original); [r, c, channel] = size(image); se = logical([1 1 1; 1 1 1; 1 1 1]); [p, q] = size(se); halfH = floor(p/2); halfW = floor(q/2); time = 3; % denoise 3 times with each method` |  |
| Dilation |  | MATLAB code for dilation: `imwrite(image, 'scene_dil.jpg'); extractmax = zeros(size(image), class(image)); for i = 1:time, dil_image = imread('scene_dil.jpg'); for col = (halfW+1):(c-halfW), for row = (halfH+1):(r-halfH), dpointD = row-halfH; dpointU = row+halfH; dpointL = col-halfW; dpointR = col+halfW; dneighbor = dil_image(dpointD:dpointU, dpointL:dpointR); filter = dneighbor(se); extractmax(row, col) = max(filter); end, end, imwrite(extractmax, 'scene_dil.jpg'); end` |  |
| Erosion |  | MATLAB code for erosion: `imwrite(image, 'scene_ero.jpg'); extractmin = zeros(size(image), class(image)); for i = 1:time, ero_image = imread('scene_ero.jpg'); for col = (halfW+1):(c-halfW), for row = (halfH+1):(r-halfH), pointDown = row-halfH; pointUp = row+halfH; pointLeft = col-halfW; pointRight = col+halfW; neighbor = ero_image(pointDown:pointUp, pointLeft:pointRight); filter = neighbor(se); extractmin(row, col) = min(filter); end, end, imwrite(extractmin, 'scene_ero.jpg'); end` |  |
| Opening |  | MATLAB code for opening (dilates the eroded result `extractmin`): `imwrite(extractmin, 'scene_opening.jpg'); extractopen = zeros(size(image), class(image)); for i = 1:time, dil_image = imread('scene_opening.jpg'); for col = (halfW+1):(c-halfW), for row = (halfH+1):(r-halfH), dpointD = row-halfH; dpointU = row+halfH; dpointL = col-halfW; dpointR = col+halfW; dneighbor = dil_image(dpointD:dpointU, dpointL:dpointR); filter = dneighbor(se); extractopen(row, col) = max(filter); end, end, imwrite(extractopen, 'scene_opening.jpg'); end` |  |
| Closing |  | MATLAB code for closing (erodes the dilated result `extractmax`): `imwrite(extractmax, 'scene_closing.jpg'); extractclose = zeros(size(image), class(image)); for i = 1:time, ero_image = imread('scene_closing.jpg'); for col = (halfW+1):(c-halfW), for row = (halfH+1):(r-halfH), dpointD = row-halfH; dpointU = row+halfH; dpointL = col-halfW; dpointR = col+halfW; dneighbor = ero_image(dpointD:dpointU, dpointL:dpointR); filter = dneighbor(se); extractclose(row, col) = min(filter); end, end, imwrite(extractclose, 'scene_closing.jpg'); end` |  |