Bandwidth is the difference between the upper and lower frequencies in a continuous band of frequencies. It is typically measured in unit of hertz.

In mathematics, the discrete Fourier transform (DFT) converts a finite sequence of equally-spaced samples of a function into a same-length sequence of equally-spaced samples of the discrete-time Fourier transform (DTFT), which is a complex-valued function of frequency. The interval at which the DTFT is sampled is the reciprocal of the duration of the input sequence. An inverse DFT (IDFT) is a Fourier series, using the DTFT samples as coefficients of complex sinusoids at the corresponding DTFT frequencies. It has the same sample-values as the original input sequence. The DFT is therefore said to be a frequency domain representation of the original input sequence. If the original sequence spans all the non-zero values of a function, its DTFT is continuous, and the DFT provides discrete samples of one cycle. If the original sequence is one cycle of a periodic function, the DFT provides all the non-zero values of one DTFT cycle.

In mathematics, Fourier analysis is the study of the way general functions may be represented or approximated by sums of simpler trigonometric functions. Fourier analysis grew from the study of Fourier series, and is named after Joseph Fourier, who showed that representing a function as a sum of trigonometric functions greatly simplifies the study of heat transfer.

The Nyquist–Shannon sampling theorem is an essential principle for digital signal processing linking the frequency range of a signal and the sample rate required to avoid a type of distortion called aliasing. The theorem states that the sample rate must be at least twice the bandwidth of the signal to avoid aliasing. In practice, it is used to select band-limiting filters to keep aliasing below an acceptable amount when an analog signal is sampled or when sample rates are changed within a digital signal processing function.

A low-pass filter is a filter that passes signals with a frequency lower than a selected cutoff frequency and attenuates signals with frequencies higher than the cutoff frequency. The exact frequency response of the filter depends on the filter design. The filter is sometimes called a high-cut filter, or treble-cut filter in audio applications. A low-pass filter is the complement of a high-pass filter.

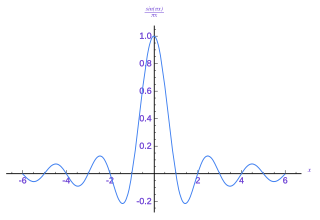

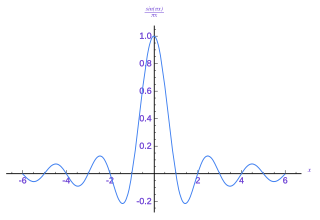

The Whittaker–Shannon interpolation formula or sinc interpolation is a method to construct a continuous-time bandlimited function from a sequence of real numbers. The formula dates back to the works of E. Borel in 1898, and E. T. Whittaker in 1915, and was cited from works of J. M. Whittaker in 1935, and in the formulation of the Nyquist–Shannon sampling theorem by Claude Shannon in 1949. It is also commonly called Shannon's interpolation formula and Whittaker's interpolation formula. E. T. Whittaker, who published it in 1915, called it the Cardinal series.

In signal processing, a sinc filter can refer to either a sinc-in-time filter whose impulse response is a sinc function and whose frequency response is rectangular, or to a sinc-in-frequency filter whose impulse response is rectangular and whose frequency response is a sinc function. Calling them according to which domain the filter resembles a sinc avoids confusion. If the domain is unspecified, sinc-in-time is often assumed, or context hopefully can infer the correct domain.

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response is of finite duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely.

In signal processing, a periodogram is an estimate of the spectral density of a signal. The term was coined by Arthur Schuster in 1898. Today, the periodogram is a component of more sophisticated methods. It is the most common tool for examining the amplitude vs frequency characteristics of FIR filters and window functions. FFT spectrum analyzers are also implemented as a time-sequence of periodograms.

Bandlimiting refers to a process which reduces the energy of a signal to an acceptably low level outside of a desired frequency range.

In numerical analysis and functional analysis, a discrete wavelet transform (DWT) is any wavelet transform for which the wavelets are discretely sampled. As with other wavelet transforms, a key advantage it has over Fourier transforms is temporal resolution: it captures both frequency and location information.

In mathematics, the discrete-time Fourier transform (DTFT) is a form of Fourier analysis that is applicable to a sequence of discrete values.

In digital signal processing, upsampling, expansion, and interpolation are terms associated with the process of resampling in a multi-rate digital signal processing system. Upsampling can be synonymous with expansion, or it can describe an entire process of expansion and filtering (interpolation). When upsampling is performed on a sequence of samples of a signal or other continuous function, it produces an approximation of the sequence that would have been obtained by sampling the signal at a higher rate. For example, if compact disc audio at 44,100 samples/second is upsampled by a factor of 5/4, the resulting sample-rate is 55,125.

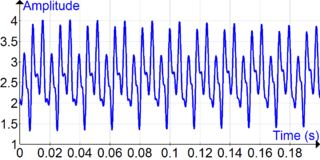

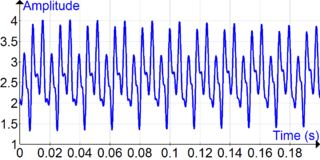

In signal processing, oversampling is the process of sampling a signal at a sampling frequency significantly higher than the Nyquist rate. Theoretically, a bandwidth-limited signal can be perfectly reconstructed if sampled at the Nyquist rate or above it. The Nyquist rate is defined as twice the bandwidth of the signal. Oversampling is capable of improving resolution and signal-to-noise ratio, and can be helpful in avoiding aliasing and phase distortion by relaxing anti-aliasing filter performance requirements.

In system analysis, among other fields of study, a linear time-invariant (LTI) system is a system that produces an output signal from any input signal subject to the constraints of linearity and time-invariance; these terms are briefly defined in the overview below. These properties apply (exactly or approximately) to many important physical systems, in which case the response y(t) of the system to an arbitrary input x(t) can be found directly using convolution: y(t) = (x ∗ h)(t) where h(t) is called the system's impulse response and ∗ represents convolution (not to be confused with multiplication). What's more, there are systematic methods for solving any such system (determining h(t)), whereas systems not meeting both properties are generally more difficult (or impossible) to solve analytically. A good example of an LTI system is any electrical circuit consisting of resistors, capacitors, inductors and linear amplifiers.

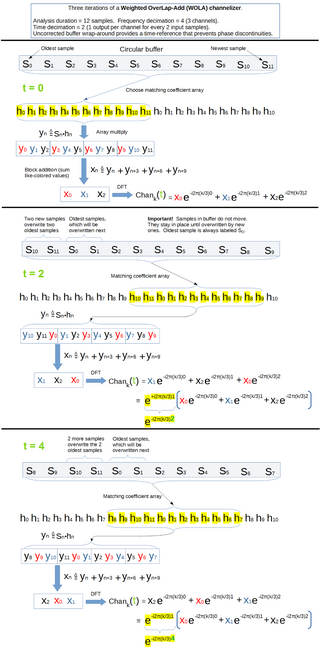

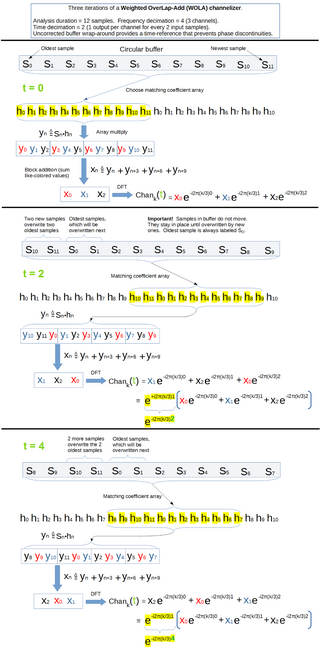

In signal processing, a filter bank is an array of bandpass filters that separates the input signal into multiple components, each one carrying a sub-band of the original signal. One application of a filter bank is a graphic equalizer, which can attenuate the components differently and recombine them into a modified version of the original signal. The process of decomposition performed by the filter bank is called analysis ; the output of analysis is referred to as a subband signal with as many subbands as there are filters in the filter bank. The reconstruction process is called synthesis, meaning reconstitution of a complete signal resulting from the filtering process.

Circular convolution, also known as cyclic convolution, is a special case of periodic convolution, which is the convolution of two periodic functions that have the same period. Periodic convolution arises, for example, in the context of the discrete-time Fourier transform (DTFT). In particular, the DTFT of the product of two discrete sequences is the periodic convolution of the DTFTs of the individual sequences. And each DTFT is a periodic summation of a continuous Fourier transform function. Although DTFTs are usually continuous functions of frequency, the concepts of periodic and circular convolution are also directly applicable to discrete sequences of data. In that context, circular convolution plays an important role in maximizing the efficiency of a certain kind of common filtering operation.

The zero-order hold (ZOH) is a mathematical model of the practical signal reconstruction done by a conventional digital-to-analog converter (DAC). That is, it describes the effect of converting a discrete-time signal to a continuous-time signal by holding each sample value for one sample interval. It has several applications in electrical communication.

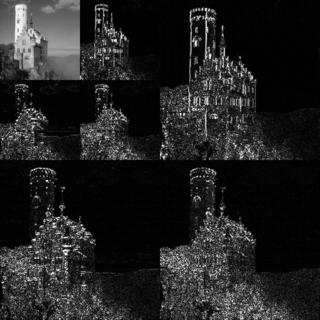

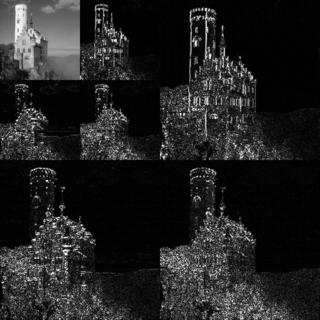

In mathematical analysis and applications, multidimensional transforms are used to analyze the frequency content of signals in a domain of two or more dimensions.

This article provides a short survey of the concepts, principles and applications of Multirate filter banks and Multidimensional Directional filter banks.