Related Research Articles

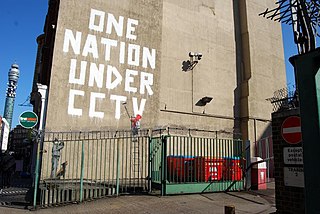

Privacy is the ability of an individual or group to seclude themselves or information about themselves, and thereby express themselves selectively.

Anonymity describes situations where the acting person's identity is unknown. Some writers have argued that namelessness, though technically correct, does not capture what is more centrally at stake in contexts of anonymity. The important idea here is that a person be non-identifiable, unreachable, or untrackable. Anonymity is seen as a technique for, or a way of realizing, certain other values, such as privacy or liberty. Over the past few years, anonymity tools used on the dark web by criminals and malicious users have drastically altered the ability of law enforcement to use conventional surveillance techniques.

Information privacy is the relationship between the collection and dissemination of data, technology, the public expectation of privacy, contextual information norms, and the legal and political issues surrounding them. It is also known as data privacy or data protection.

Java Anon Proxy (JAP), also known as JonDonym, was a proxy system designed to allow browsing the Web with revocable pseudonymity. It was originally developed as part of a project of the Technische Universität Dresden, the Universität Regensburg, and the Privacy Commissioner of the state of Schleswig-Holstein. The client software is written in the Java programming language. The service has been closed since August 2021.

Test data is data which has been specifically identified for use in tests, typically of a computer program.

Pseudonymization is a data management and de-identification procedure by which personally identifiable information fields within a data record are replaced by one or more artificial identifiers, or pseudonyms. A single pseudonym for each replaced field or collection of replaced fields makes the data record less identifiable while remaining suitable for data analysis and data processing.
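
The replacement step can be illustrated with a minimal sketch. It assumes records are plain dictionaries and derives each pseudonym from a keyed hash (HMAC-SHA256), which is only one of several possible ways to generate artificial identifiers. The function name `pseudonymize`, the field names, and the key are hypothetical.

```python
import hashlib
import hmac

def pseudonymize(records, fields, secret_key):
    """Return copies of the records with the given fields replaced by pseudonyms.

    Assumption: a pseudonym is a truncated HMAC-SHA256 of the field value.
    The same input value always maps to the same pseudonym, so records that
    belong together remain linkable for analysis.
    """
    result = []
    for record in records:
        copy = dict(record)  # leave the original record untouched
        for field in fields:
            value = str(record[field]).encode("utf-8")
            digest = hmac.new(secret_key, value, hashlib.sha256).hexdigest()
            copy[field] = digest[:16]
        result.append(copy)
    return result

# Hypothetical example data.
patients = [
    {"name": "Alice", "zip": "02138", "diagnosis": "flu"},
    {"name": "Bob", "zip": "02139", "diagnosis": "cold"},
    {"name": "Alice", "zip": "02138", "diagnosis": "asthma"},
]
pseudonymized = pseudonymize(patients, ["name"], b"hypothetical-secret-key")
```

Because the hash is keyed, someone without the key cannot recompute pseudonyms from guessed names, while the key holder can still link records.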

An inference attack is a data mining technique performed by analyzing data in order to illegitimately gain knowledge about a subject or database. A subject's sensitive information can be considered leaked if an adversary can infer its real value with high confidence. This is an example of breached information security. An inference attack occurs when a user is able to infer from trivial information more robust information about a database without directly accessing it. The object of inference attacks is to piece together information at one security level to determine a fact that should be protected at a higher security level.
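
One simple instance of this idea is a differencing attack, sketched below under the assumption that the database answers only aggregate queries. The class `AggregateOnlyDB`, its `sum_where` method, and the sample rows are hypothetical names and data chosen for illustration.

```python
class AggregateOnlyDB:
    """Hypothetical database that exposes sums but never individual rows."""

    def __init__(self, rows):
        self._rows = rows

    def sum_where(self, field, predicate):
        return sum(row[field] for row in self._rows if predicate(row))


def differencing_attack(db, field, is_target):
    """Infer one person's value from two individually harmless aggregates."""
    everyone = db.sum_where(field, lambda row: True)
    everyone_but_target = db.sum_where(field, lambda row: not is_target(row))
    return everyone - everyone_but_target


# Hypothetical example data.
db = AggregateOnlyDB([
    {"name": "Alice", "salary": 5200},
    {"name": "Bob", "salary": 4100},
    {"name": "Carol", "salary": 6300},
])
leaked = differencing_attack(db, "salary", lambda row: row["name"] == "Alice")
```

Neither query returns an individual record, yet their difference is exactly the target's protected value.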

Privacy-enhancing technologies (PETs) are technologies that embody fundamental data protection principles by minimizing personal data use, maximizing data security, and empowering individuals. PETs allow online users to protect the privacy of their personally identifiable information (PII) provided to and handled by services or applications. PETs use techniques to minimize possession of personal data without losing the functionality of an information system. Generally speaking, PETs can be categorized as hard and soft privacy technologies.

Differential privacy (DP) is a system for publicly sharing information about a dataset by describing the patterns of groups within the dataset while withholding information about individuals in the dataset. The idea behind differential privacy is that if the effect of making an arbitrary single substitution in the database is small enough, the query result cannot be used to infer much about any single individual, and therefore provides privacy.
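
As a rough illustration, the sketch below applies the Laplace mechanism, one common way to obtain differential privacy, to a counting query. Substituting a single record changes a count by at most 1, so noise with scale 1/ε is added. The function names, the parameter `epsilon`, and the sample rows are assumptions made for this example, and the code is not hardened for production use (for instance, it ignores floating-point side channels).

```python
import random

def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(rows, predicate, epsilon, rng=None):
    """Return a noisy count of the rows matching the predicate.

    A counting query has sensitivity 1 (one substituted record changes the
    count by at most 1), so the Laplace scale is 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for row in rows if predicate(row))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical example data.
people = [{"age": age} for age in (25, 31, 47, 52, 68)]
noisy = private_count(people, lambda row: row["age"] > 40, epsilon=0.5)
```

Smaller values of `epsilon` add more noise and therefore give stronger privacy at the cost of accuracy.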

Synthetic data is "any production data applicable to a given situation that are not obtained by direct measurement" according to the McGraw-Hill Dictionary of Scientific and Technical Terms; where Craig S. Mullins, an expert in data management, defines production data as "information that is persistently stored and used by professionals to conduct business processes."

De-identification is the process used to prevent someone's personal identity from being revealed. For example, data produced during human subject research might be de-identified to preserve the privacy of research participants. Biological data may be de-identified in order to comply with HIPAA regulations that define and stipulate patient privacy laws.

Data anonymization is a type of information sanitization whose intent is privacy protection. It is the process of removing personally identifiable information from data sets, so that the people whom the data describe remain anonymous.

The Datafly algorithm is an algorithm for providing anonymity in medical data. The algorithm was developed by Latanya Arvette Sweeney in 1997–98. Anonymization is achieved by automatically generalizing, substituting, inserting, and removing information as appropriate without losing many of the details found within the data. The method can be used on-the-fly in role-based security within an institution, and in batch mode for exporting data from an institution. Organizations release and receive medical data with all explicit identifiers, such as name, removed, in the erroneous belief that patient confidentiality is maintained because the resulting data look anonymous. However, the remaining data can be used to re-identify individuals by linking or matching the data to other databases or by looking at unique characteristics found in the fields and records of the database itself.

k-anonymity is a property possessed by certain anonymized data. The concept of k-anonymity was first introduced by Latanya Sweeney and Pierangela Samarati in a paper published in 1998 as an attempt to solve the problem: "Given person-specific field-structured data, produce a release of the data with scientific guarantees that the individuals who are the subjects of the data cannot be re-identified while the data remain practically useful." A release of data is said to have the k-anonymity property if the information for each person contained in the release cannot be distinguished from that of at least k − 1 other individuals whose information also appears in the release.
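
The property can be checked mechanically. The sketch below assumes records are dictionaries and that the caller names the quasi-identifier columns; the function names and the sample table are hypothetical.

```python
from collections import Counter

def k_of(records, quasi_identifiers):
    """Return the largest k for which the release is k-anonymous.

    Records are grouped by their quasi-identifier values; the smallest
    group size is the k that the whole release satisfies.
    """
    groups = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    return min(groups.values()) if groups else 0

def is_k_anonymous(records, quasi_identifiers, k):
    return k_of(records, quasi_identifiers) >= k

# Hypothetical release with generalized ZIP codes and age ranges.
release = [
    {"zip": "021**", "age": "20-29", "disease": "flu"},
    {"zip": "021**", "age": "20-29", "disease": "cold"},
    {"zip": "021**", "age": "30-39", "disease": "cancer"},
    {"zip": "021**", "age": "30-39", "disease": "cancer"},
]
k = k_of(release, ["zip", "age"])
```

In this sample every combination of `zip` and `age` occurs twice, so the release is 2-anonymous but not 3-anonymous.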

l-diversity, also written as ℓ-diversity, is a form of group-based anonymization that is used to preserve privacy in data sets by reducing the granularity of a data representation. This reduction is a trade-off that results in some loss of effectiveness of data management or mining algorithms in order to gain some privacy. The l-diversity model is an extension of the k-anonymity model, which reduces the granularity of data representation using techniques including generalization and suppression such that any given record maps onto at least k − 1 other records in the data. The l-diversity model addresses a weakness of the k-anonymity model: protecting identities to the level of k individuals is not equivalent to protecting the corresponding sensitive values that were generalized or suppressed, especially when the sensitive values within a group exhibit homogeneity. The l-diversity model adds the promotion of intra-group diversity for sensitive values to the anonymization mechanism.
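
The homogeneity problem can be made concrete with a short sketch. It checks the simplest variant, often called distinct l-diversity, in which every group of records sharing the same quasi-identifier values must contain at least l different sensitive values. The function name `distinct_l` and the sample table are hypothetical.

```python
from collections import defaultdict

def distinct_l(records, quasi_identifiers, sensitive):
    """Return the largest l for which the release is distinct l-diverse."""
    groups = defaultdict(set)
    for record in records:
        key = tuple(record[q] for q in quasi_identifiers)
        groups[key].add(record[sensitive])
    return min(len(values) for values in groups.values()) if groups else 0

# Hypothetical release: every group has two records (2-anonymous),
# but the second group is homogeneous in its sensitive value.
release = [
    {"zip": "021**", "age": "20-29", "disease": "flu"},
    {"zip": "021**", "age": "20-29", "disease": "cold"},
    {"zip": "021**", "age": "30-39", "disease": "cancer"},
    {"zip": "021**", "age": "30-39", "disease": "cancer"},
]
l = distinct_l(release, ["zip", "age"], "disease")
```

Here `l` is 1: anyone known to be in the 30-39 group is revealed to have the same diagnosis, even though the group contains two indistinguishable records.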

Data re-identification or de-anonymization is the practice of matching anonymous data with publicly available information, or auxiliary data, in order to discover the individual to which the data belong. This is a concern because companies with privacy policies, health care providers, and financial institutions may release the data they collect after the data has gone through the de-identification process.
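
A linkage attack of this kind can be sketched as a join on shared attributes. The example assumes both data sets are lists of dictionaries and that the attacker knows which columns they have in common; the function name `link`, the column names, and all sample records are hypothetical.

```python
from collections import defaultdict

def link(deidentified, auxiliary, keys, identity_field):
    """Match de-identified records to identities via shared attributes.

    Only unambiguous matches are reported: a record is re-identified when
    exactly one person in the auxiliary data has the same key values.
    """
    index = defaultdict(list)
    for person in auxiliary:
        index[tuple(person[k] for k in keys)].append(person)

    matches = []
    for record in deidentified:
        candidates = index.get(tuple(record[k] for k in keys), [])
        if len(candidates) == 1:
            matches.append((candidates[0][identity_field], record))
    return matches

# Hypothetical de-identified medical records and a public register.
medical = [
    {"zip": "02138", "birth": "1945-07-31", "sex": "F", "diagnosis": "A"},
    {"zip": "02139", "birth": "1980-01-01", "sex": "M", "diagnosis": "B"},
]
register = [
    {"name": "Person One", "zip": "02138", "birth": "1945-07-31", "sex": "F"},
    {"name": "Person Two", "zip": "02139", "birth": "1980-01-01", "sex": "M"},
    {"name": "Person Three", "zip": "02139", "birth": "1980-01-01", "sex": "M"},
]
reidentified = link(medical, register, ["zip", "birth", "sex"], "name")
```

In the sample data only the first record is re-identified; the second matches two people in the register and so stays ambiguous.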

The Hopkins statistic is a way of measuring the cluster tendency of a data set. It belongs to the family of sparse sampling tests. It acts as a statistical hypothesis test where the null hypothesis is that the data are generated by a Poisson point process and are thus uniformly randomly distributed. A value close to 1 tends to indicate that the data are highly clustered; random data tend to result in values around 0.5; and uniformly distributed data tend to result in values close to 0.
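
A minimal sketch of one common formulation follows. It compares nearest-neighbour distances from `m` uniformly placed probe points (`u`) with those from `m` sampled data points (`w`), each raised to the power of the dimension `d`, and returns sum(u) / (sum(u) + sum(w)). Drawing the probes from the bounding box of the data, the brute-force neighbour search, and all names are assumptions of this example.

```python
import math
import random

def nearest_distance(point, data, skip_index=None):
    """Distance from point to its nearest neighbour in data."""
    best = math.inf
    for i, other in enumerate(data):
        if i == skip_index:
            continue  # do not compare a sampled data point with itself
        best = min(best, math.dist(point, other))
    return best

def hopkins(data, m, rng=None):
    """Estimate the Hopkins statistic from m probes and m sampled points."""
    if rng is None:
        rng = random.Random()
    d = len(data[0])
    lows = [min(p[j] for p in data) for j in range(d)]
    highs = [max(p[j] for p in data) for j in range(d)]

    # w: nearest-neighbour distances of randomly sampled real data points.
    sampled = rng.sample(range(len(data)), m)
    w = [nearest_distance(data[i], data, skip_index=i) ** d for i in sampled]

    # u: nearest-data-point distances of uniformly random probe points.
    u = []
    for _ in range(m):
        probe = [rng.uniform(lows[j], highs[j]) for j in range(d)]
        u.append(nearest_distance(probe, data) ** d)

    return sum(u) / (sum(u) + sum(w))

# Hypothetical data: two tight clusters in the plane.
rng = random.Random(1)
clustered = [(rng.gauss(0, 0.01), rng.gauss(0, 0.01)) for _ in range(50)]
clustered += [(rng.gauss(1, 0.01), rng.gauss(1, 0.01)) for _ in range(50)]
h = hopkins(clustered, m=10, rng=rng)
```

For the two-cluster sample the probes mostly land in empty space far from any data point, so the statistic comes out close to 1.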

Since the advent of differential privacy, a number of systems supporting differentially private data analyses have been implemented and deployed.

Spatial cloaking is a privacy mechanism that is used to satisfy specific privacy requirements by blurring users’ exact locations into cloaked regions. This technique is usually integrated into applications in various environments to minimize the disclosure of private information when users request location-based services. Since the database server does not receive the accurate location information, a set including the satisfying solution is sent back to the user. General privacy requirements include k-anonymity, maximum area, and minimum area.
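
One way to picture the mechanism is a grid-based sketch: the user's position is replaced by the grid cell that contains it, and the cell size doubles until the cell holds at least k users. This is only one of several cloaking strategies. The function name `cloak`, the parameters `min_side` and `max_side` (standing in for the minimum and maximum area requirements), and the coordinates are hypothetical.

```python
import math

def cloak(user, others, k, min_side, max_side):
    """Return a square cell (x_min, y_min, x_max, y_max) hiding the user.

    The cell is aligned to a grid rather than centred on the user, so its
    position does not reveal the exact location. Returns None when no cell
    up to max_side contains at least k users.
    """
    side = min_side
    while side <= max_side:
        x0 = math.floor(user[0] / side) * side
        y0 = math.floor(user[1] / side) * side
        inside = 1 + sum(  # 1 counts the requesting user
            1 for (x, y) in others
            if x0 <= x < x0 + side and y0 <= y < y0 + side
        )
        if inside >= k:
            return (x0, y0, x0 + side, y0 + side)
        side *= 2  # coarsen the grid and try again
    return None

# Hypothetical positions.
me = (3.2, 4.7)
nearby = [(3.9, 4.1), (5.5, 5.5), (0.5, 0.5)]
region = cloak(me, nearby, k=3, min_side=1, max_side=8)
```

The service then answers the query for the whole region, and the client filters the returned candidate set down to the result for its true position.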

Soft privacy technology falls under the category of privacy-enhancing technology (PET) as a method of protecting data. PET has another sub-category, called hard privacy. Soft privacy technology aims to keep information safe and to process data while retaining full control of how the data are being used. Soft privacy technology emphasizes the use of third-party programs to protect privacy, relying on audit, certification, consent, access control, encryption, and differential privacy. With the advent of new technology, there is a need to process billions of data points every day in many areas such as health care, autonomous cars, smart cards, social media data, and more.

References

- Li, Ninghui; Li, Tiancheng; Venkatasubramanian, Suresh (2007). "t-Closeness: Privacy Beyond k-Anonymity and l-Diversity" (PDF). pp. 106–115. doi:10.1109/ICDE.2007.367856. ISBN 978-1-4244-0802-3. S2CID 2949246.

- Aggarwal, Charu C.; Yu, Philip S., eds. (2008). "A General Survey of Privacy". Privacy-Preserving Data Mining – Models and Algorithms (PDF). Springer. ISBN 978-0-387-70991-8.