Related Research Articles

The Capability Maturity Model (CMM) is a development model created in 1986 after a study of data collected from organizations that contracted with the U.S. Department of Defense, which funded the research. The term "maturity" relates to the degree of formality and optimization of processes, from ad hoc practices, to formally defined steps, to managed result metrics, to active optimization of the processes.

Information technology (IT) governance is a subset discipline of corporate governance, focused on information technology (IT) and its performance and risk management. The interest in IT governance is due to the ongoing need within organizations to focus value creation efforts on an organization's strategic objectives and to better manage the performance of those responsible for creating this value in the best interest of all stakeholders. It has evolved from The Principles of Scientific Management, Total Quality Management and ISO 9001 Quality Management System.

ISO/IEC 15504 Information technology – Process assessment, also termed Software Process Improvement and Capability dEtermination (SPICE), is a set of technical standards documents for the computer software development process and related business management functions. It is one of the joint International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC) standards, which was developed by the ISO and IEC joint subcommittee, ISO/IEC JTC 1/SC 7.

Capability Maturity Model Integration (CMMI) is a process-level improvement training and appraisal program. Administered by the CMMI Institute, a subsidiary of ISACA, it was developed at Carnegie Mellon University (CMU). It is required by many U.S. Government contracts, especially in software development. CMU claims CMMI can be used to guide process improvement across a project, division, or an entire organization.

COBIT is a framework created by ISACA for information technology (IT) management and IT governance.

Microsoft Solutions Framework (MSF) is a set of principles, models, disciplines, concepts, and guidelines for delivering information technology services from Microsoft. MSF is not limited to developing applications only; it is also applicable to other IT projects like deployment, networking or infrastructure projects. MSF does not force the developer to use a specific methodology.

Information security standards are techniques generally outlined in published materials that attempt to protect the cyber environment of a user or organization. This environment includes users themselves, networks, devices, all software, processes, information in storage or transit, applications, services, and systems that can be connected directly or indirectly to networks.

Model-driven engineering (MDE) is a software development methodology that focuses on creating and exploiting domain models, which are conceptual models of all the topics related to a specific problem. Hence, it highlights and aims at abstract representations of the knowledge and activities that govern a particular application domain, rather than the computing concepts.

An independent test organization is an organization, person, or company that tests products, materials, software, etc. according to agreed requirements. The test organization can be affiliated with the government or universities or can be an independent testing laboratory. They are independent because they are affiliated with neither the producer nor the user of the item being tested: no commercial bias is present. These "contract testing" facilities are sometimes called "third party" testing or evaluation facilities.

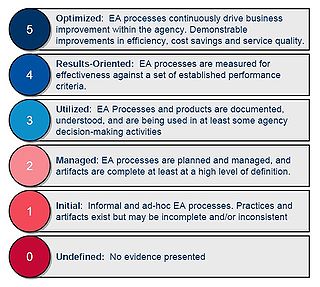

A maturity model is a framework for measuring an organization's maturity, or that of a business function within an organization, with maturity being defined as a measurement of the ability of an organization for continuous improvement in a particular discipline. The higher the maturity, the higher the chances that incidents or errors will lead to improvements either in the quality or in the use of the resources of the discipline as implemented by the organization.

The Enterprise Architecture Assessment Framework (EAAF) was created by the US Federal government Office of Management and Budget (OMB) to allow federal agencies to assess and report their enterprise architecture activity and maturity, and advance the use of enterprise architecture in the federal government.

The Lexile Framework for Reading is an educational tool that uses a measure called a Lexile to match readers with books, articles and other leveled reading resources. Readers and books are assigned a score on the Lexile scale, in which lower scores reflect easier readability for books and lower reading ability for readers. The Lexile framework uses quantitative methods, based on individual words and sentence lengths, rather than qualitative analysis of content, to produce scores. Accordingly, the scores for texts do not reflect factors such as multiple levels of meaning or maturity of themes. Hence, the United States Common Core State Standards recommend the use of alternative, qualitative methods for selecting books for students in grade 6 and above. In the US, Lexile measures are reported from reading programs and assessments annually. About half of U.S. students in grades 3 through 12 receive a Lexile measure each year. In addition to being used in schools in all 50 states, Lexile measures are also used outside of the United States.

In software engineering, a software development process or software development life cycle (SDLC) is a process of planning and managing software development. It typically involves dividing software development work into smaller, parallel, or sequential steps or sub-processes to improve design and/or product management. The methodology may include the pre-definition of specific deliverables and artifacts that are created and completed by a project team to develop or maintain an application.

Management accounting principles (MAP) were developed to serve the core needs of internal management to improve decision support objectives, internal business processes, resource application, customer value, and capacity utilization needed to achieve corporate goals in an optimal manner. Another term often used for management accounting principles for these purposes is managerial costing principles. The two management accounting principles are:

- Principle of Causality, and

- Principle of Analogy.

Bill Curtis is a software engineer best known for leading the development of the Capability Maturity Model and the People CMM in the Software Engineering Institute at Carnegie Mellon University, and for championing the spread of software process improvement and software measurement globally. In 2007 he was elected a Fellow of the Institute of Electrical and Electronics Engineers (IEEE) for his contributions to software process improvement and measurement. He was named to the 2022 class of ACM Fellows, "for contributions to software process, software measurement, and human factors in software engineering".

Tudor IT Process Assessment (TIPA) is a methodological framework for process assessment. Its first version was published in 2003 by the Public Research Centre Henri Tudor based in Luxembourg. TIPA is now a registered trademark of the Luxembourg Institute of Science and Technology (LIST). TIPA offers a structured approach to determine process capability compared to recognized best practices. TIPA also supports process improvement by providing a gap analysis and proposing improvement recommendations.

Model for assessment of telemedicine (MAST) is a framework for assessment of the value of telemedicine.

The External Dependencies Management Assessment is a voluntary, in-person, facilitated assessment created by the United States Department of Homeland Security. The EDM Assessment is intended for the owners and operators of critical infrastructure organizations in the United States. It measures and reports on the ability of the subject organization to manage external dependencies as they relate to the supply and operation of information and communications technology (ICT). This area of risk management is also sometimes called Third Party Risk Management or Supply Chain Risk Management.

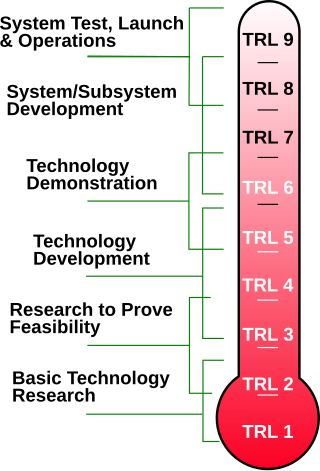

Technology readiness levels (TRLs) are a method for estimating the maturity of technologies during the acquisition phase of a program. TRLs enable consistent and uniform discussions of technical maturity across different types of technology. TRL is determined during a technology readiness assessment (TRA) that examines program concepts, technology requirements, and demonstrated technology capabilities. TRLs are based on a scale from 1 to 9 with 9 being the most mature technology.

The Cybersecurity Maturity Model Certification (CMMC) is an assessment framework and assessor certification program designed to increase the trust in measures of compliance with a variety of standards published by the National Institute of Standards and Technology.

References

- The article describing this concept was first published in Crosstalk, August and September 1996: Ilene Burnstein, Taratip Suwannasart, and C.R. Carlson, "Developing a Testing Maturity Model: Parts I and II", Illinois Institute of Technology (no longer available in the Crosstalk online archives).

- I. Burnstein, A. Homyen, R. Grom, and C.R. Carlson, "A Model to Assess Testing Process Maturity", Crosstalk, 1998, Software Technology Support Center, Hill Air Force Base, Utah.

- TMMi reference, Sources of TMMi (archived 2009-08-02 at archive.today).

- TMMi Foundation.