Related Research Articles

Entropy is a scientific concept, as well as a measurable physical property, that is most commonly associated with a state of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. It has found far-ranging applications in chemistry and physics, in biological systems and their relation to life, in cosmology, economics, sociology, weather science, climate change, and information systems including the transmission of information in telecommunication.

Thermodynamics is a branch of physics that deals with heat, work, and temperature, and their relation to energy, entropy, and the physical properties of matter and radiation. The behavior of these quantities is governed by the four laws of thermodynamics, which give a quantitative description in terms of measurable macroscopic physical quantities and which can be explained in terms of microscopic constituents by statistical mechanics. Thermodynamics applies to a wide variety of topics in science and engineering, especially physical chemistry, biochemistry, chemical engineering and mechanical engineering, but also to other complex fields such as meteorology.

Thermochemistry is the study of the heat energy which is associated with chemical reactions and/or phase changes such as melting and boiling. A reaction may release or absorb energy, and a phase change may do the same. Thermochemistry focuses on the energy exchange between a system and its surroundings in the form of heat. Thermochemistry is useful in predicting reactant and product quantities throughout the course of a given reaction. In combination with entropy determinations, it is also used to predict whether a reaction is spontaneous or non-spontaneous, favorable or unfavorable.
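The spontaneity prediction mentioned above is commonly expressed through the Gibbs free energy change, ΔG = ΔH − TΔS, where a negative ΔG indicates a spontaneous process at constant temperature and pressure. The following is a minimal sketch of that criterion; the function names are hypothetical, and the figures for melting ice are approximate textbook values used only for illustration.

```python
def gibbs_free_energy_change(delta_h, delta_s, temperature):
    """Return dG = dH - T*dS.

    delta_h: enthalpy change in J/mol
    delta_s: entropy change in J/(mol*K)
    temperature: absolute temperature in K
    """
    if temperature <= 0:
        raise ValueError("temperature must be a positive value in kelvin")
    return delta_h - temperature * delta_s


def is_spontaneous(delta_h, delta_s, temperature):
    """A process is spontaneous at constant T and P when dG < 0."""
    return gibbs_free_energy_change(delta_h, delta_s, temperature) < 0


# Approximate values for the melting of ice (illustrative assumption).
DELTA_H_FUSION = 6010.0  # J/mol
DELTA_S_FUSION = 22.0    # J/(mol*K)

if __name__ == "__main__":
    for t in (263.15, 298.15):  # -10 degrees C and +25 degrees C
        print(t, is_spontaneous(DELTA_H_FUSION, DELTA_S_FUSION, t))
```

With these inputs the sign of ΔG changes near 273 K, which matches the everyday observation that ice melts spontaneously above its melting point and not below it.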

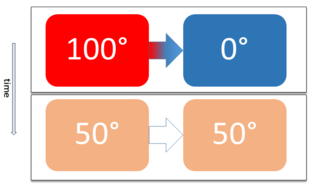

The second law of thermodynamics is a physical law based on universal experience concerning heat and energy interconversions. One simple statement of the law is that heat always moves from hotter objects to colder objects, unless energy in some form is supplied to reverse the direction of heat flow. Another definition is: "Not all heat energy can be converted into work in a cyclic process."
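The direction of heat flow can be checked with a simple entropy balance. As a sketch, assume two ideal reservoirs at fixed temperatures that exchange a quantity of heat Q: the cold reservoir gains entropy Q/T_cold and the hot reservoir loses Q/T_hot. The function name below is hypothetical.

```python
def entropy_change_heat_flow(q, t_hot, t_cold):
    """Total entropy change (J/K) when heat q (J) leaves a reservoir at
    t_hot (K) and enters a reservoir at t_cold (K).

    A negative q describes heat moving from the cold to the hot reservoir.
    """
    if t_hot <= 0 or t_cold <= 0:
        raise ValueError("temperatures must be positive values in kelvin")
    return q / t_cold - q / t_hot


if __name__ == "__main__":
    # 1000 J flowing from 400 K to 300 K: total entropy increases.
    print(entropy_change_heat_flow(1000.0, 400.0, 300.0))
    # The reverse flow would lower total entropy, so it cannot occur unaided.
    print(entropy_change_heat_flow(-1000.0, 400.0, 300.0))
```

The total is positive whenever heat moves from the hotter to the colder reservoir and negative for the reverse direction, which is why the reverse flow requires an external energy input.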

The zeroth law of thermodynamics is one of the four principal laws of thermodynamics. It provides an independent definition of temperature without reference to entropy, which is defined in the second law. The law was established by Ralph H. Fowler in the 1930s, long after the first, second, and third laws were widely recognized.

Thermodynamic equilibrium is an axiomatic concept of thermodynamics. It is an internal state of a single thermodynamic system, or a relation between several thermodynamic systems connected by more or less permeable or impermeable walls. In thermodynamic equilibrium, there are no net macroscopic flows of matter nor of energy within a system or between systems. In a system that is in its own state of internal thermodynamic equilibrium, no macroscopic change occurs.

The heat death of the universe is a hypothesis on the ultimate fate of the universe, which suggests the universe will evolve to a state of no thermodynamic free energy, and will therefore be unable to sustain processes that increase entropy. Heat death does not imply any particular absolute temperature; it only requires that temperature differences or other processes may no longer be exploited to perform work. In the language of physics, this is when the universe reaches thermodynamic equilibrium. The heat death hypothesis is regarded as a leading scenario for the fate of the universe, in part because it depends on comparatively few unpredictable factors.

In thermodynamics, an isothermal process is a type of thermodynamic process in which the temperature T of a system remains constant: ΔT = 0. This typically occurs when a system is in contact with an outside thermal reservoir, and a change in the system occurs slowly enough to allow the system to be continuously adjusted to the temperature of the reservoir through heat exchange (see quasi-equilibrium). In contrast, an adiabatic process is one in which a system exchanges no heat with its surroundings (Q = 0).
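As an illustration, consider the special case of an ideal gas expanding reversibly at constant temperature (an assumption not stated in the text above). Its internal energy is unchanged, so the heat absorbed from the reservoir equals the work done by the gas, W = nRT ln(V2/V1). A minimal sketch with a hypothetical function name:

```python
import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def isothermal_work(n_moles, temperature, v_initial, v_final):
    """Work done BY an ideal gas in a reversible isothermal volume change.

    Returns joules; positive for expansion, negative for compression.
    Because dT = 0 and the gas is ideal, the heat absorbed equals this work.
    """
    if min(n_moles, temperature, v_initial, v_final) <= 0:
        raise ValueError("all arguments must be positive")
    return n_moles * R * temperature * math.log(v_final / v_initial)


if __name__ == "__main__":
    # One mole doubling its volume at 300 K: roughly 1.7 kJ of work.
    print(isothermal_work(1.0, 300.0, 1.0, 2.0))
```

Only the ratio of the volumes matters, so any consistent volume unit can be used.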

A thermodynamic system is a body of matter and/or radiation, considered as separate from its surroundings, and studied using the laws of thermodynamics. Thermodynamic systems may be isolated, closed, or open. An isolated system exchanges no matter or energy with its surroundings, whereas a closed system does not exchange matter but may exchange heat, and may experience and exert forces. An open system can interact with its surroundings by exchanging both matter and energy. The physical condition of a thermodynamic system at a given time is described by its state, which can be specified by the values of a set of thermodynamic state variables. A thermodynamic system is in thermodynamic equilibrium when there are no macroscopically apparent flows of matter or energy within it or between it and other systems.

Two physical systems are in thermal equilibrium if there is no net flow of thermal energy between them when they are connected by a path permeable to heat. Thermal equilibrium obeys the zeroth law of thermodynamics. A system is said to be in thermal equilibrium with itself if the temperature within the system is spatially uniform and temporally constant.

The laws of thermodynamics are a set of scientific laws which define a group of physical quantities, such as temperature, energy, and entropy, that characterize thermodynamic systems in thermodynamic equilibrium. The laws also use various parameters for thermodynamic processes, such as thermodynamic work and heat, and establish relationships between them. They state empirical facts that form a basis for precluding certain phenomena, such as perpetual motion. In addition to their use in thermodynamics, they are important fundamental laws of physics in general, and are applicable in other natural sciences.

In thermodynamics, the exergy of a system is the maximum useful work possible during a process that brings the system into equilibrium with a heat reservoir, reaching maximum entropy. When the surroundings are the reservoir, exergy is the potential of a system to cause a change as it achieves equilibrium with its environment. Exergy is the energy that is available to be used. After the system and surroundings reach equilibrium, the exergy is zero. Determining exergy was also the first goal of thermodynamics. The term "exergy" was coined in 1956 by Zoran Rant (1904–1972) from the Greek ex and ergon, meaning "from work", but the concept had been developed earlier by J. Willard Gibbs in 1873.
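A simple special case is the exergy of a quantity of heat Q available at a fixed source temperature T when the environment is at T0. The maximum useful work is then limited by the Carnot factor, W_max = Q(1 − T0/T). The sketch below assumes constant temperatures and uses a hypothetical function name.

```python
def exergy_of_heat(q, t_source, t_environment):
    """Maximum useful work (J) obtainable from heat q (J) supplied at
    t_source (K) when the surroundings are at t_environment (K).

    Uses the Carnot factor 1 - T0/T; the result is zero when the source
    is already at the temperature of the environment.
    """
    if t_source <= 0 or t_environment <= 0:
        raise ValueError("temperatures must be positive values in kelvin")
    return q * (1.0 - t_environment / t_source)


if __name__ == "__main__":
    print(exergy_of_heat(1000.0, 600.0, 300.0))  # half of the heat is available as work
    print(exergy_of_heat(1000.0, 300.0, 300.0))  # no work once at equilibrium
```

The second call illustrates the statement above that exergy vanishes once the system and its surroundings have reached equilibrium.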

Thermodynamics is expressed by a mathematical framework of thermodynamic equations which relate various thermodynamic quantities and physical properties measured in a laboratory or production process. Thermodynamics is based on a fundamental set of postulates that became the laws of thermodynamics.

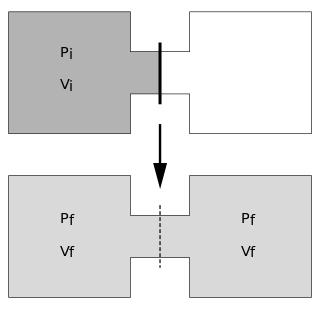

The Joule expansion is an irreversible process in thermodynamics in which a volume of gas is kept in one side of a thermally isolated container, with the other side of the container being evacuated. The partition between the two parts of the container is then opened, and the gas fills the whole container.
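For an ideal gas (an assumption added here for illustration), the temperature is unchanged by a Joule expansion, and the entropy of the gas increases by ΔS = nR ln(V_final/V_initial) even though no heat enters the container. A minimal sketch with a hypothetical function name:

```python
import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def joule_expansion_entropy(n_moles, v_initial, v_final):
    """Entropy change (J/K) of an ideal gas freely expanding from
    v_initial to v_final inside a thermally isolated container."""
    if n_moles <= 0 or v_initial <= 0:
        raise ValueError("amount of gas and initial volume must be positive")
    if v_final < v_initial:
        raise ValueError("a free expansion cannot reduce the volume")
    return n_moles * R * math.log(v_final / v_initial)


if __name__ == "__main__":
    # One mole expanding into an evacuated half of equal size.
    print(joule_expansion_entropy(1.0, 1.0, 2.0))  # R * ln 2, about 5.76 J/K
```

The strictly positive result for any real expansion reflects the irreversibility of the process.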

In physical science, an isolated system is either of the following:

- a physical system so far removed from other systems that it does not interact with them.

- a thermodynamic system enclosed by rigid immovable walls through which neither mass nor energy can pass.

Biological thermodynamics is the quantitative study of the energy transductions that occur in or between living organisms, structures, and cells and of the nature and function of the chemical processes underlying these transductions. Biological thermodynamics may address the question of whether the benefit associated with any particular phenotypic trait is worth the energy investment it requires.

Classical thermodynamics considers three main kinds of thermodynamic process: (1) changes in a system, (2) cycles in a system, and (3) flow processes.

In classical thermodynamics, entropy is a property of a thermodynamic system that expresses the direction or outcome of spontaneous changes in the system. The term was introduced by Rudolf Clausius in the mid-19th century to explain the relationship of the internal energy that is available or unavailable for transformations in the form of heat and work. Entropy predicts that certain processes are irreversible or impossible, despite not violating the conservation of energy. The definition of entropy is central to the establishment of the second law of thermodynamics, which states that the entropy of isolated systems cannot decrease with time, as they always tend to arrive at a state of thermodynamic equilibrium, where the entropy is highest. Entropy is therefore also considered to be a measure of disorder in the system.

The concept of entropy was first developed by German physicist Rudolf Clausius in the mid-nineteenth century as a thermodynamic property that predicts that certain spontaneous processes are irreversible or impossible. In statistical mechanics, entropy is formulated as a statistical property using probability theory. The statistical entropy perspective was introduced in 1870 by Austrian physicist Ludwig Boltzmann, who established a new field of physics that provided the descriptive linkage between the macroscopic observation of nature and the microscopic view based on the rigorous treatment of large ensembles of microstates that constitute thermodynamic systems.
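The statistical formulation is usually written as S = k_B ln W for W equally probable microstates, or more generally as the Gibbs form S = −k_B Σ p_i ln p_i over microstate probabilities p_i. The following sketch, with hypothetical function names, computes both and can be used to confirm that they agree for a uniform distribution.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def boltzmann_entropy(n_microstates):
    """S = k_B * ln(W) for W equally probable microstates."""
    if n_microstates < 1:
        raise ValueError("there must be at least one microstate")
    return K_B * math.log(n_microstates)


def gibbs_entropy(probabilities):
    """S = -k_B * sum(p * ln p); zero-probability states contribute nothing."""
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    return -K_B * sum(p * math.log(p) for p in probabilities if p > 0)


if __name__ == "__main__":
    print(boltzmann_entropy(4))
    print(gibbs_entropy([0.25, 0.25, 0.25, 0.25]))  # same value as above
    print(gibbs_entropy([0.7, 0.1, 0.1, 0.1]))      # lower: less uncertainty
```

A system known to be in a single microstate has zero entropy in both forms, and any non-uniform distribution over the same states gives a lower value than the uniform one.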

In thermodynamics, heat is defined as the form of energy crossing the boundary of a thermodynamic system by virtue of a temperature difference across the boundary. A thermodynamic system does not contain heat. Nevertheless, the term is also often used to refer to the thermal energy contained in a system as a component of its internal energy and that is reflected in the temperature of the system. For both uses of the term, heat is a form of energy.

References

- Lemons, Don S. (2008). Mere Thermodynamics. JHU Press. p. 68. ISBN 9780801890154. Retrieved 2012-12-11.