A robot is a machine—especially one programmable by a computer—capable of carrying out a complex series of actions automatically. A robot can be guided by an external control device, or the control may be embedded within. Robots may be constructed to evoke human form, but most robots are task-performing machines, designed with an emphasis on stark functionality, rather than expressive aesthetics.

Usability engineering is a professional discipline that focuses on improving the usability of interactive systems. It draws on theories from computer science and psychology to define problems that occur during the use of such a system. Usability engineering involves testing designs at various stages of the development process, with users or with usability experts. The history of usability engineering in this context dates back to the 1980s. In 1988, John Whiteside of Digital Equipment Corporation and John Bennett of IBM published material on the subject, isolating the early setting of goals, iterative evaluation, and prototyping as key activities. The usability expert Jakob Nielsen is a leader in the field. In his 1993 book Usability Engineering, Nielsen describes methods to use throughout the product development process so that designers can take into account the most important barriers to learnability, efficiency, memorability, error-free use, and subjective satisfaction before implementing the product. Nielsen’s work describes how to perform usability tests and how to use usability heuristics in the usability engineering lifecycle. Ensuring good usability through this process helps prevent problems in product adoption after release. Rather than finding solutions for usability problems, which is the focus of a UX or interaction designer, a usability engineer mainly concentrates on the research phase. In this sense it is not strictly a design role, and many usability engineers have a background in computer science. Even so, its connection to design is crucial, because it provides the framework within which designers can work to ensure that their products suit their target users.

BEAM robotics is a style of robotics that primarily uses simple analogue circuits, such as comparators, instead of a microprocessor in order to produce an unusually simple design. While not as flexible as microprocessor-based robotics, BEAM robotics can be robust and efficient in performing the task for which it was designed.
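As a rough illustration of how a single comparator can stand in for a program, the sketch below simulates a light-seeking behavior in Python. The wiring, the function names (`comparator`, `photovore_step`), and the idealized threshold-free comparator are assumptions made for this example; they do not describe any particular BEAM circuit.

```python
from typing import Tuple


def comparator(v_plus: float, v_minus: float) -> bool:
    """Idealized analogue comparator: output is high when V+ exceeds V-."""
    return v_plus > v_minus


def photovore_step(left_light: float, right_light: float) -> Tuple[bool, bool]:
    """Return (left_motor_on, right_motor_on) for one instant.

    Hypothetical wiring: the comparator output drives the left motor and its
    inverse drives the right motor, so the robot turns toward the brighter side.
    """
    right_brighter = comparator(right_light, left_light)
    left_motor_on = right_brighter        # left wheel pushes the robot to the right
    right_motor_on = not right_brighter   # right wheel pushes the robot to the left
    return left_motor_on, right_motor_on


if __name__ == "__main__":
    print(photovore_step(0.2, 0.8))  # light on the right
    print(photovore_step(0.8, 0.2))  # light on the left
```

The whole "controller" is one comparison with no stored state, which is the sense in which such designs trade flexibility for simplicity.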

Military robots are autonomous robots or remote-controlled mobile robots designed for military applications, from transport to search and rescue and attack.

The DARPA Grand Challenge is a prize competition for American autonomous vehicles, funded by the Defense Advanced Research Projects Agency, the most prominent research organization of the United States Department of Defense. Congress has authorized DARPA to award cash prizes to further DARPA's mission to sponsor revolutionary, high-payoff research that bridges the gap between fundamental discoveries and military use. The initial DARPA Grand Challenge in 2004 was created to spur the development of technologies needed to create the first fully autonomous ground vehicles capable of completing a substantial off-road course within a limited time. The third event, the DARPA Urban Challenge in 2007, extended the initial Challenge to autonomous operation in a mock urban environment. The 2012 DARPA Robotics Challenge focused on autonomous emergency-maintenance robots, and new Challenges are still being conceived. The DARPA Subterranean Challenge tasked competitors with building robotic teams to autonomously map, navigate, and search subterranean environments. Such teams could be useful in exploring hazardous areas and in search and rescue.

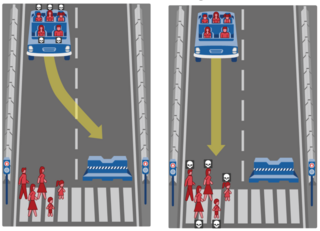

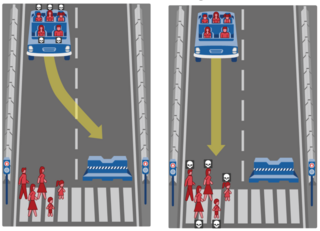

The trolley problem is a series of thought experiments in ethics, psychology and artificial intelligence involving stylized ethical dilemmas of whether to sacrifice one person to save a larger number. The series usually begins with a scenario in which a runaway tram or trolley is on course to collide with and kill a number of people down the track, but a driver or bystander can intervene and divert the vehicle to kill just one person on a different track. Other variations of the runaway vehicle and analogous life-and-death dilemmas are then posed, each containing the option either to do nothing, in which case several people will be killed, or to intervene and sacrifice one initially "safe" person to save the others.

User-centered design (UCD) or user-driven development (UDD) is a framework of processes in which usability goals, user characteristics, environment, tasks and workflow of a product, service or process are given extensive attention at each stage of the design process. Testing is conducted with or without actual users at each stage of the process, from requirements through pre-production models to post-production, completing a circle of proof and ensuring that "development proceeds with the user as the center of focus." Such testing is necessary because it is often very difficult for the designers of a product to understand intuitively the experiences of first-time users and what each user's learning curve may look like. User-centered design is based on an understanding of users and their demands, priorities and experiences; when used, it is known to increase a product's usefulness and usability, which in turn delivers satisfaction to the user. User-centered design applies cognitive science principles to create intuitive, efficient products by understanding users' mental processes, behaviors, and needs.

Laws of robotics are any set of laws, rules, or principles intended as a fundamental framework to underpin the behavior of robots designed to have a degree of autonomy. Robots of this degree of complexity do not yet exist, but they have been widely anticipated in science fiction and film, and are a topic of active research and development in the fields of robotics and artificial intelligence.

Human-centered computing (HCC) studies the design, development, and deployment of mixed-initiative human-computer systems. It emerged from the convergence of multiple disciplines that are concerned both with understanding human beings and with the design of computational artifacts. Human-centered computing is closely related to human-computer interaction and information science. Human-centered computing is usually concerned with systems and practices of technology use, while human-computer interaction is more focused on ergonomics and the usability of computing artifacts, and information science is focused on practices surrounding the collection, manipulation, and use of information.

Weak artificial intelligence is artificial intelligence that implements a limited part of the mind, or, as narrow AI, is focused on one narrow task.

Vehicular automation involves the use of mechatronics, artificial intelligence, and multi-agent systems to assist the operator of a vehicle such as a car, lorry, aircraft, or watercraft. A vehicle that uses automation for tasks such as navigation, to ease but not replace human control, qualifies as semi-autonomous, whereas a fully self-operated vehicle is termed autonomous.

Robot ethics, sometimes known as "roboethics", concerns ethical problems that occur with robots, such as whether robots pose a threat to humans in the long or short run, whether some uses of robots are problematic, and how robots should be designed such that they act 'ethically'. Alternatively, roboethics refers specifically to the ethics of human behavior towards robots, as robots become increasingly advanced. Robot ethics is a sub-field of ethics of technology, specifically information technology, and it has close links to legal as well as socio-economic concerns. Researchers from diverse areas are beginning to tackle ethical questions about creating robotic technology and implementing it in societies, in a way that will still ensure the safety of the human race.

The ethics of artificial intelligence covers a broad range of topics within the field that are considered to have particular ethical stakes. This includes algorithmic biases, fairness, automated decision-making, accountability, privacy, and regulation. It also covers various emerging or potential future challenges such as machine ethics, lethal autonomous weapon systems, arms race dynamics, AI safety and alignment, technological unemployment, AI-enabled misinformation, how to treat certain AI systems if they have a moral status, artificial superintelligence and existential risks.

Machine ethics is a part of the ethics of artificial intelligence concerned with adding or ensuring moral behaviors of man-made machines that use artificial intelligence, otherwise known as artificial intelligent agents. Machine ethics differs from other ethical fields related to engineering and technology. It should not be confused with computer ethics, which focuses on human use of computers. It should also be distinguished from the philosophy of technology, which concerns itself with technology's grander social effects.

User research focuses on understanding user behaviors, needs and motivations through interviews, surveys, usability evaluations and other forms of feedback methodologies. It is used to understand how people interact with products and evaluate whether design solutions meet their needs. This field of research aims at improving the user experience (UX) of products, services, or processes by incorporating experimental and observational research methods to guide the design, development, and refinement of a product. User research is used to improve a multitude of products like websites, mobile phones, medical devices, banking, government services and many more. It is an iterative process that can be used at any time during product development and is a core part of user-centered design.

The Machine Question: Critical Perspectives on AI, Robots, and Ethics is a 2012 nonfiction book by David J. Gunkel. It discusses the evolution of the theory of human ethical responsibilities toward non-human things, the extent to which intelligent, autonomous machines can be considered to have legitimate moral responsibilities, and what legitimate claims to moral consideration they can hold. The book received the 2012 Best Single Authored Book award from the Communication Ethics Division of the National Communication Association.

Moral Machine is an online platform, developed by Iyad Rahwan's Scalable Cooperation group at the Massachusetts Institute of Technology, that generates moral dilemmas and collects information on the decisions that people make between two destructive outcomes. The platform was conceived by Iyad Rahwan and social psychologists Azim Shariff and Jean-François Bonnefon ahead of the publication of their article about the ethics of self-driving cars. The key contributors to building the platform were MIT Media Lab graduate students Edmond Awad and Sohan Dsouza.

Robotic governance provides a regulatory framework to deal with autonomous and intelligent machines. This includes research and development activities as well as handling of these machines. The idea is related to the concepts of corporate governance, technology governance and IT-governance, which provide a framework for the management of organizations or the focus of a global IT infrastructure.

Ajung Moon is a Korean-Canadian experimental roboticist specializing in ethics and responsible design of interactive robots and autonomous intelligent systems. She is an assistant professor of electrical and computer engineering at McGill University and the Director of the McGill Responsible Autonomy & Intelligent System Ethics (RAISE) lab. Her research interests lie in human-robot interaction, AI ethics, and robot ethics.

Autonomous mobility on demand (AMoD) is a service consisting of a fleet of autonomous vehicles used for one-way passenger mobility. An AMoD fleet operates in a specific and limited environment, such as a city or a rural area.