Related Research Articles

IBM mainframes are large computer systems produced by IBM since 1952. During the 1960s and 1970s, IBM dominated the computer market with the 7000 series and the later System/360, followed by the System/370. Current mainframe computers in IBM's line of business computers are developments of the basic design of the System/360.

In computing, endianness is the order in which bytes within a word of digital data are transmitted over a data communication medium or addressed in computer memory, counting only byte significance compared to earliness. Endianness is primarily expressed as big-endian (BE) or little-endian (LE), terms introduced by Danny Cohen into computer science for data ordering in an Internet Experiment Note published in 1980. The adjective endian has its origin in the writings of 18th century Anglo-Irish writer Jonathan Swift. In the 1726 novel Gulliver's Travels, he portrays the conflict between sects of Lilliputians divided into those breaking the shell of a boiled egg from the big end or from the little end. By analogy, a CPU may read a digital word big end first, or little end first.

The IBM 704 is the model name of a large digital mainframe computer introduced by IBM in 1954. It was the first mass-produced computer with hardware for floating-point arithmetic. The IBM 704 Manual of operation states:

The type 704 Electronic Data-Processing Machine is a large-scale, high-speed electronic calculator controlled by an internally stored program of the single address type.

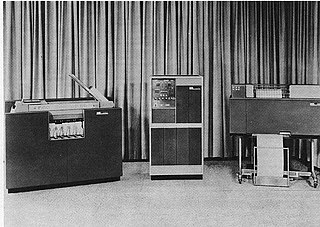

The IBM 1620 was announced by IBM on October 21, 1959, and marketed as an inexpensive scientific computer. After a total production of about two thousand machines, it was withdrawn on November 19, 1970. Modified versions of the 1620 were used as the CPU of the IBM 1710 and IBM 1720 Industrial Process Control Systems.

The IBM 1401 is a variable-wordlength decimal computer that was announced by IBM on October 5, 1959. The first member of the highly successful IBM 1400 series, it was aimed at replacing unit record equipment for processing data stored on punched cards and at providing peripheral services for larger computers. The 1401 is considered by IBM to be the Ford Model-T of the computer industry due to its mass appeal. Over 12,000 units were produced and many were leased or resold after they were replaced with newer technology. The 1401 was withdrawn on February 8, 1971.

The IBM 7090 is a second-generation transistorized version of the earlier IBM 709 vacuum tube mainframe computer that was designed for "large-scale scientific and technological applications". The 7090 is the fourth member of the IBM 700/7000 series scientific computers. The first 7090 installation was in December 1959. In 1960, a typical system sold for $2.9 million or could be rented for $63,500 a month.

The IBM 700/7000 series is a series of large-scale (mainframe) computer systems that were made by IBM through the 1950s and early 1960s. The series includes several different, incompatible processor architectures. The 700s use vacuum-tube logic and were made obsolete by the introduction of the transistorized 7000s. The 7000s, in turn, were eventually replaced with System/360, which was announced in 1964. However the 360/65, the first 360 powerful enough to replace 7000s, did not become available until November 1965. Early problems with OS/360 and the high cost of converting software kept many 7000s in service for years afterward.

The history of computing hardware starting at 1960 is marked by the conversion from vacuum tube to solid-state devices such as transistors and then integrated circuit (IC) chips. Around 1953 to 1959, discrete transistors started being considered sufficiently reliable and economical that they made further vacuum tube computers uncompetitive. Metal–oxide–semiconductor (MOS) large-scale integration (LSI) technology subsequently led to the development of semiconductor memory in the mid-to-late 1960s and then the microprocessor in the early 1970s. This led to primary computer memory moving away from magnetic-core memory devices to solid-state static and dynamic semiconductor memory, which greatly reduced the cost, size, and power consumption of computers. These advances led to the miniaturized personal computer (PC) in the 1970s, starting with home computers and desktop computers, followed by laptops and then mobile computers over the next several decades.

An index register in a computer's CPU is a processor register used for pointing to operand addresses during the run of a program. It is useful for stepping through strings and arrays. It can also be used for holding loop iterations and counters. In some architectures it is used for read/writing blocks of memory. Depending on the architecture it may be a dedicated index register or a general-purpose register. Some instruction sets allow more than one index register to be used; in that case additional instruction fields may specify which index registers to use.

In computing, a memory address is a reference to a specific memory location used at various levels by software and hardware. Memory addresses are fixed-length sequences of digits conventionally displayed and manipulated as unsigned integers. Such numerical semantic bases itself upon features of CPU, as well upon use of the memory like an array endorsed by various programming languages.

Addressing modes are an aspect of the instruction set architecture in most central processing unit (CPU) designs. The various addressing modes that are defined in a given instruction set architecture define how the machine language instructions in that architecture identify the operand(s) of each instruction. An addressing mode specifies how to calculate the effective memory address of an operand by using information held in registers and/or constants contained within a machine instruction or elsewhere.

The IBM 1400 series are second-generation (transistor) mid-range business decimal computers that IBM marketed in the early 1960s. The computers were offered to replace tabulating machines like the IBM 407. The 1400-series machines stored information in magnetic cores as variable-length character strings separated on the left by a special bit, called a "wordmark," and on the right by a "record mark." Arithmetic was performed digit-by-digit. Input and output support included punched card, magnetic tape, and high-speed line printers. Disk storage was also available.

In computing, a word is the natural unit of data used by a particular processor design. A word is a fixed-sized datum handled as a unit by the instruction set or the hardware of the processor. The number of bits or digits in a word is an important characteristic of any specific processor design or computer architecture.

Autocoder is any of a group of assemblers for a number of IBM computers of the 1950s and 1960s. The first Autocoders appear to have been the earliest assemblers to provide a macro facility.

The Honeywell 200 was a character-oriented two-address commercial computer introduced by Honeywell in December 1963, the basis of later models in Honeywell 200 Series, including 1200, 1250, 2200, 3200, 4200 and others, and the character processor of the Honeywell 8200 (1968).

The IBM System/360 Model 30 was a low-end member of the IBM System/360 family. It was announced on April 7, 1964, shipped in 1965, and withdrawn on October 7, 1977. The Model 30 was designed by IBM's General Systems Division in Endicott, New York, and manufactured in Endicott and other IBM manufacturing sites outside of U.S.

A decimal computer is a computer that can represent numbers and addresses in decimal and that provides instructions to operate on those numbers and addresses directly in decimal, without conversion to a pure binary representation. Some also had a variable wordlength, which enabled operations on numbers with a large number of digits.

This timeline of binary prefixes lists events in the history of the evolution, development, and use of units of measure that are germane to the definition of the binary prefixes by the International Electrotechnical Commission (IEC) in 1998, used primarily with units of information such as the bit and the byte.

BCD, also called alphanumeric BCD, alphameric BCD, BCD Interchange Code, or BCDIC, is a family of representations of numerals, uppercase Latin letters, and some special and control characters as six-bit character codes.

References

- ↑ IBM (April 1962). IBM 1401 Data Processing System: Reference Manual (PDF). p. 20. A24-1403-5. Retrieved 2019-09-30.

- ↑ "IBM Archives: 1620 Data Processing System". 23 January 2003. Archived from the original on January 14, 2005.