Related Research Articles

A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment. Individuals create their own "subjective reality" from their perception of the input. An individual's construction of reality, not the objective input, may dictate their behavior in the world. Thus, cognitive biases may sometimes lead to perceptual distortion, inaccurate judgment, illogical interpretation, and irrationality.

In social psychology, the fundamental attribution error (FAE) is a cognitive attribution bias in which observers underemphasize situational and environmental factors for the behavior of an actor while overemphasizing dispositional or personality factors. In other words, observers tend to overattribute the behaviors of others to their personality (e.g., he is late because he's selfish) and underattribute them to the situation or context (e.g., he is late because he got stuck in traffic). Although personality traits and predispositions are considered to be observable facts in psychology, the fundamental attribution error is an error because it misinterprets their effects.

Hindsight bias, also known as the knew-it-all-along phenomenon or creeping determinism, is the common tendency for people to perceive past events as having been more predictable than they were.

In psychology, an attribution bias, or attributional error, is a cognitive bias that refers to the systematic errors people make when they evaluate or try to find reasons for their own and others' behaviors. Like other cognitive biases, it is a systematic pattern of deviation from norm or rationality in judgment, often leading to perceptual distortions, inaccurate assessments, or illogical interpretations of events and behaviors.

Trait ascription bias is the tendency for people to view themselves as relatively variable in terms of personality, behavior and mood while viewing others as much more predictable in their personal traits across different situations. More specifically, it is a tendency to describe one's own behavior in terms of situational factors while preferring to describe another's behavior by ascribing fixed dispositions to their personality. This may occur because people's own internal states are more readily observable and available to them than those of others.

The halo effect is the proclivity for positive impressions of a person, company, country, brand, or product in one area to positively influence one's opinion or feelings in other areas. The halo effect is "the name given to the phenomenon whereby evaluators tend to be influenced by their previous judgments of performance or personality." It is a cognitive bias that can prevent someone from forming an image of a person, a product or a brand based on the sum of all objective circumstances at hand.

The anchoring effect is a psychological phenomenon in which an individual's judgments or decisions are influenced by a reference point or "anchor", which can be completely irrelevant. Both numeric and non-numeric anchoring have been reported in research. In numeric anchoring, once the value of the anchor is set, subsequent arguments, estimates, etc. made by an individual may differ from what they would have been without the anchor. For example, an individual may be more likely to purchase a car if it is placed alongside a more expensive model. Prices discussed in negotiations that are lower than the anchor may seem reasonable, perhaps even cheap, to the buyer, even if those prices are still higher than the actual market value of the car. As another example, when estimating the orbit of Mars, one might start with the Earth's orbit and then adjust upward until reaching a value that seems reasonable.

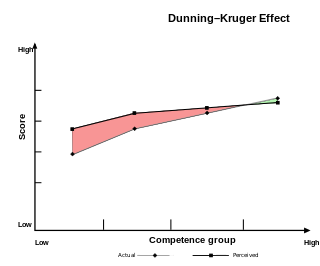

The Dunning–Kruger effect is a cognitive bias in which people with limited competence in a particular domain overestimate their abilities. It was first described by Justin Kruger and David Dunning in 1999. Some researchers also include the opposite effect for high performers: their tendency to underestimate their skills. In popular culture, the Dunning–Kruger effect is often misunderstood as a claim about general overconfidence of people with low intelligence instead of specific overconfidence of people unskilled at a particular task.

The overconfidence effect is a well-established bias in which a person's subjective confidence in their judgments is reliably greater than the objective accuracy of those judgments, especially when confidence is relatively high. Overconfidence is one example of a miscalibration of subjective probabilities. Throughout the research literature, overconfidence has been defined in three distinct ways: (1) overestimation of one's actual performance; (2) overplacement of one's performance relative to others; and (3) overprecision in expressing unwarranted certainty in the accuracy of one's beliefs.
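The gap between subjective confidence and objective accuracy can be illustrated with a small calculation. The sketch below is only one simple way to quantify it, assuming each judgment comes with a stated confidence between 0 and 1 and a known right-or-wrong outcome; the function name `overconfidence_score` and the sample data are hypothetical and not taken from any study.

```python
from statistics import mean


def overconfidence_score(confidences, correct):
    """Return mean stated confidence minus the proportion of correct answers.

    A positive result indicates overconfidence, a negative one underconfidence.
    """
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("inputs must be non-empty and of equal length")
    accuracy = mean(1.0 if c else 0.0 for c in correct)
    return mean(confidences) - accuracy


# Hypothetical data: confidence stated for five answers, and whether each was right.
confidences = [0.9, 0.8, 0.95, 0.7, 0.85]
correct = [True, False, True, False, True]

score = overconfidence_score(confidences, correct)
print(f"mean confidence - accuracy = {score:.2f}")
```

In this made-up sample the average confidence is 0.84 while only 60% of the answers are correct, so the score is positive.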

The subadditivity effect is the tendency to judge the probability of the whole to be less than the sum of the probabilities of its parts.
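A short numerical check can make the definition concrete. The sketch below assumes the parts are mutually exclusive and together make up the whole, so that coherent judgments would add up exactly; the helper `subadditivity_gap` and the judged probabilities are hypothetical illustrations, not experimental data.

```python
def subadditivity_gap(whole_estimate, part_estimates):
    """Return the sum of the judged part probabilities minus the judged whole.

    For mutually exclusive, exhaustive parts, a coherent judge gives a gap of 0.
    A positive gap is the pattern the subadditivity effect describes.
    """
    return sum(part_estimates) - whole_estimate


# Hypothetical judgments: one event judged as a whole, then as three
# mutually exclusive sub-cases judged separately.
whole = 0.60
parts = [0.30, 0.25, 0.20]

gap = subadditivity_gap(whole, parts)
print(f"sum of parts - whole = {gap:.2f}")
```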

Optimism bias, or optimistic bias, is a cognitive bias that causes someone to believe that they themselves are less likely than others to experience a negative event. It is also known as unrealistic optimism or comparative optimism.

The negativity bias, also known as the negativity effect, is a cognitive bias in which things of a more negative nature have a greater effect on one's psychological state and processes than neutral or positive things, even when they are of equal intensity. In other words, something very positive will generally have less of an impact on a person's behavior and cognition than something equally emotional but negative. The negativity bias has been investigated within many different domains, including the formation of impressions and general evaluations; attention, learning, and memory; and decision-making and risk considerations.

Positive illusions are unrealistically favorable attitudes that people have towards themselves or to people that are close to them. Positive illusions are a form of self-deception or self-enhancement that feel good; maintain self-esteem; or avoid discomfort, at least in the short term. There are three general forms: inflated assessment of one's own abilities, unrealistic optimism about the future, and an illusion of control. The term "positive illusions" originates in a 1988 paper by Taylor and Brown. "Taylor and Brown's (1988) model of mental health maintains that certain positive illusions are highly prevalent in normal thought and predictive of criteria traditionally associated with mental health."

Self-enhancement is a type of motivation that works to make people feel good about themselves and to maintain self-esteem. This motive becomes especially prominent in situations of threat, failure or blows to one's self-esteem. Self-enhancement involves a preference for positive over negative self-views. It is one of the three self-evaluation motives along with self-assessment and self-verification. Self-evaluation motives drive the process of self-regulation, that is, how people control and direct their own actions.

In social psychology, illusory superiority is a cognitive bias wherein people overestimate their own qualities and abilities compared to others. Illusory superiority is one of many positive illusions, relating to the self, that are evident in the study of intelligence, the effective performance of tasks and tests, and the possession of desirable personal characteristics and personality traits. Overestimation of abilities compared to an objective measure is known as the overconfidence effect.

In cognitive psychology and decision science, conservatism, or conservatism bias, is the tendency to revise one's beliefs insufficiently when presented with new evidence. It describes human belief revision in which people overweight the prior distribution and underweight new sample evidence when compared with Bayesian belief revision.
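The Bayesian benchmark against which conservatism is measured can be worked through in a few lines. The sketch below uses a hypothetical two-urn task (urn A is 70% red, urn B is 30% red, both equally likely beforehand) and a hypothetical reported estimate of 0.75; the scenario, the numbers, and the function name `bayes_posterior` are all assumptions chosen for illustration.

```python
def bayes_posterior(prior, likelihood_h, likelihood_alt):
    """Posterior probability of hypothesis H via Bayes' rule for two hypotheses."""
    numerator = prior * likelihood_h
    return numerator / (numerator + (1 - prior) * likelihood_alt)


# Hypothetical task: 12 draws with replacement yield 8 red and 4 blue chips.
p_red_a, p_red_b = 0.7, 0.3
reds, blues = 8, 4

# The binomial coefficient is the same under both urns and cancels out,
# so only the product of per-draw probabilities is needed.
like_a = p_red_a**reds * (1 - p_red_a) ** blues
like_b = p_red_b**reds * (1 - p_red_b) ** blues

posterior_a = bayes_posterior(0.5, like_a, like_b)

# Hypothetical estimate from a conservative judge who under-uses the sample.
reported_a = 0.75
shortfall = posterior_a - reported_a

print(f"Bayesian posterior for urn A: {posterior_a:.3f}")
print(f"shortfall of reported estimate: {shortfall:.3f}")
```

Under these assumptions the Bayesian posterior for urn A is about 0.967, so an estimate of 0.75 moves in the right direction from the 0.5 prior but stops well short of what the evidence supports.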

Naïve cynicism is a philosophy of mind, cognitive bias and form of psychological egoism that occurs when people naïvely expect more egocentric bias in others than actually is the case.

The hard–easy effect is a cognitive bias that manifests itself as a tendency to overestimate the probability of one's success at a task perceived as hard, and to underestimate the likelihood of one's success at a task perceived as easy. The hard–easy effect takes place, for example, when individuals exhibit a degree of underconfidence in answering relatively easy questions and a degree of overconfidence in answering relatively difficult questions. "Hard tasks tend to produce overconfidence but worse-than-average perceptions," reported Katherine A. Burson, Richard P. Larrick, and Jack B. Soll in a 2005 study, "whereas easy tasks tend to produce underconfidence and better-than-average effects."

The false-uniqueness effect is an attributional type of cognitive bias in social psychology that describes how people tend to view their qualities, traits, and personal attributes as unique when in reality they are not. This bias is often measured by looking at the difference between estimates that people make about how many of their peers share a certain trait or behaviour and the actual number of peers who report these traits and behaviours.
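The measurement described above, comparing estimated with actual prevalence, can be sketched as a simple difference. The code assumes each respondent both estimates the share of peers who have a trait and reports whether they have it themselves; the function name `false_uniqueness_gap` and the survey values are hypothetical.

```python
from statistics import mean


def false_uniqueness_gap(peer_estimates, self_reports):
    """Return actual prevalence minus mean estimated prevalence.

    peer_estimates: each respondent's estimate (0 to 1) of the share of peers
        who have the trait.
    self_reports: whether each respondent reports having the trait.
    A positive gap means the trait is more common than respondents believe.
    """
    actual = mean(1.0 if r else 0.0 for r in self_reports)
    return actual - mean(peer_estimates)


# Hypothetical survey of four respondents.
peer_estimates = [0.20, 0.30, 0.25, 0.25]
self_reports = [True, True, False, True]

gap = false_uniqueness_gap(peer_estimates, self_reports)
print(f"actual - estimated prevalence = {gap:.2f}")
```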
