In mathematics, and more specifically in linear algebra, a linear map is a mapping between two vector spaces that preserves the operations of vector addition and scalar multiplication. The same name and definition are also used for the more general case of modules over a ring; see Module homomorphism.
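
The two preservation properties can be checked numerically for a map given by a matrix. The following is a minimal sketch in plain Python; the matrix `A`, the vectors `u` and `v`, and the scalar `c` are arbitrary illustrative values, and the helper names are hypothetical.

```python
def apply(matrix, vector):
    """Apply the map x -> matrix * x (matrix-vector product)."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def add(u, v):
    """Component-wise vector addition."""
    return [a + b for a, b in zip(u, v)]

def scale(c, v):
    """Multiply every component of v by the scalar c."""
    return [c * a for a in v]

A = [[2, 1], [0, 3]]          # illustrative matrix, not from the text
u, v, c = [1, 2], [4, -1], 5  # illustrative vectors and scalar

# f(u + v) == f(u) + f(v)
additive = apply(A, add(u, v)) == add(apply(A, u), apply(A, v))
# f(c * u) == c * f(u)
homogeneous = apply(A, scale(c, u)) == scale(c, apply(A, u))
```

Both flags evaluate to `True` here. A check on sample vectors illustrates the definition but does not by itself prove linearity.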

In mathematics, a set B of vectors in a vector space V is called a basis if every element of V may be written in a unique way as a finite linear combination of elements of B. The coefficients of this linear combination are referred to as components or coordinates of the vector with respect to B. The elements of a basis are called basis vectors.
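
As an illustration of coordinates with respect to a basis, the sketch below finds the unique coefficients of a vector in a two-element basis of the plane. It assumes a 2-dimensional space and uses Cramer's rule; the function name `coordinates` and the sample basis are hypothetical.

```python
from fractions import Fraction

def coordinates(b1, b2, v):
    """Return (c1, c2) such that v = c1*b1 + c2*b2, using Cramer's rule."""
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        raise ValueError("b1 and b2 are linearly dependent, so they are not a basis")
    c1 = Fraction(v[0] * b2[1] - b2[0] * v[1], det)
    c2 = Fraction(b1[0] * v[1] - v[0] * b1[1], det)
    return c1, c2

b1, b2 = (1, 1), (1, -1)             # illustrative basis of the plane
c1, c2 = coordinates(b1, b2, (3, 1)) # coordinates of (3, 1) in that basis
```

With these values, `(3, 1) = 2*(1, 1) + 1*(1, -1)`, so the coordinates are 2 and 1.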

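Linear algebra is the branch of mathematics concerning linear equations such as $a_1 x_1 + \cdots + a_n x_n = b$, linear maps such as $(x_1, \ldots, x_n) \mapsto a_1 x_1 + \cdots + a_n x_n$, and their representations in vector spaces and through matrices.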

In mathematics, an operator is generally a mapping or function that acts on elements of a space to produce elements of another space. There is no general definition of an operator, but the term is often used in place of function when the domain is a set of functions or other structured objects. Also, the domain of an operator is often difficult to characterize explicitly, and may be extended so as to act on related objects. See Operator (physics) for other examples.

In mathematics and physics, a vector space is a set whose elements, often called vectors, may be added together and multiplied ("scaled") by numbers called scalars. Scalars are often real numbers, but can be complex numbers or, more generally, elements of any field. The operations of vector addition and scalar multiplication must satisfy certain requirements, called vector axioms. The terms real vector space and complex vector space are often used to specify the nature of the scalars: real numbers or complex numbers, respectively.

In Euclidean geometry, an affine transformation or affinity is a geometric transformation that preserves lines and parallelism, but not necessarily Euclidean distances and angles.
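
A small sketch can make the distinction concrete: a map of the form p ↦ Ap + b keeps parallel segments parallel while changing lengths. The matrix `A`, the translation `b`, and the sample points are arbitrary illustrative values, and the helper names are hypothetical.

```python
def affine(A, b, p):
    """Apply the planar affine map p -> A p + b."""
    return (A[0][0] * p[0] + A[0][1] * p[1] + b[0],
            A[1][0] * p[0] + A[1][1] * p[1] + b[1])

def direction(p, q):
    """Direction vector from point p to point q."""
    return (q[0] - p[0], q[1] - p[1])

def parallel(d1, d2):
    """Two planar directions are parallel when their 2D cross product is zero."""
    return d1[0] * d2[1] - d1[1] * d2[0] == 0

def squared_length(d):
    return d[0] ** 2 + d[1] ** 2

A, b = [[2, 1], [0, 1]], (3, -2)  # illustrative invertible matrix and translation
p0, p1 = (0, 0), (1, 2)           # first segment, direction (1, 2)
q0, q1 = (5, 1), (7, 5)           # second segment, direction (2, 4)

image_p = direction(affine(A, b, p0), affine(A, b, p1))
image_q = direction(affine(A, b, q0), affine(A, b, q1))

parallel_before = parallel(direction(p0, p1), direction(q0, q1))
parallel_after = parallel(image_p, image_q)
length_changed = squared_length(image_p) != squared_length(direction(p0, p1))
```

In this example the two segments remain parallel after the transformation, while the squared length of the first segment changes from 5 to 20.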

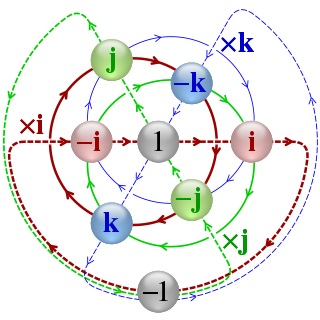

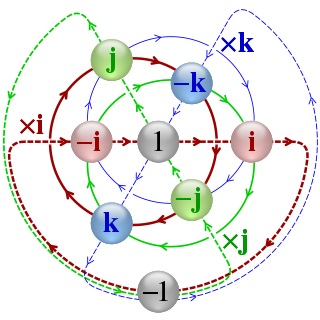

In mathematics, the quaternion number system extends the complex numbers. Quaternions were first described by the Irish mathematician William Rowan Hamilton in 1843 and applied to mechanics in three-dimensional space. Hamilton defined a quaternion as the quotient of two directed lines in a three-dimensional space, or, equivalently, as the quotient of two vectors. Multiplication of quaternions is noncommutative.
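
Noncommutativity can be seen directly from the Hamilton product. The sketch below represents a quaternion w + xi + yj + zk as a tuple `(w, x, y, z)`; this representation and the function name `qmul` are illustrative choices.

```python
def qmul(p, q):
    """Hamilton product of two quaternions given as (w, x, y, z) tuples."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )

i = (0, 1, 0, 0)
j = (0, 0, 1, 0)

ij = qmul(i, j)  # equals k
ji = qmul(j, i)  # equals -k
```

Here `ij` is `(0, 0, 0, 1)` and `ji` is `(0, 0, 0, -1)`, so the order of the factors matters.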

In the theory of vector spaces, a set of vectors is said to be linearly independent if there exists no nontrivial linear combination of the vectors that equals the zero vector. If such a linear combination exists, then the vectors are said to be linearly dependent. These concepts are central to the definition of dimension.
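
One common way to test linear independence for finitely many coordinate vectors is to compare their rank with their count. The sketch below computes the rank by Gaussian elimination over the rationals; the function names are hypothetical and exact arithmetic via `fractions.Fraction` is an implementation choice.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of equal-length vectors, by Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for col in range(ncols):
        # Find a row at or below position r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def linearly_independent(vectors):
    """Vectors are independent exactly when the rank equals their number."""
    return rank(vectors) == len(vectors)

dependent = [(1, 0, 0), (0, 1, 0), (1, 1, 0)]    # third = first + second
independent = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
```

For the sample data, `linearly_independent(dependent)` is `False` and `linearly_independent(independent)` is `True`.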

In mathematics, the general linear group of degree n is the set of n×n invertible matrices, together with the operation of ordinary matrix multiplication. This forms a group, because the product of two invertible matrices is again invertible, and the inverse of an invertible matrix is invertible, with the identity matrix as the identity element of the group. The group is so named because the columns of an invertible matrix are linearly independent, hence the vectors/points they define are in general linear position, and matrices in the general linear group take points in general linear position to points in general linear position.

In mathematics, the orthogonal group in dimension $n$, denoted $\operatorname{O}(n)$, is the group of distance-preserving transformations of a Euclidean space of dimension $n$ that preserve a fixed point, where the group operation is given by composing transformations. The orthogonal group is sometimes called the general orthogonal group, by analogy with the general linear group. Equivalently, it is the group of $n \times n$ orthogonal matrices, where the group operation is given by matrix multiplication. The orthogonal group is an algebraic group and a Lie group. It is compact.
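
In matrix terms, a real square matrix Q is orthogonal exactly when QᵀQ equals the identity matrix. The following sketch tests that condition with exact comparison, so it assumes integer or rational entries; for floating-point matrices a tolerance would be needed. The helper names and sample matrices are illustrative.

```python
def transpose(m):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def is_orthogonal(q):
    """Check Q^T Q == I exactly (suitable for integer or rational entries)."""
    n = len(q)
    identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    return matmul(transpose(q), q) == identity

rotation = [[0, -1], [1, 0]]  # rotation by a quarter turn
shear = [[1, 1], [0, 1]]      # preserves area but not distances
```

The quarter-turn rotation passes the test, while the shear does not.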

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero; the term usually refers to square matrices. Elements of the main diagonal can be either zero or nonzero. An example of a 2×2 diagonal matrix is $\begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix}$, while an example of a 3×3 diagonal matrix is $\begin{bmatrix} 6 & 0 & 0 \\ 0 & 5 & 0 \\ 0 & 0 & 4 \end{bmatrix}$. An identity matrix of any size, or any multiple of it, is a diagonal matrix.

In mathematics, an algebra over a field is a vector space equipped with a bilinear product. Thus, an algebra is an algebraic structure consisting of a set together with operations of multiplication and addition and scalar multiplication by elements of a field and satisfying the axioms implied by "vector space" and "bilinear".

In mathematics, and in particular linear algebra, the Moore–Penrose inverse of a matrix is the most widely known generalization of the inverse matrix. It was independently described by E. H. Moore in 1920, Arne Bjerhammar in 1951, and Roger Penrose in 1955. Earlier, Erik Ivar Fredholm had introduced the concept of a pseudoinverse of integral operators in 1903. When referring to a matrix, the term pseudoinverse, without further specification, is often used to indicate the Moore–Penrose inverse. The term generalized inverse is sometimes used as a synonym for pseudoinverse.
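
For reference, the Moore–Penrose inverse $A^{+}$ of a real or complex matrix $A$ is usually characterized as the unique matrix satisfying the four Penrose conditions below, where $^{*}$ denotes the conjugate transpose. The notation is a common convention and is not taken from the text above.

```latex
\begin{aligned}
A A^{+} A &= A, \\
A^{+} A A^{+} &= A^{+}, \\
(A A^{+})^{*} &= A A^{+}, \\
(A^{+} A)^{*} &= A^{+} A.
\end{aligned}
```

When $A$ is square and invertible, $A^{-1}$ satisfies all four conditions, so $A^{+} = A^{-1}$ in that case.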

In linear algebra, the quotient of a vector space $V$ by a subspace $N$ is a vector space obtained by "collapsing" $N$ to zero. The space obtained is called a quotient space and is denoted $V/N$.

In abstract algebra, the split-quaternions or coquaternions form an algebraic structure introduced by James Cockle in 1849 under the latter name. They form an associative algebra of dimension four over the real numbers.

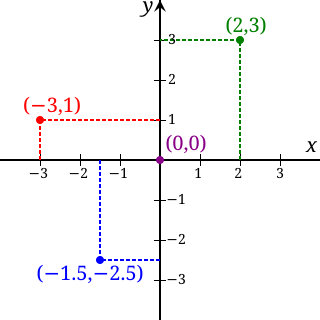

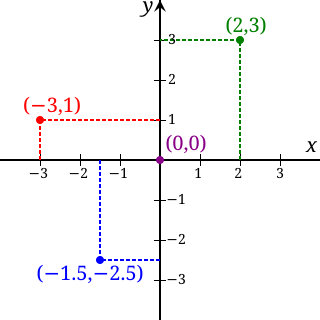

In mathematics, the real coordinate space of dimension n, denoted $\mathbf{R}^n$ or $\mathbb{R}^n$, is the set of the n-tuples of real numbers, that is, the set of all sequences of n real numbers. Special cases are called the real line $\mathbb{R}^1$ and the real coordinate plane $\mathbb{R}^2$. With component-wise addition and scalar multiplication, it is a real vector space, and its elements are called coordinate vectors.

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a constant factor when that linear transformation is applied to it. The corresponding eigenvalue, often represented by $\lambda$, is the multiplying factor.
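
The defining relation, that applying the transformation to the vector gives a scalar multiple of the same vector, can be verified directly for a matrix. This is a minimal sketch; the matrix `A` and the candidate pairs are illustrative values, and `is_eigenpair` is a hypothetical helper name.

```python
def apply(matrix, vector):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def is_eigenpair(matrix, vector, eigenvalue):
    """True when vector is nonzero and matrix * vector == eigenvalue * vector."""
    if all(x == 0 for x in vector):
        return False  # the zero vector is excluded by definition
    return apply(matrix, vector) == [eigenvalue * x for x in vector]

A = [[2, 1], [1, 2]]  # illustrative symmetric matrix

first = is_eigenpair(A, [1, 1], 3)    # A maps (1, 1) to (3, 3)
second = is_eigenpair(A, [1, -1], 1)  # A maps (1, -1) to (1, -1)
third = is_eigenpair(A, [1, 0], 2)    # A maps (1, 0) to (2, 1), not a multiple
```

The first two checks succeed and the third fails, since (2, 1) is not a scalar multiple of (1, 0).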

In mathematics, especially in linear algebra and matrix theory, the vectorization of a matrix is a linear transformation which converts the matrix into a vector. Specifically, the vectorization of an m × n matrix A, denoted vec(A), is the mn × 1 column vector obtained by stacking the columns of the matrix A on top of one another:

$$\operatorname{vec}(A) = [a_{1,1}, \ldots, a_{m,1}, a_{1,2}, \ldots, a_{m,2}, \ldots, a_{1,n}, \ldots, a_{m,n}]^{\mathrm{T}}$$
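
Column stacking is short to express in code. The sketch below assumes the matrix is stored as a list of rows and returns the stacked column as a flat list; the function name `vec` mirrors the notation, and the sample matrix is illustrative.

```python
def vec(matrix):
    """Stack the columns of a matrix (list of rows) into one flat list."""
    # zip(*matrix) iterates over the columns; entries are read top to bottom.
    return [entry for column in zip(*matrix) for entry in column]

A = [[1, 2],
     [3, 4],
     [5, 6]]  # a 3 x 2 matrix

stacked = vec(A)  # first column (1, 3, 5), then second column (2, 4, 6)
```

For this 3 × 2 matrix the result has 6 entries, with the first column followed by the second.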

In mathematics, a definite quadratic form is a quadratic form over some real vector space V that has the same sign for every non-zero vector of V. According to that sign, the quadratic form is called positive-definite or negative-definite.
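
For a form in two real variables, definiteness can be decided from the coefficients. The sketch below uses Sylvester's criterion for the form q(x, y) = a·x² + 2b·xy + c·y²; restricting to two variables and the function name `classify` are simplifying assumptions made for illustration.

```python
def classify(a, b, c):
    """Classify q(x, y) = a*x**2 + 2*b*x*y + c*y**2 via Sylvester's criterion."""
    det = a * c - b * b  # determinant of the symmetric matrix [[a, b], [b, c]]
    if a > 0 and det > 0:
        return "positive-definite"
    if a < 0 and det > 0:
        return "negative-definite"
    return "not definite"  # covers semidefinite and indefinite forms

def q(a, b, c, x, y):
    """Evaluate the quadratic form at the point (x, y)."""
    return a * x * x + 2 * b * x * y + c * y * y
```

For example, `classify(2, 1, 2)` gives `"positive-definite"`, `classify(-1, 0, -3)` gives `"negative-definite"`, and `classify(1, 2, 1)` gives `"not definite"`, since that form takes the value 1 at (1, 0) and -2 at (1, -1).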

In mathematics, a matrix is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object.