A matrix is typically stored as a two-dimensional array. Each entry in the array represents an element $a_{i,j}$ of the matrix and is accessed by the two indices i and j. Conventionally, i is the row index, numbered from top to bottom, and j is the column index, numbered from left to right. For an m × n matrix, the amount of memory required to store the matrix in this format is proportional to m × n (disregarding the fact that the dimensions of the matrix also need to be stored).

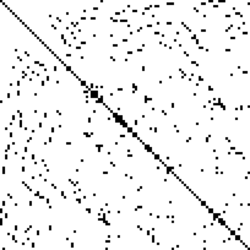

In the case of a sparse matrix, memory requirements can be reduced substantially by storing only the non-zero entries. Depending on the number and distribution of the non-zero entries, different data structures can be used, and they can yield large memory savings compared to the basic approach. The trade-off is that accessing individual elements becomes more complex, and additional structures are needed to recover the original matrix unambiguously.

The compressed sparse row (CSR) or compressed row storage (CRS) or Yale format represents a matrix M by three (one-dimensional) arrays, that respectively contain nonzero values, the extents of rows, and column indices. It is similar to COO, but compresses the row indices, hence the name. This format allows fast row access and matrix-vector multiplications (Mx). The CSR format has been in use since at least the mid-1960s, with the first complete description appearing in 1967. [7]

The CSR format stores a sparse m × n matrix M in row form using three (one-dimensional) arrays (V, COL_INDEX, ROW_INDEX). Let NNZ denote the number of nonzero entries in M. (Note that zero-based indices shall be used here.)

- The arrays V and COL_INDEX are of length NNZ and contain the non-zero values and the column indices of those values, respectively.

- COL_INDEX contains the column in which the corresponding entry of V is located.

- The array ROW_INDEX is of length m + 1 and encodes the index in V and COL_INDEX where each row starts. Equivalently, ROW_INDEX[j] is the total number of nonzeros above row j. The last element is NNZ, i.e., the fictitious index in V immediately after the last valid index NNZ − 1. [8]

For example, the matrix

$$
\begin{pmatrix}
5 & 0 & 0 & 0 \\
0 & 8 & 0 & 0 \\
0 & 0 & 3 & 0 \\
0 & 6 & 0 & 0
\end{pmatrix}
$$

is a 4 × 4 matrix with 4 nonzero elements, hence

V = [ 5 8 3 6 ]

COL_INDEX = [ 0 1 2 1 ]

ROW_INDEX = [ 0 1 2 3 4 ]

assuming a zero-indexed language.
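The construction of the three arrays can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `dense_to_csr` and the list-of-lists input are assumptions made for this example.

```python
def dense_to_csr(matrix):
    """Convert a dense matrix (list of rows) to (V, COL_INDEX, ROW_INDEX).

    Hypothetical helper for illustration; uses zero-based indices.
    """
    values, col_index, row_index = [], [], [0]
    for row in matrix:
        for j, entry in enumerate(row):
            if entry != 0:
                values.append(entry)
                col_index.append(j)
        # The running nonzero count is the index where the next row starts.
        row_index.append(len(values))
    return values, col_index, row_index


M = [
    [5, 0, 0, 0],
    [0, 8, 0, 0],
    [0, 0, 3, 0],
    [0, 6, 0, 0],
]
V, COL_INDEX, ROW_INDEX = dense_to_csr(M)
print(V)          # [5, 8, 3, 6]
print(COL_INDEX)  # [0, 1, 2, 1]
print(ROW_INDEX)  # [0, 1, 2, 3, 4]
```

ROW_INDEX ends up with m + 1 = 5 entries because the starting 0 is stored before any row is scanned and one running count is appended per row.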

To extract a row, we first define:

row_start = ROW_INDEX[row]

row_end = ROW_INDEX[row + 1]

Then we take slices from V and COL_INDEX starting at row_start and ending at row_end.

To extract row 1 (the second row) of this matrix, we set row_start = 1 and row_end = 2. Then we take the slices V[1:2] = [8] and COL_INDEX[1:2] = [1]. We now know that row 1 contains one element, at column 1, with value 8.
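The row extraction described above maps directly onto Python slicing. The sketch below assumes the arrays are plain Python lists; `csr_row` is a hypothetical helper name introduced here.

```python
def csr_row(values, col_index, row_index, row):
    """Return the nonzero values and their column indices for one row.

    Hypothetical helper for illustration; uses zero-based indices.
    """
    row_start = row_index[row]
    row_end = row_index[row + 1]
    # Both arrays are sliced with the same half-open range [row_start, row_end).
    return values[row_start:row_end], col_index[row_start:row_end]


V = [5, 8, 3, 6]
COL_INDEX = [0, 1, 2, 1]
ROW_INDEX = [0, 1, 2, 3, 4]

row_values, row_columns = csr_row(V, COL_INDEX, ROW_INDEX, 1)
print(row_values, row_columns)  # [8] [1]
```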

In this case the CSR representation contains 13 entries (4 in V, 4 in COL_INDEX, and 5 in ROW_INDEX), compared to 16 in the original matrix. In general, CSR stores 2 NNZ + m + 1 entries, so the format saves on memory only when NNZ < (m (n − 1) − 1) / 2.
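The break-even condition can be checked numerically. The sketch below assumes that every stored entry, whether a value or an index, occupies the same amount of memory, which is a simplification; the function names are hypothetical.

```python
def csr_entry_count(m, nnz):
    """Entries stored by CSR: NNZ values, NNZ column indices, m + 1 row extents."""
    return 2 * nnz + m + 1


def csr_saves_memory(m, n, nnz):
    """True when CSR stores fewer entries than the dense m x n array.

    Assumes values and indices have the same size (a simplification).
    """
    return csr_entry_count(m, nnz) < m * n


print(csr_entry_count(4, 4))      # 13
print(csr_saves_memory(4, 4, 4))  # True  (13 < 16)
print(csr_saves_memory(4, 4, 5))  # True  (15 < 16)
print(csr_saves_memory(4, 4, 6))  # False (17 > 16)
```

For m = n = 4 the threshold (m (n − 1) − 1) / 2 evaluates to 5.5, which matches the switch from True to False between NNZ = 5 and NNZ = 6.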

Another example, the matrix

$$
\begin{pmatrix}
10 & 20 & 0 & 0 & 0 & 0 \\
0 & 30 & 0 & 40 & 0 & 0 \\
0 & 0 & 50 & 60 & 70 & 0 \\
0 & 0 & 0 & 0 & 0 & 80
\end{pmatrix}
$$

is a 4 × 6 matrix (24 entries) with 8 nonzero elements, so

V = [ 10 20 30 40 50 60 70 80 ]

COL_INDEX = [ 0 1 1 3 2 3 4 5 ]

ROW_INDEX = [ 0 2 4 7 8 ]

The whole is stored as 21 entries: 8 in V, 8 in COL_INDEX, and 5 in ROW_INDEX.

- ROW_INDEX splits the array V into rows: (10, 20) (30, 40) (50, 60, 70) (80), indicating the index of V (and COL_INDEX) where each row starts and ends;

- COL_INDEX aligns values in columns: (10, 20, ...) (0, 30, 0, 40, ...) (0, 0, 50, 60, 70, 0) (0, 0, 0, 0, 0, 80).
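The two roles can be seen together in a sketch that rebuilds the dense matrix: ROW_INDEX selects which stored values belong to a row, and COL_INDEX places each one in its column. The helper name `csr_to_dense` is hypothetical, and the number of columns is passed in explicitly because the three arrays do not record it.

```python
def csr_to_dense(values, col_index, row_index, n_cols):
    """Rebuild a dense matrix (list of rows) from CSR arrays.

    Hypothetical helper for illustration; n_cols must be supplied by the caller.
    """
    dense = []
    for i in range(len(row_index) - 1):
        row = [0] * n_cols
        # ROW_INDEX delimits the stored entries of row i.
        for k in range(row_index[i], row_index[i + 1]):
            # COL_INDEX gives the column of each stored entry.
            row[col_index[k]] = values[k]
        dense.append(row)
    return dense


V = [10, 20, 30, 40, 50, 60, 70, 80]
COL_INDEX = [0, 1, 1, 3, 2, 3, 4, 5]
ROW_INDEX = [0, 2, 4, 7, 8]

for row in csr_to_dense(V, COL_INDEX, ROW_INDEX, 6):
    print(row)
# [10, 20, 0, 0, 0, 0]
# [0, 30, 0, 40, 0, 0]
# [0, 0, 50, 60, 70, 0]
# [0, 0, 0, 0, 0, 80]
```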

Note that in this format, the first value of ROW_INDEX is always zero and the last is always NNZ, so they are in some sense redundant (although in programming languages where the array length needs to be explicitly stored, NNZ would not be redundant). Nonetheless, this does avoid the need to handle an exceptional case when computing the length of each row, as it guarantees the formula ROW_INDEX[i + 1] − ROW_INDEX[i] works for any row i. Moreover, the memory cost of this redundant storage is likely insignificant for a sufficiently large matrix.
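Because ROW_INDEX[i] and ROW_INDEX[i + 1] are defined for every row, a row-wise loop needs no special handling for the first or last row. The sketch below uses this for row lengths and for a matrix-vector product; `csr_matvec` is a hypothetical name, and the input vector of ones is chosen only for illustration.

```python
def csr_matvec(values, col_index, row_index, x):
    """Compute y = M x for a matrix M given in CSR form.

    Hypothetical helper for illustration; x is a plain list of numbers.
    """
    y = []
    for i in range(len(row_index) - 1):
        total = 0
        # The same extent formula works for every row, including the last.
        for k in range(row_index[i], row_index[i + 1]):
            total += values[k] * x[col_index[k]]
        y.append(total)
    return y


V = [10, 20, 30, 40, 50, 60, 70, 80]
COL_INDEX = [0, 1, 1, 3, 2, 3, 4, 5]
ROW_INDEX = [0, 2, 4, 7, 8]

row_lengths = [ROW_INDEX[i + 1] - ROW_INDEX[i] for i in range(len(ROW_INDEX) - 1)]
print(row_lengths)  # [2, 2, 3, 1]

x = [1, 1, 1, 1, 1, 1]
print(csr_matvec(V, COL_INDEX, ROW_INDEX, x))  # [30, 70, 180, 80]
```

Only the NNZ stored entries are visited, so the work is proportional to NNZ + m rather than to m × n.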

The (old and new) Yale sparse matrix formats are instances of the CSR scheme. The old Yale format works exactly as described above, with three arrays; the new format combines ROW_INDEX and COL_INDEX into a single array and handles the diagonal of the matrix separately. [9]

For logical adjacency matrices, the data array V can be omitted, as the presence of a column index within a row's extent is sufficient to model a binary adjacency relation.
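A minimal sketch of this value-free variant follows. The small directed graph and the helper names `neighbors` and `has_edge` are assumptions made for this example; only COL_INDEX and ROW_INDEX are stored.

```python
def neighbors(col_index, row_index, node):
    """Return the nodes adjacent to `node` (the column indices stored in its row)."""
    return col_index[row_index[node]:row_index[node + 1]]


def has_edge(col_index, row_index, src, dst):
    """True if the adjacency matrix has a nonzero at (src, dst)."""
    return dst in neighbors(col_index, row_index, src)


# Hypothetical directed graph on 4 nodes: 0->1, 0->2, 1->2, 3->0.
COL_INDEX = [1, 2, 2, 0]
ROW_INDEX = [0, 2, 3, 3, 4]

print(neighbors(COL_INDEX, ROW_INDEX, 0))    # [1, 2]
print(neighbors(COL_INDEX, ROW_INDEX, 2))    # []
print(has_edge(COL_INDEX, ROW_INDEX, 3, 0))  # True
print(has_edge(COL_INDEX, ROW_INDEX, 0, 3))  # False
```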

It is likely known as the Yale format because it was proposed in the 1977 Yale Sparse Matrix Package report from the Department of Computer Science at Yale University. [10]

Compressed sparse column (CSC or CCS)

CSC is similar to CSR except that values are read first by column, a row index is stored for each value, and column pointers are stored. For example, CSC is (val, row_ind, col_ptr), where val is an array of the (top-to-bottom, then left-to-right) non-zero values of the matrix; row_ind is the array of row indices corresponding to the values; and col_ptr is the list of val indexes where each column starts. The name is based on the fact that column index information is compressed relative to the COO format. One typically uses another format (LIL, DOK, COO) for construction. This format is efficient for arithmetic operations, column slicing, and matrix-vector products. This is the traditional format for specifying a sparse matrix in MATLAB (via the sparse function).
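The column-wise traversal can be sketched as follows. This is an illustration built directly from a dense list of rows, not the construction route through LIL, DOK, or COO mentioned above; `dense_to_csc` is a hypothetical name.

```python
def dense_to_csc(matrix):
    """Convert a dense matrix (list of rows) to (val, row_ind, col_ptr).

    Hypothetical helper for illustration; uses zero-based indices.
    """
    n_rows = len(matrix)
    n_cols = len(matrix[0]) if matrix else 0
    val, row_ind, col_ptr = [], [], [0]
    for j in range(n_cols):          # left to right over columns
        for i in range(n_rows):      # top to bottom within a column
            if matrix[i][j] != 0:
                val.append(matrix[i][j])
                row_ind.append(i)
        # The running nonzero count is the index where the next column starts.
        col_ptr.append(len(val))
    return val, row_ind, col_ptr


M = [
    [5, 0, 0, 0],
    [0, 8, 0, 0],
    [0, 0, 3, 0],
    [0, 6, 0, 0],
]
val, row_ind, col_ptr = dense_to_csc(M)
print(val)      # [5, 8, 6, 3]
print(row_ind)  # [0, 1, 3, 2]
print(col_ptr)  # [0, 1, 3, 4, 4]
```

The last two entries of col_ptr are equal because the final column contains no nonzero values.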