A computer mouse is a hand-held pointing device that detects two-dimensional motion relative to a surface. This motion is typically translated into the motion of the pointer on a display, which allows smooth control of the graphical user interface of a computer.
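
As a rough illustration of how relative motion can be translated into pointer motion, the following minimal sketch accumulates motion reports into an on-screen position. The `Pointer` class, its `gain` parameter, and the display size are assumptions made for this example; real systems typically also apply acceleration curves.

```python
from dataclasses import dataclass


@dataclass
class Pointer:
    """Pointer position on a display, in pixels (hypothetical model)."""
    x: int
    y: int
    width: int   # display width in pixels (assumed)
    height: int  # display height in pixels (assumed)

    def move(self, dx: int, dy: int, gain: float = 1.0) -> None:
        """Apply one relative motion report (dx, dy), scaled by gain.

        The result is clamped so the pointer never leaves the display.
        """
        self.x = min(max(self.x + round(dx * gain), 0), self.width - 1)
        self.y = min(max(self.y + round(dy * gain), 0), self.height - 1)


pointer = Pointer(x=100, y=100, width=1920, height=1080)
pointer.move(15, -5)    # small movement right and up
pointer.move(-500, 0)   # large movement left, clamped at the screen edge
```

Clamping is one possible design choice; it keeps the pointer visible however far the device is moved.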

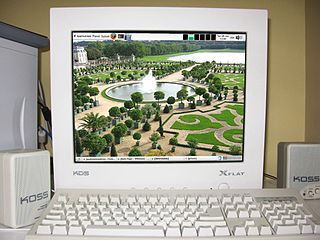

A graphical user interface, or GUI, is a form of user interface that allows users to interact with electronic devices through graphical icons and visual indicators such as secondary notation. In many applications, GUIs are used instead of text-based UIs, which are based on typed command labels or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs), which require commands to be typed on a computer keyboard.

In computing, a pointing device gesture or mouse gesture is a way of combining pointing device or finger movements and clicks that the software recognizes as a specific computer event and responds to accordingly. Such gestures can be useful for people who have difficulties typing on a keyboard. For example, in a web browser, a user can navigate to the previously viewed page by pressing the right pointing device button, moving the pointing device briefly to the left, then releasing the button.
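
The browser example above can be sketched as a small stroke classifier. This is only an illustration: the function names, the `min_distance` threshold, and the command names are hypothetical, and real gesture recognizers usually handle multi-segment strokes and noisy input.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[int, int]

# Hypothetical mapping from a recognized stroke to an application command.
GESTURE_COMMANDS: Dict[str, str] = {
    "left": "navigate_back",
    "right": "navigate_forward",
}


def classify_stroke(path: List[Point], min_distance: int = 30) -> Optional[str]:
    """Classify the pointer path recorded while the button was held.

    Returns "left", "right", "up" or "down", or None if the movement
    was too short to count as a gesture.
    """
    if len(path) < 2:
        return None
    dx = path[-1][0] - path[0][0]
    dy = path[-1][1] - path[0][1]
    if max(abs(dx), abs(dy)) < min_distance:
        return None
    if abs(dx) >= abs(dy):
        return "left" if dx < 0 else "right"
    return "up" if dy < 0 else "down"  # screen y grows downward


def command_for(path: List[Point]) -> Optional[str]:
    """Look up the command for a finished stroke, if any."""
    stroke = classify_stroke(path)
    return GESTURE_COMMANDS.get(stroke) if stroke else None


# Button pressed at (200, 100), pointer dragged left, button released.
print(command_for([(200, 100), (150, 102), (120, 101)]))
```

Comparing only the first and last points keeps the sketch short; it ignores the shape of the path in between.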

A pointing device is a human interface device that allows a user to input spatial data to a computer. Graphical user interfaces (GUI) and CAD systems allow the user to control and provide data to the computer using physical gestures by moving a hand-held mouse or similar device across the surface of the physical desktop and activating switches on the mouse. Movements of the pointing device are echoed on the screen by movements of the pointer and other visual changes. Common gestures are point and click and drag and drop.

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve such disciplines as, ergonomics and psychology.

In computer science, human–computer interaction, and interaction design, direct manipulation is an approach to interfaces which involves continuous representation of objects of interest together with rapid, reversible, and incremental actions and feedback. As opposed to other interaction styles, for example, the command language, the intention of direct manipulation is to allow a user to manipulate objects presented to them, using actions that correspond at least loosely to manipulation of physical objects. An example of direct manipulation is resizing a graphical shape, such as a rectangle, by dragging its corners or edges with a mouse.
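
The rectangle example can be sketched as follows, assuming a hypothetical `Rect` type and `drag_corner` function in which each drag event moves one corner by a small delta. Applying the opposite delta undoes the step, which reflects the incremental and reversible character described above.

```python
from dataclasses import dataclass

CORNERS = {"top_left", "top_right", "bottom_left", "bottom_right"}


@dataclass
class Rect:
    """Axis-aligned rectangle in screen coordinates (y grows downward)."""
    left: float
    top: float
    right: float
    bottom: float


def drag_corner(rect: Rect, corner: str, dx: float, dy: float,
                min_size: float = 1.0) -> Rect:
    """Return a new Rect with the named corner moved by (dx, dy).

    The opposite corner stays fixed, and the rectangle is never allowed
    to shrink below min_size in either dimension.
    """
    if corner not in CORNERS:
        raise ValueError(f"unknown corner: {corner}")
    left, top, right, bottom = rect.left, rect.top, rect.right, rect.bottom
    if "left" in corner:
        left = min(left + dx, right - min_size)
    else:
        right = max(right + dx, left + min_size)
    if "top" in corner:
        top = min(top + dy, bottom - min_size)
    else:
        bottom = max(bottom + dy, top + min_size)
    return Rect(left, top, right, bottom)


shape = Rect(0, 0, 100, 50)
bigger = drag_corner(shape, "bottom_right", 20, 10)     # grow the shape
restored = drag_corner(bigger, "bottom_right", -20, -10)  # reverse the drag
```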

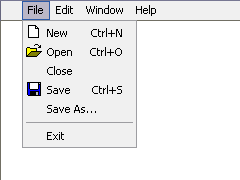

In user interface design, a menu is a list of options presented to the user.

ISO 9241 is a multi-part standard from the International Organization for Standardization (ISO) covering ergonomics of human-system interaction and related, human-centered design processes. It is managed by the ISO Technical Committee 159. It was originally titled Ergonomic requirements for office work with visual display terminals (VDTs). From 2006 onwards, the standards were retitled to the more generic Ergonomics of Human System Interaction.

In human–computer interaction, WIMP stands for "windows, icons, menus, pointer", denoting a style of interaction using these elements of the user interface. Other expansions are sometimes used, such as substituting "mouse" and "mice" for menus, or "pull-down menu" and "pointing" for pointer.

Across the many fields concerned with interactivity, including information science, computer science, human–computer interaction, communication, and industrial design, there is little agreement over the meaning of the term "interactivity", but most definitions are related to interaction between users and computers and other machines through a user interface. Interactivity can, however, also refer to interaction between people. It nevertheless usually refers to interaction between people and computers – and sometimes to interaction between computers – through software, hardware, and networks.

The following outline is provided as an overview of and topical guide to human–computer interaction:

GOMS is a specialized human information processor model for human–computer interaction observation that describes a user's cognitive structure in terms of four components. In the book The Psychology of Human-Computer Interaction, written in 1983 by Stuart K. Card, Thomas P. Moran and Allen Newell, the authors introduce: "a set of Goals, a set of Operators, a set of Methods for achieving the goals, and a set of Selection rules for choosing among competing methods for goals." GOMS is a method widely used by usability specialists for computer system designers because it produces quantitative and qualitative predictions of how people will use a proposed system.
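
To make the four components concrete, here is a minimal sketch of how a GOMS-style analysis might yield a quantitative prediction. The goal ("delete a word"), the two methods, the selection rule, and all operator durations are assumptions invented for illustration; they are not measured values and are not taken from the book.

```python
from typing import Dict, List

# Operators: elementary actions with ASSUMED durations in seconds.
# These numbers are illustrative placeholders, not empirical data.
OPERATOR_SECONDS: Dict[str, float] = {
    "think": 1.25,
    "keystroke": 0.25,
    "home_hands": 0.5,   # move a hand between keyboard and mouse
    "point": 1.0,
    "click": 0.25,
}

# Methods: alternative operator sequences for the goal "delete a word".
METHODS: Dict[str, List[str]] = {
    "shortcut": ["think", "keystroke", "keystroke"],
    "menu": ["think", "home_hands", "point", "click", "point", "click"],
}


def select_method(context: Dict[str, bool]) -> str:
    """Selection rule (hypothetical): use the shortcut only if it is known."""
    return "shortcut" if context.get("knows_shortcut", False) else "menu"


def predicted_seconds(method: str) -> float:
    """Predicted task time: the sum of the method's operator durations."""
    return sum(OPERATOR_SECONDS[op] for op in METHODS[method])


for user in ({"knows_shortcut": True}, {"knows_shortcut": False}):
    method = select_method(user)
    print(method, predicted_seconds(method))
```

Under these assumed durations the shortcut method is predicted to be faster, which is the kind of comparison such a model is meant to support.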

A tangible user interface (TUI) is a user interface in which a person interacts with digital information through the physical environment. The initial name was Graspable User Interface, which is no longer used. The purpose of TUI development is to empower collaboration, learning, and design by giving physical forms to digital information, thus taking advantage of the human ability to grasp and manipulate physical objects and materials.

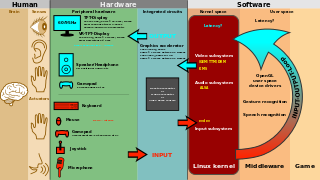

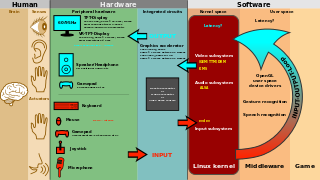

Multimodal interaction provides the user with multiple modes of interacting with a system. A multimodal interface provides several distinct tools for input and output of data.

In human–computer interaction, a cursor is an indicator used to show the current position on a computer monitor or other display device that will respond to input, such as a text cursor or a mouse pointer.

User interface (UI) design or user interface engineering is the design of user interfaces for machines and software, such as computers, home appliances, mobile devices, and other electronic devices, with the focus on maximizing usability and the user experience. In computer or software design, user interface (UI) design primarily focuses on information architecture. It is the process of building interfaces that clearly communicate to the user what's important. UI design refers to graphical user interfaces and other forms of interface design. The goal of user interface design is to make the user's interaction as simple and efficient as possible, in terms of accomplishing user goals. User-centered design is typically accomplished through the execution of modern design thinking which involves empathizing with the target audience, defining a problem statement, ideating potential solutions, prototyping wireframes, and testing prototypes in order to refine final interface mockups.

A context-sensitive user interface offers the user options based on the state of the active program. Context sensitivity is ubiquitous in current graphical user interfaces, often in context menus.
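
A context menu that depends on program state might be sketched as below. The `EditorState` fields and the menu labels are hypothetical and chosen only to show the idea of deriving the offered options from the current state.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EditorState:
    """Hypothetical snapshot of the active program's state."""
    has_selection: bool
    clipboard_has_content: bool
    read_only: bool = False


def context_menu(state: EditorState) -> List[str]:
    """Return only the menu items that make sense in the given state."""
    items: List[str] = []
    if state.has_selection:
        items.append("Copy")
        if not state.read_only:
            items.append("Cut")
    if state.clipboard_has_content and not state.read_only:
        items.append("Paste")
    items.append("Select All")  # always available in this sketch
    return items


print(context_menu(EditorState(has_selection=True, clipboard_has_content=True)))
print(context_menu(EditorState(has_selection=False, clipboard_has_content=False)))
```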

In computing, 3D interaction is a form of human-machine interaction where users are able to move and perform interaction in 3D space. Both human and machine process information where the physical position of elements in the 3D space is relevant.

In computing, input/output is the communication between an information processing system, such as a computer, and the outside world, such as another computer system, peripherals, or a human operator. Inputs are the signals or data received by the system and outputs are the signals or data sent from it. The term can also be used as part of an action; to "perform I/O" is to perform an input or output operation.

Human–computer interaction (HCI) is research in the design and the use of computer technology, which focuses on the interfaces between people (users) and computers. HCI researchers observe the ways humans interact with computers and design technologies that allow humans to interact with computers in novel ways. A device that allows interaction between a human being and a computer is known as a "Human-computer Interface (HCI)".