In mathematics, the factorial of a non-negative integer , denoted by , is the product of all positive integers less than or equal to . The factorial of also equals the product of with the next smaller factorial:

In number theory, integer factorization is the decomposition of a positive integer into a product of integers. Every positive integer greater than 1 is either the product of two or more integer factors, in which case it is called a composite number, or it is not, in which case it is called a prime number. For example, 15 is a composite number because 15 = 3 · 5, but 7 is a prime number because it cannot be decomposed in this way. If one of the factors is composite, it can in turn be written as a product of smaller factors, for example 60 = 3 · 20 = 3 · (5 · 4). Continuing this process until every factor is prime is called prime factorization; the result is always unique up to the order of the factors by the prime factorization theorem.

In mathematics, a Mersenne prime is a prime number that is one less than a power of two. That is, it is a prime number of the form Mn = 2n − 1 for some integer n. They are named after Marin Mersenne, a French Minim friar, who studied them in the early 17th century. If n is a composite number then so is 2n − 1. Therefore, an equivalent definition of the Mersenne primes is that they are the prime numbers of the form Mp = 2p − 1 for some prime p.

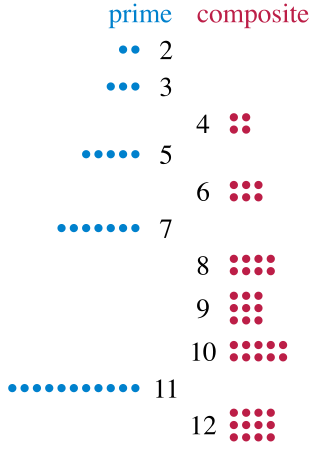

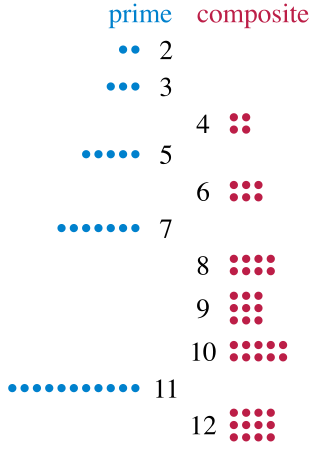

A prime number is a natural number greater than 1 that is not a product of two smaller natural numbers. A natural number greater than 1 that is not prime is called a composite number. For example, 5 is prime because the only ways of writing it as a product, 1 × 5 or 5 × 1, involve 5 itself. However, 4 is composite because it is a product (2 × 2) in which both numbers are smaller than 4. Primes are central in number theory because of the fundamental theorem of arithmetic: every natural number greater than 1 is either a prime itself or can be factorized as a product of primes that is unique up to their order.

In mathematics, a square-free integer (or squarefree integer) is an integer which is divisible by no square number other than 1. That is, its prime factorization has exactly one factor for each prime that appears in it. For example, 10 = 2 ⋅ 5 is square-free, but 18 = 2 ⋅ 3 ⋅ 3 is not, because 18 is divisible by 9 = 32. The smallest positive square-free numbers are

In number theory, Fermat's little theorem states that if p is a prime number, then for any integer a, the number ap − a is an integer multiple of p. In the notation of modular arithmetic, this is expressed as

In mathematics, a Fermat number, named after Pierre de Fermat, the first known to have studied them, is a positive integer of the form

In number theory, the general number field sieve (GNFS) is the most efficient classical algorithm known for factoring integers larger than 10100. Heuristically, its complexity for factoring an integer n (consisting of ⌊log2n⌋ + 1 bits) is of the form

The Fermat primality test is a probabilistic test to determine whether a number is a probable prime.

A primality test is an algorithm for determining whether an input number is prime. Among other fields of mathematics, it is used for cryptography. Unlike integer factorization, primality tests do not generally give prime factors, only stating whether the input number is prime or not. Factorization is thought to be a computationally difficult problem, whereas primality testing is comparatively easy. Some primality tests prove that a number is prime, while others like Miller–Rabin prove that a number is composite. Therefore, the latter might more accurately be called compositeness tests instead of primality tests.

In mathematics, a square number or perfect square is an integer that is the square of an integer; in other words, it is the product of some integer with itself. For example, 9 is a square number, since it equals 32 and can be written as 3 × 3.

The AKS primality test is a deterministic primality-proving algorithm created and published by Manindra Agrawal, Neeraj Kayal, and Nitin Saxena, computer scientists at the Indian Institute of Technology Kanpur, on August 6, 2002, in an article titled "PRIMES is in P". The algorithm was the first one which is able to determine in polynomial time, whether a given number is prime or composite and this without relying on mathematical conjectures such as the generalized Riemann hypothesis. The proof is also notable for not relying on the field of analysis. In 2006 the authors received both the Gödel Prize and Fulkerson Prize for their work.

The quadratic sieve algorithm (QS) is an integer factorization algorithm and, in practice, the second-fastest method known. It is still the fastest for integers under 100 decimal digits or so, and is considerably simpler than the number field sieve. It is a general-purpose factorization algorithm, meaning that its running time depends solely on the size of the integer to be factored, and not on special structure or properties. It was invented by Carl Pomerance in 1981 as an improvement to Schroeppel's linear sieve.

In number theory, a branch of mathematics, the special number field sieve (SNFS) is a special-purpose integer factorization algorithm. The general number field sieve (GNFS) was derived from it.

In number theory, an n-smooth (or n-friable) number is an integer whose prime factors are all less than or equal to n. For example, a 7-smooth number is a number whose every prime factor is at most 7, so 49 = 72 and 15750 = 2 × 32 × 53 × 7 are both 7-smooth, while 11 and 702 = 2 × 33 × 13 are not 7-smooth. The term seems to have been coined by Leonard Adleman. Smooth numbers are especially important in cryptography, which relies on factorization of integers. The 2-smooth numbers are just the powers of 2, while 5-smooth numbers are known as regular numbers.

In computational number theory, a variety of algorithms make it possible to generate prime numbers efficiently. These are used in various applications, for example hashing, public-key cryptography, and search of prime factors in large numbers.

In mathematics, the rational sieve is a general algorithm for factoring integers into prime factors. It is a special case of the general number field sieve. While it is less efficient than the general algorithm, it is conceptually simpler. It serves as a helpful first step in understanding how the general number field sieve works.

Wheel factorization is a method for generating a sequence of natural numbers by repeated additions, as determined by a number of the first few primes, so that the generated numbers are coprime with these primes, by construction.

In mathematics, elliptic curve primality testing techniques, or elliptic curve primality proving (ECPP), are among the quickest and most widely used methods in primality proving. It is an idea put forward by Shafi Goldwasser and Joe Kilian in 1986 and turned into an algorithm by A. O. L. Atkin the same year. The algorithm was altered and improved by several collaborators subsequently, and notably by Atkin and François Morain, in 1993. The concept of using elliptic curves in factorization had been developed by H. W. Lenstra in 1985, and the implications for its use in primality testing followed quickly.