In computing, a database is an organized collection of data or a type of data store based on the use of a database management system (DBMS), the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an application associated with the database.
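
As a minimal sketch of an application interacting with a DBMS to capture and analyze data, the following uses Python's standard `sqlite3` module with an in-memory database. The table name `readings`, its columns, and the sample rows are illustrative assumptions, not part of any particular system.

```python
import sqlite3


def build_demo_database():
    """Create an in-memory database and capture a few illustrative rows."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical schema: one row per sensor reading.
    conn.execute("CREATE TABLE readings (sensor TEXT, value REAL)")
    conn.executemany(
        "INSERT INTO readings (sensor, value) VALUES (?, ?)",
        [("a", 1.0), ("a", 3.0), ("b", 10.0)],
    )
    conn.commit()
    return conn


def average_by_sensor(conn):
    """Ask the DBMS to analyze the stored data: mean value per sensor."""
    rows = conn.execute(
        "SELECT sensor, AVG(value) FROM readings GROUP BY sensor ORDER BY sensor"
    ).fetchall()
    return dict(rows)


conn = build_demo_database()
averages = average_by_sensor(conn)
conn.close()
```

Here the application never touches the stored data directly; it only sends statements to the DBMS, which is the division of labor the definition describes.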

Computerized batch processing is a method of running software programs called jobs in batches automatically. While users are required to submit the jobs, no other interaction by the user is required to process the batch. Batches may automatically be run at scheduled times as well as being run contingent on the availability of computer resources.
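
The submit-then-run pattern can be sketched as a small in-process queue. This is an illustration only: the `BatchQueue` class and the job names are hypothetical, and a real batch system would add scheduling by time and by resource availability.

```python
from collections import deque


class BatchQueue:
    """Hypothetical job queue: users submit jobs, then the batch runs unattended."""

    def __init__(self):
        self._jobs = deque()

    def submit(self, name, func, *args):
        """Record a job for later execution; nothing runs yet."""
        self._jobs.append((name, func, args))

    def run_all(self):
        """Run every queued job in submission order without user interaction."""
        results = {}
        while self._jobs:
            name, func, args = self._jobs.popleft()
            try:
                results[name] = ("ok", func(*args))
            except Exception as exc:
                # A failed job is recorded and the batch continues.
                results[name] = ("failed", repr(exc))
        return results


queue = BatchQueue()
queue.submit("sum", sum, [1, 2, 3])
queue.submit("upper", str.upper, "report")
queue.submit("bad", int, "not a number")
results = queue.run_all()
```

Recording failures instead of stopping is one possible design choice; it lets the remaining jobs complete when no operator is watching.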

An embedded system is a computer system—a combination of a computer processor, computer memory, and input/output peripheral devices—that has a dedicated function within a larger mechanical or electronic system. It is embedded as part of a complete device often including electrical or electronic hardware and mechanical parts. Because an embedded system typically controls physical operations of the machine that it is embedded within, it often has real-time computing constraints. Embedded systems control many devices in common use. In 2009, it was estimated that ninety-eight percent of all microprocessors manufactured were used in embedded systems.

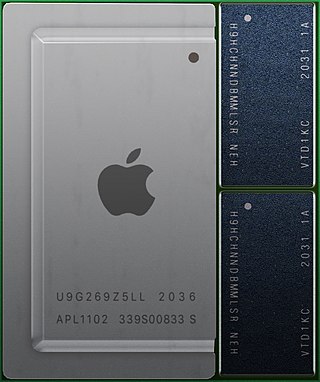

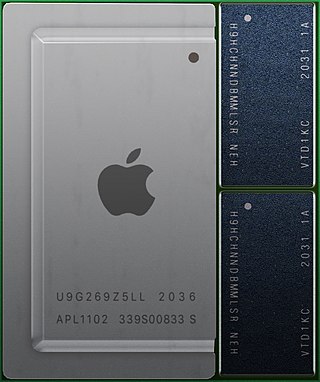

A system on a chip or system-on-chip (SoC) is an integrated circuit that integrates most or all components of a computer or other electronic system. These components almost always include an on-chip central processing unit (CPU), memory interfaces, input/output devices and interfaces, and secondary storage interfaces, often alongside other components such as radio modems and a graphics processing unit (GPU) – all on a single substrate or microchip. SoCs may contain digital and also analog, mixed-signal and often radio frequency signal processing functions.

In computer science, algorithmic efficiency is a property of an algorithm which relates to the amount of computational resources used by the algorithm. An algorithm must be analyzed to determine its resource usage, and the efficiency of an algorithm can be measured based on the usage of different resources. Algorithmic efficiency can be thought of as analogous to engineering productivity for a repeating or continuous process.
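
One way to make resource usage concrete is to count the comparisons two algorithms need for the same task. The sketch below assumes comparison count as the resource of interest and uses linear and binary search as examples; the function names are chosen for illustration.

```python
def linear_search_steps(items, target):
    """Return the number of elements examined by a left-to-right scan."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(items, target):
    """Return the number of probes made by binary search on a sorted list."""
    lo, hi = 0, len(items) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


data = list(range(1024))  # sorted input, required by binary search
linear_steps = linear_search_steps(data, 1023)
binary_steps = binary_search_steps(data, 1023)
```

For the last element of a 1024-item sorted list, the scan examines every element while binary search needs only about log2(1024) probes, which illustrates how the same result can cost very different amounts of work.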

Computer science is the study of the theoretical foundations of information and computation and their implementation and application in computer systems. One well-known subject classification system for computer science is the ACM Computing Classification System, devised by the Association for Computing Machinery.

The following outline is provided as an overview of and topical guide to software engineering:

Bio-inspired computing, short for biologically inspired computing, is a field of study which seeks to solve computer science problems using models of biology. It relates to connectionism, social behavior, and emergence. Within computer science, bio-inspired computing relates to artificial intelligence and machine learning. Bio-inspired computing is a major subset of natural computation.

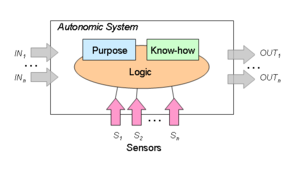

Self-management is the process by which computer systems manage their own operation without human intervention. Self-management technologies are expected to pervade the next generation of network management systems.

Autonomic networking follows the concept of autonomic computing, an initiative started by IBM in 2001. Its ultimate aim is to create self-managing networks to overcome the rapidly growing complexity of the Internet and other networks and to enable their further growth, far beyond their present size.

Employee scheduling software automates the process of creating and maintaining a schedule. Automating the scheduling of employees increases productivity and allows organizations with hourly workforces to re-allocate resources to non-scheduling activities. Such software usually tracks vacation time, sick time, and compensation time, and alerts users when there are conflicts. As scheduling data accumulates over time, it may be extracted for payroll or to analyze past activity. Although employee scheduling software may or may not make optimization decisions, it does manage and coordinate the tasks. Today's employee scheduling software often includes mobile applications, which further increase scheduling productivity and eliminate inefficient scheduling steps. It may also include functionality such as applicant tracking and on-boarding, time and attendance, and automatic limits on overtime. Such functionality can help organizations with issues like employee retention, compliance with labor laws, and other workforce management challenges.
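
The conflict alerts mentioned above can be sketched as an overlap check between shifts assigned to the same employee. The `Shift` type, the use of whole hours, and the sample names are assumptions made for illustration; real products track far more (time off, overtime limits, and so on).

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Shift:
    """Hypothetical shift record; start and end are hours on the same day."""
    employee: str
    start: int
    end: int


def find_conflicts(shifts):
    """Return pairs of shifts where one employee is booked twice at once."""
    by_employee = {}
    for shift in shifts:
        by_employee.setdefault(shift.employee, []).append(shift)

    conflicts = []
    for own_shifts in by_employee.values():
        for a, b in combinations(own_shifts, 2):
            # Two half-open intervals overlap when each starts before the other ends.
            if a.start < b.end and b.start < a.end:
                conflicts.append((a, b))
    return conflicts


shifts = [
    Shift("ana", 9, 17),
    Shift("ana", 16, 20),  # overlaps ana's first shift by one hour
    Shift("ben", 9, 17),
]
conflicts = find_conflicts(shifts)
```

Comparing every pair of an employee's shifts is quadratic in the number of shifts per person, which is acceptable for a sketch; a sorted sweep would scale better.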

Accounting software is a computer program that maintains account books on computers, including recording transactions and account balances. It may depend on virtual thinking. Depending on the purpose, the software can manage budgets, perform accounting tasks for multiple currencies, perform payroll and customer relationship management, and prepare financial reporting. Work to implement accounting functions on computers goes back to the earliest days of electronic data processing. Over time, accounting software has evolved from supporting basic accounting operations to performing real-time accounting and supporting financial processing and reporting. Cloud accounting software was first introduced in 2011, and it allowed all accounting functions to be performed through the internet.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software.

Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. Large clouds often have functions distributed over multiple locations, each of which is a data center. Cloud computing relies on sharing of resources to achieve coherence and typically uses a pay-as-you-go model, which can help in reducing capital expenses but may also lead to unexpected operating expenses for users.

Lateral computing is a lateral thinking approach to solving computing problems. Lateral thinking was popularized by Edward de Bono. This thinking technique is applied to generate creative ideas and solve problems. Similarly, applying lateral-computing techniques to a problem can make it much easier to arrive at a computationally inexpensive, easy to implement, efficient, innovative or unconventional solution.

Techila Distributed Computing Engine is a commercial grid computing software product. It speeds up simulation, analysis and other computational applications by enabling scalability across the IT resources in the user's on-premises data center and in the user's own cloud account. Techila Distributed Computing Engine is developed and licensed by Techila Technologies Ltd, a privately held company headquartered in Tampere, Finland. The product is also available as an on-demand solution in Google Cloud Launcher, the online marketplace created and operated by Google. According to IDC, the solution enables organizations to create HPC infrastructure without the major capital investments and operating expenses required by new HPC hardware.

Federated architecture (FA) is a pattern in enterprise architecture that allows interoperability and information sharing between semi-autonomous de-centrally organized lines of business (LOBs), information technology systems and applications.

Policy-based management is a technology that can simplify the complex task of managing networks and distributed systems. Under this paradigm, an administrator can manage different aspects of a network or distributed system in a flexible and simplified manner by deploying a set of policies that govern its behaviour. Policies are technology-independent rules that aim to enhance the hard-coded functionality of managed devices by introducing interpreted logic that can be dynamically changed without modifying the underlying implementation. This allows for a certain degree of programmability without the need to interrupt the operation of either the managed system or the management system itself. Policy-based management can significantly increase the self-managing aspects of any distributed system or network, leading to the more autonomic behaviour demonstrated by autonomic computing systems.
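
The idea of interpreted rules that change at run time can be sketched as follows. The `PolicyEngine` class, the state keys `cpu_load` and `link_up`, and the action names are hypothetical and chosen only for illustration; they do not correspond to any specific policy framework.

```python
class PolicyEngine:
    """Hypothetical engine: policies are data, so they can change while running."""

    def __init__(self):
        self._policies = []

    def add_policy(self, name, condition, action):
        """Deploy a rule: when condition(state) is true, recommend action."""
        self._policies.append((name, condition, action))

    def remove_policy(self, name):
        """Withdraw a rule without touching the engine's own code."""
        self._policies = [p for p in self._policies if p[0] != name]

    def decide(self, state):
        """Interpret all deployed policies against the current device state."""
        return [action for _, condition, action in self._policies if condition(state)]


engine = PolicyEngine()
engine.add_policy("high-load", lambda s: s["cpu_load"] > 0.9, "throttle")

state = {"cpu_load": 0.95, "link_up": False}
before = engine.decide(state)

# An administrator deploys a new policy; decide() itself is unchanged.
engine.add_policy("link-down", lambda s: not s["link_up"], "reroute")
after = engine.decide(state)
```

The managed behaviour differs between the two calls even though the engine's implementation was never modified or restarted, which is the kind of programmability the paragraph describes.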

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.