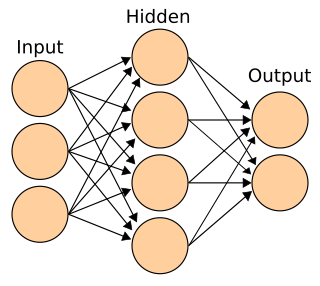

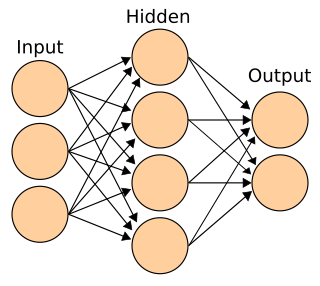

Neural networks are a branch of machine learning models that are built using the principles of neuronal organization found in the biological neural networks constituting animal brains.

Connectionism is the name of an approach to the study of human mental processes and cognition that utilizes mathematical models known as connectionist networks or artificial neural networks. Connectionism has had many 'waves' since its beginnings.

In computer science, evolutionary computation is a family of algorithms for global optimization inspired by biological evolution, and the subfield of artificial intelligence and soft computing studying these algorithms. In technical terms, they are a family of population-based trial and error problem solvers with a metaheuristic or stochastic optimization character.
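As a rough illustration of the "population-based trial and error" idea, the following minimal sketch evolves bit strings toward a higher fitness score. Everything in it is an assumption made for illustration, not a canonical algorithm: the function name `evolve`, the truncation selection that keeps the better half, the per-bit mutation, and all default parameter values.

```python
import random


def evolve(fitness, genome_length=20, population_size=30,
           generations=50, mutation_rate=0.05, seed=0):
    """Toy evolutionary loop (hypothetical helper): select, mutate, repeat.

    `fitness` maps a list of 0/1 genes to a number; higher is better.
    Returns the best genome found and the best fitness per generation.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    best_history = []
    for _ in range(generations):
        # Rank candidates from best to worst.
        population.sort(key=fitness, reverse=True)
        best_history.append(fitness(population[0]))
        # Selection: the better half survives unchanged.
        survivors = population[:population_size // 2]
        # Variation: refill the population with mutated copies of survivors.
        children = []
        while len(survivors) + len(children) < population_size:
            parent = rng.choice(survivors)
            child = [1 - gene if rng.random() < mutation_rate else gene
                     for gene in parent]
            children.append(child)
        population = survivors + children
    best = max(population, key=fitness)
    return best, best_history


# Example: maximise the number of ones in the genome ("OneMax").
best, history = evolve(sum)
```

Because the surviving half is carried over unchanged, the best fitness recorded in `history` never decreases from one generation to the next. Practical evolutionary algorithms usually add recombination (crossover) and more careful selection schemes.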

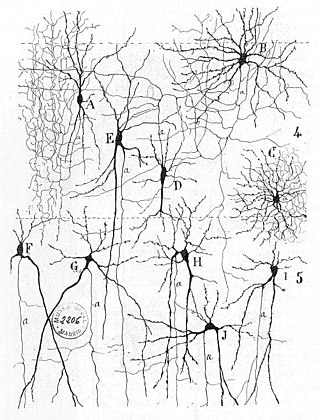

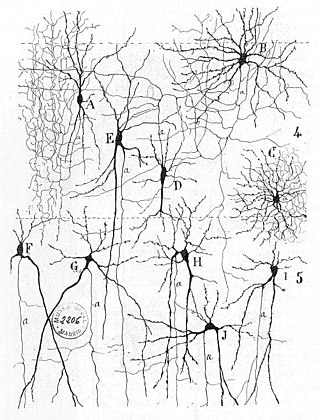

Computational neuroscience is a branch of neuroscience which employs mathematics, computer science, theoretical analysis and abstractions of the brain to understand the principles that govern the development, structure, physiology and cognitive abilities of the nervous system.

Neuromorphic computing is an approach to computing that is inspired by the structure and function of the human brain. A neuromorphic computer or chip is any device that uses physical artificial neurons to do computations. In recent times, the term neuromorphic has been used to describe analog, digital, mixed-mode analog/digital VLSI, and software systems that implement models of neural systems. At the hardware level, neuromorphic computing can be implemented with oxide-based memristors, spintronic memories, threshold switches, and transistors, among other devices. Software-based neuromorphic systems built on spiking neural networks can be trained with error backpropagation, e.g., using Python-based frameworks such as snnTorch, or with canonical learning rules from the biological learning literature, e.g., using BindsNET.

The expression computational intelligence (CI) usually refers to the ability of a computer to learn a specific task from data or experimental observation. Even though it is commonly considered a synonym of soft computing, there is still no commonly accepted definition of computational intelligence.

A cognitive architecture refers to both a theory about the structure of the human mind and to a computational instantiation of such a theory used in the fields of artificial intelligence (AI) and computational cognitive science. The formalized models can be used to further refine a comprehensive theory of cognition and as a useful artificial intelligence program. Successful cognitive architectures include ACT-R and SOAR. The research on cognitive architectures as software instantiation of cognitive theories was initiated by Allen Newell in 1990.

A neural network, also called a neuronal network, is an interconnected population of neurons. Biological neural networks are studied to understand the organization and functioning of nervous systems.

An artificial brain is software and hardware with cognitive abilities similar to those of the animal or human brain.

A wetware computer is an organic computer composed of organic material ("wetware") such as living neurons. Wetware computers composed of neurons differ from conventional computers in that they use biological materials, and they offer the possibility of substantially more energy-efficient computing. While the wetware computer is still largely conceptual, there has been limited success with construction and prototyping, which has served as a proof of concept for realistic application to computing in the future. The most notable prototypes have stemmed from the research of biological engineer William Ditto during his time at the Georgia Institute of Technology. His construction in 1999 of a simple neurocomputer capable of basic addition from leech neurons was a significant milestone for the concept. This research was a primary example driving interest in creating these artificially constructed, but still organic, brains.

Neuroinformatics is the field that combines informatics and neuroscience. It is concerned with neuroscience data and with information processing by artificial neural networks. There are three main directions in which neuroinformatics is applied:

The following outline is provided as an overview of and topical guide to artificial intelligence:

Spiking neural networks (SNNs) are artificial neural networks (ANNs) that more closely mimic natural neural networks. In addition to neuronal and synaptic state, SNNs incorporate the concept of time into their operating model. The idea is that neurons in an SNN do not transmit information at each propagation cycle, but only when a membrane potential (an intrinsic quality of the neuron related to its membrane electrical charge) reaches a specific value, called the threshold. When the membrane potential reaches the threshold, the neuron fires and generates a signal that travels to other neurons, which in turn increase or decrease their potentials in response to this signal. A neuron model that fires at the moment of threshold crossing is also called a spiking neuron model.
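The threshold-and-fire behaviour described above can be sketched with a single leaky integrate-and-fire neuron in discrete time. This is a minimal sketch under stated assumptions: the function name `simulate_lif`, the multiplicative `leak` factor, the reset-to-zero rule, and the default values are illustrative choices and are not taken from any particular SNN library.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron (hypothetical helper).

    `input_current` is a sequence with one input value per time step.
    Returns (spikes, potentials): a 0/1 spike flag per step and the
    membrane potential after each step (after any reset).
    """
    potential = reset
    spikes, potentials = [], []
    for current in input_current:
        # Integrate: the old potential decays by `leak`, then input is added.
        potential = leak * potential + current
        if potential >= threshold:
            # Threshold reached: emit a spike and reset the potential.
            spikes.append(1)
            potential = reset
        else:
            # Below threshold: the neuron stays silent at this step.
            spikes.append(0)
        potentials.append(potential)
    return spikes, potentials


# With no leak (leak=1.0), a constant input of 0.5 crosses the
# threshold of 1.0 on every second step.
spikes, potentials = simulate_lif([0.5] * 6, leak=1.0)
```

In a network, each emitted spike would be weighted and added to the input of downstream neurons, which is how a signal raises or lowers their potentials.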

Brain simulation is the concept of creating a functioning computer model of a brain or part of a brain. Brain simulation projects intend to contribute to a complete understanding of the brain, and eventually also assist the process of treating and diagnosing brain diseases.

Natural computing, also called natural computation, is a term introduced to encompass three classes of methods: 1) those that take inspiration from nature for the development of novel problem-solving techniques; 2) those that are based on the use of computers to synthesize natural phenomena; and 3) those that employ natural materials to compute. The main fields of research that compose these three branches are artificial neural networks, evolutionary algorithms, swarm intelligence, artificial immune systems, fractal geometry, artificial life, DNA computing, and quantum computing, among others.

SyNAPSE is a DARPA program that aims to develop electronic neuromorphic machine technology, an attempt to build a new kind of cognitive computer with form, function, and architecture similar to the mammalian brain. Such artificial brains would be used in robots whose intelligence would scale with the size of the neural system in terms of the total number of neurons and synapses and their connectivity.

Kwabena Adu Boahen is a Ghanaian-born Professor of Bioengineering and Electrical Engineering at Stanford University. He previously taught at the University of Pennsylvania.

A cognitive computer is a computer that hardwires artificial intelligence and machine learning algorithms into an integrated circuit that closely reproduces the behavior of the human brain. It generally adopts a neuromorphic engineering approach. Synonyms include neuromorphic chip and cognitive chip.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

Soft computing is an umbrella term for algorithms that produce approximate solutions to high-level problems in computer science that cannot be solved exactly. Traditional hard-computing algorithms, by contrast, rely heavily on concrete data and mathematical models to produce solutions. The term soft computing was coined in the late 20th century, a period in which research in three fields greatly shaped the area. The first is fuzzy logic, a computational paradigm that accommodates uncertainty in data by using degrees of truth rather than the rigid 0s and 1s of binary logic. The second is neural networks, which are computational models influenced by human brain functions. The third is evolutionary computation, a term for groups of algorithms that mimic natural processes such as evolution and natural selection.
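To make the idea of "levels of truth" concrete, here is a minimal sketch of a fuzzy membership function and the common min/max/complement operators. The triangular shape, the function names, and the temperature and humidity figures are all assumptions chosen for illustration; fuzzy systems use many other membership shapes and operator definitions.

```python
def triangular(x, left, peak, right):
    """Degree (0.0 to 1.0) to which x belongs to a triangular fuzzy set.

    Membership is 0 at or outside [left, right], 1 at `peak`,
    and changes linearly in between. Requires left < peak < right.
    """
    if x <= left or x >= right:
        return 0.0
    if x == peak:
        return 1.0
    if x < peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzy_and(a, b):
    """Fuzzy conjunction, using the common minimum operator."""
    return min(a, b)


def fuzzy_or(a, b):
    """Fuzzy disjunction, using the common maximum operator."""
    return max(a, b)


def fuzzy_not(a):
    """Fuzzy negation, using the standard complement."""
    return 1.0 - a


# Hypothetical fuzzy sets: "warm" peaks at 30 degrees, "humid" at 80 percent.
warm = triangular(25, 20, 30, 40)     # partially warm
humid = triangular(80, 50, 80, 100)   # fully humid
muggy = fuzzy_and(warm, humid)        # limited by the weaker condition
```

Here 25 degrees is neither fully "warm" nor fully "not warm": it receives a membership of 0.5, which a binary predicate could not express.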