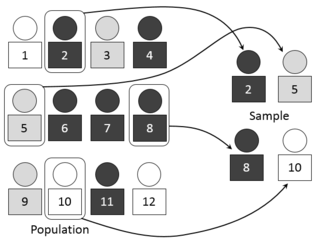

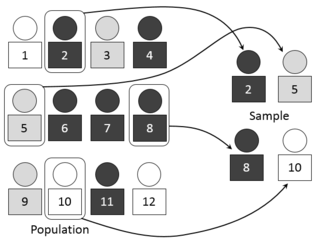

In statistics, sampling bias is a bias in which a sample is collected in such a way that some members of the intended population have a lower or higher sampling probability than others. It results in a biased sample, a non-random sample of a population in which not all individuals, or instances, were equally likely to have been selected. If this is not accounted for, results can be erroneously attributed to the phenomenon under study rather than to the method of sampling.
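
The effect of unequal sampling probabilities can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the population (the integers 1 to 100), the size-proportional sampling scheme, and the helper names `draw` and `mean` are all hypothetical. It compares a naive mean from a biased sample with an inverse-probability-weighted mean, one common way of accounting for a known sampling method.

```python
import random


def draw(population, weights, n, seed):
    """Draw n values with replacement; weights give relative sampling probabilities."""
    rng = random.Random(seed)
    return rng.choices(population, weights=weights, k=n)


def mean(values):
    return sum(values) / len(values)


# Hypothetical population: the integers 1..100, whose true mean is 50.5.
population = list(range(1, 101))
true_mean = mean(population)

# Fair scheme: every member is equally likely to be selected.
equal_weights = [1.0] * len(population)
# Biased scheme (assumption): a member's selection probability is proportional to its value.
size_weights = [float(v) for v in population]

fair_sample = draw(population, equal_weights, 10_000, seed=0)
biased_sample = draw(population, size_weights, 10_000, seed=1)

# Naive mean of the biased sample; its expectation is sum(v^2) / sum(v) = 67, not 50.5.
naive_estimate = mean(biased_sample)

# Inverse-probability weighting: each drawn value v is weighted by 1/v,
# so the weighted mean sum(v * 1/v) / sum(1/v) reduces to n / sum(1/v).
corrected_estimate = len(biased_sample) / sum(1.0 / v for v in biased_sample)

print(f"true mean:           {true_mean:.2f}")
print(f"fair sample mean:    {mean(fair_sample):.2f}")
print(f"biased sample mean:  {naive_estimate:.2f}")
print(f"reweighted estimate: {corrected_estimate:.2f}")
```

The correction works here only because the sampling probabilities are known exactly; in practice they usually have to be estimated or modeled.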

Bias is disproportionate weight in favor of or against an idea or thing, usually in a way that is closed-minded, prejudicial, or unfair. Biases can be innate or learned. People may develop biases for or against an individual, a group, or a belief. In science and engineering, a bias is a systematic error. Statistical bias results from an unfair sampling of a population, or from an estimation process that does not give accurate results on average.
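
A standard example of an estimation process that is inaccurate on average is the variance estimator that divides by the sample size n rather than n - 1. The sketch below is a minimal illustration, assuming hypothetical choices of sample size, trial count, and a standard normal population (true variance 1); it averages both estimators over many small samples.

```python
import random
import statistics

rng = random.Random(0)

SAMPLE_SIZE = 5    # assumption: small samples make the bias easy to see
TRIALS = 20_000    # assumption: enough repetitions for stable averages

uncorrected = []   # divides by n (statistics.pvariance applied to a sample)
corrected = []     # divides by n - 1 (statistics.variance)

for _ in range(TRIALS):
    sample = [rng.gauss(0.0, 1.0) for _ in range(SAMPLE_SIZE)]
    uncorrected.append(statistics.pvariance(sample))
    corrected.append(statistics.variance(sample))

# In expectation the uncorrected estimator gives (n - 1) / n = 0.8 of the true
# variance, while the corrected estimator gives the true variance of 1.0.
avg_uncorrected = statistics.fmean(uncorrected)
avg_corrected = statistics.fmean(corrected)

print(f"average uncorrected estimate: {avg_uncorrected:.3f}")
print(f"average corrected estimate:   {avg_corrected:.3f}")
```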

Epidemiology is the study and analysis of the distribution, patterns and determinants of health and disease conditions in defined populations.

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset of individuals from within a statistical population to estimate characteristics of the whole population. Statisticians attempt to collect samples that are representative of the population in question. Two advantages of sampling are lower cost and faster data collection than measuring the entire population.

In psychology, decision-making is regarded as the cognitive process resulting in the selection of a belief or a course of action among several alternative possibilities. Decision-making is the process of identifying and choosing alternatives based on the values, preferences and beliefs of the decision-maker. Every decision-making process produces a final choice, which may or may not prompt action.

Selection bias is the bias introduced by the selection of individuals, groups or data for analysis in such a way that proper randomization is not achieved, thereby failing to ensure that the sample obtained is representative of the population intended to be analyzed. It is sometimes referred to as the selection effect. The phrase "selection bias" most often refers to the distortion of a statistical analysis, resulting from the method of collecting samples. If the selection bias is not taken into account, then some conclusions of the study may be false.

Hindsight bias, also known as the knew-it-all-along phenomenon or creeping determinism, refers to the common tendency for people to perceive events that have already occurred as having been more predictable than they actually were before the events took place. As a result, people often believe, after an event has occurred, that they would have predicted, or perhaps even would have known with a high degree of certainty, what the outcome of the event would have been, before the event occurred. Hindsight bias may cause distortions of our memories of what we knew and/or believed before an event occurred, and is a significant source of overconfidence regarding our ability to predict the outcomes of future events. Examples of hindsight bias can be seen in the writings of historians describing outcomes of battles, physicians recalling clinical trials, and in judicial systems as individuals attribute responsibility on the basis of the supposed predictability of accidents.

In psychology, an attribution bias or attributional bias is a cognitive bias that refers to the systematic errors made when people evaluate or try to find reasons for their own and others' behaviors. People constantly make attributions regarding the cause of their own and others' behaviors; however, attributions do not always accurately reflect reality. Rather than operating as objective perceivers, people are prone to perceptual errors that lead to biased interpretations of their social world.

A self-serving bias is any cognitive or perceptual process that is distorted by the need to maintain and enhance self-esteem, or the tendency to perceive oneself in an overly favorable manner. It is the belief that individuals tend to ascribe success to their own abilities and efforts, but ascribe failure to external factors. When individuals reject the validity of negative feedback, focus on their strengths and achievements but overlook their faults and failures, or take more responsibility for their group's work than they give to other members, they are protecting their ego from threat and injury. These cognitive and perceptual tendencies perpetuate illusions and error, but they also serve the self's need for esteem. For example, a student who attributes earning a good grade on an exam to their own intelligence and preparation but attributes earning a poor grade to the teacher's poor teaching ability or unfair test questions might be exhibiting the self-serving bias. Studies have shown that similar attributions are made in various situations, such as the workplace, interpersonal relationships, sports, and consumer decisions.

The principal–agent problem, in political science and economics, occurs when one person or entity (the "agent") is able to make decisions and/or take actions on behalf of, or that impact, another person or entity (the "principal"). This dilemma exists in circumstances where agents are motivated to act in their own best interests, which are contrary to those of their principals, and is an example of moral hazard.

The Great Filter, in the context of the Fermi paradox, is whatever prevents non-living matter from developing, through abiogenesis and over time, into expanding, lasting life as measured by the Kardashev scale. The concept originates in Robin Hanson's argument that the failure to find any extraterrestrial civilizations in the observable universe implies that something may be wrong with one or more of the arguments from various scientific disciplines that the appearance of advanced intelligent life is probable. This observation is conceptualized in terms of a "Great Filter" which acts to reduce the great number of sites where intelligent life might arise to the tiny number of intelligent species with advanced civilizations actually observed. This probability threshold, which could lie behind us or in front of us, might work as a barrier to the evolution of intelligent life, or as a high probability of self-destruction. The main counter-intuitive conclusion of this observation is that the easier it was for life to evolve to our stage, the bleaker our future chances probably are.

Survivorship bias or survival bias is the logical error of concentrating on the people or things that made it past some selection process and overlooking those that did not, typically because of their lack of visibility. This can lead to false conclusions in several different ways. It is a form of selection bias.
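
A small simulation can show how looking only at survivors distorts a conclusion. The sketch below is a hypothetical illustration: the "funds", their normally distributed returns with a true mean of zero, and the closure threshold are all assumptions chosen for the example, not data from any real study.

```python
import random

rng = random.Random(42)

# Hypothetical setup: 10,000 funds whose annual returns are drawn from a
# normal distribution with mean 0 and standard deviation 10%.
all_returns = [rng.gauss(0.0, 0.10) for _ in range(10_000)]

# Assumption: funds returning -5% or worse are closed and drop out of the
# records, so only the remaining funds are visible to a later observer.
CLOSURE_THRESHOLD = -0.05
survivors = [r for r in all_returns if r > CLOSURE_THRESHOLD]


def mean(values):
    return sum(values) / len(values)


mean_all = mean(all_returns)
mean_survivors = mean(survivors)

print(f"funds started:          {len(all_returns)}")
print(f"funds still visible:    {len(survivors)}")
print(f"mean return, all funds: {mean_all:+.4f}")
print(f"mean return, survivors: {mean_survivors:+.4f}")
```

An observer who sees only the surviving funds would conclude that the typical fund earns a clearly positive return, even though the full set was generated with an average return of zero.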

The normalcy bias, or normality bias, is a belief people hold when there is a possibility of a disaster. It causes people to underestimate both the likelihood of a disaster and its possible effects, because people believe that things will always function the way they normally have functioned. This may result in situations where people fail to adequately prepare themselves for disasters, and on a larger scale, the failure of governments to include the populace in their disaster preparations. About 70% of people reportedly display normalcy bias in disasters.

Dual inheritance theory (DIT), also known as gene–culture coevolution or biocultural evolution, was developed in the 1960s through early 1980s to explain how human behavior is a product of two different and interacting evolutionary processes: genetic evolution and cultural evolution. Genes and culture continually interact in a feedback loop: changes in genes can lead to changes in culture which can then influence genetic selection, and vice versa. One of the theory's central claims is that culture evolves partly through a Darwinian selection process, which dual inheritance theorists often describe by analogy to genetic evolution.

Failure in the intelligence cycle, or intelligence failure, is the outcome of inadequacies within the intelligence cycle. The intelligence cycle itself consists of six steps that are constantly in motion. The six steps are: requirements, collection, processing and exploitation, analysis and production, dissemination and consumption, and feedback.

Failure is the state or condition of not meeting a desirable or intended objective, and may be viewed as the opposite of success. Product failure ranges from failure to sell the product to fracture of the product, in the worst cases leading to personal injury, the province of forensic engineering.

The concept known as rational irrationality was popularized by economist Bryan Caplan in 2001 to reconcile the widespread existence of irrational behavior with the assumption of rationality made by mainstream economics and game theory. The theory, along with its implications for democracy, was expanded upon by Caplan in his book The Myth of the Rational Voter.

An ERP system selection methodology is a formal process for selecting an enterprise resource planning (ERP) system. Existing methodologies include:

Cognitive bias mitigation is the prevention and reduction of the negative effects of cognitive biases – unconscious, automatic influences on human judgment and decision making that reliably produce reasoning errors.

Anthropic Bias: Observation Selection Effects in Science and Philosophy (2002) is a book by philosopher Nick Bostrom. Bostrom investigates how to reason when one suspects that evidence is biased by "observation selection effects", in other words, evidence that has been filtered by the precondition that there be some appropriately positioned observer to "have" the evidence. This conundrum is sometimes referred to as "the anthropic principle," "self-locating belief," or "indexical information". Concepts discussed include the self-sampling assumption and the self-indication assumption.