Related Research Articles

Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one's prior beliefs or values. People display this bias when they select information that supports their views while ignoring contrary information, or when they interpret ambiguous evidence as supporting their existing attitudes. The effect is strongest for desired outcomes, for emotionally charged issues, and for deeply entrenched beliefs. Most people cannot eliminate confirmation bias entirely, but they can manage it, for example through education and training in critical thinking skills.

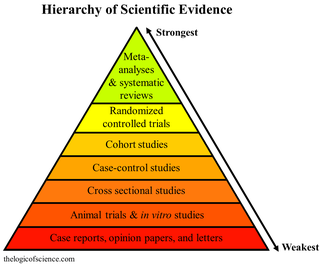

A meta-analysis is the statistical integration of evidence from multiple studies that address a common research question. By extracting effect sizes and measures of variance from each study, numerous outcomes can be combined to compute a summary effect size. Meta-analyses are commonly used to support research grant applications, treatment guidelines, and health policy. Meta-analytic outcomes are also often used to summarize a research area in an effort to better direct future work, which is why meta-analysis has become a core methodology of metascience. The evidence-based medicine literature regards meta-analytic results as the most trustworthy source of evidence. Besides estimating an unknown effect size, a meta-analysis can contrast results from different studies and identify patterns and sources of disagreement among them, as well as other relationships that emerge across multiple studies.
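
As a minimal sketch of how per-study effect sizes and variances can be combined into a summary effect, the Python below implements fixed-effect inverse-variance pooling, one common approach (random-effects models, which add a between-study variance term, are not shown). The function name `fixed_effect_pool`, the 1.96 multiplier for an approximate 95% interval, and the three study values are illustrative assumptions, not data from any real meta-analysis.

```python
import math


def fixed_effect_pool(effects, variances, z=1.96):
    """Fixed-effect (inverse-variance weighted) summary of study effect sizes.

    Returns the pooled effect, its standard error, and an approximate
    confidence interval (z = 1.96 gives roughly 95% under normality).
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("need matching, non-empty lists of effects and variances")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in variances]  # precise studies get larger weights
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    se = math.sqrt(1.0 / total_weight)  # standard error of the pooled estimate
    return pooled, se, (pooled - z * se, pooled + z * se)


# Hypothetical effect sizes (e.g. standardized mean differences) and their variances
effects = [0.30, 0.10, 0.45]
variances = [0.04, 0.01, 0.09]
pooled, se, (low, high) = fixed_effect_pool(effects, variances)
print(f"pooled = {pooled:.3f}, SE = {se:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

Weighting each study by the inverse of its variance means that larger, more precise studies pull the summary estimate toward their own result; with the illustrative numbers above, the second study (variance 0.01) dominates.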

A randomized controlled trial (RCT) is a form of scientific experiment that controls for factors not under direct experimental control by randomly assigning participants to the groups being compared. Examples of RCTs are clinical trials that compare the effects of drugs, surgical techniques, medical devices, diagnostic procedures, or other medical treatments.
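
Random assignment is what distinguishes an RCT from an observational comparison. The sketch below shows one common scheme, permuted-block randomization, under assumed choices: a 1:1 allocation, a block size of 4, hypothetical participant IDs, and a hypothetical function name `block_randomize`. It illustrates the idea only and is not a substitute for a trial's own documented randomization procedure.

```python
import random


def block_randomize(participant_ids, block_size=4, seed=None):
    """Assign participants 1:1 to 'treatment' or 'control' in permuted blocks.

    Every complete block contains equal numbers of each arm in random order,
    so group sizes stay balanced as participants are enrolled.
    """
    if block_size < 2 or block_size % 2 != 0:
        raise ValueError("block_size must be a positive even number")
    rng = random.Random(seed)  # seeded so the allocation list is reproducible
    assignments = {}
    block = []
    for pid in participant_ids:
        if not block:  # start a new shuffled block when the previous one is used up
            block = ["treatment", "control"] * (block_size // 2)
            rng.shuffle(block)
        assignments[pid] = block.pop()
    return assignments


ids = [f"P{i:02d}" for i in range(1, 9)]  # hypothetical participant IDs
for pid, arm in block_randomize(ids, seed=42).items():
    print(pid, arm)
```

Blocking keeps the arms balanced even if enrollment stops early, while the shuffle within each block keeps the next assignment unpredictable to the people enrolling participants.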

Statistical bias, in the mathematical field of statistics, is a systematic tendency in which the methods used to gather data and generate statistics present an inaccurate, skewed, or biased depiction of reality. Statistical bias can arise at numerous stages of the data collection and analysis process, including the source of the data, the methods used to collect the data, the estimator chosen, and the methods used to analyze the data. Data analysts can take various measures at each stage of the process to reduce the impact of statistical bias in their work. Understanding the source of statistical bias can help to assess whether the observed results are close to the true values. Issues of statistical bias have been argued to be closely linked to issues of statistical validity.
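
Bias in the estimator chosen can be seen directly in a small simulation. The sketch below, assuming normally distributed data with variance 1 and samples of size 5, compares the sample variance computed by dividing by n (biased) with Bessel's correction, dividing by n - 1 (unbiased). The helper names are hypothetical; the standard library's `statistics.pvariance` and `statistics.variance` use the same two divisors.

```python
import random
import statistics


def variance_divide_by_n(xs):
    """Sum of squared deviations / n: systematically too small on average."""
    m = statistics.fmean(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)


def variance_divide_by_n_minus_1(xs):
    """Sum of squared deviations / (n - 1): Bessel's correction, unbiased."""
    m = statistics.fmean(xs)
    return sum((x - m) ** 2 for x in xs) / (len(xs) - 1)


def average_estimate(estimator, n=5, trials=20_000, sigma=1.0, seed=0):
    """Average an estimator over many simulated normal samples of size n."""
    rng = random.Random(seed)
    estimates = [
        estimator([rng.gauss(0.0, sigma) for _ in range(n)]) for _ in range(trials)
    ]
    return statistics.fmean(estimates)


# True variance is sigma**2 = 1.0; theory puts the first average near (n-1)/n = 0.8.
biased = average_estimate(variance_divide_by_n)
unbiased = average_estimate(variance_divide_by_n_minus_1)
print(f"divide by n:     {biased:.3f}")
print(f"divide by n - 1: {unbiased:.3f}")
```

Averaged over many samples, the first estimator settles near 0.8 of the true variance however many trials are run, which is what distinguishes a systematic bias from random error.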

In a blind or blinded experiment, information that may influence the participants of the experiment is withheld until after the experiment is complete. Good blinding can reduce or eliminate experimental biases that arise from a participant's expectations, the observer's effect on the participants, observer bias, confirmation bias, and other sources. A blind can be imposed on any participant of an experiment, including subjects, researchers, technicians, data analysts, and evaluators. In some cases, while blinding would be useful, it is impossible or unethical. For example, it is not possible to blind a patient to their treatment in a physical therapy intervention. A good clinical protocol ensures that blinding is as effective as possible within ethical and practical constraints.
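
One practical piece of blinding is ensuring that the people who measure outcomes never see group labels. The sketch below, with hypothetical function names and a made-up kit-code format, assigns each participant a neutral code, keeps the code-to-arm key separate, and reveals the arms only when outcomes are grouped for analysis. It assumes a balanced allocation across two arms and that the key is held by someone outside the assessment team.

```python
import random


def make_blinded_allocation(participant_ids, arms=("drug", "placebo"), seed=None):
    """Return (public_codes, private_key) for a balanced allocation across arms.

    public_codes: participant -> neutral kit code; the only thing participants,
        clinicians and outcome assessors see.
    private_key: kit code -> true arm; held by someone outside the assessment team.
    """
    rng = random.Random(seed)
    n = len(participant_ids)
    pool = [arms[i % len(arms)] for i in range(n)]  # balanced list of arms
    rng.shuffle(pool)  # random order, so position reveals nothing
    numbers = rng.sample(range(100_000, 1_000_000), n)  # unique six-digit codes
    public_codes, private_key = {}, {}
    for pid, arm, number in zip(participant_ids, pool, numbers):
        kit = f"KIT-{number}"
        public_codes[pid] = kit
        private_key[kit] = arm
    return public_codes, private_key


def unblind(outcomes_by_kit, private_key):
    """Group recorded outcomes by true arm; run only after assessment is locked."""
    grouped = {}
    for kit, score in outcomes_by_kit.items():
        grouped.setdefault(private_key[kit], []).append(score)
    return grouped


codes, key = make_blinded_allocation(["P1", "P2", "P3", "P4"], seed=7)
print(codes)  # assessors record outcomes against these kit codes only
```

Separating the public codes from the private key is the design point: everyone who interacts with participants or scores outcomes works only with the codes, so their expectations cannot depend on group membership.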

Selection bias is the bias introduced by the selection of individuals, groups, or data for analysis in such a way that proper randomization is not achieved, thereby failing to ensure that the sample obtained is representative of the population intended to be analyzed. It is sometimes referred to as the selection effect. The phrase "selection bias" most often refers to the distortion of a statistical analysis that results from the method of collecting samples. If selection bias is not taken into account, some conclusions of the study may be false.
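
A small simulation, under assumed numbers, illustrates how the collection method alone can distort a result. A hypothetical population of scores with mean 50 is sampled two ways: by simple random sampling, and by a self-selection rule in which the chance of inclusion rises with the score itself, as when more satisfied customers are more likely to answer a survey. The inclusion rule and all parameters are illustrative assumptions.

```python
import random
import statistics


def simulate_selection_bias(pop_size=100_000, sample_size=1_000, seed=0):
    """Compare a simple random sample with a self-selected sample."""
    rng = random.Random(seed)
    population = [rng.gauss(50.0, 10.0) for _ in range(pop_size)]

    # Simple random sample: every member is equally likely to be included.
    random_sample = rng.sample(population, sample_size)

    # Self-selection: the chance of being included grows with the value itself.
    def inclusion_probability(x):
        return min(1.0, max(0.0, (x - 20.0) / 60.0))

    self_selected = [x for x in population if rng.random() < inclusion_probability(x)]

    return (
        statistics.fmean(population),
        statistics.fmean(random_sample),
        statistics.fmean(self_selected),
    )


true_mean, random_mean, selected_mean = simulate_selection_bias()
print(f"population:    {true_mean:.2f}")
print(f"random sample: {random_mean:.2f}")
print(f"self-selected: {selected_mean:.2f}")
```

Under this rule the self-selected mean is expected to sit about 3.3 points above the true mean, while the random sample stays close to 50; collecting more self-selected responses does not remove the gap.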

The Hawthorne effect is a type of reactivity in which individuals modify an aspect of their behavior in response to their awareness of being observed. The effect was discovered in the context of research conducted at Western Electric's Hawthorne Works plant; however, some scholars think the descriptions are fictitious.

The observer-expectancy effect is a form of reactivity in which a researcher's cognitive bias causes them to subconsciously influence the participants of an experiment. Confirmation bias can lead to the experimenter interpreting results incorrectly because of the tendency to look for information that conforms to their hypothesis, and overlook information that argues against it. It is a significant threat to a study's internal validity, and is therefore typically controlled using a double-blind experimental design.

A scientific control is an experiment or observation designed to minimize the effects of variables other than the independent variable. This increases the reliability of the results, often through a comparison between control measurements and the other measurements. Scientific controls are a part of the scientific method.

Belief bias is the tendency to judge the strength of arguments based on the plausibility of their conclusion rather than on how strongly they justify that conclusion. A person is more likely to accept an argument that supports a conclusion aligned with their values, beliefs, and prior knowledge, while rejecting counterarguments to that conclusion. Belief bias is an extremely common and therefore significant form of error: people can easily be blinded by their beliefs and reach the wrong conclusion. Belief bias has been found to influence various reasoning tasks, including conditional reasoning, relational reasoning, and transitive reasoning.

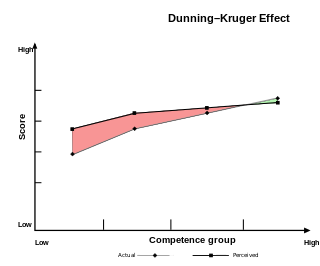

The Dunning–Kruger effect is a cognitive bias in which people with limited competence in a particular domain overestimate their abilities. Some researchers also include the opposite effect for high performers: their tendency to underestimate their skills. In popular culture, the Dunning–Kruger effect is often misunderstood as a claim about general overconfidence of people with low intelligence instead of specific overconfidence of people unskilled at a particular task.

In causal inference, a confounder is a variable that influences both the independent variable and the dependent variable, causing a spurious association. Confounding is a causal concept and, as such, cannot be described in terms of correlations or associations. The existence of confounders is an important quantitative explanation of why correlation does not imply causation. Some notations are explicitly designed to identify the existence, possible existence, or non-existence of confounders in causal relationships between elements of a system.
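
The following simulation sketches a spurious association created by a confounder, under assumed parameters: a binary variable `z` raises both the chance of exposure `x` and the outcome `y`, while `x` has no effect on `y` at all. Comparing exposed and unexposed groups overall shows a difference, but comparing them within each level of `z` (stratification, one simple form of adjustment) shows essentially none. The causal structure is known here only because the simulation defines it; in real data, associations alone cannot establish which variables are confounders.

```python
import random
import statistics


def simulate_confounding(n=50_000, seed=0):
    """Rows of (z, x, y) where z drives both x and y, and x has no effect on y."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = 1 if rng.random() < 0.5 else 0                  # confounder
        x = 1 if rng.random() < (0.8 if z else 0.2) else 0  # exposure depends on z
        y = 2.0 * z + rng.gauss(0.0, 1.0)                   # outcome depends on z only
        rows.append((z, x, y))
    return rows


def mean_difference(rows):
    """Mean outcome among exposed (x = 1) minus mean among unexposed (x = 0)."""
    exposed = [y for _, x, y in rows if x == 1]
    unexposed = [y for _, x, y in rows if x == 0]
    return statistics.fmean(exposed) - statistics.fmean(unexposed)


rows = simulate_confounding()
naive = mean_difference(rows)  # mixes the two levels of z
stratified = [mean_difference([r for r in rows if r[0] == level]) for level in (0, 1)]
print(f"naive difference: {naive:.2f}")
print("difference within each level of z:", [round(d, 2) for d in stratified])
```

With these parameters the naive difference is about 1.2 in expectation, that is 2 × (0.8 - 0.2), even though the exposure does nothing; the association comes entirely from `z`.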

Latent learning is the subconscious retention of information without reinforcement or motivation. In latent learning, the resulting change in behavior appears only later, once there is sufficient motivation to use the information that was subconsciously retained.

In social research, particularly in psychology, the term demand characteristic refers to an experimental artifact where participants form an interpretation of the experiment's purpose and subconsciously change their behavior to fit that interpretation. Typically, demand characteristics are considered an extraneous variable, exerting an effect on behavior other than that intended by the experimenter. Martin Orne conducted pioneering research on demand characteristics.

In fields such as epidemiology, social sciences, psychology, and statistics, an observational study draws inferences from a sample to a population where the independent variable is not under the control of the researcher because of ethical concerns or logistical constraints. A common type of observational study examines the possible effect of a treatment on subjects, where the assignment of subjects to a treated group versus a control group is outside the control of the investigator. This is in contrast with experiments, such as randomized controlled trials, where each subject is randomly assigned to a treated group or a control group. Because they lack a controlled assignment mechanism, observational studies naturally present difficulties for inferential analysis.

In natural and social science research, a protocol is most commonly a predefined procedural method in the design and implementation of an experiment. Protocols are written whenever it is desirable to standardize a laboratory method to ensure successful replication of results by others in the same laboratory or by other laboratories. Additionally, and by extension, protocols have the advantage of facilitating the assessment of experimental results through peer review. In addition to detailed procedures, equipment, and instruments, protocols will also contain study objectives, reasoning for experimental design, reasoning for chosen sample sizes, safety precautions, and how results were calculated and reported, including statistical analysis and any rules for predefining and documenting excluded data to avoid bias.

Reactivity is a phenomenon that occurs when individuals alter their performance or behavior due to the awareness that they are being observed. The change may be positive or negative, and depends on the situation. It is a significant threat to a research study's external validity and is typically controlled for using blind experiment designs.

The introspection illusion is a cognitive bias in which people wrongly think they have direct insight into the origins of their mental states, while treating others' introspections as unreliable. The illusion has been examined in psychological experiments, and suggested as a basis for biases in how people compare themselves to others. These experiments have been interpreted as suggesting that, rather than offering direct access to the processes underlying mental states, introspection is a process of construction and inference, much as people indirectly infer others' mental states from their behaviour.

Funding bias, also known as sponsorship bias, funding outcome bias, funding publication bias, and funding effect, refers to the tendency of a scientific study to support the interests of the study's financial sponsor. The phenomenon is well enough recognized that researchers have undertaken studies to examine bias in previously published research. Funding bias has been associated, in particular, with research into chemical toxicity, tobacco, and pharmaceutical drugs. It is an instance of experimenter's bias.

Observational methods in psychological research entail the observation and description of a subject's behavior. Researchers utilizing the observational method can exert varying amounts of control over the environment in which the observation takes place. This makes observational research a sort of middle ground between the highly controlled method of experimental design and the less structured approach of conducting interviews.
