Related Research Articles

Disk storage is a general category of storage mechanisms where data is recorded by various electronic, magnetic, optical, or mechanical changes to a surface layer of one or more rotating disks. A disk drive is a device implementing such a storage mechanism. Notable types are the hard disk drive (HDD) containing a non-removable disk, the floppy disk drive (FDD) and its removable floppy disk, and various optical disc drives (ODD) and associated optical disc media.

A hard disk drive (HDD), hard disk, hard drive, or fixed disk, is an electro-mechanical data storage device that stores and retrieves digital data using magnetic storage with one or more rigid rapidly rotating platters coated with magnetic material. The platters are paired with magnetic heads, usually arranged on a moving actuator arm, which read and write data to the platter surfaces. Data is accessed in a random-access manner, meaning that individual blocks of data can be stored and retrieved in any order. HDDs are a type of non-volatile storage, retaining stored data when powered off. Modern HDDs are typically in the form of a small rectangular box.

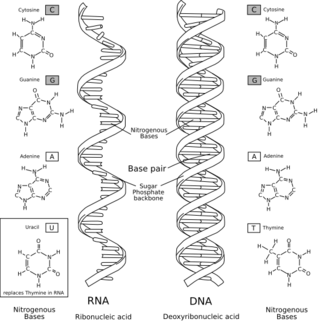

Data storage is the recording (storing) of information (data) in a storage medium. Handwriting, phonographic recording, magnetic tape, and optical discs are all examples of storage media. Biological molecules such as RNA and DNA are considered by some to be data storage. Recording may be accomplished with virtually any form of energy. Electronic data storage requires electrical power to store and retrieve data.

A tape drive is a data storage device that reads and writes data on a magnetic tape. Magnetic-tape data storage is typically used for offline, archival data storage. Tape media generally have a favorable unit cost and long archival stability.

New Technology File System (NTFS) is a proprietary journaling file system developed by Microsoft. Starting with Windows NT 3.1, it is the default file system of the Windows NT family. It superseded File Allocation Table (FAT) as the preferred file system on Windows and is supported in Linux and BSD as well. NTFS reading and writing support is provided by a free and open-source kernel implementation known as NTFS3 in Linux and by the NTFS-3G driver in BSD. Using the convert command, Windows can convert FAT32/16/12 into NTFS without the need to rewrite all files. NTFS uses several files, typically hidden from the user, to store metadata about other files stored on the drive, which can help improve speed and performance when reading data. Unlike FAT and High Performance File System (HPFS), NTFS supports access control lists (ACLs), filesystem encryption, transparent compression, sparse files and file system journaling. NTFS also supports shadow copy to allow backups of a system while it is running, but the functionality of the shadow copies varies between different versions of Windows.

The Information Age is a historical period that began in the mid-20th century. It is characterized by a rapid shift from traditional industries, as established during the Industrial Revolution, to an economy centered on information technology. The onset of the Information Age has been linked to the development of the transistor in 1947, the optical amplifier in 1957, and Unix time, which began on January 1, 1970. These technological advances have had a significant impact on the way information is processed and transmitted.

In computer organisation, the memory hierarchy separates computer storage into a hierarchy based on response time. Since response time, complexity, and capacity are related, the levels may also be distinguished by their performance and controlling technologies. Memory hierarchy affects performance in computer architectural design, algorithm predictions, and lower level programming constructs involving locality of reference.

In computing, removable media are data storage media designed to be readily inserted into and removed from a system. Most early removable media, such as floppy disks and optical discs, require a dedicated read/write device to be installed in the computer, while others, such as USB flash drives, are plug-and-play: all the hardware required to read them is built into the device, so only driver software needs to be installed in order to communicate with the device. Some removable media readers/drives are integrated into the computer case, while others are standalone devices that need to be additionally installed or connected.

In computer storage, a tape library, sometimes called a tape silo, tape robot or tape jukebox, is a storage device that contains one or more tape drives, a number of slots to hold tape cartridges, a barcode reader to identify tape cartridges and an automated method for loading tapes. Additionally, the area where tapes that are not currently in a silo are stored is also called a tape library. Tape libraries can contain millions of tapes.

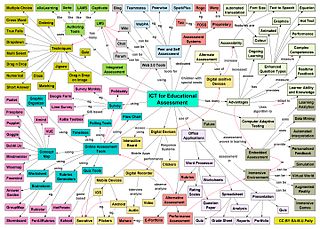

Information and communications technology (ICT) is an extensional term for information technology (IT) that stresses the role of unified communications and the integration of telecommunications and computers, as well as necessary enterprise software, middleware, storage and audiovisual, that enable users to access, store, transmit, understand and manipulate information.

Digital permanence addresses the history and development of digital storage techniques, specifically quantifying the expected lifetime of data stored on various digital media and the factors which influence the permanence of digital data. It is often a mix of ensuring the data itself can be retained on a particular form of media and that the technology remains viable. Where possible, as well as describing expected lifetimes, factors affecting data retention will be detailed, including potential technology issues.

Hierarchical storage management (HSM), also known as tiered storage, is a data storage and data management technique that automatically moves data between high-cost and low-cost storage media. HSM systems exist because high-speed storage devices, such as solid-state drive arrays, are more expensive than slower devices, such as hard disk drives, optical discs and magnetic tape drives. While it would be ideal to have all data available on high-speed devices all the time, this is prohibitively expensive for many organizations. Instead, HSM systems store the bulk of the enterprise's data on slower devices, and then copy data to faster disk drives when needed. The HSM system monitors the way data is used and makes best guesses as to which data can safely be moved to slower devices and which data should stay on the fast devices.
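The tiering decision described above can be illustrated with a minimal sketch. It assumes a simple policy not taken from any particular HSM product: a file that has not been accessed for longer than a chosen idle limit is moved to the slow tier, and anything accessed more recently is kept on (or returned to) the fast tier. The names `FileRecord`, `rebalance` and `idle_limit` are hypothetical and serve only this illustration.

```python
from dataclasses import dataclass


@dataclass
class FileRecord:
    """Hypothetical bookkeeping entry for one stored file."""
    name: str
    last_access: float  # time of last access, in seconds
    tier: str = "fast"  # either "fast" or "slow"


def rebalance(files, now, idle_limit):
    """Move files between tiers based on how long they have been idle.

    Returns a list of (name, old_tier, new_tier) for every file moved.
    This is an assumed age-based policy, not a real HSM algorithm.
    """
    moved = []
    for f in files:
        idle = now - f.last_access
        target = "slow" if idle > idle_limit else "fast"
        if f.tier != target:
            moved.append((f.name, f.tier, target))
            f.tier = target
    return moved


files = [
    FileRecord("report.doc", last_access=1000.0),
    FileRecord("archive.tar", last_access=10.0),
    FileRecord("old.log", last_access=990.0, tier="slow"),
]
moved = rebalance(files, now=1100.0, idle_limit=500.0)
```

Real systems typically weigh more signals than idle time, such as file size, access frequency and the cost of recalling data from tape, so this should be read as a toy model of the monitoring-and-guessing step only.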

Mark Howard Kryder was Seagate Corp.'s senior vice president of research and chief technology officer. Kryder holds a Bachelor of Science degree in electrical engineering from Stanford University and a Ph.D. in electrical engineering and physics from the California Institute of Technology.

Magnetic-tape data storage is a system for storing digital information on magnetic tape using digital recording.

Windows Home Server is a home server operating system from Microsoft. It was announced on 7 January 2007 at the Consumer Electronics Show by Bill Gates, released to manufacturing on 16 July 2007 and officially released on 4 November 2007.

Information is an abstract concept that refers to that which has the power to inform. At the most fundamental level, information pertains to the interpretation of that which may be sensed, or their abstractions. Any natural process that is not completely random and any observable pattern in any medium can be said to convey some amount of information. Whereas digital signals and other data use discrete signs to convey information, other phenomena and artefacts such as analogue signals, poems, pictures, music or other sounds, and currents convey information in a more continuous form. Information is not knowledge itself, but the meaning that may be derived from a representation through interpretation.

Pivot is a software application from Microsoft Live Labs that allows users to interact with and search large amounts of data. It is based on Microsoft's Seadragon. It has been described as allowing users to view the web as a web rather than as isolated pages.

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity may lead to a higher false discovery rate. Though the term is sometimes used loosely, partly because it lacks a formal definition, the interpretation that seems to best describe big data is the one associated with a large body of information that we could not comprehend when used only in smaller amounts.

Data-intensive computing is a class of parallel computing applications which use a data parallel approach to process large volumes of data, typically terabytes or petabytes in size and commonly referred to as big data. Computing applications which devote most of their execution time to computational requirements are deemed compute-intensive, whereas computing applications which require large volumes of data and devote most of their processing time to I/O and manipulation of data are deemed data-intensive.

The Zettabyte Era or Zettabyte Zone is a period of human and computer science history that started in the mid-2010s. The precise starting date depends on whether it is defined as when the global IP traffic first exceeded one zettabyte, which happened in 2016, or when the amount of digital data in the world first exceeded a zettabyte, which happened in 2012. A zettabyte is a multiple of the unit byte that measures digital storage, and it is equivalent to 1,000,000,000,000,000,000,000 (10²¹) bytes.
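As a quick check of the unit arithmetic, the sketch below expresses a zettabyte as the seventh power of 1000 (the decimal SI steps kilo, mega, giga, tera, peta, exa, zetta) and converts a byte count into zettabytes. The constant and function names are illustrative only.

```python
# One zettabyte in bytes, using decimal (SI) prefixes:
# kilo, mega, giga, tera, peta, exa, zetta = seven steps of 1000.
ZETTABYTE = 1000 ** 7


def to_zettabytes(n_bytes):
    """Convert a number of bytes to zettabytes."""
    return n_bytes / ZETTABYTE


two_zb = to_zettabytes(2 * 10 ** 21)
```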

References

- ↑ Hilbert, M. (2015). Global Information Explosion: https://www.youtube.com/watch?v=8-AqzPe_gNs&list=PLtjBSCvWCU3rNm46D3R85efM0hrzjuAIg. Digital Technology and Social Change [Open Online Course at the University of California], freely available at: https://canvas.instructure.com/courses/949415

- ↑ “Information.” http://dictionary.oed.com. Accessed January 4, 2008.

- ↑ "U. S. WILL REMOVE REACTOR IN ARCTIC; Compacting Snow Squeezes Device Under Ice Sheet". The New York Times. 7 June 1964.

- ↑ Weaver, Sylvester (22 Nov 1955). "The Impact of TV in the U.S." Daily Iowan. p. 2. Retrieved 18 Aug 2021. "I believe that in the last few years we have set in motion an information explosion. To each man there is flooding more information than he can presently handle, but he is learning how to handle it and, as he learns, it will do him good."

- 1 2 3 4 Sweeney, Latanya. "Information explosion." Confidentiality, disclosure, and data access: Theory and practical applications for statistical agencies (2001): 43-74.

- ↑ Fuller, Jack. What is happening to news: The information explosion and the crisis in journalism. University of Chicago Press, 2010.

- ↑ Major, Claire Howell, and Maggi Savin-Baden. An introduction to qualitative research synthesis: Managing the information explosion in social science research. Routledge, 2010.

- 1 2 3 "The World's Technological Capacity to Store, Communicate, and Compute Information". martinhilbert.net/WorldInfoCapacity.html. "free access to the study" and "video animation".

- ↑ “Disk/Trend report 1983,” Computer Week. Mountain View, CA. (46) 11/11/83.

- ↑ “Rigid disk drive sales to top $34 billion in 1997,” Disk/Trend News. Mountain View, CA: Disk/Trend, Inc., 1997.

- ↑ Google Books Ngram viewer for the terms mentioned here

- 1 2 3 4 Berner, Eta S., and Jacqueline Moss. "Informatics challenges for the impending patient information explosion." Journal of the American Medical Informatics Association 12.6 (2005): 614-617.

- ↑ Huth, Edward J. "The information explosion." Bulletin of the New York Academy of Medicine 65.6 (1989): 647.

- ↑ Robert H Zakon (15 December 2010). "Hobbes' Internet Timeline 10.1". zakon.org. Retrieved 27 August 2011.

- ↑ "August 2011 Web Server Survey". netcraft.com. August 2011. Retrieved 27 August 2011.

- ↑ "State of the Blogosphere, April 2006 Part 1: On Blogosphere Growth". Sifry's Alerts (sifry.com). April 17, 2006. Archived from the original on 9 January 2013. Retrieved 27 August 2011.