Finite discrete distributions

For a discrete probability distribution with a finite number n of indices at which the probability mass function f takes non-zero values, the basic sampling algorithm is straightforward. The interval [0, 1) is divided in n intervals [0, f(1)), [f(1), f(1) + f(2)), ... The width of interval i equals the probability f(i). One draws a uniformly distributed pseudo-random number X, and searches for the index i of the corresponding interval. The so determined i will have the distribution f(i).

Formalizing this idea becomes easier by using the cumulative distribution function

It is convenient to set F(0) = 0. The n intervals are then simply [F(0), F(1)), [F(1), F(2)), ..., [F(n − 1), F(n)). The main computational task is then to determine i for which F(i − 1) ≤ X < F(i).

This can be done by different algorithms:

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events.

A pseudorandom number generator (PRNG), also known as a deterministic random bit generator (DRBG), is an algorithm for generating a sequence of numbers whose properties approximate the properties of sequences of random numbers. The PRNG-generated sequence is not truly random, because it is completely determined by an initial value, called the PRNG's seed. Although sequences that are closer to truly random can be generated using hardware random number generators, pseudorandom number generators are important in practice for their speed in number generation and their reproducibility.

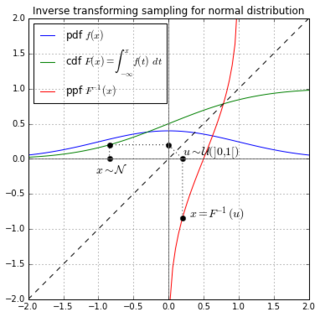

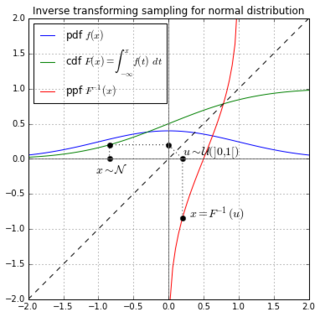

Inverse transform sampling is a basic method for pseudo-random number sampling, i.e., for generating sample numbers at random from any probability distribution given its cumulative distribution function.

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution.

In statistics and statistical physics, the Metropolis–Hastings algorithm is a Markov chain Monte Carlo (MCMC) method for obtaining a sequence of random samples from a probability distribution from which direct sampling is difficult. This sequence can be used to approximate the distribution or to compute an integral. Metropolis–Hastings and other MCMC algorithms are generally used for sampling from multi-dimensional distributions, especially when the number of dimensions is high. For single-dimensional distributions, there are usually other methods that can directly return independent samples from the distribution, and these are free from the problem of autocorrelated samples that is inherent in MCMC methods.

The Box–Muller transform, by George Edward Pelham Box and Mervin Edgar Muller, is a random number sampling method for generating pairs of independent, standard, normally distributed random numbers, given a source of uniformly distributed random numbers. The method was in fact first mentioned explicitly by Raymond E. A. C. Paley and Norbert Wiener in 1934.

In statistics, Markov chain Monte Carlo (MCMC) methods comprise a class of algorithms for sampling from a probability distribution. By constructing a Markov chain that has the desired distribution as its equilibrium distribution, one can obtain a sample of the desired distribution by recording states from the chain. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Various algorithms exist for constructing chains, including the Metropolis–Hastings algorithm.

In numerical analysis and computational statistics, rejection sampling is a basic technique used to generate observations from a distribution. It is also commonly called the acceptance-rejection method or "accept-reject algorithm" and is a type of exact simulation method. The method works for any distribution in with a density.

In mathematics, Monte Carlo integration is a technique for numerical integration using random numbers. It is a particular Monte Carlo method that numerically computes a definite integral. While other algorithms usually evaluate the integrand at a regular grid, Monte Carlo randomly chooses points at which the integrand is evaluated. This method is particularly useful for higher-dimensional integrals.

Random number generation is a process by which, often by means of a random number generator (RNG), a sequence of numbers or symbols that cannot be reasonably predicted better than by random chance is generated. This means that the particular outcome sequence will contain some patterns detectable in hindsight but unpredictable to foresight. True random number generators can be hardware random-number generators (HRNGS) that generate random numbers, wherein each generation is a function of the current value of a physical environment's attribute that is constantly changing in a manner that is practically impossible to model. This would be in contrast to so-called "random number generations" done by pseudorandom number generators (PRNGs) that generate numbers that only look random but are in fact pre-determined—these generations can be reproduced simply by knowing the state of the PRNG.

In probability and statistics, a random variate or simply variate is a particular outcome of a random variable: the random variates which are other outcomes of the same random variable might have different values. A random deviate or simply deviate is the difference of random variate with respect to the distribution central location, often divided by the standard deviation of the distribution.

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods are:

- Permutation tests

- Bootstrapping

- Cross validation

Slice sampling is a type of Markov chain Monte Carlo algorithm for pseudo-random number sampling, i.e. for drawing random samples from a statistical distribution. The method is based on the observation that to sample a random variable one can sample uniformly from the region under the graph of its density function.

A stochastic simulation is a simulation of a system that has variables that can change stochastically (randomly) with individual probabilities.

In the theory of finite population sampling, Bernoulli sampling is a sampling process where each element of the population is subjected to an independent Bernoulli trial which determines whether the element becomes part of the sample. An essential property of Bernoulli sampling is that all elements of the population have equal probability of being included in the sample.

Computational statistics, or statistical computing, is the bond between statistics and computer science. It means statistical methods that are enabled by using computational methods. It is the area of computational science specific to the mathematical science of statistics. This area is also developing rapidly, leading to calls that a broader concept of computing should be taught as part of general statistical education.

This page is based on this

Wikipedia article Text is available under the

CC BY-SA 4.0 license; additional terms may apply.

Images, videos and audio are available under their respective licenses.