In mathematics, the concept of a measure is a generalization and formalization of geometrical measures (length, area, volume) and other common notions, such as magnitude, mass, and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory and integration theory, and can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure are widely used in quantum physics and physics in general.

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events.

A random variable is a mathematical formalization of a quantity or object which depends on random events. The term 'random variable' can be misleading, as its mathematical definition is neither random nor a variable: it is a function from possible outcomes in a sample space to a measurable space, often the real numbers.
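As a minimal sketch of this idea, the block below treats a random variable as an ordinary function on a finite sample space and derives its probability distribution from it. The two-dice sample space and the names `omega`, `X`, and `distribution` are assumptions chosen for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Sample space (assumed): ordered pairs from two fair dice, all equally likely.
omega = list(product(range(1, 7), repeat=2))

def X(outcome):
    """A random variable: a deterministic function from outcomes to numbers."""
    return outcome[0] + outcome[1]

# The distribution of X gives, for each value x, the probability of the
# event {outcome : X(outcome) == x}.
counts = Counter(X(outcome) for outcome in omega)
distribution = {x: Fraction(n, len(omega)) for x, n in sorted(counts.items())}
```

Nothing in `X` itself is random; the randomness lives entirely in which outcome of `omega` occurs.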

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other.
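The informal description above corresponds to the standard product rule: events A and B are independent when P(A ∩ B) = P(A) · P(B). The following sketch checks that rule by enumeration on a two-dice sample space; the events and helper names are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product

# Sample space (assumed): ordered pairs from two fair dice, all equally likely.
omega = list(product(range(1, 7), repeat=2))

def prob(event):
    """Probability of an event, given as a predicate on outcomes."""
    favourable = sum(1 for outcome in omega if event(outcome))
    return Fraction(favourable, len(omega))

def independent(a, b):
    """True when P(A and B) equals P(A) * P(B)."""
    return prob(lambda o: a(o) and b(o)) == prob(a) * prob(b)

def first_is_even(o):
    return o[0] % 2 == 0

def second_is_six(o):
    return o[1] == 6

def total_is_twelve(o):
    return o[0] + o[1] == 12
```

Here `independent(first_is_even, second_is_six)` holds, while `independent(second_is_six, total_is_twelve)` does not, since a total of twelve forces the second die to show six.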

In mathematical analysis and in probability theory, a σ-algebra on a set X is a nonempty collection Σ of subsets of X closed under complement, countable unions, and countable intersections. The ordered pair (X, Σ) is called a measurable space.
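On a finite set the closure conditions can be checked by brute force, because countable unions reduce to finite ones and pairwise closure is enough. The sketch below assumes a finite X; the function name `is_sigma_algebra` is hypothetical.

```python
from itertools import combinations

def is_sigma_algebra(X, collection):
    """Check the sigma-algebra axioms for a collection of subsets of a finite set X."""
    X = frozenset(X)
    sets = {frozenset(s) for s in collection}
    if not sets:
        return False  # the collection must be nonempty
    if any(not s <= X for s in sets):
        return False  # every member must be a subset of X
    closed_under_complement = all(X - s in sets for s in sets)
    # For a finite collection, closure under pairwise unions implies closure
    # under all (finite, hence all countable) unions.
    closed_under_union = all(a | b in sets for a, b in combinations(sets, 2))
    return closed_under_complement and closed_under_union
```

Closure under intersections is not tested separately because it follows from the other two conditions by De Morgan's laws.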

In probability theory, a probability space or a probability triple is a mathematical construct that provides a formal model of a random process or "experiment". For example, one can define a probability space which models the throwing of a die.
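The die example can be written out as a triple of sample space, event collection, and probability measure. This is a sketch assuming a fair six-sided die and taking every subset as an event; the names `omega`, `events`, and `P` are illustrative.

```python
from fractions import Fraction
from itertools import combinations

# Sample space (assumed): the six faces of a fair die.
omega = frozenset(range(1, 7))

def power_set(s):
    """All subsets of a finite set, as frozensets."""
    items = sorted(s)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]

# Event collection: every subset of omega counts as an event.
events = power_set(omega)

def P(event):
    """Uniform probability measure: each face has probability 1/6."""
    return Fraction(len(event), len(omega))

even = frozenset({2, 4, 6})
odd = omega - even
```

The measure is additive on disjoint events, so `P(even) + P(odd)` equals `P(omega)`, which is 1.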

In mathematics, the symmetric difference of two sets, also known as the disjunctive union and set sum, is the set of elements which are in either of the sets, but not in their intersection. For example, the symmetric difference of the sets {1, 2, 3} and {3, 4} is {1, 2, 4}.
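Python's built-in sets support this operation directly through the `^` operator and the `symmetric_difference` method. The short sketch below also builds it from union and intersection, following the definition; the variable names are illustrative.

```python
a = {1, 2, 3}
b = {3, 4}

# Built-in operator and method for the symmetric difference.
via_operator = a ^ b
via_method = a.symmetric_difference(b)

# The same set built from the definition: in either set, but not in both.
via_definition = (a | b) - (a & b)
```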

In mathematics, Fatou's lemma establishes an inequality relating the Lebesgue integral of the limit inferior of a sequence of functions to the limit inferior of integrals of these functions. The lemma is named after Pierre Fatou.
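Written out, the inequality takes the following form. The notation is an assumption introduced here: (f_n) is a sequence of nonnegative measurable functions on a measure space (X, Σ, μ).

```latex
\int_X \liminf_{n \to \infty} f_n \, d\mu
  \;\le\;
\liminf_{n \to \infty} \int_X f_n \, d\mu
```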

In probability theory, the conditional expectation, conditional expected value, or conditional mean of a random variable is its expected value evaluated with respect to the conditional probability distribution. If the random variable can take on only a finite number of values, the "conditions" are that the variable can only take on a subset of those values. More formally, in the case when the random variable is defined over a discrete probability space, the "conditions" are a partition of this probability space.
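In the discrete case the computation can be sketched directly: for each block of the partition, average the random variable over that block using the renormalized probabilities. The fair die, the odd/even partition, and all names below are assumptions for illustration.

```python
from fractions import Fraction

# Discrete probability space (assumed): a fair six-sided die.
omega = range(1, 7)
p = {w: Fraction(1, 6) for w in omega}

def X(w):
    """Random variable: the face value itself."""
    return w

# The "conditions": a partition of the sample space into odd and even faces.
partition = {"odd": {1, 3, 5}, "even": {2, 4, 6}}

def conditional_expectation(X, block):
    """E[X | block]: the probability-weighted mean of X restricted to the block."""
    p_block = sum(p[w] for w in block)
    return sum(X(w) * p[w] for w in block) / p_block

cond = {name: conditional_expectation(X, block)
        for name, block in partition.items()}

# Averaging the conditional expectations over the blocks recovers E[X].
overall = sum(cond[name] * sum(p[w] for w in block)
              for name, block in partition.items())
```

The last step illustrates the law of total expectation: weighting each conditional mean by the probability of its block gives back the unconditional mean.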

In mathematics, a filtration is an indexed family (S_i), i ∈ I, of subobjects of a given algebraic structure S, with the index i running over some totally ordered index set I, subject to the condition that if i ≤ j in I, then S_i ⊆ S_j.

Possibility theory is a mathematical theory for dealing with certain types of uncertainty and is an alternative to probability theory. It uses measures of possibility and necessity between 0 and 1, ranging from impossible to possible and unnecessary to necessary, respectively. Professor Lotfi Zadeh first introduced possibility theory in 1978 as an extension of his theory of fuzzy sets and fuzzy logic. Didier Dubois and Henri Prade further contributed to its development. Earlier, in the 1950s, economist G. L. S. Shackle proposed the min/max algebra to describe degrees of potential surprise.

A Dynkin system, named after Eugene Dynkin, is a collection of subsets of another universal set satisfying a set of axioms weaker than those of a σ-algebra. Dynkin systems are sometimes referred to as λ-systems or d-systems. These set families have applications in measure theory and probability.

In mathematics, the Hahn decomposition theorem, named after the Austrian mathematician Hans Hahn, states that for any measurable space (X, Σ) and any signed measure μ defined on the σ-algebra Σ, there exist two Σ-measurable sets, P and N, of X such that:

- P ∪ N = X and P ∩ N = ∅.

- For every E ∈ Σ such that E ⊆ P, one has μ(E) ≥ 0, i.e., P is a positive set for μ.

- For every E ∈ Σ such that E ⊆ N, one has μ(E) ≤ 0, i.e., N is a negative set for μ.
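For a signed measure on a finite set, such a decomposition can be sketched by sorting points according to the sign of their weight. The weights, the set names `P` and `N`, and the helper functions below are hypothetical, and the example only illustrates the finite case, not the general theorem.

```python
from itertools import combinations

# Hypothetical signed measure on a five-point set: mu(E) sums the weights in E.
weights = {"a": 2, "b": -3, "c": 0, "d": -1, "e": 5}
X = frozenset(weights)

def mu(E):
    """Signed measure of a subset E of X."""
    return sum(weights[x] for x in E)

def subsets(S):
    """All subsets of a finite set, as frozensets."""
    items = sorted(S)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]

# Candidate decomposition: nonnegative-weight points go to P, the rest to N.
P = frozenset(x for x in X if weights[x] >= 0)
N = X - P

# P is a positive set if every subset has nonnegative measure; dually for N.
is_positive_set = all(mu(E) >= 0 for E in subsets(P))
is_negative_set = all(mu(E) <= 0 for E in subsets(N))
```

The decomposition is not unique in general: the zero-weight point `"c"` could be moved from `P` to `N` without breaking either condition.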

In measure theory, Carathéodory's extension theorem states that any pre-measure defined on a given ring of subsets R of a given set Ω can be extended to a measure on the σ-ring generated by R, and this extension is unique if the pre-measure is σ-finite. Consequently, any pre-measure on a ring containing all intervals of real numbers can be extended to the Borel algebra of the set of real numbers. This is an extremely powerful result of measure theory, and leads, for example, to the Lebesgue measure.

In mathematics, a π-system on a set is a collection of certain subsets of such that

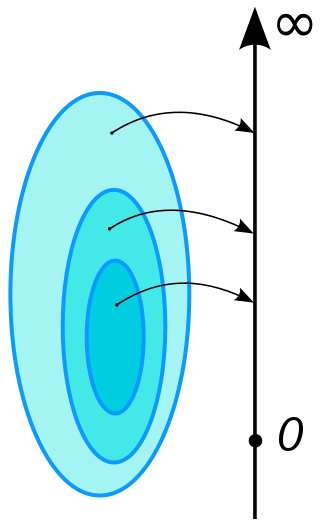

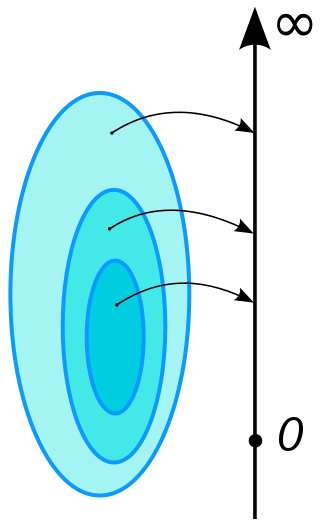

In probability theory, a standard probability space, also called a Lebesgue–Rokhlin probability space or just a Lebesgue space, is a probability space satisfying certain assumptions introduced by Vladimir Rokhlin in 1940. Informally, it is a probability space consisting of an interval and/or a finite or countable number of atoms.

In probability theory, conditional probability is a measure of the probability of an event occurring, given that another event (by assumption, presumption, assertion or evidence) is already known to have occurred. When an event A is related in some way to another event B, A can be analyzed through its conditional probability with respect to B. If the event of interest is A and the event B is known or assumed to have occurred, "the conditional probability of A given B", or "the probability of A under the condition B", is usually written as P(A|B) or occasionally P_B(A). This can be understood as the fraction of the probability of B that intersects with A, that is, the ratio of the probability of both events happening to the probability of the "given" event happening: P(A|B) = P(A ∩ B) / P(B), provided P(B) > 0.
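The ratio P(A ∩ B) / P(B) can be computed by enumeration on a small example. The sketch below assumes a fair six-sided die; the events `A` and `B` and the helper names are illustrative.

```python
from fractions import Fraction

# Sample space (assumed): a fair six-sided die.
omega = frozenset(range(1, 7))

def prob(event):
    """Uniform probability of an event given as a set of outcomes."""
    return Fraction(len(event & omega), len(omega))

def conditional(a, b):
    """P(A | B) = P(A and B) / P(B); undefined when P(B) is zero."""
    if prob(b) == 0:
        raise ValueError("P(B) must be positive")
    return prob(a & b) / prob(b)

A = frozenset({2})          # the roll is a two
B = frozenset({2, 4, 6})    # the roll is even
```

Knowing the roll is even raises the probability of a two from 1/6 to `conditional(A, B)`, which is 1/3, while `conditional(B, A)` is 1.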

In mathematics, especially measure theory, a set function is a function whose domain is a family of subsets of some given set and that (usually) takes its values in the extended real number line, which consists of the real numbers and ±∞.

In mathematics, a submodular set function is a set function that, informally, describes the relationship between a set of inputs and an output, where adding more of one input has a decreasing additional benefit. This natural diminishing-returns property makes submodular functions suitable for many applications, including approximation algorithms, game theory and electrical networks. Recently, submodular functions have also found utility in several real-world problems in machine learning and artificial intelligence, including automatic summarization, multi-document summarization, feature selection, active learning, sensor placement, image collection summarization and many other domains.
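The diminishing-returns property can be stated as f(A ∪ {x}) − f(A) ≥ f(B ∪ {x}) − f(B) whenever A ⊆ B and x lies outside B. The sketch below checks this by brute force for a small coverage function, a standard example of a submodular function; the ground set, the `coverage` data, and the helper names are hypothetical.

```python
from itertools import combinations

# Hypothetical coverage data: each named sensor covers some numbered locations.
coverage = {"s1": {1, 2, 3}, "s2": {3, 4}, "s3": {4, 5, 6}, "s4": {1, 6}}

def f(S):
    """Coverage function: number of locations covered by the sensors in S."""
    covered = set()
    for name in S:
        covered |= coverage[name]
    return len(covered)

def subsets(items):
    """All subsets of a finite collection, as frozensets."""
    items = sorted(items)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]

def is_submodular(func, ground):
    """Brute-force check of diminishing returns over every A <= B and x not in B."""
    ground = frozenset(ground)
    for B in subsets(ground):
        for A in subsets(B):
            for x in ground - B:
                gain_small = func(A | {x}) - func(A)
                gain_large = func(B | {x}) - func(B)
                if gain_small < gain_large:
                    return False
    return True

def squared_size(S):
    """A non-example: marginal gains grow with the size of S."""
    return len(S) ** 2
```

The check is exponential in the size of the ground set, so it is only meant for small illustrative cases like this one.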

In the mathematical field of set theory, an ultrafilter on a set X is a maximal filter on the set X. In other words, it is a collection of subsets of X that satisfies the definition of a filter on X and that is maximal with respect to inclusion, in the sense that there does not exist a strictly larger collection of subsets of X that is also a filter. Equivalently, an ultrafilter on the set X can also be characterized as a filter on X with the property that for every subset A of X, either A or its complement X ∖ A belongs to the ultrafilter.
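On a finite set the second characterization is easy to test by enumeration. The sketch below assumes the common convention that a filter is proper (it excludes the empty set) and uses a principal ultrafilter, the collection of all subsets containing a fixed point; all function and variable names are hypothetical.

```python
from itertools import combinations

def subsets(S):
    """All subsets of a finite set, as frozensets."""
    items = sorted(S)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]

def is_filter(X, F):
    """Proper filter on finite X: contains X, excludes the empty set,
    closed under intersection, and closed under taking supersets."""
    X = frozenset(X)
    if X not in F or frozenset() in F:
        return False
    closed_under_intersection = all(a & b in F for a in F for b in F)
    upward_closed = all(b in F for a in F for b in subsets(X) if a <= b)
    return closed_under_intersection and upward_closed

def is_ultrafilter(X, F):
    """A filter in which exactly one of A and its complement lies, for every A."""
    X = frozenset(X)
    return is_filter(X, F) and all((A in F) != (X - A in F) for A in subsets(X))

X = frozenset({1, 2, 3})
principal = {A for A in subsets(X) if 2 in A}   # all subsets containing 2
trivial = {X}                                   # a filter, but not maximal
```

The collection `trivial` is a filter that fails the test because, for example, neither `{1}` nor its complement `{2, 3}` belongs to it.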