Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, for example in the form of decisions. Understanding in this context means the transformation of visual images into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.
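
The chain from pixels to a decision can be illustrated with a deliberately tiny sketch. It assumes the image has already been acquired as a grayscale grid of 0-255 values. The threshold of 128, the 4-connectivity rule, and the "at most two objects" acceptance rule are hypothetical choices for illustration, not part of any standard pipeline. Thresholding is the processing step, counting connected regions is the analysis step that yields numerical information, and the accept/reject rule turns that number into a symbolic decision.

```python
from collections import deque

def threshold(image, level):
    """Processing: turn a grayscale image (list of rows) into a binary mask."""
    return [[1 if px >= level else 0 for px in row] for row in image]

def count_blobs(mask):
    """Analysis: count 4-connected foreground regions using breadth-first search."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                blobs += 1  # found an unvisited region; flood-fill it
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return blobs

def describe(image, level=128, max_objects=2):
    """Understanding: reduce the count to a symbolic decision (hypothetical rule)."""
    n = count_blobs(threshold(image, level))
    return n, ("accept" if n <= max_objects else "reject")

image = [
    [0, 200, 200, 0, 0],
    [0, 200, 0, 0, 210],
    [0, 0, 0, 0, 220],
    [180, 0, 0, 0, 0],
]
print(describe(image))  # (3, 'reject')
```

Real systems replace each stage with far more robust methods, such as learned features or segmentation networks, but the overall flow from pixels to numbers to a decision is similar in spirit.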

A sensor is a device that produces an output signal for the purpose of sensing a physical phenomenon.

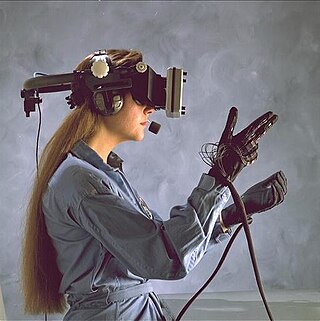

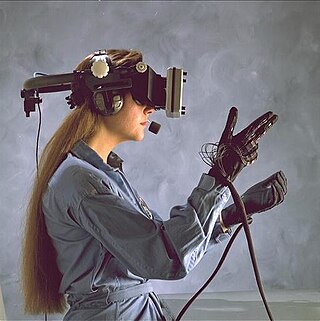

Haptic technology is technology that can create an experience of touch by applying forces, vibrations, or motions to the user. These technologies can be used to create virtual objects in a computer simulation, to control virtual objects, and to enhance remote control of machines and devices (telerobotics). Haptic devices may incorporate tactile sensors that measure forces exerted by the user on the interface. The word haptic, from the Greek: ἁπτικός (haptikos), means "tactile, pertaining to the sense of touch". Simple haptic devices are common in the form of game controllers, joysticks, and steering wheels.
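
One common way to render a virtual object haptically is a penalty-based "virtual wall": when the device tip penetrates the object, the controller commands a restoring force proportional to the penetration depth, often with an added damping term. The sketch below assumes a hypothetical one-degree-of-freedom device with position in metres and force in newtons; the stiffness and damping values are arbitrary examples, and a real controller would call this function repeatedly in a fast servo loop while respecting the device's force limits.

```python
def wall_force(position, velocity=0.0, wall=0.0, stiffness=500.0, damping=2.0):
    """Force (N) to command on a hypothetical 1-DOF haptic device.

    The virtual wall occupies positions below `wall` (m). Inside it, a
    spring (stiffness, N/m) pushes the tip back out and a damper
    (damping, N*s/m) resists motion; outside it, no force is applied.
    """
    penetration = wall - position
    if penetration <= 0:
        return 0.0  # free space: the user feels nothing
    force = stiffness * penetration - damping * velocity
    return max(force, 0.0)  # a wall can push the user out but never pull them in

# Forces felt while sliding the tip from free space into the wall.
forces = [wall_force(p) for p in (0.01, 0.0, -0.001, -0.004)]
print(forces)
```

Higher stiffness makes the wall feel harder, but in practice it is limited by the update rate and the device dynamics, since an overly stiff virtual wall can make the device vibrate.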

A biosensor is an analytical device, used for the detection of a chemical substance, that combines a biological component with a physicochemical detector. The sensitive biological element (for example tissue, microorganisms, organelles, cell receptors, enzymes, antibodies, or nucleic acids) is a biologically derived material or biomimetic component that interacts with, binds with, or recognizes the analyte under study. The biologically sensitive elements can also be created by biological engineering. The transducer, or detector element, transforms the signal resulting from the interaction of the analyte with the biological element into another signal that can be more easily measured and quantified. It works in a physicochemical way, for example optically, piezoelectrically, electrochemically, or through electrochemiluminescence. The biosensor reader device connects with the associated electronics or signal processors that are primarily responsible for displaying the results in a user-friendly way. This is sometimes the most expensive part of the sensor device; however, it is possible to generate a user-friendly display that includes the transducer and sensitive element. The readers are usually custom-designed and manufactured to suit the different working principles of biosensors.
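
Quantifying an analyte with a biosensor typically involves calibration: measure the transducer signal for standards of known concentration, fit a curve, then invert it for unknown samples. This minimal sketch assumes a linear response over the working range. The standard concentrations and signal values are made-up numbers, and many real biosensors respond non-linearly (for example, saturating at high concentration), which would call for a different model.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical calibration standards: known concentrations (mM) and the
# transducer signal each produced (e.g. a current in microamperes).
standards_conc = [0.0, 1.0, 2.0, 4.0, 8.0]
standards_signal = [0.10, 0.60, 1.10, 2.10, 4.10]

slope, intercept = fit_line(standards_conc, standards_signal)

def concentration(signal):
    """Invert the calibration line to estimate an unknown sample's concentration."""
    return (signal - intercept) / slope

print(round(concentration(1.6), 3))  # 3.0
```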

Stimulus modality, also called sensory modality, is one aspect of a stimulus or what is perceived after a stimulus. For example, the temperature modality is registered after heat or cold stimulate a receptor. Some sensory modalities include light, sound, temperature, taste, pressure, and smell. The type and location of the sensory receptor activated by the stimulus play the primary role in coding the sensation. All sensory modalities work together to heighten the sensation of stimuli when necessary.

Sensory substitution is a change of the characteristics of one sensory modality into stimuli of another sensory modality.
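
A visual-to-auditory scheme gives a concrete picture of such a change. The sketch assumes one common style of mapping: the image is scanned column by column, each pixel row is assigned a pitch (higher rows get higher pitch), and pixel brightness sets loudness. The frequency range, burst duration, sample rate, and function names are hypothetical choices for illustration.

```python
import math

def column_to_tones(column, f_low=200.0, f_high=2000.0):
    """Map one image column (top pixel first, values 0-255) to (frequency, amplitude) pairs.

    The top row gets f_high, the bottom row f_low, with rows spaced
    logarithmically in between; brighter pixels give louder tones.
    """
    n = len(column)
    tones = []
    for row, brightness in enumerate(column):
        position = 1.0 - row / (n - 1) if n > 1 else 1.0  # 1.0 at top, 0.0 at bottom
        freq = f_low * (f_high / f_low) ** position
        tones.append((freq, brightness / 255.0))
    return tones

def synthesize(tones, duration=0.05, rate=8000):
    """Render one column as a short burst: a sum of sine waves, one per pixel row."""
    n_samples = round(duration * rate)
    return [sum(amp * math.sin(2 * math.pi * freq * t / rate) for freq, amp in tones)
            for t in range(n_samples)]

def image_to_audio(columns):
    """Scan the image left to right, appending one burst per column."""
    samples = []
    for column in columns:
        samples.extend(synthesize(column_to_tones(column)))
    return samples
```

Before playback the samples would need scaling to the output range, since the summed sine waves can exceed ±1.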

Machine olfaction is the automated simulation of the sense of smell. An emerging application in modern engineering, it involves the use of robots or other automated systems to analyze airborne chemicals. Such an apparatus is often called an electronic nose or e-nose. The development of machine olfaction is complicated by the fact that e-nose devices have so far responded to a limited number of chemicals, whereas odors are produced by unique sets of odorant compounds. The technology, though still in the early stages of development, promises many applications, such as quality control in food processing, detection and diagnosis in medicine, detection of drugs, explosives, and other dangerous or illegal substances, disaster response, and environmental monitoring.

An electronic nose is an electronic sensing device intended to detect odors or flavors. The expression "electronic sensing" refers to the capability of reproducing human senses using sensor arrays and pattern recognition systems.
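
The pattern-recognition half of an electronic nose can be as simple as a nearest-centroid classifier over the sensor array's response vectors. The sketch below assumes a hypothetical four-sensor array and invented training readings; real instruments typically add preprocessing (such as baseline correction and normalization) and more capable classifiers.

```python
import math

def centroid(samples):
    """Average several training responses of the sensor array, element by element."""
    return [sum(values) / len(values) for values in zip(*samples)]

def train(labelled):
    """labelled maps an odor name to a list of response vectors."""
    return {odor: centroid(samples) for odor, samples in labelled.items()}

def classify(model, response):
    """Return the odor whose centroid is nearest (Euclidean distance) to the response."""
    return min(model, key=lambda odor: math.dist(model[odor], response))

# Hypothetical readings from a 4-sensor array (arbitrary units).
training = {
    "coffee": [[0.9, 0.2, 0.4, 0.1], [1.0, 0.3, 0.5, 0.1]],
    "vanilla": [[0.2, 0.8, 0.1, 0.6], [0.3, 0.9, 0.2, 0.5]],
}
model = train(training)
print(classify(model, [0.95, 0.25, 0.45, 0.2]))  # coffee
```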

The electronic tongue is an instrument that measures and compares tastes. According to an IUPAC technical report, an “electronic tongue” is an analytical instrument that includes an array of non-selective chemical sensors with partial specificity to different solution components and an appropriate pattern recognition instrument, capable of recognizing the quantitative and qualitative compositions of simple and complex solutions.
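
For the quantitative side, one simple approach assumes each cross-sensitive sensor responds linearly and additively to the solution components, so the readings satisfy r ≈ S c for a sensitivity matrix S measured during calibration. With more sensors than components, the concentrations c can be estimated by least squares. The matrix values and component labels below are hypothetical, practical instruments often use more elaborate multivariate methods, and the qualitative (classification) side is not covered here.

```python
def solve(matrix, rhs):
    """Solve a small square linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [list(row) + [b] for row, b in zip(matrix, rhs)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x

def estimate_concentrations(sensitivity, readings):
    """Least-squares estimate of concentrations c from readings r ≈ S c.

    sensitivity: one row per sensor, one column per solution component.
    Solves the normal equations (S^T S) c = S^T r.
    """
    cols = range(len(sensitivity[0]))
    gram = [[sum(row[i] * row[j] for row in sensitivity) for j in cols] for i in cols]
    projected = [sum(row[i] * r for row, r in zip(sensitivity, readings)) for i in cols]
    return solve(gram, projected)

# Hypothetical sensitivities of three cross-sensitive sensors (rows)
# to two solution components (columns), e.g. a salt and an acid.
S = [
    [1.0, 0.2],
    [0.5, 0.9],
    [0.1, 1.0],
]
true_c = [2.0, 1.0]
readings = [sum(s * c for s, c in zip(row, true_c)) for row in S]
print([round(v, 6) for v in estimate_concentrations(S, readings)])  # [2.0, 1.0]
```

The normal equations keep the sketch dependency-free but can be numerically ill-conditioned; for larger problems a QR- or SVD-based solver such as `numpy.linalg.lstsq` is the safer choice.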

Machine perception is the capability of a computer system to interpret data in a manner similar to the way humans use their senses to relate to the world around them. Computers take in and respond to their environment primarily through attached hardware. Until recently, input was limited to a keyboard or a mouse, but advances in both hardware and software have allowed computers to take in sensory input in a way similar to humans.

Tactile discrimination is the ability to differentiate information through the sense of touch. The somatosensory system is the nervous system pathway responsible for this ability, which is essential for survival and adaptation. There are various types of tactile discrimination. One of the best known and most researched is two-point discrimination, the ability to differentiate between two tactile stimuli that are relatively close together. Other types, such as graphesthesia and spatial discrimination, also exist but are not as extensively researched. Tactile discrimination can be stronger or weaker in different people, and two major conditions, chronic pain and blindness, can affect it greatly. Blindness increases tactile discrimination ability, which is extremely helpful for tasks like reading braille. In contrast, chronic pain conditions, such as arthritis, decrease a person's tactile discrimination. Another major application of tactile discrimination is in new prosthetics and robotics that attempt to mimic the abilities of the human hand. In these systems, tactile sensors function similarly to the mechanoreceptors in a human hand, differentiating tactile stimuli.
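
In a robotic fingertip, two-point discrimination can be mimicked with a row of tactile sensing elements (taxels). This hypothetical sketch assumes each point contact excites nearby taxels with a Gaussian falloff, loosely analogous to overlapping receptive fields, and decides how many points were touched by counting local maxima in the readings. The taxel spacing, spread, and threshold are illustrative values, not measurements of human skin or of any real sensor.

```python
import math

def taxel_readings(contacts, n_taxels=20, spacing_mm=1.0, spread_mm=1.5):
    """Simulate one row of taxels touched at the given contact positions (mm).

    Each contact excites every taxel with a Gaussian falloff of width
    spread_mm; contributions from several contacts simply add up.
    """
    readings = []
    for i in range(n_taxels):
        x = i * spacing_mm  # position of taxel i along the row
        readings.append(sum(math.exp(-((x - p) ** 2) / (2 * spread_mm ** 2))
                            for p in contacts))
    return readings

def count_points(readings, min_height=0.5):
    """Count interior local maxima above min_height (edge taxels are skipped).

    Using '>' on the left and '>=' on the right counts a flat-topped peak once.
    """
    peaks = 0
    for i in range(1, len(readings) - 1):
        left, here, right = readings[i - 1], readings[i], readings[i + 1]
        if here >= min_height and here > left and here >= right:
            peaks += 1
    return peaks

print(count_points(taxel_readings([8.0, 12.0])),   # contacts 4 mm apart
      count_points(taxel_readings([9.0, 11.0])))   # contacts 2 mm apart
# 2 1
```

In this idealized continuous model, two equal contacts produce two separate peaks only when they are more than twice the spread apart, so a narrower spread or denser taxels lowers the discrimination threshold.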

Haptic perception literally means the ability "to grasp something". Perception in this case is achieved through the active exploration of surfaces and objects by a moving subject, as opposed to passive contact by a static subject during tactile perception.

The sense of smell, or olfaction, is the special sense through which smells are perceived. The sense of smell has many functions, including detecting desirable foods, hazards, and pheromones, and plays a role in taste.

In physiology, the somatosensory system is the network of neural structures in the brain and body that produce the perception of touch, as well as temperature (thermoception), body position (proprioception), and pain. It is a subset of the sensory nervous system, which also represents visual, auditory, olfactory, and gustatory stimuli.

A sense is a biological system used by an organism for sensation, the process of gathering information about the world through the detection of stimuli. Although in some cultures five human senses were traditionally identified as such, it is now recognized that there are many more. Senses used by non-human organisms are even greater in variety and number. During sensation, sense organs collect various stimuli for transduction, meaning transformation into a form that can be understood by the brain. Sensation and perception are fundamental to nearly every aspect of an organism's cognition, behavior and thought.

A tactile sensor is a device that measures information arising from physical interaction with its environment. Tactile sensors are generally modeled after the biological sense of cutaneous touch, which is capable of detecting stimuli resulting from mechanical stimulation, temperature, and pain. Tactile sensors are used in robotics, computer hardware, and security systems. A common application of tactile sensors is in the touchscreens of mobile phones and computers.
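
A touchscreen or robotic tactile array reports a grid of readings, and a common first step is to reduce that grid to a contact location and a total load. This sketch computes a pressure-weighted centroid; the threshold, grid, and units are hypothetical, and it is not a description of how any particular touch controller works. It also assumes a single contact, since several simultaneous touches would first need to be separated into regions.

```python
def contact_summary(pressure, threshold=0.05):
    """Summarize a 2-D tactile pressure map (rows x cols, arbitrary units).

    Returns (total_load, (row, col) centroid), or (0.0, None) when no
    reading exceeds the contact threshold.
    """
    total = 0.0
    row_moment = 0.0
    col_moment = 0.0
    for r, row in enumerate(pressure):
        for c, value in enumerate(row):
            if value > threshold:  # ignore baseline noise below the threshold
                total += value
                row_moment += r * value
                col_moment += c * value
    if total == 0.0:
        return 0.0, None
    return total, (row_moment / total, col_moment / total)

grid = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
print(contact_summary(grid))  # (4.0, (1.5, 1.5))
```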

Sensory design aims to establish an overall diagnosis of the sensory perceptions of a product, and define appropriate means to design or redesign it on that basis. It involves an observation of the diverse and varying situations in which a given product or object is used in order to measure the users' overall opinion of the product, its positive and negative aspects in terms of tactility, appearance, sound and so on.

Electronic skin refers to flexible, stretchable and self-healing electronics that are able to mimic functionalities of human or animal skin. This broad class of materials often incorporates sensing capabilities intended to reproduce the ability of human skin to respond to environmental factors such as changes in heat and pressure.

Soft robotics is a subfield of robotics that concerns the design, control, and fabrication of robots composed of compliant materials, instead of rigid links. In contrast to rigid-bodied robots built from metals, ceramics and hard plastics, the compliance of soft robots can improve their safety when working in close contact with humans.

A chemical sensor array is a sensor architecture with multiple sensor components that create a pattern for analyte detection from the additive responses of individual sensor components. There exist several types of chemical sensor arrays including electronic, optical, acoustic wave, and potentiometric devices. These chemical sensor arrays can employ multiple sensor types that are cross-reactive or tuned to sense specific analytes.
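
Because the array's responses are additive, its response to a mixture can be modeled as the concentration-weighted sum of per-analyte "fingerprints", and a single analyte can be identified from the shape of the pattern regardless of its concentration. The sketch below assumes hypothetical fingerprints for a four-element cross-reactive array and uses cosine similarity, which ignores overall magnitude. It identifies single analytes only; resolving mixtures would need an additional step such as a least-squares decomposition.

```python
import math

# Hypothetical response fingerprints of a 4-element cross-reactive array
# to a unit concentration of each analyte (arbitrary units).
FINGERPRINTS = {
    "ethanol": [0.8, 0.4, 0.1, 0.3],
    "ammonia": [0.1, 0.2, 0.9, 0.4],
    "acetone": [0.5, 0.9, 0.2, 0.1],
}

def array_response(concentrations):
    """Additive model: the array pattern is the concentration-weighted sum of fingerprints."""
    n = len(next(iter(FINGERPRINTS.values())))
    pattern = [0.0] * n
    for analyte, conc in concentrations.items():
        for i, v in enumerate(FINGERPRINTS[analyte]):
            pattern[i] += conc * v
    return pattern

def cosine(a, b):
    """Cosine of the angle between two response vectors (1.0 means same shape)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def identify(pattern):
    """Pick the analyte whose fingerprint points in the most similar direction."""
    return max(FINGERPRINTS, key=lambda a: cosine(FINGERPRINTS[a], pattern))

print(identify(array_response({"ammonia": 3.0})))  # ammonia
```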