History

The specific design of the elements of the Continuous Media Web project was devised by Silvia Pfeiffer and Conrad Parker at CSIRO Australia in mid-2001. Some of the ideas behind CMML and the generic addressing of temporal offsets were proposed in a 1997 paper by Bill Simpson-Young and Ken Yap.

In January 2002 the Annodex team took on two students, Andrew Nesbit and Andre Pang, along with Simon Lai, who became the first person to author meaningful content in CMML. During this time the basics of the Annodex technology were designed, including temporal URI fragments, the basic DTDs, the choice of the Ogg encapsulation format and the initial design of the libraries.

By late 2004, Andre Pang had developed the Annodex plug-in for the Mozilla Firefox browser, allowing playback of Annodex media encoded with the Ogg Theora video codec and the Ogg Vorbis audio codec. Time URIs, implemented at the Location Bar, provide server-side seeking on Annodex media and enable hyperlinking into and out of Annodex media through a table-of-contents clip list for CMML content.
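As a rough illustration of server-side seeking with a time URI, the sketch below extracts a time offset from a URI query. It assumes a query of the form `?t=npt:15.2` or `?t=0:12:30` (normal play time given in seconds or as hours:minutes:seconds); the function name `parse_time_offset` and the example host are hypothetical, and the code covers only this simplified case rather than the full temporal URI syntax.

```python
from urllib.parse import urlsplit, parse_qs


def parse_time_offset(uri):
    """Return the offset in seconds from a `t=` query parameter, or None.

    Assumption: only the normal-play-time form is handled, written either
    as plain seconds ("15.2") or as colon-separated fields ("0:12:30"),
    with an optional "npt:" prefix.
    """
    values = parse_qs(urlsplit(uri).query).get("t")
    if not values:
        return None

    spec = values[0]
    if spec.startswith("npt:"):
        spec = spec[len("npt:"):]

    parts = spec.split(":")
    if len(parts) > 3:
        raise ValueError("expected at most hours:minutes:seconds")

    # Fold the fields left to right: each step shifts by a factor of 60.
    seconds = 0.0
    for part in parts:
        seconds = seconds * 60 + float(part)
    return seconds


if __name__ == "__main__":
    print(parse_time_offset("http://example.com/talk.anx?t=npt:15.2"))
    print(parse_time_offset("http://example.com/talk.anx?t=0:12:30"))
```

A server receiving such a request could use the returned offset to decide where in the media stream to begin sending data.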

Over time, the open-source community contributed increasingly to the development of Annodex technology, starting with Debian packages by Jamie Wilkinson, Python bindings by Ben Leslie, and Perl bindings by Angus Lees. The command-line authoring tools were completed early in 2001 and were continually updated, so that by 2005 they adhered to the then-current Version 3 of the Annodex annotation standards. [1]

In November 2005, CSIRO wanted to focus on closed-source research and to build existing products on top of the technology, and so lost interest in its open-source standard components. A decision was therefore made to separate the open-source components into their own organisation by creating an Annodex Foundation, similar in spirit to the many foundations that have been created around other FOSS technologies. [2]

A codec is a device or computer program which encodes or decodes a digital data stream or signal. Codec is a portmanteau of coder-decoder.

Ogg is a free, open container format maintained by the Xiph.Org Foundation. The creators of the Ogg format state that it is unrestricted by software patents and is designed to provide for efficient streaming and manipulation of high-quality digital multimedia. Its name is derived from "ogging", jargon from the computer game Netrek.

Vorbis is a free and open-source software project headed by the Xiph.Org Foundation. The project produces an audio coding format and software reference encoder/decoder (codec) for lossy audio compression. Vorbis is most commonly used in conjunction with the Ogg container format and it is therefore often referred to as Ogg Vorbis.

Windows Media Video (WMV) is a series of video codecs and their corresponding video coding formats developed by Microsoft. It is part of the Windows Media framework. WMV consists of three distinct codecs. The original video compression technology, known as WMV, was designed for Internet streaming applications as a competitor to RealVideo. The other compression technologies, WMV Screen and WMV Image, cater for specialized content. After standardization by the Society of Motion Picture and Television Engineers (SMPTE), WMV version 9 was adapted for physical-delivery formats such as HD DVD and Blu-ray Disc and became known as VC-1. Microsoft also developed a digital container format called Advanced Systems Format to store video encoded by Windows Media Video.

Xiph.Org Foundation is a nonprofit organization that produces free multimedia formats and software tools. It focuses on the Ogg family of formats, the most successful of which has been Vorbis, an open and freely licensed audio format and codec designed to compete with the patented WMA, MP3 and AAC. As of 2013, development work was focused on Daala, an open and patent-free video format and codec designed to compete with VP9 and the patented High Efficiency Video Coding.

Nullsoft Streaming Video (NSV) was a media container designed for streaming video content over the Internet. NSV was developed by Nullsoft, the makers of Winamp.

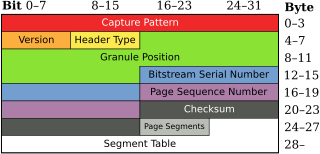

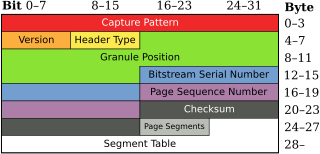

An Ogg page is a unit of data in an Ogg bitstream, usually between 4 kB and 8 kB, with a maximum size of 65,307 bytes.
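The maximum page size follows from the Ogg page layout: a 27-byte fixed header, a segment table of up to 255 one-byte lacing values, and a payload of up to 255 bytes per segment. The sketch below works through that arithmetic; the constant and function names are illustrative and do not come from any Ogg library.

```python
HEADER_FIXED_BYTES = 27   # fixed part of the page header
MAX_SEGMENTS = 255        # the segment count is stored in a single byte
MAX_SEGMENT_BYTES = 255   # each lacing value is a single byte


def page_size(lacing_values):
    """Total size in bytes of a page with the given lacing values."""
    if len(lacing_values) > MAX_SEGMENTS:
        raise ValueError("a page holds at most 255 segments")
    if any(not 0 <= v <= MAX_SEGMENT_BYTES for v in lacing_values):
        raise ValueError("each lacing value must be in 0..255")
    # Header + one table byte per segment + the payload bytes themselves.
    return HEADER_FIXED_BYTES + len(lacing_values) + sum(lacing_values)


def max_ogg_page_size():
    """Largest possible page: 255 segments of 255 bytes each."""
    return page_size([MAX_SEGMENT_BYTES] * MAX_SEGMENTS)


if __name__ == "__main__":
    # 27 + 255 + 255 * 255 = 65307
    print(max_ogg_page_size())
```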

A container format belongs to a class of computer files that exist to allow multiple data streams to be embedded into a single file, usually along with metadata for identifying and further detailing those streams. Notable examples of container formats include archive files (such as the ZIP format) and formats used for multimedia playback. Among the earliest cross-platform container formats were Distinguished Encoding Rules and the 1985 Interchange File Format.

This table compares many features of container formats. To see which multimedia players support which container format, look at comparison of media players.

MPEG-4 Part 14 or MP4 is a digital multimedia container format most commonly used to store video and audio, but it can also be used to store other data such as subtitles and still images. Like most modern container formats, it allows streaming over the Internet. The only official filename extension for MPEG-4 Part 14 files is .mp4. MPEG-4 Part 14 is a standard specified as a part of MPEG-4.

Continuous Media Markup Language (CMML) is to audio or video what HTML is to text. CMML is essentially a timed text codec. It allows file creators to structure a time-continuously sampled data file by dividing it into temporal sections, and provides these clips with some additional information. This information is HTML-like and is essentially a textual representation of the audio or video file. CMML enables textual searches on these otherwise binary files.
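The following sketch illustrates how clip descriptions can make a media file text-searchable. It uses a simplified, CMML-like document with `clip` elements that carry a `start` time and a `desc` child; the sample content and the helper `find_clips` are hypothetical, and the markup is an illustration rather than a schema-valid CMML file.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified CMML-like document for illustration only.
CMML_SAMPLE = """<cmml>
  <head><title>Sample talk</title></head>
  <clip id="intro" start="npt:0">
    <desc>Welcome and overview of the project</desc>
  </clip>
  <clip id="demo" start="npt:95.5">
    <desc>Live demo of temporal hyperlinks</desc>
  </clip>
</cmml>"""


def find_clips(cmml_text, term):
    """Return (clip id, start time) pairs whose description contains term."""
    root = ET.fromstring(cmml_text)
    needle = term.lower()
    hits = []
    for clip in root.iter("clip"):
        description = clip.findtext("desc", default="")
        if needle in description.lower():
            hits.append((clip.get("id"), clip.get("start")))
    return hits


if __name__ == "__main__":
    print(find_clips(CMML_SAMPLE, "demo"))
```

Each match carries the clip's start time, so a search result can point directly at the relevant temporal section of the audio or video.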

Clesh is a cloud-based video editing platform designed for consumers, prosumers, and online communities to integrate user-generated content. The core technology is based on FORscene, which is geared towards professionals working in fields such as broadcasting, news media, and post-production.

Metavid is a free-software wiki-based community archive project for audio video media. The site hosts public domain US legislative footage. It was started as a Digital Arts/New Media MFA thesis project of Michael Dale and Abram Stern under the advisement of Professor Warren Sack in late 2005 at the University of California, Santa Cruz. Its continued development is supported by a grant from the Sunlight Foundation. It works by using a "simple Linux box to record everything that C-SPAN shoots", which can then be used to provide "brief searchable clips using closed-captioning text".

The HTML5 draft specification adds video and audio elements for embedding video and audio in HTML documents. The specification had formerly recommended support for playback of Theora video and Vorbis audio encapsulated in Ogg containers to provide for easier distribution of audio and video over the internet by using open standards, but the recommendation was soon after dropped.

The HTML5 specification introduced the video element for the purpose of playing videos, partially replacing the object element. HTML5 video is intended by its creators to become the new standard way to show video on the web, instead of the previous de facto standard of using the proprietary Adobe Flash plugin, though early adoption was hampered by lack of agreement as to which video coding formats and audio coding formats should be supported in web browsers.

Opus is a lossy audio coding format developed by the Xiph.Org Foundation and standardized by the Internet Engineering Task Force, designed to efficiently code speech and general audio in a single format, while remaining low-latency enough for real-time interactive communication and low-complexity enough for low-end embedded processors. Opus replaces both Vorbis and Speex for new applications, and several blind listening tests have ranked it higher-quality than any other standard audio format at any given bitrate until transparency is reached, including MP3, AAC, and HE-AAC.

A video coding format is a content representation format for storage or transmission of digital video content. It typically uses a standardized video compression algorithm, most commonly based on discrete cosine transform (DCT) coding and motion compensation. Examples of video coding formats include H.262, MPEG-4 Part 2, H.264, HEVC (H.265), Theora, RealVideo RV40, VP9, and AV1. A specific software or hardware implementation capable of compression or decompression to or from a specific video coding format is called a video codec; an example is Xvid, one of several codecs that implement encoding and decoding of video in the MPEG-4 Part 2 video coding format in software.

HTML5 Audio is a subject of the HTML5 specification, incorporating audio input, playback, and synthesis, as well as speech to text, in the browser.

An online video platform (OVP), provided by a video hosting service, enables users to upload, convert, store and play back video content on the Internet, often via a structured, large-scale system that may generate revenue. Users generally upload video content via the hosting service's website, mobile or desktop application, or other interface (API). The type of video content uploaded might be anything from shorts to full-length TV shows and movies. The video host stores the video on its server and offers users different types of embed codes or links that allow others to view the video content. A website used mainly for video hosting is usually called a video sharing website.