Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

The RenderMan Interface Specification, or RISpec in short, is an open API developed by Pixar Animation Studios to describe three-dimensional scenes and turn them into digital photorealistic images. It includes the RenderMan Shading Language.

The Phong reflection model is an empirical model of the local illumination of points on a surface designed by the computer graphics researcher Bui Tuong Phong. In 3D computer graphics, it is sometimes referred to as "Phong shading", particularly if the model is used with the interpolation method of the same name and in the context of pixel shaders or other places where a lighting calculation can be referred to as “shading”.

In 3D computer graphics, Phong shading, Phong interpolation, or normal-vector interpolation shading is an interpolation technique for surface shading invented by computer graphics pioneer Bui Tuong Phong. Phong shading interpolates surface normals across rasterized polygons and computes pixel colors based on the interpolated normals and a reflection model. Phong shading may also refer to the specific combination of Phong interpolation and the Phong reflection model.

Shading refers to the depiction of depth perception in 3D models or illustrations by varying the level of darkness. Shading tries to approximate local behavior of light on the object's surface and is not to be confused with techniques of adding shadows, such as shadow mapping or shadow volumes, which fall under global behavior of light.

Diffuse reflection is the reflection of light or other waves or particles from a surface such that a ray incident on the surface is scattered at many angles rather than at just one angle as in the case of specular reflection. An ideal diffuse reflecting surface is said to exhibit Lambertian reflection, meaning that there is equal luminance when viewed from all directions lying in the half-space adjacent to the surface.

A shading language is a graphics programming language adapted to programming shader effects. Shading languages usually consist of special data types like "vector", "matrix", "color" and "normal".

Subsurface scattering (SSS), also known as subsurface light transport (SSLT), is a mechanism of light transport in which light that penetrates the surface of a translucent object is scattered by interacting with the material and exits the surface at a different point. The light will generally penetrate the surface and be reflected a number of times at irregular angles inside the material before passing back out of the material at a different angle than it would have had if it had been reflected directly off the surface.

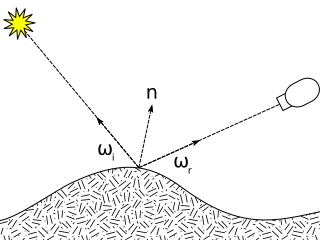

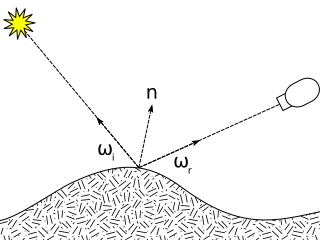

The bidirectional reflectance distribution function (BRDF), symbol , is a function of four real variables that defines how light is reflected at an opaque surface. It is employed in the optics of real-world light, in computer graphics algorithms, and in computer vision algorithms. The function takes an incoming light direction, , and outgoing direction, , and returns the ratio of reflected radiance exiting along to the irradiance incident on the surface from direction . Each direction is itself parameterized by azimuth angle and zenith angle , therefore the BRDF as a whole is a function of 4 variables. The BRDF has units sr−1, with steradians (sr) being a unit of solid angle.

Path tracing is a computer graphics Monte Carlo method of rendering images of three-dimensional scenes such that the global illumination is faithful to reality. Fundamentally, the algorithm is integrating over all the illuminance arriving to a single point on the surface of an object. This illuminance is then reduced by a surface reflectance function (BRDF) to determine how much of it will go towards the viewpoint camera. This integration procedure is repeated for every pixel in the output image. When combined with physically accurate models of surfaces, accurate models of real light sources, and optically correct cameras, path tracing can produce still images that are indistinguishable from photographs.

In computer graphics, per-pixel lighting refers to any technique for lighting an image or scene that calculates illumination for each pixel on a rendered image. This is in contrast to other popular methods of lighting such as vertex lighting, which calculates illumination at each vertex of a 3D model and then interpolates the resulting values over the model's faces to calculate the final per-pixel color values.

3D rendering is the 3D computer graphics process of converting 3D models into 2D images on a computer. 3D renders may include photorealistic effects or non-photorealistic styles.

Patrick M. Hanrahan is an American computer graphics researcher, the Canon USA Professor of Computer Science and Electrical Engineering in the Computer Graphics Laboratory at Stanford University. His research focuses on rendering algorithms, graphics processing units, as well as scientific illustration and visualization. He has received numerous awards, including the 2019 Turing Award.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

Reflection in computer graphics is used to render reflective objects like mirrors and shiny surfaces.

Computer graphics is a sub-field of computer science which studies methods for digitally synthesizing and manipulating visual content. Although the term often refers to the study of three-dimensional computer graphics, it also encompasses two-dimensional graphics and image processing.

Panta Rhei is a video game engine developed by Capcom, for use with 8th generation consoles PlayStation 4, Xbox One; as a replacement for its previous MT Framework engine.

Matt Pharr is an American computer graphics researcher and writer, and one of the primary originators of the physically based rendering process. His research focuses on rendering algorithms, graphics processing units, as well as scientific illustration and visualization.

This is a glossary of terms relating to computer graphics.