In digital signal processing, spatial anti-aliasing is a technique for minimizing the distortion artifacts (aliasing) when representing a high-resolution image at a lower resolution. Anti-aliasing is used in digital photography, computer graphics, digital audio, and many other applications.

Texture mapping is a method for mapping a texture onto a computer-generated graphic. Texture here can be high-frequency detail, surface texture, or color.

Media player software is a type of application software for playing multimedia computer files such as audio and video files. Media players commonly display standard media control icons familiar from physical devices such as tape recorders and CD players: play, pause, fast forward (⏩), rewind (⏪), and stop buttons. In addition, they generally have progress bars, which are sliders that show and set the current position within the duration of the media file.

Telecine is the process of transferring film into video and is performed in a color suite. The term is also used to refer to the equipment used in this post-production process.

In computer graphics, mipmaps or pyramids are pre-calculated, optimized sequences of images, each of which is a progressively lower resolution representation of the previous. The height and width of each image, or level, in the mipmap is a factor of two smaller than the previous level. Mipmaps do not have to be square. They are intended to increase rendering speed and reduce aliasing artifacts. A high-resolution mipmap image is used for high-density samples, such as for objects close to the camera; lower-resolution images are used as the object appears farther away. This is a more efficient way of downfiltering (minifying) a texture than sampling all texels in the original texture that would contribute to a screen pixel; it is faster to take a constant number of samples from the appropriately downfiltered textures. Mipmaps are widely used in 3D computer games, flight simulators, other 3D imaging systems for texture filtering, and 2D and 3D GIS software. Their use is known as mipmapping. The letters MIP in the name are an acronym of the Latin phrase multum in parvo, meaning "much in little".
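
As a rough illustration of the pre-calculated sequence described above, the sketch below builds a mipmap chain for a small grayscale image stored as a list of rows. The function names `downsample_2x` and `build_mipmaps` are hypothetical, and the 2×2 box filter is only one possible downfiltering choice; real graphics APIs may filter differently. Odd dimensions are rounded down here, which is an assumption of this sketch.

```python
def downsample_2x(image):
    """Halve width and height by averaging each 2x2 block (a simple box filter)."""
    h, w = len(image), len(image[0])
    new_h, new_w = max(h // 2, 1), max(w // 2, 1)
    out = []
    for y in range(new_h):
        row = []
        for x in range(new_w):
            # Clamp indices so images that are one texel wide or high still work.
            y0, y1 = min(2 * y, h - 1), min(2 * y + 1, h - 1)
            x0, x1 = min(2 * x, w - 1), min(2 * x + 1, w - 1)
            total = image[y0][x0] + image[y0][x1] + image[y1][x0] + image[y1][x1]
            row.append(total / 4.0)
        out.append(row)
    return out


def build_mipmaps(image):
    """Return [level 0, level 1, ...], stopping at a 1x1 image."""
    levels = [image]
    while len(levels[-1]) > 1 or len(levels[-1][0]) > 1:
        levels.append(downsample_2x(levels[-1]))
    return levels


# A non-square 4x2 texture: mipmaps do not have to be square.
texture = [[0, 4, 8, 12],
           [4, 8, 12, 16]]
levels = build_mipmaps(texture)
sizes = [(len(level[0]), len(level)) for level in levels]  # (width, height)
print(sizes)  # [(4, 2), (2, 1), (1, 1)]
```

Because every level is computed once ahead of time, a renderer can later pick the level whose resolution matches the on-screen size and take a constant number of samples from it.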

In video technology, 24p refers to a video format that operates at a frame rate of 24 frames per second with progressive scanning. Originally, 24p was used in the non-linear editing of film-originated material. Today, 24p formats are increasingly used for aesthetic reasons in image acquisition, delivering film-like motion characteristics. Some vendors advertise 24p products as a cheaper alternative to film acquisition.

Deinterlacing is the process of converting interlaced video into a non-interlaced or progressive form. Interlaced video signals are commonly found in analog television, VHS, Laserdisc, digital television (HDTV) when in the 1080i format, some DVD titles, and a smaller number of Blu-ray discs.
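
To make the conversion concrete, here is a minimal sketch of two commonly described deinterlacing approaches, weave and bob, applied to fields stored as lists of scan lines. The function names and the data layout are assumptions made for illustration; practical deinterlacers are considerably more elaborate (for example, they may adapt to motion).

```python
def weave(top_field, bottom_field):
    """Interleave two fields into one progressive frame (top field line first)."""
    frame = []
    for top_line, bottom_line in zip(top_field, bottom_field):
        frame.append(list(top_line))
        frame.append(list(bottom_line))
    return frame


def bob(field):
    """Expand a single field to full height by interpolating the missing lines."""
    frame = []
    for i, line in enumerate(field):
        # The last line has no successor, so it is simply repeated.
        next_line = field[min(i + 1, len(field) - 1)]
        frame.append(list(line))
        frame.append([(a + b) / 2 for a, b in zip(line, next_line)])
    return frame


top = [[1, 1], [3, 3]]      # scan lines 0 and 2
bottom = [[2, 2], [4, 4]]   # scan lines 1 and 3
woven = weave(top, bottom)
bobbed = bob([[0, 0], [10, 10]])
```

Weave keeps full vertical detail but shows combing when the two fields were captured at different moments; bob avoids combing at the cost of vertical resolution.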

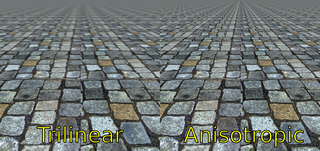

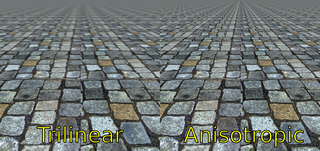

In 3D computer graphics, anisotropic filtering is a method of enhancing the image quality of textures on surfaces that are at oblique viewing angles with respect to the camera, where the projection of the texture appears to be non-orthogonal.

In mathematics, bilinear interpolation is a method for interpolating functions of two variables using repeated linear interpolation. It is usually applied to functions sampled on a 2D rectilinear grid, though it can be generalized to functions defined on the vertices of arbitrary convex quadrilaterals.
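
The repeated linear interpolation can be sketched as follows for values sampled on a regular grid with unit spacing. The function name `bilinear` and the choice to clamp out-of-range coordinates to the grid edge are assumptions of this example, not part of the definition.

```python
def bilinear(grid, x, y):
    """Interpolate grid[row][col] at fractional column x and row y."""
    h, w = len(grid), len(grid[0])
    # Clamp to the sampled region (an assumption of this sketch).
    x = min(max(x, 0.0), w - 1)
    y = min(max(y, 0.0), h - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    tx, ty = x - x0, y - y0
    # First interpolate along x on the two neighbouring rows...
    top = grid[y0][x0] * (1 - tx) + grid[y0][x1] * tx
    bottom = grid[y1][x0] * (1 - tx) + grid[y1][x1] * tx
    # ...then interpolate those two results along y.
    return top * (1 - ty) + bottom * ty


grid = [[0, 10],
        [20, 30]]
center = bilinear(grid, 0.5, 0.5)  # midpoint of the four samples: 15.0
```

Interpolating along y first and then along x gives the same result; the order of the two linear steps does not matter.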

In computer graphics, texture filtering or texture smoothing is the method used to determine the texture color for a texture-mapped pixel, using the colors of nearby texels.

Trilinear filtering is an extension of the bilinear texture filtering method, which also performs linear interpolation between mipmaps.
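
A minimal sketch of the idea, assuming a list of mipmap levels (`mips`, largest first) and a fractional level of detail `lod`: each of the two nearest levels is sampled bilinearly, and the two results are then blended linearly. The names, the normalized `u, v` convention, and the simplified texel addressing (`u * (width - 1)`) are all assumptions of this example; GPUs use their own addressing and level-selection rules.

```python
def sample_bilinear(level, u, v):
    """Bilinearly sample one mip level at normalized coordinates u, v in [0, 1]."""
    h, w = len(level), len(level[0])
    x, y = u * (w - 1), v * (h - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    tx, ty = x - x0, y - y0
    top = level[y0][x0] * (1 - tx) + level[y0][x1] * tx
    bottom = level[y1][x0] * (1 - tx) + level[y1][x1] * tx
    return top * (1 - ty) + bottom * ty


def sample_trilinear(mips, u, v, lod):
    """Blend bilinear samples from the two mip levels surrounding lod."""
    lod = min(max(lod, 0.0), len(mips) - 1)
    lower = int(lod)
    upper = min(lower + 1, len(mips) - 1)
    t = lod - lower
    a = sample_bilinear(mips[lower], u, v)
    b = sample_bilinear(mips[upper], u, v)
    return a * (1 - t) + b * t


mips = [
    [[0, 10], [20, 30]],  # level 0: 2x2
    [[15]],               # level 1: 1x1
]
halfway = sample_trilinear(mips, 0.0, 0.0, 0.5)  # 0 and 15 blended equally: 7.5
```

Blending between levels is what removes the visible seam that would otherwise appear where a renderer switches abruptly from one mipmap level to the next.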

In computer graphics, a shader is a computer program that calculates the appropriate levels of light, darkness, and color during the rendering of a 3D scene—a process known as shading. Shaders have evolved to perform a variety of specialized functions in computer graphics special effects and video post-processing, as well as general-purpose computing on graphics processing units.

Pixel art scaling algorithms are graphical filters that attempt to enhance the appearance of hand-drawn 2D pixel art graphics. These algorithms are a form of automatic image enhancement. Pixel art scaling algorithms employ methods significantly different from the common methods of image rescaling, which have the goal of preserving the appearance of images.

In computer graphics and digital imaging, image scaling refers to the resizing of a digital image. In video technology, the magnification of digital material is known as upscaling or resolution enhancement.

Bloom is a computer graphics effect used in video games, demos, and high-dynamic-range rendering (HDRR) to reproduce an imaging artifact of real-world cameras. The effect produces fringes of light extending from the borders of bright areas in an image, contributing to the illusion of an extremely bright light overwhelming the camera or eye capturing the scene. It became widely used in video games after an article on the technique was published by the authors of Tron 2.0 in 2004.

In numerical analysis, multivariate interpolation is interpolation on functions of more than one variable; when the variates are spatial coordinates, it is also known as spatial interpolation.

Display motion blur, also called HDTV blur and LCD motion blur, refers to several visual artifacts that are frequently found on modern consumer high-definition television sets and flat panel displays for computers.

Intel 2700G is a low power graphics co-processor for the XScale PXA27x processor, announced on April 12, 2004. It is built on both the PowerVR MBX Lite chip design and on the MVED1 video encoder/decoder technology.

2D to 3D video conversion is the process of transforming 2D ("flat") film to 3D form, which in almost all cases is stereo, so it is the process of creating imagery for each eye from one 2D image.

This is a glossary of terms relating to computer graphics.