In signal processing, a digital filter is a system that performs mathematical operations on a sampled, discrete-time signal to reduce or enhance certain aspects of that signal. This is in contrast to the other major type of electronic filter, the analog filter, which is typically an electronic circuit operating on continuous-time analog signals.

A wavelet is a wave-like oscillation with an amplitude that begins at zero, increases or decreases, and then returns to zero one or more times. Wavelets are termed a "brief oscillation". A taxonomy of wavelets has been established, based on the number and direction of its pulses. Wavelets are imbued with specific properties that make them useful for signal processing.

An adaptive filter is a system with a linear filter that has a transfer function controlled by variable parameters and a means to adjust those parameters according to an optimization algorithm. Because of the complexity of the optimization algorithms, almost all adaptive filters are digital filters. Adaptive filters are required for some applications because some parameters of the desired processing operation are not known in advance or are changing. The closed loop adaptive filter uses feedback in the form of an error signal to refine its transfer function.

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response is of finite duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely.

In signal processing, specifically control theory, bounded-input, bounded-output (BIBO) stability is a form of stability for signals and systems that take inputs. If a system is BIBO stable, then the output will be bounded for every input to the system that is bounded.

Stochastic gradient descent is an iterative method for optimizing an objective function with suitable smoothness properties. It can be regarded as a stochastic approximation of gradient descent optimization, since it replaces the actual gradient by an estimate thereof. Especially in high-dimensional optimization problems this reduces the very high computational burden, achieving faster iterations in exchange for a lower convergence rate.

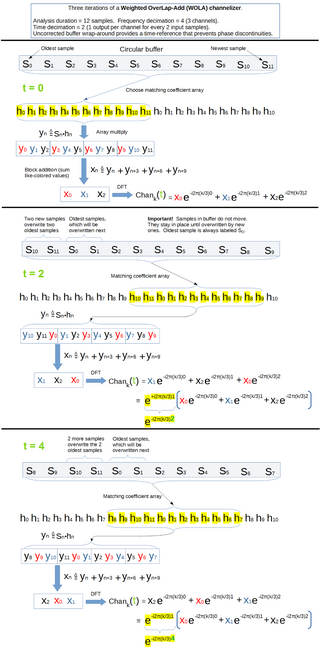

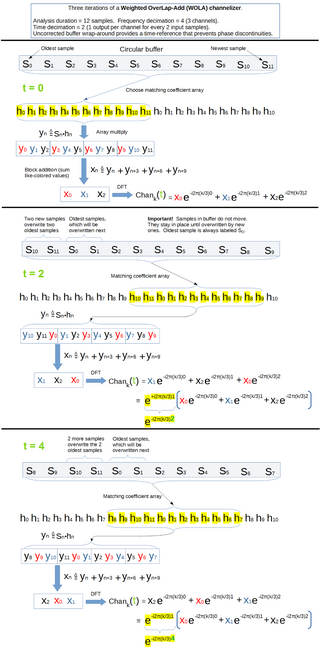

In signal processing, a filter bank is an array of bandpass filters that separates the input signal into multiple components, each one carrying a sub-band of the original signal. One application of a filter bank is a graphic equalizer, which can attenuate the components differently and recombine them into a modified version of the original signal. The process of decomposition performed by the filter bank is called analysis ; the output of analysis is referred to as a subband signal with as many subbands as there are filters in the filter bank. The reconstruction process is called synthesis, meaning reconstitution of a complete signal resulting from the filtering process.

Least mean squares (LMS) algorithms are a class of adaptive filter used to mimic a desired filter by finding the filter coefficients that relate to producing the least mean square of the error signal. It is a stochastic gradient descent method in that the filter is only adapted based on the error at the current time. It was invented in 1960 by Stanford University professor Bernard Widrow and his first Ph.D. student, Ted Hoff, based on their research in single-layer neural networks (ADALINE). Specifically, they used gradient descent to train ADALINE to recognize patterns, and called the algorithm "delta rule". They then applied the rule to filters, resulting in the LMS algorithm.

Recursive least squares (RLS) is an adaptive filter algorithm that recursively finds the coefficients that minimize a weighted linear least squares cost function relating to the input signals. This approach is in contrast to other algorithms such as the least mean squares (LMS) that aim to reduce the mean square error. In the derivation of the RLS, the input signals are considered deterministic, while for the LMS and similar algorithms they are considered stochastic. Compared to most of its competitors, the RLS exhibits extremely fast convergence. However, this benefit comes at the cost of high computational complexity.

In mathematics, a wavelet series is a representation of a square-integrable function by a certain orthonormal series generated by a wavelet. This article provides a formal, mathematical definition of an orthonormal wavelet and of the integral wavelet transform.

Space-time adaptive processing (STAP) is a signal processing technique most commonly used in radar systems. It involves adaptive array processing algorithms to aid in target detection. Radar signal processing benefits from STAP in areas where interference is a problem. Through careful application of STAP, it is possible to achieve order-of-magnitude sensitivity improvements in target detection.

Mean shift is a non-parametric feature-space mathematical analysis technique for locating the maxima of a density function, a so-called mode-seeking algorithm. Application domains include cluster analysis in computer vision and image processing.

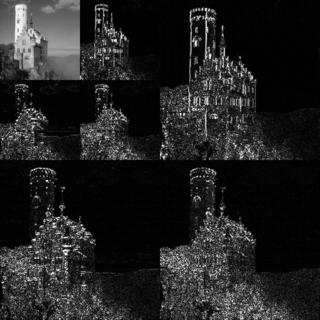

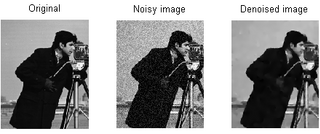

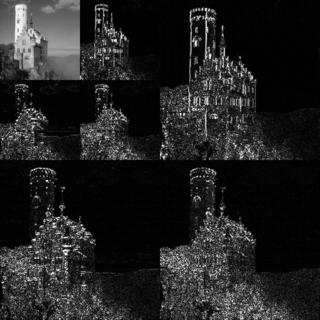

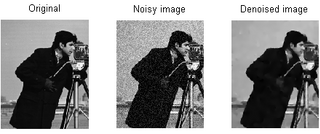

In signal processing, particularly image processing, total variation denoising, also known as total variation regularization or total variation filtering, is a noise removal process (filter). It is based on the principle that signals with excessive and possibly spurious detail have high total variation, that is, the integral of the image gradient magnitude is high. According to this principle, reducing the total variation of the signal—subject to it being a close match to the original signal—removes unwanted detail whilst preserving important details such as edges. The concept was pioneered by L. I. Rudin, S. Osher, and E. Fatemi in 1992 and so is today known as the ROF model.

Two dimensional filters have seen substantial development effort due to their importance and high applicability across several domains. In the 2-D case the situation is quite different from the 1-D case, because the multi-dimensional polynomials cannot in general be factored. This means that an arbitrary transfer function cannot generally be manipulated into a form required by a particular implementation. The input-output relationship of a 2-D IIR filter obeys a constant-coefficient linear partial difference equation from which the value of an output sample can be computed using the input samples and previously computed output samples. Because the values of the output samples are fed back, the 2-D filter, like its 1-D counterpart, can be unstable.

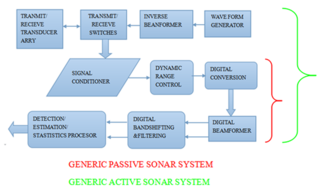

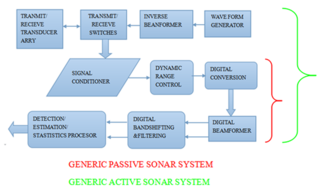

Sonar systems are generally used underwater for range finding and detection. Active sonar emits an acoustic signal, or pulse of sound, into the water. The sound bounces off the target object and returns an echo to the sonar transducer. Unlike active sonar, passive sonar does not emit its own signal, which is an advantage for military vessels. But passive sonar cannot measure the range of an object unless it is used in conjunction with other passive listening devices. Multiple passive sonar devices must be used for triangulation of a sound source. No matter whether active sonar or passive sonar, the information included in the reflected signal can not be used without technical signal processing. To extract the useful information from the mixed signal, some steps are taken to transfer the raw acoustic data.

This article provides a short survey of the concepts, principles and applications of Multirate filter banks and Multidimensional Directional filter banks.

In signal processing, multidimensional discrete convolution refers to the mathematical operation between two functions f and g on an n-dimensional lattice that produces a third function, also of n-dimensions. Multidimensional discrete convolution is the discrete analog of the multidimensional convolution of functions on Euclidean space. It is also a special case of convolution on groups when the group is the group of n-tuples of integers.

In signal processing, nonlinear multidimensional signal processing (NMSP) covers all signal processing using nonlinear multidimensional signals and systems. Nonlinear multidimensional signal processing is a subset of signal processing (multidimensional signal processing). Nonlinear multi-dimensional systems can be used in a broad range such as imaging, teletraffic, communications, hydrology, geology, and economics. Nonlinear systems cannot be treated as linear systems, using Fourier transformation and wavelet analysis. Nonlinear systems will have chaotic behavior, limit cycle, steady state, bifurcation, multi-stability and so on. Nonlinear systems do not have a canonical representation, like impulse response for linear systems. But there are some efforts to characterize nonlinear systems, such as Volterra and Wiener series using polynomial integrals as the use of those methods naturally extend the signal into multi-dimensions. Another example is the Empirical mode decomposition method using Hilbert transform instead of Fourier Transform for nonlinear multi-dimensional systems. This method is an empirical method and can be directly applied to data sets. Multi-dimensional nonlinear filters (MDNF) are also an important part of NMSP, MDNF are mainly used to filter noise in real data. There are nonlinear-type hybrid filters used in color image processing, nonlinear edge-preserving filters use in magnetic resonance image restoration. Those filters use both temporal and spatial information and combine the maximum likelihood estimate with the spatial smoothing algorithm.

In signal processing, multidimensional empirical mode decomposition is an extension of the one-dimensional (1-D) EMD algorithm to a signal encompassing multiple dimensions. The Hilbert–Huang empirical mode decomposition (EMD) process decomposes a signal into intrinsic mode functions combined with the Hilbert spectral analysis, known as the Hilbert–Huang transform (HHT). The multidimensional EMD extends the 1-D EMD algorithm into multiple-dimensional signals. This decomposition can be applied to image processing, audio signal processing, and various other multidimensional signals.

Parallelmultidimensionaldigitalsignal processing (mD-DSP) is defined as the application of parallel programming and multiprocessing to digital signal processing techniques to process digital signals that have more than a single dimension. The use of mD-DSP is fundamental to many application areas such as digital image and video processing, medical imaging, geophysical signal analysis, sonar, radar, lidar, array processing, computer vision, computational photography, and augmented and virtual reality. However, as the number of dimensions of a signal increases the computational complexity to operate on the signal increases rapidly. This relationship between the number of dimensions and the amount of complexity, related to both time and space, as studied in the field of algorithm analysis, is analogues to the concept of the curse of dimensionality. This large complexity generally results in an extremely long execution run-time of a given mD-DSP application rendering its usage to become impractical for many applications; especially for real-time applications. This long run-time is the primary motivation of applying parallel algorithmic techniques to mD-DSP problems.