Cluster sampling is a sampling plan used when mutually homogeneous yet internally heterogeneous groupings are evident in a statistical population. It is often used in marketing research. In this sampling plan, the total population is divided into these groups and a simple random sample of the groups is selected. The elements in each cluster are then sampled. If all elements in each sampled cluster are sampled, then this is referred to as a "one-stage" cluster sampling plan. If a simple random subsample of elements is selected within each of these groups, this is referred to as a "two-stage" cluster sampling plan. A common motivation for cluster sampling is to reduce the total number of interviews and costs given the desired accuracy. For a fixed sample size, the expected random error is smaller when most of the variation in the population is present internally within the groups, and not between the groups.
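The difference between the one-stage and two-stage plans described above can be sketched in a few lines of Python. This is only an illustration: the population of city blocks and households, the function names, and the sample sizes are all hypothetical and chosen for the example.

```python
import random


def one_stage_cluster_sample(clusters, n_clusters, rng):
    """Draw a simple random sample of clusters and keep every element in them."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {c: list(clusters[c]) for c in chosen}


def two_stage_cluster_sample(clusters, n_clusters, n_per_cluster, rng):
    """Draw a simple random sample of clusters, then a simple random
    subsample of elements within each chosen cluster."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {
        c: rng.sample(clusters[c], min(n_per_cluster, len(clusters[c])))
        for c in chosen
    }


# Hypothetical population: 6 city blocks (clusters) of 10 households each.
population = {
    f"block_{b}": [f"b{b}_h{h}" for h in range(10)] for b in range(6)
}

rng = random.Random(0)  # fixed seed so the draw is reproducible
one_stage = one_stage_cluster_sample(population, 2, rng)
two_stage = two_stage_cluster_sample(population, 2, 3, rng)
```

In the one-stage plan every household in the two selected blocks is interviewed (20 interviews); in the two-stage plan only three households per selected block are interviewed (6 interviews), which is where the cost saving comes from.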

Economic history is the study of economies or economic phenomena of the past. Analysis in economic history is undertaken using a combination of historical methods, statistical methods and the application of economic theory to historical situations and institutions. The topic includes financial and business history and overlaps with areas of social history such as demographic and labor history. The quantitative—in this case, econometric—study of economic history is also known as cliometrics.

An economist is a practitioner in the social science discipline of economics.

Behavioral economics studies the effects of psychological, cognitive, emotional, cultural and social factors on the economic decisions of individuals and institutions and how those decisions vary from those implied by classical theory.

Managerial economics deals with the application of economic concepts, theories, tools, and methodologies to solve practical problems in a business. It helps the manager in decision making and acts as a link between practice and theory. It is sometimes referred to as business economics and is a branch of economics that applies microeconomic analysis to decision methods of businesses or other management units.

Experimental economics is the application of experimental methods to study economic questions. Data collected in experiments are used to estimate effect size, test the validity of economic theories, and illuminate market mechanisms. Economic experiments usually use cash to motivate subjects, in order to mimic real-world incentives. Experiments are used to help understand how and why markets and other exchange systems function as they do. Experimental economics has also expanded to the study of institutions and the law.

Jerry Allen Hausman is the John and Jennie S. MacDonald Professor of Economics at the Massachusetts Institute of Technology and a notable econometrician. He has published numerous influential papers in microeconometrics. Hausman is the recipient of several prestigious awards including the John Bates Clark Medal in 1985 and the Frisch Medal in 1980.

Economic methodology is the study of methods, especially the scientific method, in relation to economics, including principles underlying economic reasoning. In contemporary English, 'methodology' may reference theoretical or systematic aspects of a method. Philosophy and economics also takes up methodology at the intersection of the two subjects.

Sir Richard William Blundell CBE FBA is a British economist and econometrician.

In statistics, the jackknife is a resampling technique especially useful for variance and bias estimation. The jackknife pre-dates other common resampling methods such as the bootstrap. The jackknife estimator of a parameter is found by systematically leaving out each observation from a dataset, calculating the estimate on the remaining observations, and then averaging these calculations. Given a sample of size $n$, the jackknife estimate is found by aggregating the estimates of each $(n-1)$-sized sub-sample.
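The leave-one-out procedure can be sketched as follows. The function name and the small data set are hypothetical; the bias and variance expressions are the standard jackknife formulas, which this summary does not itself spell out.

```python
from statistics import mean


def jackknife(data, estimator):
    """Return (jackknife estimate, bias estimate, variance estimate).

    The jackknife estimate is the average of the leave-one-out estimates.
    """
    n = len(data)
    # One estimate per (n-1)-sized sub-sample, each omitting observation i.
    leave_one_out = [estimator(data[:i] + data[i + 1:]) for i in range(n)]
    theta_full = estimator(data)
    theta_jack = mean(leave_one_out)
    # Standard jackknife bias and variance estimates.
    bias = (n - 1) * (theta_jack - theta_full)
    variance = (n - 1) / n * sum((t - theta_jack) ** 2 for t in leave_one_out)
    return theta_jack, bias, variance


# Hypothetical sample; the estimator here is the sample mean.
sample = [2.0, 4.0, 4.0, 5.0, 7.0]
theta_jack, bias, variance = jackknife(sample, mean)
```

For the sample mean, the jackknife variance estimate coincides with the usual estimate of the variance of the mean (the sample variance divided by $n$), which makes it a convenient sanity check before applying the same function to less tractable estimators.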

The Heckman correction is any of a number of related statistical methods developed by James Heckman at the University of Chicago from 1976 to 1979 which allow the researcher to correct for selection bias. Selection bias problems are endemic to applied econometric problems, which makes Heckman's original technique, and subsequent refinements by both himself and others, indispensable to applied econometricians. Heckman received the Economics Nobel Prize in 2000 for his work in this field.

Colin A. Carter is Professor of Agricultural and Resource Economics at the University of California, Davis. His research/teaching interests include international trade, futures markets, and commodity markets.

Applied mathematics is the application of mathematical methods by different fields such as science, engineering, business, computer science, and industry. Thus, applied mathematics is a combination of mathematical science and specialized knowledge. The term "applied mathematics" also describes the professional specialty in which mathematicians work on practical problems by formulating and studying mathematical models. In the past, practical applications have motivated the development of mathematical theories, which then became the subject of study in pure mathematics where abstract concepts are studied for their own sake. The activity of applied mathematics is thus intimately connected with research in pure mathematics.

In probability theory, a random variable Y is said to be mean independent of random variable X if and only if its conditional mean E(Y | X=x) equals its (unconditional) mean E(Y) for all x such that the probability that X = x is not zero. Y is said to be mean dependent on X if E(Y | X=x) is not constant for all x for which the probability is non-zero.
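A small exact computation may help make the definition concrete. The sketch below assumes a hypothetical discrete example: X takes the values 1 and 2 with equal probability, and Y = X·Z, where Z is ±1 with equal probability and independent of X. The helper names are invented for this illustration.

```python
from fractions import Fraction


def conditional_means(joint):
    """Return {x: E(Y | X=x)} for every x with P(X=x) > 0.

    `joint` maps (x, y) pairs to probabilities.
    """
    mass, weighted = {}, {}
    for (x, y), p in joint.items():
        mass[x] = mass.get(x, 0) + p
        weighted[x] = weighted.get(x, 0) + p * y
    return {x: weighted[x] / mass[x] for x in mass if mass[x] > 0}


def is_mean_independent(joint):
    """True if E(Y | X=x) equals E(Y) for all x with positive probability."""
    unconditional = sum(p * y for (_, y), p in joint.items())
    return all(m == unconditional for m in conditional_means(joint).values())


quarter = Fraction(1, 4)
# Joint distribution of (X, Y) with Y = X * Z.
joint_xy = {(1, -1): quarter, (1, 1): quarter, (2, -2): quarter, (2, 2): quarter}
# The same distribution with the roles swapped, i.e. pairs (Y, X).
joint_yx = {(y, x): p for (x, y), p in joint_xy.items()}

y_on_x = is_mean_independent(joint_xy)  # is Y mean independent of X?
x_on_y = is_mean_independent(joint_yx)  # is X mean independent of Y?
```

In this example Y is mean independent of X, because E(Y | X=x) = 0 for both values of x, yet Y is clearly not independent of X, since the possible values of Y depend on X. The relation is also not symmetric: E(X | Y=y) varies with y, so X is mean dependent on Y.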

Manuel Arellano is a Spanish economist specialising in econometrics and empirical microeconomics. Together with Stephen Bond, he developed the Arellano–Bond estimator, a widely used GMM estimator for panel data. He has been a professor of economics at CEMFI in Madrid since 1991.

Peter Jost Huber is a Swiss statistician. He is known for his contributions to the development of heteroscedasticity-consistent standard errors.

Maximum likelihood estimation with flow data is a parametric approach to deal with flow sampling data.

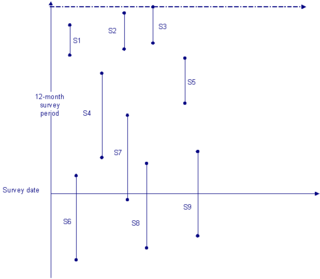

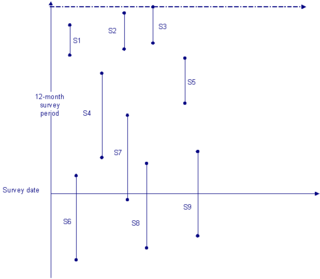

Flow sampling, in contrast to stock sampling, means we collect observations that enter the particular state of interest during a particular interval. When dealing with duration data, the data sampling method has a direct impact on subsequent analyses and inference. An example in demography would be sampling the number of people who die within a given time frame; a popular example in economics would be the number of people leaving unemployment within a given time frame. Researchers imposing similar assumptions but using different sampling methods can reach fundamentally different conclusions if the joint distribution across the flow and stock samples differs.

Grouped duration data are widespread in many applications. Unemployment durations are typically measured over weeks or months and these time intervals may be considered too large for continuous approximations to hold. In this case, we will typically have a finite set of grouping points that divide the time axis into intervals. Models allow for time-invariant and time-variant covariates, but the latter require stronger assumptions in terms of exogeneity. The discrete-time hazard function can be written as:
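As an illustration in assumed notation (the symbols are chosen for this sketch and are not necessarily the article's own): let the grouping points be $0 = a_0 < a_1 < \dots < a_M$, let $T$ be the duration, let $x$ be a vector of time-invariant covariates, and let $\lambda(s; x)$ be an underlying continuous-time hazard. One standard way to express the discrete-time hazard for interval $m$ is then

$$
h_m(x) = P\left(a_{m-1} \le T < a_m \mid T \ge a_{m-1},\, x\right) = 1 - \exp\left(-\int_{a_{m-1}}^{a_m} \lambda(s; x)\, ds\right),
$$

that is, the probability that the spell ends within the interval $[a_{m-1}, a_m)$, given that it has lasted at least until $a_{m-1}$.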

Stock sampling is sampling people in a certain state at the time of the survey. This is in contrast to flow sampling, where the relationship of interest deals with duration or survival analysis. In stock sampling, rather than focusing on transitions within a certain time interval, we only have observations at a certain point in time. This can lead to both left and right censoring. Imposing the same model on data that have been generated under the two different sampling regimes can lead researchers to fundamentally different conclusions if the joint distribution across the flow and stock samples differs sufficiently.
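A small simulation can illustrate why the two sampling regimes may lead to different conclusions. The sketch below rests on assumptions made purely for illustration: spells start uniformly over a hypothetical time line, durations are exponential with mean 10, and complete durations are observed (in practice, stock-sampled spells are usually censored, as noted above).

```python
import random

rng = random.Random(42)  # fixed seed for reproducibility
MEAN_DURATION = 10.0     # assumed mean spell length

# Hypothetical population of spells: (start time, complete duration).
spells = []
for _ in range(50_000):
    start = rng.uniform(0.0, 1000.0)
    duration = rng.expovariate(1.0 / MEAN_DURATION)
    spells.append((start, duration))

# Flow sample: every spell that begins inside the window [500, 600).
flow = [d for s, d in spells if 500.0 <= s < 600.0]

# Stock sample: every spell in progress at the survey date t = 550.
stock = [d for s, d in spells if s <= 550.0 < s + d]

flow_mean = sum(flow) / len(flow)
stock_mean = sum(stock) / len(stock)
```

Under these assumptions the flow sample reproduces the population mean duration, while the stock sample over-represents long spells, because a long spell is more likely to be in progress on any given survey date. Fitting the same duration model to both samples without accounting for this would therefore give different answers.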