Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, e.g., in the form of decisions. Understanding in this context means the transformation of visual images into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

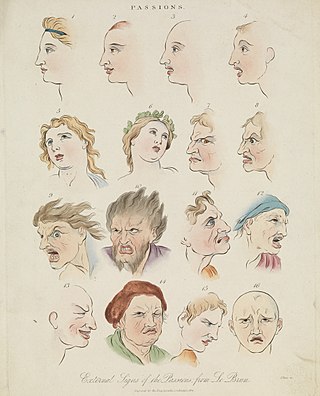

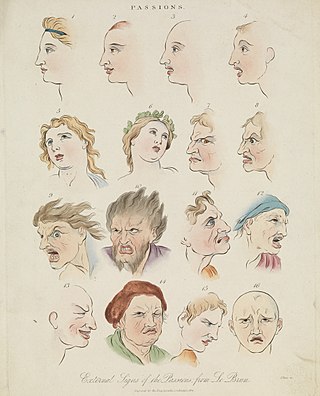

Emotions are physical and mental states brought on by neurophysiological changes, variously associated with thoughts, feelings, behavioral responses, and a degree of pleasure or displeasure. There is no scientific consensus on a definition. Emotions are often intertwined with mood, temperament, personality, disposition, or creativity.

Pattern recognition is the task of assigning a class to an observation based on patterns extracted from data. While similar, pattern recognition (PR) is not to be confused with pattern machines (PM), which may possess PR capabilities but whose primary function is to distinguish and create emergent patterns. PR has applications in statistical data analysis, signal processing, image analysis, information retrieval, bioinformatics, data compression, computer graphics, and machine learning. Pattern recognition has its origins in statistics and engineering; some modern approaches to pattern recognition include the use of machine learning, due to the increased availability of big data and a new abundance of processing power.
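As a minimal sketch of "assigning a class to an observation", the example below uses a nearest-centroid rule, which is only one of many statistical classifiers and is chosen here for brevity. The function names `fit_centroids` and `classify`, the two-dimensional feature vectors, and the labels are all illustrative assumptions rather than anything taken from the text above.

```python
from collections import defaultdict
from math import dist


def fit_centroids(samples, labels):
    """Return {label: mean feature vector} computed from labelled samples."""
    grouped = defaultdict(list)
    for x, y in zip(samples, labels):
        grouped[y].append(x)
    # Average each feature column separately within every class.
    return {
        y: tuple(sum(col) / len(col) for col in zip(*xs))
        for y, xs in grouped.items()
    }


def classify(centroids, observation):
    """Assign the class whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda y: dist(centroids[y], observation))


# Hypothetical toy data: two features per observation, two classes.
train_x = [(1.0, 1.0), (1.0, 2.0), (8.0, 8.0), (9.0, 8.0)]
train_y = ["low", "low", "high", "high"]

centroids = fit_centroids(train_x, train_y)
print(classify(centroids, (2.0, 1.5)))  # prints "low"
```

The "pattern" extracted from the data here is simply the mean of each class; a real system would typically use richer features and a model validated on held-out data.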

Affective computing is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects. It is an interdisciplinary field spanning computer science, psychology, and cognitive science. While some core ideas in the field may be traced as far back as early philosophical inquiries into emotion, the more modern branch of computer science originated with Rosalind Picard's 1995 paper on affective computing and her book Affective Computing, published by MIT Press. One of the motivations for the research is the ability to give machines emotional intelligence, including the ability to simulate empathy. The machine should interpret the emotional state of humans and adapt its behavior to them, giving an appropriate response to those emotions.

Body language is a type of communication in which physical behaviors, as opposed to words, are used to express or convey information. Such behavior includes facial expressions, body posture, gestures, eye movement, touch and the use of space. The term body language is usually applied in regard to people but may also be applied to animals. The study of body language is also known as kinesics. Although body language is an important part of communication, most of it happens without conscious awareness.

Nonverbal communication (NVC) is the transmission of messages or signals through a nonverbal platform such as eye contact (oculesics), body language (kinesics), social distance (proxemics), touch (haptics), voice (paralanguage), physical environments/appearance, and use of objects. When communicating, we use nonverbal channels to convey different messages or signals, which others can then interpret. The study of nonverbal communication started in 1872 with the publication of The Expression of the Emotions in Man and Animals by Charles Darwin. Darwin began to study nonverbal communication when he noticed the interactions between animals such as lions, tigers, and dogs and realized that they also communicated through gestures and expressions. For the first time, nonverbal communication was studied and its relevance questioned. Today, scholars argue that nonverbal communication can convey more meaning than verbal communication.

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures. A subdiscipline of computer vision, it employs mathematical algorithms to interpret gestures.

The Facial Action Coding System (FACS) is a system to taxonomize human facial movements by their appearance on the face, based on a system originally developed by a Swedish anatomist named Carl-Herman Hjortsjö. It was later adopted by Paul Ekman and Wallace V. Friesen, and published in 1978. Ekman, Friesen, and Joseph C. Hager published a significant update to FACS in 2002. FACS encodes the movements of individual facial muscles from slight, momentary changes in facial appearance. It has proven useful to psychologists and to animators.

When classification is performed by a computer, statistical methods are normally used to develop the algorithm.

Dyssemia is a difficulty with receptive and/or expressive nonverbal communication. The word comes from the Greek roots dys (difficulty) and semia (signal). The term was coined by psychologists Marshall Duke and Stephen Nowicki in their 1992 book, Helping The Child Who Doesn't Fit In, to decipher the hidden dimensions of social rejection. These difficulties go beyond problems with body language and motor skills. Dyssemic persons exhibit difficulties with the acquisition and use of nonverbal cues in interpersonal relationships. "A classic set of studies by Albert Mehrabian showed that in face-to-face interactions, 55 percent of the emotional meaning of a message is expressed through facial, postural, and gestural means, and 38 percent of the emotional meaning is transmitted through the tone of voice. Only seven percent of the emotional meaning is actually expressed with words." Dyssemia represents the social dysfunction aspect of nonverbal learning disorder.

Activity recognition aims to recognize the actions and goals of one or more agents from a series of observations on the agents' actions and the environmental conditions. Since the 1980s, this research field has captured the attention of several computer science communities due to its strength in providing personalized support for many different applications and its connection to many different fields of study such as medicine, human-computer interaction, or sociology.
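To illustrate recognition "from a series of observations", the sketch below smooths noisy per-frame activity labels with a sliding-window majority vote. This is a simple post-processing heuristic, not a full activity-recognition method; the function name `smooth_labels`, the window size, and the labels are hypothetical.

```python
from collections import Counter


def smooth_labels(frame_labels, window=3):
    """Replace each label with the most common label in a centred window."""
    half = window // 2
    smoothed = []
    for i in range(len(frame_labels)):
        # Clip the window at both ends of the sequence.
        lo = max(0, i - half)
        hi = min(len(frame_labels), i + half + 1)
        majority = Counter(frame_labels[lo:hi]).most_common(1)[0][0]
        smoothed.append(majority)
    return smoothed


# Hypothetical per-frame predictions with one spurious "sit" at index 2.
frames = ["walk", "walk", "sit", "walk", "walk", "sit", "sit", "sit"]
smoothed = smooth_labels(frames, window=3)
print(smoothed)
# prints ['walk', 'walk', 'walk', 'walk', 'walk', 'sit', 'sit', 'sit']
```

The isolated "sit" is overruled by its neighbours, while the sustained change to "sit" at the end of the sequence is preserved.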

Human–computer interaction (HCI) is research in the design and the use of computer technology, which focuses on the interfaces between people (users) and computers. HCI researchers observe the ways humans interact with computers and design technologies that allow humans to interact with computers in novel ways. A device that allows interaction between a human being and a computer is known as a "human-computer interface (HCI)".

Pain negatively affects the health and welfare of animals. "Pain" is defined by the International Association for the Study of Pain as "an unpleasant sensory and emotional experience associated with actual or potential tissue damage, or described in terms of such damage." Only the animal experiencing the pain can know the pain's quality and intensity, and the degree of suffering. It is harder, if even possible, for an observer to know whether an emotional experience has occurred, especially if the sufferer cannot communicate. Therefore, this concept is often excluded in definitions of pain in animals, such as that provided by Zimmerman: "an aversive sensory experience caused by actual or potential injury that elicits protective motor and vegetative reactions, results in learned avoidance and may modify species-specific behaviour, including social behaviour." Nonhuman animals cannot report their feelings to language-using humans in the same manner as human communication, but observation of their behaviour provides a reasonable indication as to the extent of their pain. Just as with doctors and medics who sometimes share no common language with their patients, the indicators of pain can still be understood.

Non-verbal leakage is a form of non-verbal behavior that occurs when a person verbalizes one thing but their body language indicates another. Common forms include facial movements and hand-to-face gestures. The term "non-verbal leakage" originated in the literature in 1968, leading to many subsequent studies on the topic throughout the 1970s, with related studies continuing today.

Robotic sensing is a subarea of robotics science intended to provide sensing capabilities to robots. Robotic sensing provides robots with the ability to sense their environments and is typically used as feedback to enable robots to adjust their behavior based on sensed input. Robot sensing includes the ability to see, touch, hear, and move, as well as the associated algorithms that process and make use of environmental feedback and sensory data. Robot sensing is important in applications such as vehicular automation, robotic prosthetics, and industrial, medical, entertainment, and educational robots.

Social cues are verbal or non-verbal signals, expressed through the face, body, voice, and motion, that guide conversations and other social interactions by influencing our impressions of and responses to others. These percepts are important communicative tools because they convey important social and contextual information and therefore facilitate social understanding.

Emotion recognition is the process of identifying human emotion. People vary widely in their accuracy at recognizing the emotions of others. Use of technology to help people with emotion recognition is a relatively nascent research area. Generally, the technology works best if it uses multiple modalities in context. To date, most work has been conducted on automating the recognition of facial expressions from video, spoken expressions from audio, written expressions from text, and physiology as measured by wearables.
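One common way to combine multiple modalities is late fusion, in which each modality produces its own scores and these are merged afterwards. The sketch below uses a weighted average as one possible fusion rule; the function name `fuse`, the modality names, the weights, and all scores are made-up values for illustration, not measurements.

```python
def fuse(modality_scores, weights):
    """Weighted average of per-modality emotion scores (late fusion)."""
    total = sum(weights[m] for m in modality_scores)
    fused = {}
    for modality, scores in modality_scores.items():
        for emotion, p in scores.items():
            # Normalise by the total weight so the result stays a distribution.
            fused[emotion] = fused.get(emotion, 0.0) + weights[modality] * p / total
    return fused


# Hypothetical outputs of three independent per-modality classifiers.
scores = {
    "face": {"happy": 0.6, "neutral": 0.3, "sad": 0.1},
    "voice": {"happy": 0.2, "neutral": 0.5, "sad": 0.3},
    "text": {"happy": 0.7, "neutral": 0.2, "sad": 0.1},
}
# Assumed reliabilities; in practice these would be tuned on validation data.
weights = {"face": 0.5, "voice": 0.25, "text": 0.25}

fused = fuse(scores, weights)
print(max(fused, key=fused.get))  # prints "happy"
```

Here the voice channel alone would suggest "neutral", but the combined evidence favours "happy", which shows how fusion can override a single unreliable modality.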

Artificial empathy or computational empathy is the development of AI systems—such as companion robots or virtual agents—that can detect emotions and respond to them in an empathic way.

The present weather sensor (PWS) is a component of an automatic weather station that detects the presence of hydrometeors and determines their type and intensity. It works on a principle similar to a bistatic radar, noting the passage of droplets or flakes between a transmitter and a sensor. In automatic weather stations, these instruments are used to simulate the observations taken by a human observer. They allow rapid reporting of any change in the type and intensity of precipitation, but their readings are subject to limitations in interpretation.