How autonomous convergence works

Note: this is an intuitive description of how autonomous convergence theorems guarantee stability, not a strictly mathematical description.

The key point in the example theorem given above is the existence of a negative logarithmic norm, which is derived from a vector norm. The vector norm effectively measures the distance between points in the vector space on which the differential equation is defined, and the negative logarithmic norm means that distances between points, as measured by the corresponding vector norm, decrease with time under the action of the differential equation. So long as the trajectories of all points in the phase space are bounded, all trajectories must therefore eventually converge to the same point.
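The contraction of distances can be checked numerically. The following is a minimal sketch, not taken from the theorem itself: it assumes a hypothetical linear system with matrix `A`, uses the Euclidean norm (for which the logarithmic norm is the largest eigenvalue of the symmetric part of the matrix), and integrates two trajectories with a hand-written Runge-Kutta step. The names `log_norm_2`, `rk4_step` and `distances` are illustrative only.

```python
import numpy as np

def log_norm_2(A):
    """Logarithmic norm induced by the Euclidean norm:
    the largest eigenvalue of the symmetric part (A + A^T) / 2."""
    return float(np.linalg.eigvalsh((A + A.T) / 2.0).max())

def rk4_step(f, x, h):
    """One classical fourth-order Runge-Kutta step for x' = f(x)."""
    k1 = f(x)
    k2 = f(x + 0.5 * h * k1)
    k3 = f(x + 0.5 * h * k2)
    k4 = f(x + h * k3)
    return x + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

def distances(A, x0, y0, h=0.01, steps=500):
    """Euclidean distance between two trajectories of x' = A x over time."""
    f = lambda x: A @ x
    x, y = np.array(x0, dtype=float), np.array(y0, dtype=float)
    out = [np.linalg.norm(x - y)]
    for _ in range(steps):
        x, y = rk4_step(f, x, h), rk4_step(f, y, h)
        out.append(np.linalg.norm(x - y))
    return np.array(out)

# Hypothetical example system (an assumption, chosen only for illustration).
A = np.array([[-2.0, 1.0],
              [0.0, -1.0]])
mu = log_norm_2(A)                       # negative for this matrix
d = distances(A, [1.0, 0.0], [-0.5, 2.0])
t = 0.01 * np.arange(len(d))
bound = d[0] * np.exp(mu * t)            # expected envelope d(0) * exp(mu * t)

print("logarithmic norm:", mu)
print("distance shrinks at every step:", bool(np.all(np.diff(d) < 0)))
```

In this sketch the distance between the two trajectories decreases at every step and stays below the envelope `bound`, which is the behaviour the negative logarithmic norm is meant to guarantee.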


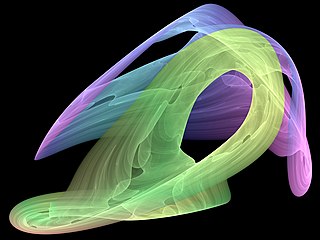

The autonomous convergence theorems by Russell Smith, Michael Li and James Muldowney work in a similar manner, but they rely on showing that the area of two-dimensional shapes in phase space decreases with time. This means that no periodic orbits can exist, as all closed loops must shrink to a point. If the system is bounded, then according to Pugh's closing lemma there can be no chaotic behaviour either, so all trajectories must eventually reach an equilibrium.
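The difference between shrinking distances and shrinking areas can be illustrated with a small numerical sketch. It is not the construction used in the theorems themselves: it assumes a hypothetical three-dimensional linear system with matrix `A` and the Euclidean norm, for which the area growth rate is bounded by the sum of the two largest eigenvalues of the symmetric part of `A`. The example is unbounded, so it only illustrates the area-contraction step, not the boundedness hypothesis.

```python
import numpy as np

def rk4_step(f, x, h):
    """One classical fourth-order Runge-Kutta step for x' = f(x)."""
    k1 = f(x)
    k2 = f(x + 0.5 * h * k1)
    k3 = f(x + 0.5 * h * k2)
    k4 = f(x + h * k3)
    return x + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

# Hypothetical example system (an assumption, chosen only for illustration):
# one expanding direction, two contracting and rotating directions.
A = np.array([[0.5, 0.0, 0.0],
              [0.0, -1.0, 3.0],
              [0.0, -3.0, -2.0]])
f = lambda x: A @ x

# Bound on the area growth rate: sum of the two largest eigenvalues
# of the symmetric part of A (eigvalsh returns them in ascending order).
eigs = np.linalg.eigvalsh((A + A.T) / 2.0)
rate = float(eigs[-1] + eigs[-2])

# Two edge vectors of a small parallelogram, carried along by the flow.
u = np.array([1.0, 0.0, 0.0])
v = np.array([0.0, 1.0, 0.0])
h, steps = 0.01, 300
areas = [np.linalg.norm(np.cross(u, v))]
lengths = [np.linalg.norm(u)]
for _ in range(steps):
    u, v = rk4_step(f, u, h), rk4_step(f, v, h)
    areas.append(np.linalg.norm(np.cross(u, v)))
    lengths.append(np.linalg.norm(u))
areas, lengths = np.array(areas), np.array(lengths)
t = h * np.arange(len(areas))

print("area rate bound:", rate)
print("area shrinks at every step:", bool(np.all(np.diff(areas) < 0)))
print("one edge length grows:", bool(lengths[-1] > lengths[0]))
```

Here the edge `u` grows in length, so distances are not contracted, yet the area of the parallelogram still shrinks at every step. This is the weaker condition that the area-based theorems exploit.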

Michael Li has also developed an extended autonomous convergence theorem that applies to dynamical systems containing an invariant manifold.[5]

In mathematics, a dynamical system is a system in which a function describes the time dependence of a point in an ambient space, such as in a parametric curve. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, the random motion of particles in the air, and the number of fish each springtime in a lake. The most general definition unifies several concepts in mathematics such as ordinary differential equations and ergodic theory by allowing different choices of the space and how time is measured. Time can be measured by integers, by real or complex numbers or can be a more general algebraic object, losing the memory of its physical origin, and the space may be a manifold or simply a set, without the need of a smooth space-time structure defined on it.

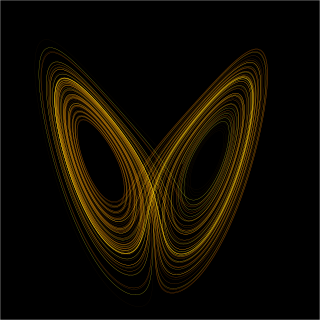

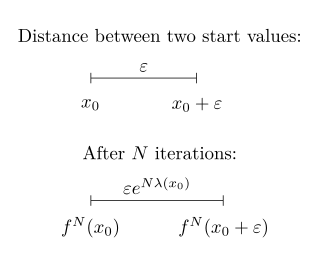

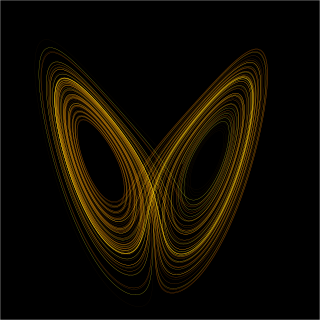

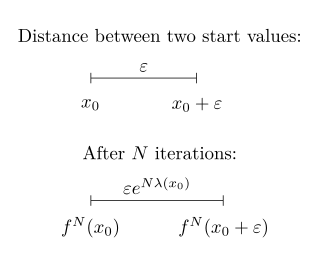

In mathematics, the Lyapunov exponent or Lyapunov characteristic exponent of a dynamical system is a quantity that characterizes the rate of separation of infinitesimally close trajectories. Quantitatively, two trajectories in phase space with initial separation vector diverge at a rate given by

In the mathematical field of dynamical systems, an attractor is a set of states toward which a system tends to evolve, for a wide variety of starting conditions of the system. System values that get close enough to the attractor values remain close even if slightly disturbed.

In mathematics, specifically in the study of dynamical systems, an orbit is a collection of points related by the evolution function of the dynamical system. It can be understood as the subset of phase space covered by the trajectory of the dynamical system under a particular set of initial conditions, as the system evolves. As a phase space trajectory is uniquely determined for any given set of phase space coordinates, it is not possible for different orbits to intersect in phase space, therefore the set of all orbits of a dynamical system is a partition of the phase space. Understanding the properties of orbits by using topological methods is one of the objectives of the modern theory of dynamical systems.

Ergodic theory is a branch of mathematics that studies statistical properties of deterministic dynamical systems; it is the study of ergodicity. In this context, "statistical properties" refers to properties which are expressed through the behavior of time averages of various functions along trajectories of dynamical systems. The notion of deterministic dynamical systems assumes that the equations determining the dynamics do not contain any random perturbations, noise, etc. Thus, the statistics with which we are concerned are properties of the dynamics.

In the theory of ordinary differential equations (ODEs), Lyapunov functions, named after Aleksandr Lyapunov, are scalar functions that may be used to prove the stability of an equilibrium of an ODE. Lyapunov functions are important to stability theory of dynamical systems and control theory. A similar concept appears in the theory of general state space Markov chains, usually under the name Foster–Lyapunov functions.

Various types of stability may be discussed for the solutions of differential equations or difference equations describing dynamical systems. The most important type is that concerning the stability of solutions near to a point of equilibrium. This may be discussed by the theory of Aleksandr Lyapunov. In simple terms, if the solutions that start out near an equilibrium point stay near forever, then is Lyapunov stable. More strongly, if is Lyapunov stable and all solutions that start out near converge to , then is said to be asymptotically stable. The notion of exponential stability guarantees a minimal rate of decay, i.e., an estimate of how quickly the solutions converge. The idea of Lyapunov stability can be extended to infinite-dimensional manifolds, where it is known as structural stability, which concerns the behavior of different but "nearby" solutions to differential equations. Input-to-state stability (ISS) applies Lyapunov notions to systems with inputs.

Bifurcation theory is the mathematical study of changes in the qualitative or topological structure of a given family of curves, such as the integral curves of a family of vector fields, and the solutions of a family of differential equations. Most commonly applied to the mathematical study of dynamical systems, a bifurcation occurs when a small smooth change made to the parameter values of a system causes a sudden 'qualitative' or topological change in its behavior. Bifurcations occur in both continuous systems and discrete systems.

In mathematics, more specifically in dynamical systems, the method of averaging exploits systems containing time-scales separation: a fast oscillationversus a slow drift. It suggests that we perform an averaging over a given amount of time in order to iron out the fast oscillations and observe the qualitative behavior from the resulting dynamics. The approximated solution holds under finite time inversely proportional to the parameter denoting the slow time scale. It turns out to be a customary problem where there exists the trade off between how good is the approximated solution balanced by how much time it holds to be close to the original solution.

In the mathematics of evolving systems, the concept of a center manifold was originally developed to determine stability of degenerate equilibria. Subsequently, the concept of center manifolds was realised to be fundamental to mathematical modelling.

LaSalle's invariance principle is a criterion for the asymptotic stability of an autonomous dynamical system.

In mathematics, stability theory addresses the stability of solutions of differential equations and of trajectories of dynamical systems under small perturbations of initial conditions. The heat equation, for example, is a stable partial differential equation because small perturbations of initial data lead to small variations in temperature at a later time as a result of the maximum principle. In partial differential equations one may measure the distances between functions using Lp norms or the sup norm, while in differential geometry one may measure the distance between spaces using the Gromov–Hausdorff distance.

In mathematics, especially in the study of dynamical systems and differential equations, the stable manifold theorem is an important result about the structure of the set of orbits approaching a given hyperbolic fixed point. It roughly states that the existence of a local diffeomorphism near a fixed point implies the existence of a local stable center manifold containing that fixed point. This manifold has dimension equal to the number of eigenvalues of the Jacobian matrix of the fixed point that are less than 1.

In numerical analysis, fixed-point iteration is a method of computing fixed points of a function.

In mathematics, the Markus–Yamabe conjecture is a conjecture on global asymptotic stability. If the Jacobian matrix of a dynamical system at a fixed point is Hurwitz, then the fixed point is asymptotically stable. Markus-Yamabe conjecture asks if a similar result holds globally. Precisely, the conjecture states that if a continuously differentiable map on an -dimensional real vector space has a fixed point, and its Jacobian matrix is everywhere Hurwitz, then the fixed point is globally stable.

Numerical continuation is a method of computing approximate solutions of a system of parameterized nonlinear equations,

In mathematics, in the study of dynamical systems, the Hartman–Grobman theorem or linearisation theorem is a theorem about the local behaviour of dynamical systems in the neighbourhood of a hyperbolic equilibrium point. It asserts that linearisation—a natural simplification of the system—is effective in predicting qualitative patterns of behaviour. The theorem owes its name to Philip Hartman and David M. Grobman.

In mathematics, the logarithmic norm is a real-valued functional on operators, and is derived from either an inner product, a vector norm, or its induced operator norm. The logarithmic norm was independently introduced by Germund Dahlquist and Sergei Lozinskiĭ in 1958, for square matrices. It has since been extended to nonlinear operators and unbounded operators as well. The logarithmic norm has a wide range of applications, in particular in matrix theory, differential equations and numerical analysis. In the finite-dimensional setting, it is also referred to as the matrix measure or the Lozinskiĭ measure.

In dynamical systems theory, the Olech theorem establishes sufficient conditions for global asymptotic stability of a two-equation system of non-linear differential equations. The result was established by Czesław Olech in 1963, based on joint work with Philip Hartman.

In mathematics, and especially differential and algebraic geometry, K-stability is an algebro-geometric stability condition, for complex manifolds and complex algebraic varieties. The notion of K-stability was first introduced by Gang Tian and reformulated more algebraically later by Simon Donaldson. The definition was inspired by a comparison to geometric invariant theory (GIT) stability. In the special case of Fano varieties, K-stability precisely characterises the existence of Kähler–Einstein metrics. More generally, on any compact complex manifold, K-stability is conjectured to be equivalent to the existence of constant scalar curvature Kähler metrics.