A citation index is a kind of bibliographic index, an index of citations between publications, allowing the user to easily establish which later documents cite which earlier documents. A form of citation index is first found in 12th-century Hebrew religious literature. Legal citation indexes are found in the 18th century and were made popular by citators such as Shepard's Citations (1873). In 1961, Eugene Garfield's Institute for Scientific Information (ISI) introduced the first citation index for papers published in academic journals, first the Science Citation Index (SCI), and later the Social Sciences Citation Index (SSCI) and the Arts and Humanities Citation Index (AHCI). The American Chemical Society converted its printed Chemical Abstracts Service into the internet-accessible SciFinder in 2008. The first automated citation indexing was done by CiteSeer in 1997 and was patented. Other sources for such data include Google Scholar, Microsoft Academic, Elsevier's Scopus, and the National Institutes of Health's iCite.

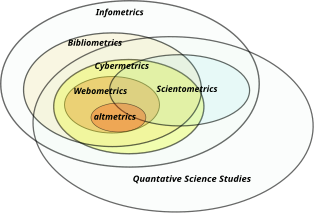

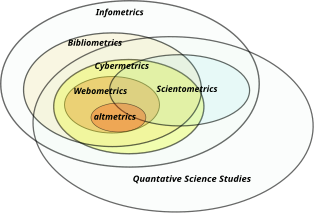

Bibliometrics is the use of statistical methods to analyse books, articles and other publications, especially those with scientific content. Bibliometric methods are frequently used in the field of library and information science. Bibliometrics is closely associated with scientometrics, the analysis of scientific metrics and indicators, to the point that the two fields largely overlap.

Scientometrics is the field of study which concerns itself with measuring and analysing scholarly literature. Scientometrics is a sub-field of informetrics. Major research issues include the measurement of the impact of research papers and academic journals, the understanding of scientific citations, and the use of such measurements in policy and management contexts. In practice there is a significant overlap between scientometrics and other scientific fields such as information systems, information science, science of science policy, sociology of science, and metascience. Critics have argued that over-reliance on scientometrics has created a system of perverse incentives, producing a publish or perish environment that leads to low-quality research.

Citation analysis is the examination of the frequency, patterns, and graphs of citations in documents. It uses the directed graph of citations — links from one document to another document — to reveal properties of the documents. A typical aim would be to identify the most important documents in a collection. A classic example is that of the citations between academic articles and books. For another example, judges of law support their judgements by referring back to judgements made in earlier cases. An additional example is provided by patents which contain prior art, citation of earlier patents relevant to the current claim. The digitization of patent data and increasing computing power have led to a community of practice that uses these citation data to measure innovation attributes, trace knowledge flows, and map innovation networks.
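One simple way to act on the citation graph described above is to count incoming citations (the in-degree of each node) and rank documents by that count. The sketch below assumes a hypothetical edge list of `(citing, cited)` pairs; the document labels and the helper names `citation_counts` and `most_cited` are illustrative only, and raw in-degree is just one of several possible importance measures.

```python
from collections import Counter

# Hypothetical citation edges: (citing document, cited document).
citations = [
    ("C", "A"),
    ("C", "B"),
    ("D", "A"),
    ("D", "C"),
    ("E", "A"),
    ("E", "C"),
]


def citation_counts(edges):
    """Count incoming citations (in-degree) for each cited document."""
    return Counter(cited for _citing, cited in edges)


def most_cited(edges, n=1):
    """Return the n documents with the most incoming citations."""
    return citation_counts(edges).most_common(n)


ranking = most_cited(citations, n=2)
print(ranking)
```

In-degree ignores who is doing the citing; graph-based measures that weight citations by the importance of the citing document would need the full graph rather than a simple count.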

Informetrics is the study of quantitative aspects of information; it is an extension and evolution of traditional bibliometrics and scientometrics. Informetrics uses bibliometric and scientometric methods mainly to study problems of literature information management and the evaluation of science and technology. It is an independent discipline that uses quantitative methods from mathematics and statistics to study the processes, phenomena, and laws of information. Informetrics has gained more attention as it has become a common scientific method for academic evaluation, for identifying research hotspots within disciplines, and for trend analysis.

ScienceDirect is a website, operated by the Dutch publisher Elsevier, that provides access to a large bibliographic database of the publisher's scientific and medical publications. It hosts over 18 million pieces of content from more than 4,000 academic journals and 30,000 e-books. Access to the full text requires a subscription, while the bibliographic metadata is free to read. ScienceDirect was launched in March 1997.

Howard D. White is a scientist in library and information science with a focus on informetrics and scientometrics.

Citation impact or citation rate is a measure of how many times an academic journal article, book, or author is cited by other articles, books, or authors. Citation counts are interpreted as measures of the impact or influence of academic work and have given rise to the field of bibliometrics or scientometrics, specializing in the study of patterns of academic impact through citation analysis. The importance of journals can be measured by the average citation rate, the ratio of the number of citations to the number of articles published within a given time period and in a given index, such as the journal impact factor or the CiteScore. Citation impact is used by academic institutions in decisions about academic tenure, promotion and hiring, and hence also by authors in deciding which journal to publish in. Citation-like measures are also used in other fields that do ranking, such as Google's PageRank algorithm, software metrics, college and university rankings, and business performance indicators.
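The average citation rate described above is a plain ratio, which a short sketch can make concrete. The function name `average_citation_rate` and the figures used are hypothetical; real indicators such as the journal impact factor or CiteScore apply their own rules about time windows and which document types count, and those rules are not modelled here.

```python
def average_citation_rate(citations_received, articles_published):
    """Citations per article for one journal, one time window, one index."""
    if articles_published <= 0:
        raise ValueError("articles_published must be positive")
    return citations_received / articles_published


# Hypothetical journal: 450 citations to 150 articles in the chosen window.
rate = average_citation_rate(citations_received=450, articles_published=150)
print(rate)
```

Both counts must come from the same index and the same time period for the ratio to be meaningful.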

The h-index is an author-level metric, initially used for an individual scientist or scholar, that measures both the productivity and the citation impact of the author's publications. The h-index correlates with success indicators such as winning the Nobel Prize, being accepted for research fellowships and holding positions at top universities. The index is based on the set of the scientist's most cited papers and the number of citations that they have received in other publications. The index has more recently been applied to the productivity and impact of a scholarly journal as well as of a group of scientists, such as a department, university, or country. The index was suggested in 2005 by Jorge E. Hirsch, a physicist at UC San Diego, as a tool for determining theoretical physicists' relative quality and is sometimes called the Hirsch index or Hirsch number.
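The paragraph above does not spell out the calculation. Under the usual definition, an author has index h when h of their papers have each been cited at least h times, and h is the largest number for which this holds. A minimal sketch, assuming the input is simply a list of per-paper citation counts (the function name `h_index` and the sample data are illustrative):

```python
def h_index(citation_counts):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have >= rank citations
        else:
            break
    return h


# Hypothetical author with five papers.
print(h_index([10, 8, 5, 4, 3]))
```

Because only the rank-ordered counts matter, one very highly cited paper raises the index no more than a paper that just clears the threshold: `h_index([25, 8, 5, 3, 3])` is 3.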

Journal ranking is widely used in academic circles in the evaluation of an academic journal's impact and quality. Journal rankings are intended to reflect the place of a journal within its field, the relative difficulty of being published in that journal, and the prestige associated with it. They have been introduced as official research evaluation tools in several countries.

An academic discipline or academic field is a subdivision of knowledge that is taught and researched at the college or university level. Disciplines are defined and recognized by the academic journals in which research is published, and the learned societies and academic departments or faculties within colleges and universities to which their practitioners belong. Academic disciplines are conventionally divided into the humanities, including language, art and cultural studies, and the scientific disciplines, such as physics, chemistry, and biology; the social sciences are sometimes considered a third category.

HistCite is a software package used for bibliometric analysis and information visualization. It was developed by Eugene Garfield, the founder of the Institute for Scientific Information and the inventor of important information retrieval tools such as Current Contents and the Science Citation Index.

D-Sight is a company that specializes in decision support software and associated services in the domains of project prioritization, supplier selection and collaborative decision-making. It was founded in 2010 as a spin-off from the Université Libre de Bruxelles (ULB). Its headquarters are located in Brussels, Belgium.

The Science and Technology Information Center (STIC) is an Ethiopian organisation which provides information to support scientific and technological (S&T) activities in the country. STIC has published information on the financing of research and development and on the nature and progress of innovative projects, and in 2014 was planning to introduce bibliometric monitoring of publications in S&T. The center has also provided information and communications technology facilities including a digital library, a patent information system, an automated personnel management system, and an S&T-related database.

The University Ranking by Academic Performance (URAP) is a university ranking developed by the Informatics Institute of Middle East Technical University. Since 2010, it has been publishing annual national and global college and university rankings for the top 2000 institutions. The scientometric measurement of URAP is based on data obtained from the Institute for Scientific Information via Web of Science and InCites. For global rankings, URAP employs indicators of research performance including the number of articles, citations, total documents, article impact total, citation impact total, and international collaboration. In addition to global rankings, URAP publishes regional rankings for universities in Turkey using additional indicators such as the number of students and faculty members, obtained from the Center of Measuring, Selection and Placement (ÖSYM).

Microsoft Academic was a free internet-based academic search engine for academic publications and literature, developed by Microsoft Research and shut down in 2022. At the same time, OpenAlex was launched and claimed to be a successor to Microsoft Academic.

There are a number of approaches to ranking academic publishing groups and publishers. Rankings rely on subjective impressions by the scholarly community, on analyses of prize winners of scientific associations, and on factors such as discipline, a publisher's reputation, and its impact factor.

Serbian Citation Index is a combination of an online multidisciplinary bibliographic database, a national citation index, an Open Access full-text journal repository and an electronic publishing platform. It is produced and maintained by the Centre for Evaluation in Education and Science (CEON/CEES), based in Belgrade, Serbia. In July 2017, it indexed 230 Serbian scholarly journals in all areas of science and contained more than 80,000 bibliographic records and more than one million bibliographic references.

Ronald Rousseau is a Belgian mathematician and information scientist. He has obtained an international reputation for his research on indicators and citation analysis in the fields of bibliometrics and scientometrics.

The Leiden Manifesto for research metrics (LM) is a list of "ten principles to guide research evaluation", published as a comment in Volume 520, Issue 7548 of Nature, on 22 April 2015. It was formulated by public policy professor Diana Hicks, scientometrics professor Paul Wouters, and their colleagues at the 19th International Conference on Science and Technology Indicators, held on 3–5 September 2014 in Leiden, the Netherlands.